Multiprocessor systems: a high-level overview

Peddinti Sriram Bharadwaj


Prerequisites

You should have basic knowledge of processes in single-processor systems. For that, you can read my previous article.

Not all configurations mentioned in this article exist in consumer products. Some are so expensive and powerful that they exist only in specialized applications and servers/supercomputers.

You should know that every significant CPU/GPU manufacturer offers various processor lineups and families.

It would be best if you had a basic idea of multithreading.

The basic premise of caching.

What are multiprocessor systems?

As the name suggests, multiprocessor systems have more than one general-purpose processor. Not every system with multiple processors can be deemed a multiprocessor system. For example, a configuration can have a single general-purpose processor plus various special-purpose microprocessors that are built into the lower levels of hardware and function autonomously. Such systems are still single-processor systems.

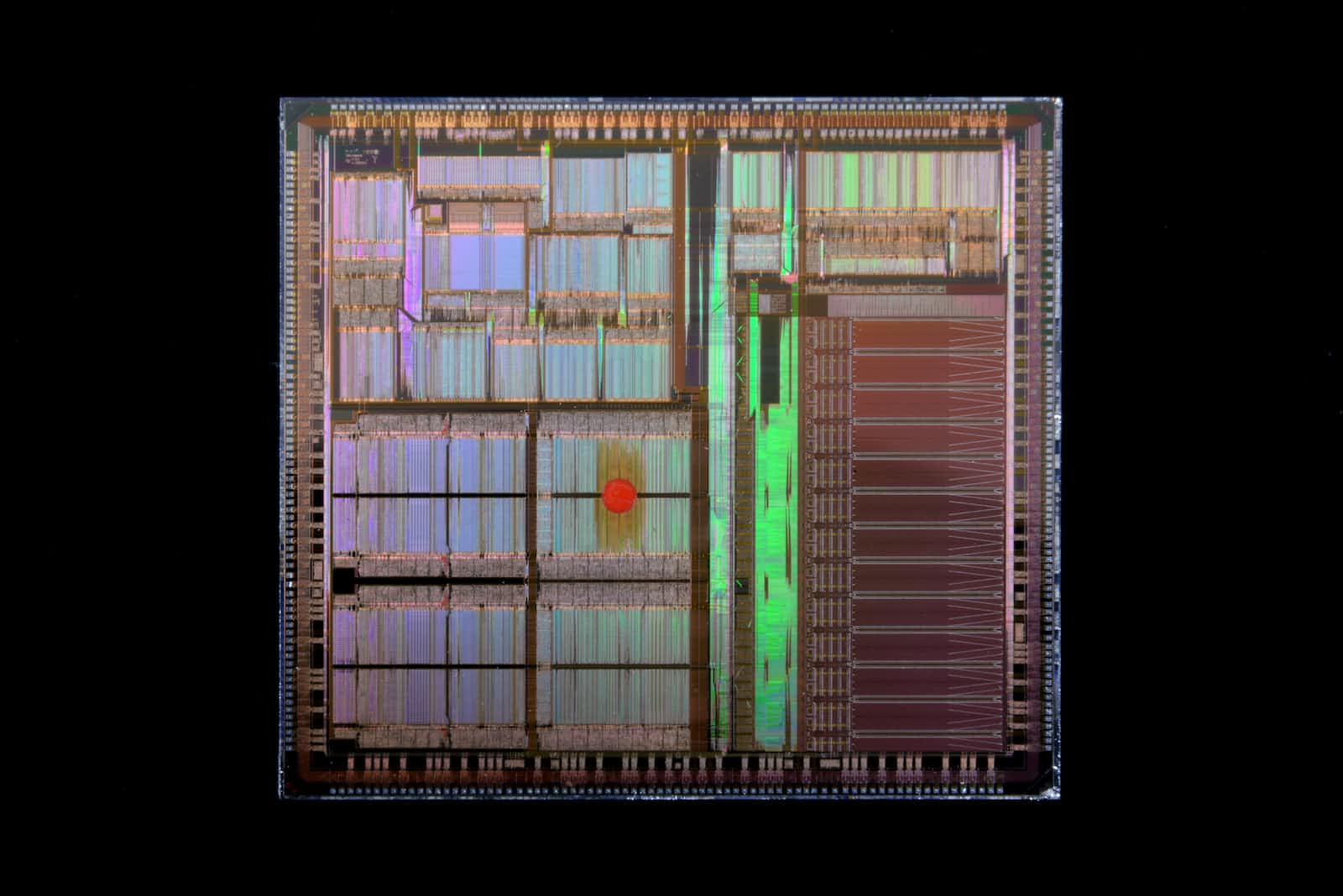

Multiprocessor systems require hardware resources to be replicated for each processor, although the processors may or may not share some hardware resources. In applications that need high computational capability but no interprocess communication, each processor has a dedicated set of resources, which is expensive.


Merits

High availability and reliability.

High computational power.

Capacity and support for multiprogramming.

Challenges

High power consumption.

Expensive hardware replication.

A complex operating system is needed to manage all processors effectively.

Takes up a lot of space, not ideal for personal or home use.

Inter-processor communication takes considerable time, making it harder to synchronize the processors.

A viable alternative: multicore systems

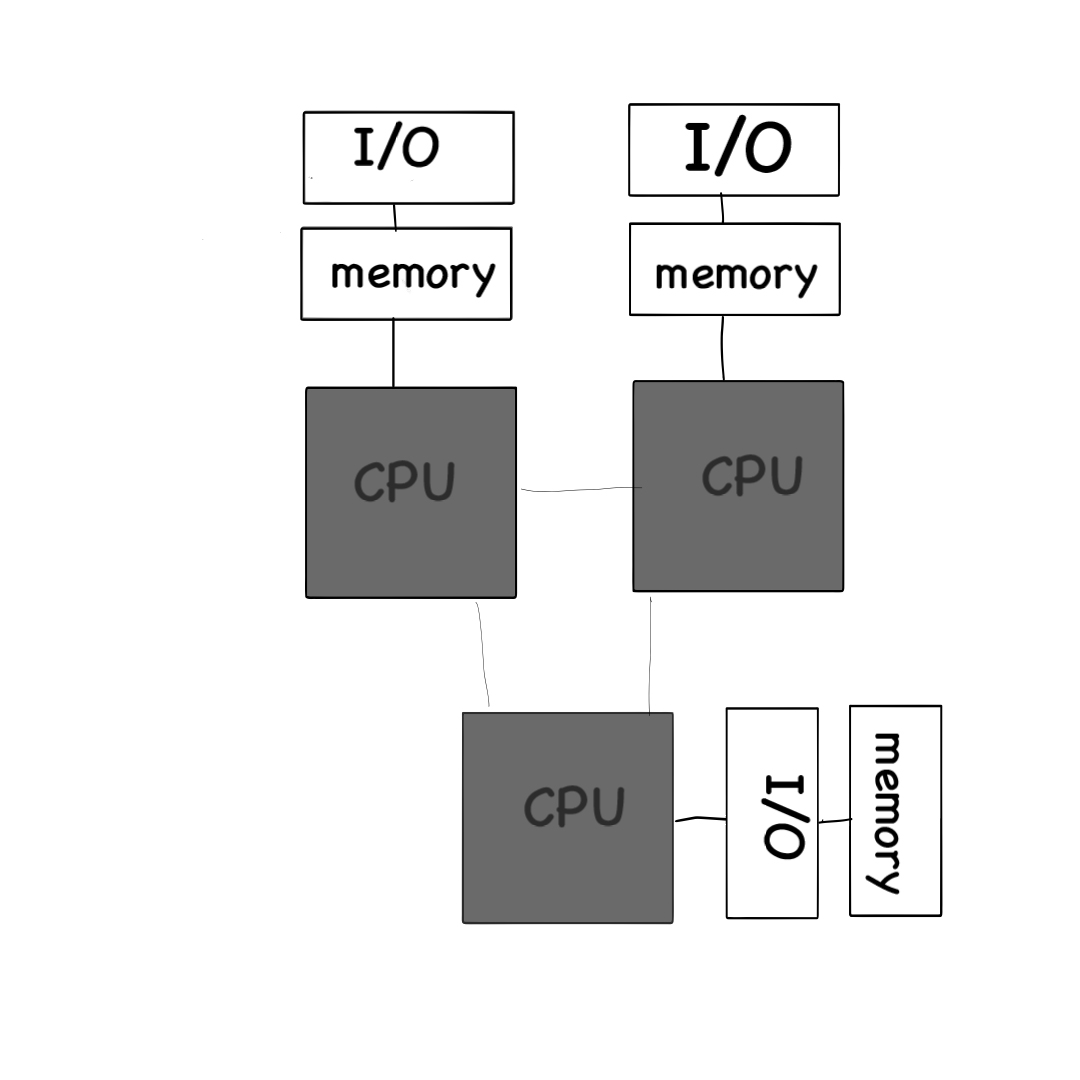

With the advent of IBM's POWER4 (a dual-core processor), there seemed to be a way to get the performance of multiple processors without much hardware replication. The idea is to place multiple processors on a single physical chip. These on-chip processors are called cores, and the OS views each of them as a logically separate processing unit. From then on, more and more consumer chip manufacturers began developing multicore chips, and today nearly all personal computers and server-level hardware use multicore processors.

The merits were so promising that hardly any mainstream consumer product uses a single-core processor now. Each core typically has its own private cache, and the cores can communicate with each other directly on the chip. The chip as a whole shares off-chip resources such as main memory and I/O devices. One of the last single-core desktop processors was Intel's Celeron G470.


Merits

Faster on-chip communication.

Less expensive than multiple processor systems.

A general-purpose OS can run on the system.

Higher power efficiency because of resource sharing.

Multithreading can be easily implemented.

Challenges

Access to shared resources must be synchronized.

It cannot speed up serial (non-parallelizable) code as much as one might expect.

Debugging and testing can be more complex.

Cache coherency and compatibility with legacy software are challenges.
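The point about serial code is usually quantified with Amdahl's law: if a fraction p of a program can run in parallel on n cores, the best possible speedup is 1 / ((1 - p) + p / n). Here is a small Python sketch of that formula; the 90% parallel fraction is just an illustrative assumption.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Upper bound on speedup according to Amdahl's law.

    parallel_fraction: share of the work that can run in parallel (0..1).
    cores: number of cores the parallel part is spread across.
    """
    serial = 1 - parallel_fraction
    return 1 / (serial + parallel_fraction / cores)

# Assume 90% of a program parallelizes perfectly (illustrative number).
for n in (1, 2, 4, 8, 16):
    print(f"{n:2d} cores -> {amdahl_speedup(0.9, n):.2f}x")
```

Even with unlimited cores, the speedup in this example can never exceed 1 / 0.1 = 10x, because the serial 10% always runs on a single core.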

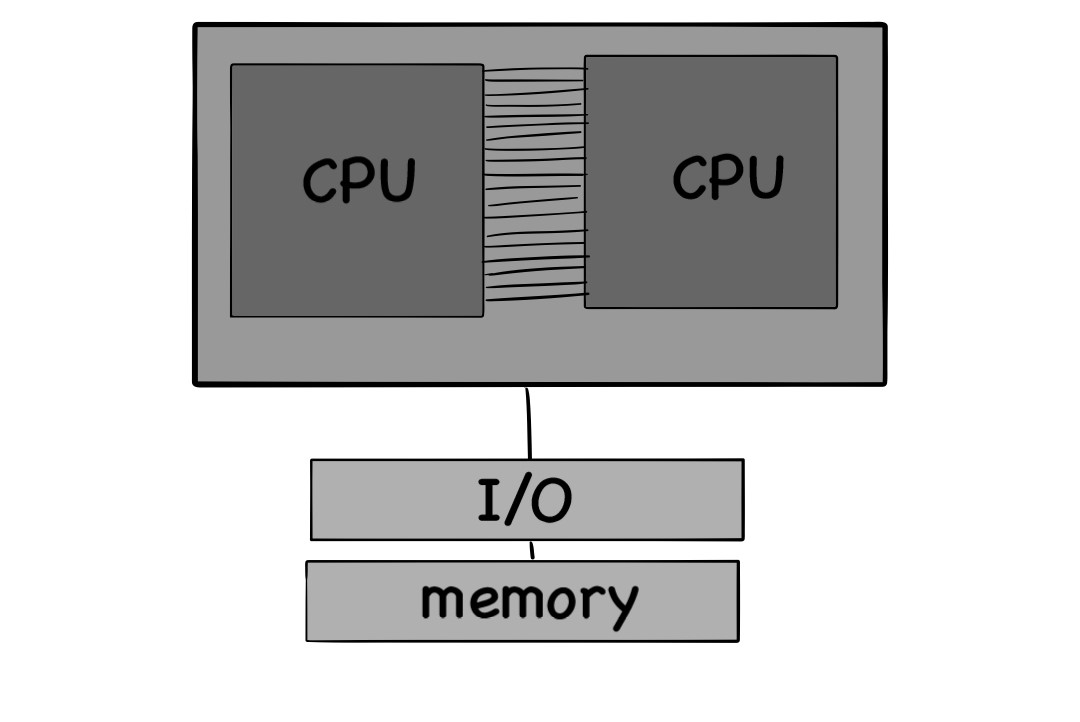

A more powerful adaptation: clustered systems

Clustered systems are networks of many "similar" standalone systems connected over a LAN. They provide much higher availability and redundancy than multicore systems and are used for industrial and scientific purposes. Most of today's supercomputers are clustered systems. Server clusters and supercomputers typically contain thousands of cores or more, reaching petaFLOPS and even exaFLOPS performance levels.

The operating systems running on these systems must handle load balancing, coherency, and thread synchronization. The nodes of a cluster may or may not share a common memory pool. These days, even Storage Area Networks (SANs) are built on this technology.

Image source: https://www.racksolutions.com/news//app/uploads/AdobeStock_90603827-1-scaled.jpeg


Merits

Improved performance.

Load balancing.

Simple management of resources with a central terminal.

Reduced downtime in real-time applications.

Resource sharing.

High scalability and modularity in rack form architecture.

Challenges

Complex architecture to build and maintain.

Only specialized software can take full advantage of this.

Expensive hardware and maintenance.

Coherency and consistency are hard to maintain across thousands of processors.

Offerings of multiprocessor systems

High availability

High availability (HA) refers to a system or infrastructure's ability to remain operational and accessible for an extended period, typically measured in terms of uptime, without significant disruptions or downtime. The main goal of implementing high availability is to ensure that critical services or applications remain accessible and functioning despite hardware failures, software issues, or other unforeseen problems.
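Availability is often quoted in "nines". As a rough sketch, assuming a 365-day year, this is how an availability figure translates into the maximum downtime it allows per year:

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumes a 365-day year (ignores leap years)

def allowed_downtime_minutes(availability):
    """Maximum downtime per year for a given availability, e.g. 0.999."""
    return (1 - availability) * MINUTES_PER_YEAR

for a in (0.99, 0.999, 0.9999, 0.99999):
    print(f"{a:.3%} uptime -> {allowed_downtime_minutes(a):.1f} min/year")
```

Each extra nine cuts the allowed downtime by a factor of ten, which is why high-availability targets quickly become expensive to meet.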

Redundancy

Redundancy in multiprocessor and multicore systems refers to using duplicate hardware or resources to provide backup or failover capabilities. The primary goal of redundancy is to enhance the reliability and fault tolerance of the system, ensuring that critical tasks and services can continue to operate even in the presence of hardware failures or errors.
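A common back-of-the-envelope way to see why redundancy helps: if n duplicate components fail independently (a simplifying assumption that real systems only approximate), the system is down only when all n are down at the same time.

```python
def parallel_availability(availability, copies):
    """Availability of redundant copies where the system is up if any copy is up.

    Assumes the copies fail independently of each other.
    """
    return 1 - (1 - availability) ** copies

for n in (1, 2, 3):
    print(n, f"{parallel_availability(0.99, n):.6f}")
```

With 99% available components, each extra copy adds roughly two nines in this idealized model.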

Fault Tolerance

These systems can continue operating even after a component failure. They can detect, diagnose, and correct failures. Typically, they have duplicated hardware and software, with all instances working in lockstep. A task is performed on all the instances, and if one instance's result differs from the others, that instance is identified as faulty and stops servicing upcoming tasks.

In complex scientific applications such as simulations, the results from each instance are collected, and their average is taken as the final result.

Whenever one or more instances go down, the operation continues on the healthy ones.
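The detect-and-retire behavior described above resembles majority voting, as used in triple modular redundancy. The following Python sketch is a toy model: instances are plain functions, and the names `vote`, `run_redundant`, and the deliberately faulty instance "C" are hypothetical.

```python
from collections import Counter

def vote(results):
    """Majority vote over results from redundant instances.

    results: dict mapping instance id -> result value.
    Returns (agreed_value, faulty_ids). Raises if there is no strict majority.
    """
    value, count = Counter(results.values()).most_common(1)[0]
    if count <= len(results) // 2:
        raise RuntimeError("no majority: cannot tell which instance is faulty")
    faulty = [iid for iid, r in results.items() if r != value]
    return value, faulty

def run_redundant(task, instances, healthy):
    """Run task on every healthy instance, vote, and retire any that disagree."""
    results = {iid: instances[iid](task) for iid in healthy}
    value, faulty = vote(results)
    for iid in faulty:
        healthy.discard(iid)  # faulty instance stops servicing future tasks
    return value

instances = {
    "A": lambda x: x * x,
    "B": lambda x: x * x,
    "C": lambda x: x * x + 1,  # hypothetical faulty instance
}
healthy = set(instances)
print(run_redundant(7, instances, healthy))  # 49
print(sorted(healthy))                       # ['A', 'B']
```

After "C" is retired, later tasks run only on the healthy instances, matching the idea that operation continues on the surviving ones.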

Graceful Degradation

It is the ability to continue providing service proportional to the level of surviving hardware. Performance drops progressively as more and more components fail, until the system reaches a point where complete replacement or hardware servicing is needed.
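A toy model of graceful degradation: capacity scales with the number of surviving cores, and the system flags itself for servicing once too few cores remain. The per-core rate and the 50% threshold are hypothetical parameters, not values from any real system.

```python
def degraded_capacity(total_cores, failed_cores, per_core_rate, service_threshold=0.5):
    """Capacity left after failures, plus whether servicing is needed.

    Hypothetical model: capacity is linear in surviving cores, and servicing
    is requested once fewer than service_threshold of the cores survive.
    """
    surviving = max(total_cores - failed_cores, 0)
    capacity = surviving * per_core_rate
    needs_service = surviving < service_threshold * total_cores
    return capacity, needs_service

for failed in range(0, 9, 2):
    print(failed, degraded_capacity(8, failed, per_core_rate=100))
```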

Parallelism and multithreading

Unlike a single-processor system, which switches rapidly between processes (concurrency), a multiprocessor/multicore system can achieve parallelism, keeping multiple programs executing simultaneously. Multithreaded applications can make good use of this hardware.

Some popular PC multicore processors

Intel

Earlier i3 processors came with two cores, and starting from the 8th generation (Coffee Lake), they come in quad-core variants. i5 processors are available in dual-core, quad-core, and hexa-core configurations. i7 processors come in quad-, hexa-, and octa-core designs.

AMD Ryzen

The Ryzen 3 series comes in quad- and hexa-core configurations. The Ryzen 5 series comes in quad-, hexa-, and octa-core designs. The Ryzen 7 series predominantly comes in octa-core designs. The Ryzen 9 series comes in octa-, dodeca-, and hexadeca-core configurations.

Apple Silicon

The base M1 came with an eight-core CPU, the M1 Max with ten cores, and the M2 lineup with 8 to 12 cores.

Image source: https://miro.medium.com/v2/resize:fit:1400/1*X9irQskeFWfSQ-Gls97WmQ.png


There are, however, many other chip manufacturers, like Qualcomm (with its Snapdragon line) and Nvidia; you can check their official websites for more information on their core counts.

Process Scheduling

In multiprocessor systems, there are two approaches to scheduling:

1) asymmetric multiprocessor systems

In asymmetric multiprocessor systems, one processor handles all the scheduling and resource management, while the other processors execute user code. Scheduling is therefore simple to implement, since only one processor makes scheduling decisions. There is no need to synchronize scheduling and resource allocation; the master processor takes care of it all.

2) symmetric multiprocessor systems

In symmetric multiprocessor systems, each processor schedules itself, and there are two approaches: each processor has its own private ready queue, or all processors share a common ready queue. With a common queue, access must be synchronized so that two processors never pick the same process. Per-processor queues avoid that contention, but the load across the queues then has to be balanced.
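Here is a sketch of the common-ready-queue approach in Python, with threads standing in for processors. This is a simplification: CPython threads do not actually run Python code in parallel. `queue.Queue` does its locking internally, so each task is dequeued by exactly one "processor"; `run_smp_shared_queue` is a hypothetical helper name.

```python
import queue
import threading

def run_smp_shared_queue(tasks, num_cpus=4):
    """Simulate SMP scheduling from one common ready queue.

    Threads stand in for processors; returns a list of (cpu_id, task) pairs.
    """
    ready = queue.Queue()  # common ready queue, synchronized internally
    for task in tasks:
        ready.put(task)

    completed = []
    completed_lock = threading.Lock()  # protects the shared results list

    def processor(cpu_id):
        while True:
            try:
                task = ready.get_nowait()  # atomic: no two CPUs get the same task
            except queue.Empty:
                return  # ready queue drained: this CPU goes idle
            with completed_lock:
                completed.append((cpu_id, task))

    cpus = [threading.Thread(target=processor, args=(i,)) for i in range(num_cpus)]
    for cpu in cpus:
        cpu.start()
    for cpu in cpus:
        cpu.join()
    return completed

records = run_smp_shared_queue(range(20))
print(len(records))  # 20: every task ran exactly once
```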

Memory access in multiprocessor systems

1) Uniform Memory Access (UMA)

A single memory controller is shared by all the processors to access memory, so each processor has the same access time. This architecture is easy to implement, but the shared memory controller becomes a bottleneck. Also, the bandwidth of the data and address buses is shared among all the processors, leaving each processor with low bandwidth.

2) Non-Uniform Memory Access (NUMA)

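Each processor has its own memory controller and locally attached memory. Accessing local memory is fast, while accessing memory attached to another processor goes over an interconnect and takes longer, hence "non-uniform". Because each controller serves its own memory, processors do not all compete for one bus, so aggregate bandwidth is much higher than with uniform memory access. It is usually harder to implement.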
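The cost of non-uniform access can be estimated as a weighted average of local and remote latency. The 80 ns and 140 ns figures below are hypothetical placeholders, not measurements; real values vary by system.

```python
def effective_latency_ns(local_fraction, local_ns, remote_ns):
    """Average memory access latency on a NUMA system.

    local_fraction: share of accesses that hit memory attached to the
    processor's own node; the rest go to another node's memory.
    """
    return local_fraction * local_ns + (1 - local_fraction) * remote_ns

# Hypothetical latencies, for illustration only.
LOCAL_NS, REMOTE_NS = 80, 140
for frac in (1.0, 0.9, 0.5):
    print(f"{frac:.0%} local -> {effective_latency_ns(frac, LOCAL_NS, REMOTE_NS):.1f} ns")
```

The more accesses stay local, the closer the average gets to the local latency, which is why NUMA-aware operating systems try to keep a process's memory on the node where it runs.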

Processor affinity

When a process runs on a CPU, its working data gets cached. So when a process is repeatedly scheduled on the same processor, that processor's cache fills with the process's data, and the process runs faster over time because of fewer cache misses. Now say the process is moved to a different processor. Two things can happen: either the new processor's cache is repopulated from the old processor's cache, or the new processor starts populating its cache from scratch. Both are expensive and time-consuming.

So, it makes sense to keep a process running on the same processor, and operating systems try to do this. Some operating systems provide soft affinity: they attempt to keep a process on the same processor but do not guarantee it.

On the other hand, some operating systems let a process specify a subset of processors on which it may run, and then guarantee that it runs only there; this is called hard affinity. Modern operating systems often implement both kinds of affinity.
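On Linux, hard affinity is exposed to Python through `os.sched_getaffinity` and `os.sched_setaffinity`. These functions are not available on every platform (for example, macOS and Windows lack them). Below is a minimal sketch with a hypothetical helper, `run_pinned`, that pins the calling process to one CPU, runs some work, and then restores the previous CPU set.

```python
import os

def run_pinned(work, cpu=None):
    """Run work() with hard affinity to a single CPU, then restore the old mask.

    Returns (result, cpus_used); cpus_used is None if the platform lacks the
    affinity API. If given, cpu must be one of the currently allowed CPUs.
    """
    if not hasattr(os, "sched_setaffinity"):
        return work(), None  # no affinity API here: just run normally
    original = os.sched_getaffinity(0)  # 0 means the calling process
    target = min(original) if cpu is None else cpu
    os.sched_setaffinity(0, {target})  # hard affinity: only this CPU allowed
    try:
        pinned = os.sched_getaffinity(0)
        return work(), pinned
    finally:
        os.sched_setaffinity(0, original)  # restore the previous CPU set

result, cpus = run_pinned(lambda: sum(range(10)))
print(result, cpus)
```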

Load balancing

Since we have multiple processors or cores, we want to utilize our hardware fully. To do so, we ensure that no single processor carries much more load than the others. Also, if only one processor is being used, multithreaded programs gain nothing from the extra hardware.

There are two general approaches to load balancing: 1) pull migration and 2) push migration.

With push migration, a specific task periodically checks the load on each processor and, if it finds an imbalance, evenly distributes the load by moving processes from overloaded processors to idle or less-busy ones. Pull migration occurs when an idle processor pulls a waiting task from a busy processor.

The two approaches are not mutually exclusive and are often implemented together.

Load balancing can counteract the benefits of processor affinity, since processes can be moved from one CPU to another and lose their cached data.
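Push and pull migration can be sketched with a toy model in which each processor's run queue is a Python list and load is simply the number of queued tasks. This is a simplification; real schedulers use more detailed load metrics. `push_migrate` and `pull_migrate` are hypothetical names.

```python
def push_migrate(queues):
    """Periodic balancer: move tasks from the busiest queue to the least busy
    one until queue lengths differ by at most one. Returns tasks moved."""
    moved = 0
    while True:
        busiest = max(queues, key=len)
        idlest = min(queues, key=len)
        if len(busiest) - len(idlest) <= 1:
            return moved
        idlest.append(busiest.pop())
        moved += 1

def pull_migrate(queues, idle_cpu):
    """An idle CPU pulls one waiting task from the busiest queue.

    Returns the pulled task, or None if the CPU is not idle or nothing is
    worth pulling (the busiest queue has at most one task)."""
    if queues[idle_cpu]:
        return None  # this CPU still has work of its own
    busiest = max(queues, key=len)
    if len(busiest) <= 1:
        return None
    task = busiest.pop()
    queues[idle_cpu].append(task)
    return task

run_queues = [[1, 2, 3, 4, 5, 6], [7], [], []]
print(push_migrate(run_queues), [len(q) for q in run_queues])
```

In this sketch, `push_migrate` plays the role of the periodic balancing task, while `pull_migrate` would be called by a processor the moment its own queue runs empty.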

What next?

Now that we know how a multiprocessor system functions, in the following article we shall see how multithreading and parallel programming can take advantage of these architectures.

Subscribe to my blog to get notified about every new article!
