Naive Hyperparameter Exploration using MNIST

Ayokunle Adeniyi


For deep learning enthusiasts, working with the MNIST dataset is the equivalent of writing a "hello world" program when learning a new scripting language such as Python or JavaScript. In this project, I experiment with the vast, high-dimensional hyperparameter space using the MNIST dataset.

About the MNIST dataset

The Modified National Institute of Standards and Technology (MNIST) dataset comprises 70,000 images of handwritten digits (0 to 9), split into 60,000 training images and 10,000 test images (Deng, 2012). All images are greyscale and measure 28 pixels high by 28 pixels wide. The "M" in MNIST indicates that the dataset was created from the original NIST data, so it can be considered a subset of the NIST dataset. It remains a good database for machine learning enthusiasts who want to learn different classification and learning models without having to deal with the complexities of data cleaning and preprocessing in general.
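For reference, the original MNIST files are distributed in the simple IDX binary format: a big-endian header (a magic number, the item count and, for images, the row and column counts) followed by one unsigned byte per pixel or label. The sketch below is not taken from the project's notebook; it is a minimal, standard-library-only reader, and the function names (`load_idx`, `parse_idx_images`, `parse_idx_labels`) are hypothetical. It also scales pixel intensities from 0..255 down to 0.0..1.0, a common first step before training.

```python
import gzip
import struct
from typing import List, Tuple

# Magic numbers defined by the IDX format for the MNIST files:
# 0x00000803 (2051) = unsigned bytes, 3 dimensions (images, rows, cols)
# 0x00000801 (2049) = unsigned bytes, 1 dimension (labels)
IMAGE_MAGIC = 2051
LABEL_MAGIC = 2049


def load_idx(path: str) -> bytes:
    """Read a raw IDX file, decompressing it first if the name ends in .gz."""
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rb") as f:
        return f.read()


def parse_idx_images(raw: bytes) -> Tuple[int, int, List[List[float]]]:
    """Return (rows, cols, images); each image is a flat list of rows*cols
    greyscale values scaled from 0..255 to 0.0..1.0."""
    magic, count, rows, cols = struct.unpack(">IIII", raw[:16])  # big-endian header
    if magic != IMAGE_MAGIC:
        raise ValueError(f"not an IDX image file (magic number {magic})")
    size = rows * cols
    body = raw[16:]
    if len(body) != count * size:
        raise ValueError("image data does not match the header")
    # Iterating over a bytes slice yields ints in the range 0..255.
    images = [
        [pixel / 255.0 for pixel in body[i * size:(i + 1) * size]]
        for i in range(count)
    ]
    return rows, cols, images


def parse_idx_labels(raw: bytes) -> List[int]:
    """Return the digit labels (0 to 9) stored in an IDX label file."""
    magic, count = struct.unpack(">II", raw[:8])
    if magic != LABEL_MAGIC:
        raise ValueError(f"not an IDX label file (magic number {magic})")
    labels = list(raw[8:])
    if len(labels) != count:
        raise ValueError("label data does not match the header")
    return labels
```

Assuming the training images have been downloaded locally as `train-images-idx3-ubyte.gz`, `parse_idx_images(load_idx("train-images-idx3-ubyte.gz"))` should return 28, 28 and 60,000 flattened images of 784 values each. Plain Python lists are slow at that scale; NumPy or a framework's built-in loader is the usual choice in practice.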

Experiment

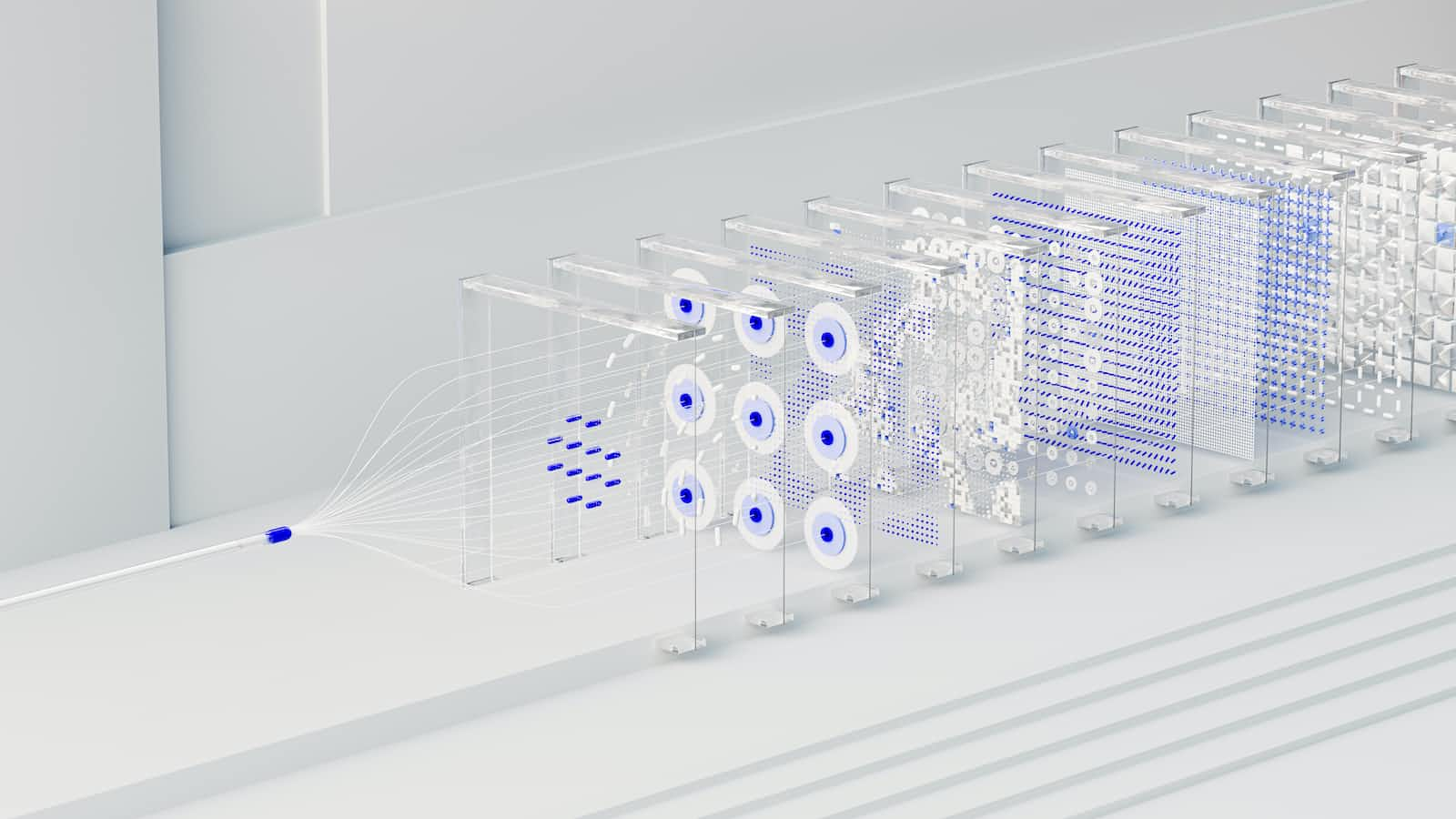

This project explores how systematic, incremental changes to the hyperparameters affect the performance of the models built during the experiment, with the goal of finding the best-performing configuration. This process, commonly referred to as hyperparameter optimisation, focuses on fine-tuning models so that they generalise better to unseen data, which is usually the essence of modelling.
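One simple way to make such a search systematic is an exhaustive grid search: choose a few candidate values per hyperparameter, train one model per combination, and keep the configuration with the best score on held-out validation data (leaving the test set for the final estimate). The sketch below illustrates the idea under stated assumptions and is not the author's notebook code: `toy_validation_accuracy` is a hypothetical stand-in for training a network and measuring its validation accuracy, and the search-space values are made up for the example.

```python
import itertools
import math
from typing import Callable, Dict, Iterator, List, Optional, Tuple

Config = Dict[str, object]


def iter_grid(space: Dict[str, List[object]]) -> Iterator[Config]:
    """Yield every combination of the candidate values in `space`."""
    keys = list(space)
    for values in itertools.product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


def grid_search(
    space: Dict[str, List[object]],
    evaluate: Callable[[Config], float],
) -> Tuple[Optional[Config], float, List[Tuple[Config, float]]]:
    """Score every configuration with `evaluate` (higher is better) and
    return the best configuration, its score and the full trial history."""
    history: List[Tuple[Config, float]] = []
    best_cfg: Optional[Config] = None
    best_score = float("-inf")
    for cfg in iter_grid(space):
        score = evaluate(cfg)  # should be a validation score, not training accuracy
        history.append((cfg, score))
        if score > best_score:  # on ties, the first configuration seen wins
            best_cfg, best_score = cfg, score
    return best_cfg, best_score, history


def toy_validation_accuracy(cfg: Config) -> float:
    """Hypothetical stand-in for 'train on the training split, return
    accuracy on the validation split'. It peaks at learning_rate=0.01 and
    batch_size=64 so the example runs instantly."""
    lr_penalty = abs(math.log10(cfg["learning_rate"]) + 2)  # distance from 1e-2 in decades
    bs_penalty = abs(cfg["batch_size"] - 64) / 64
    return 0.99 - 0.05 * lr_penalty - 0.02 * bs_penalty


if __name__ == "__main__":
    search_space = {
        "learning_rate": [0.1, 0.01, 0.001],
        "batch_size": [32, 64, 128],
    }
    best, score, trials = grid_search(search_space, toy_validation_accuracy)
    print(f"evaluated {len(trials)} configurations")
    print(f"best: {best} (validation accuracy {score:.3f})")
```

The number of runs grows multiplicatively with each hyperparameter added (three values for each of five hyperparameters already means 243 training runs), which is why random search or Bayesian optimisation is often preferred once the space becomes large.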

Google Colab Code

References

Deng, L. (2012). The MNIST Database of Handwritten Digit Images for Machine Learning Research [Best of the Web]. IEEE Signal Processing Magazine, 29(6), 141–142. https://doi.org/10.1109/MSP.2012.2211477


Written by

Ayokunle Adeniyi

A data professional with a Master's degree in Big Data and 4 years of experience managing and optimising large data infrastructure in the financial services and gaming industries. SQL is my playground. I enjoy working with different databases, tools and technologies in data engineering, with a keen interest in SRE, cloud and AI.