Llama 2 AI - The BEST from META AI is FREE!!!

Sumi Sangar

The best open-source large language model available right now comes from Meta AI (Facebook).

Yes, Llama 2 has officially launched. No leak, no torrent: you can go ahead and download the model directly. The approach Meta AI has taken here is quite amazing, and in this blog we're going to break it all down, from the technical details of the model to how you access it. We're also going to go through the live playground and look at some examples to understand how Llama 2 performs. To begin with, Meta AI has introduced Llama 2, and they're calling it the next generation of their open-source large language model.

The biggest highlight is that Llama 2 is available completely free for both research and commercial use. That is a stark contrast to the rest of the industry, but if you listened to Mark Zuckerberg's recent appearance on Lex Fridman's podcast, you know he has fully committed to the open-source approach. This is great news for open-source enthusiasts and researchers, especially with OpenAI turning into the "closed AI".

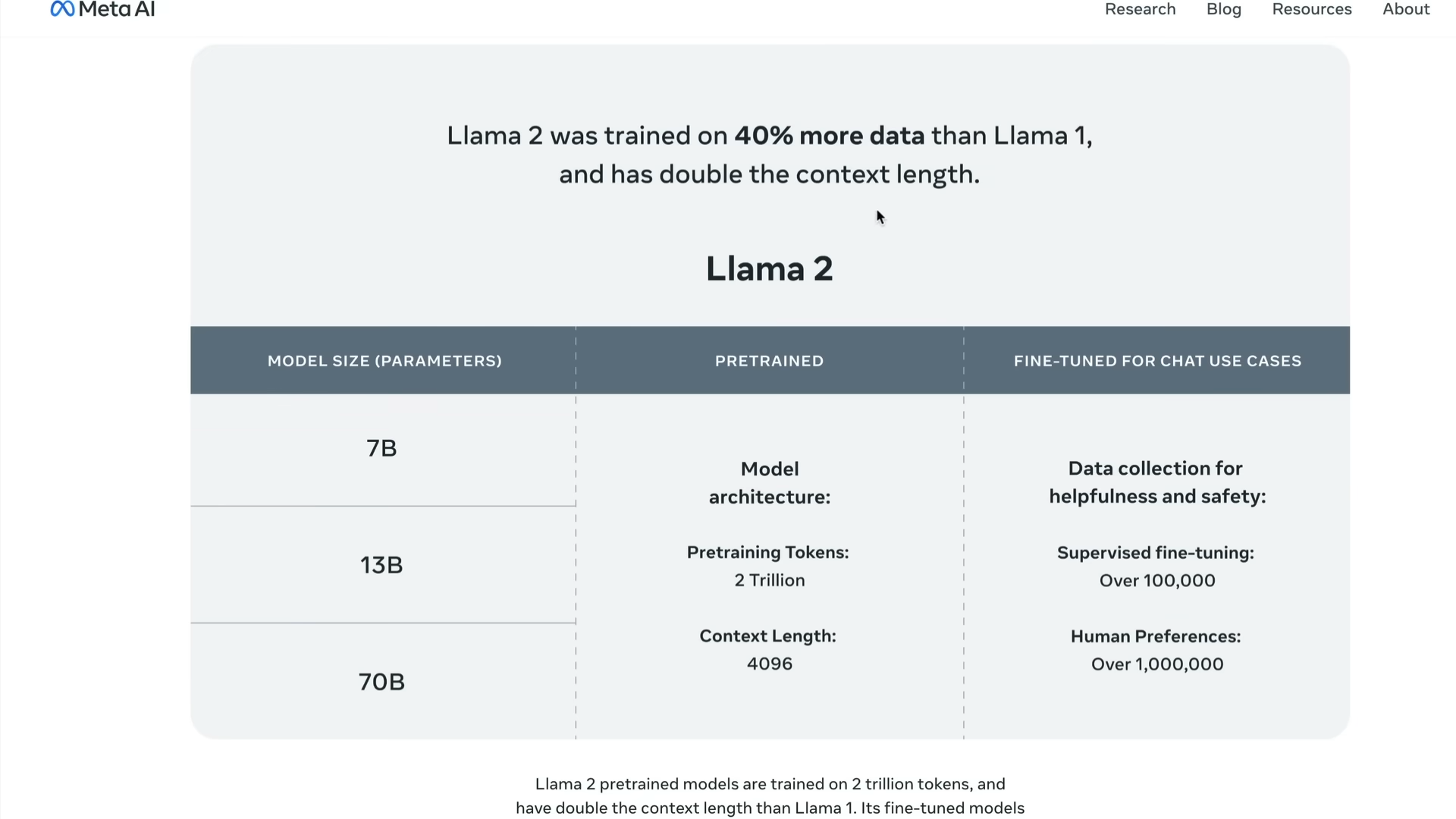

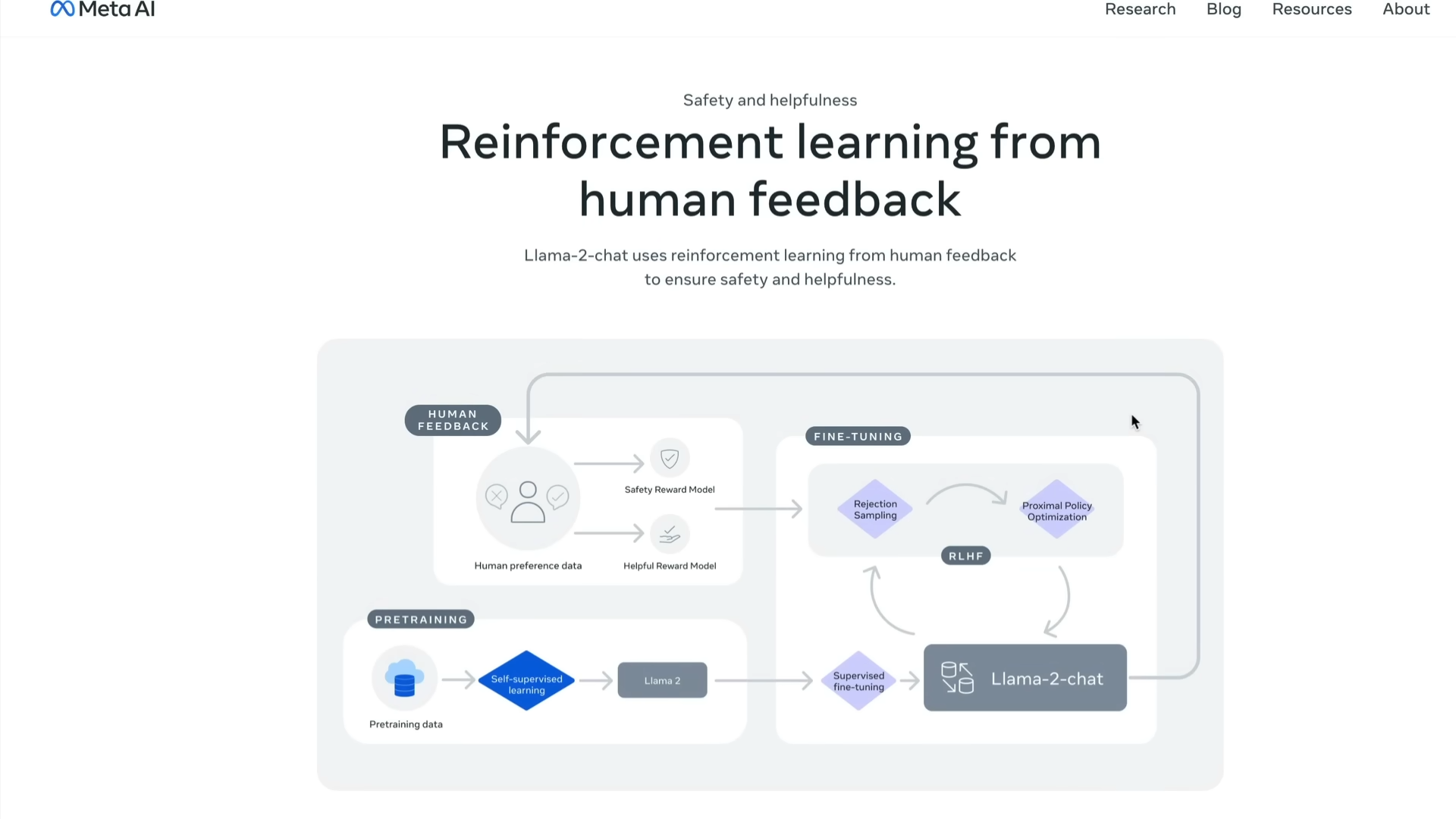

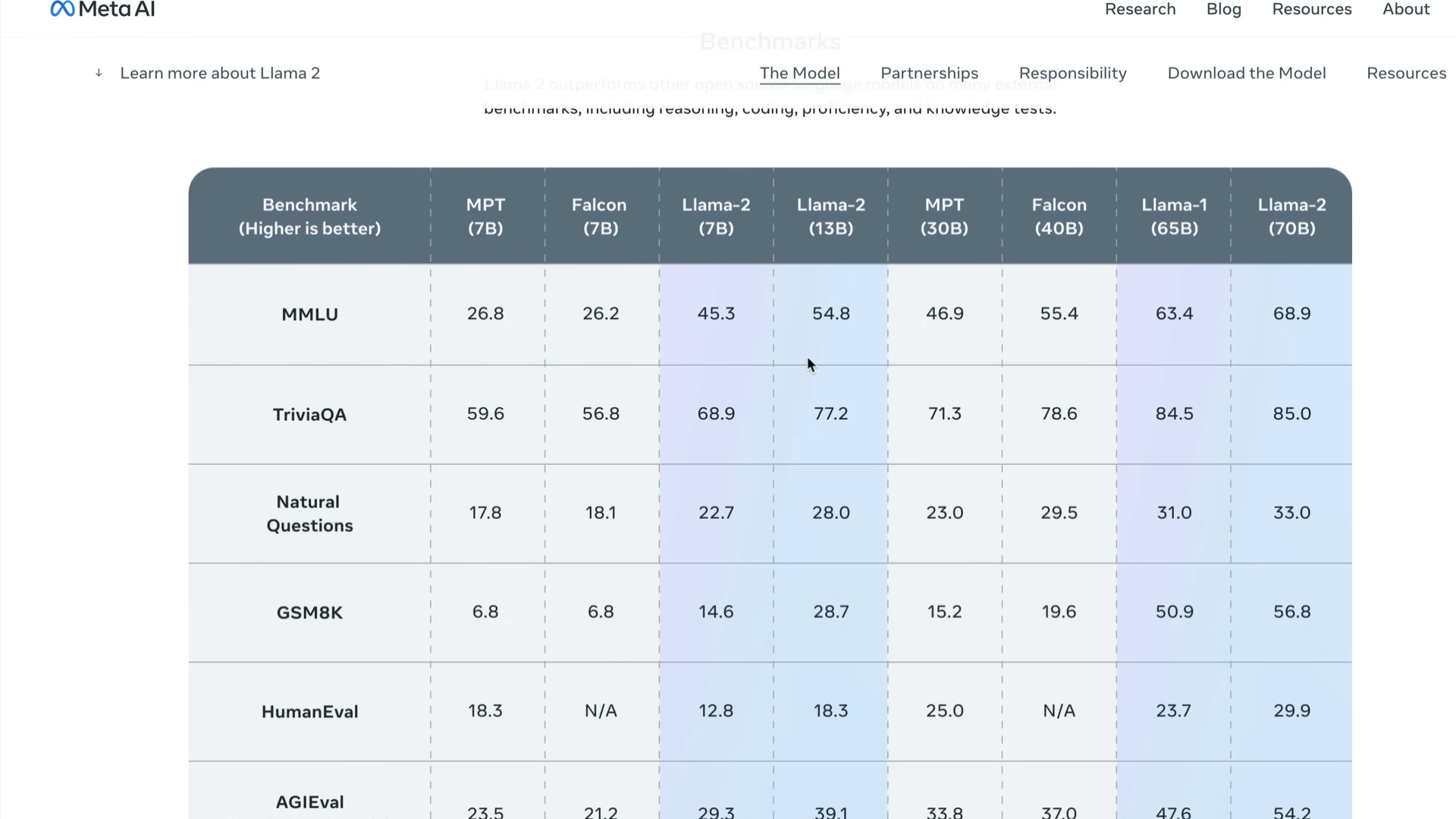

Facebook is trying to be the savior of open-source AI with Llama 2, and it's not as if they're releasing just another one of their models. It's the best open model at this point, and we're going to get into the benchmarks.

First, a quick look at Llama 2's general parameters. Llama 2 is trained on 40 percent more data than Llama 1 and has double the context length, which means it supports 4,096 tokens (roughly 4k). It has been trained on 2 trillion tokens, whereas Llama 1 was trained on roughly 1.0 to 1.4 trillion tokens depending on model size. Facebook is releasing it in three sizes: 7 billion, 13 billion, and 70 billion parameters.

If you're wondering whether Llama 2 is only a base model: thanks to Facebook, there is also a fine-tuned model for chat use cases. It has been through supervised fine-tuning (also known as instruction fine-tuning) on more than 100,000 examples, and it was also trained on over 1 million human annotations. You might be surprised that we've got RLHF here, reinforcement learning from human feedback, which is one of the secret sauces of ChatGPT and the other closed models. So Llama 2 is an open model trained on 2 trillion tokens, with really good fine-tuning, and with RLHF on top: actual humans annotate the data and rate the responses, and that feedback goes back into the model through reinforcement learning so that it learns to give the kind of responses people usually appreciate.

If you look at the benchmarks, Llama 2 is well ahead. Just compare the 7-billion-parameter models and look at the first three: MPT, Falcon, and Llama 2. Everybody seems to be a Falcon fan.
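To sanity-check the "40 percent more data" and "double the context length" claims, here is a small back-of-the-envelope sketch. The Llama 1 figures (about 1.4 trillion tokens for its largest models and a 2,048-token context) are assumptions I'm plugging in for illustration, not numbers taken from this post.

```python
# Rough comparison of Llama 1 and Llama 2 training scale.
# Llama 1 values are assumptions for illustration (largest Llama 1 models).
LLAMA1_TOKENS = 1.4e12
LLAMA2_TOKENS = 2.0e12
LLAMA1_CONTEXT = 2048
LLAMA2_CONTEXT = 4096


def relative_increase(old: float, new: float) -> float:
    """Return the fractional increase from old to new."""
    return (new - old) / old


data_increase = relative_increase(LLAMA1_TOKENS, LLAMA2_TOKENS)
context_ratio = LLAMA2_CONTEXT / LLAMA1_CONTEXT

print(f"Extra training data: {data_increase:.0%}")
print(f"Context length ratio: {context_ratio:.0f}x")
```

Under those assumptions the numbers come out to roughly 43 percent more data and a 2x context window, which lines up with what Meta is advertising.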

On MMLU, Llama 2 is way ahead of the 7-billion-parameter Falcon. On Natural Questions, Falcon scored 18 and Llama 2 scored 22. On GSM8K, Llama 2 scores more than double, and the same pattern holds on most of these benchmarks. And this is just the 7-billion-parameter model, the one you can fit on a smaller GPU. When you move to the larger models, especially the 70-billion-parameter one, which is the biggest of the lot, Llama 2 is still smashing everybody else in the list. It beats the 30-billion-parameter MPT and it beats the larger Falcon model (40 billion parameters). Llama 2 comes out on top in every benchmark shown.
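To put "fits in a smaller GPU" into numbers, here is a rough sketch that estimates how much memory the weights alone need at different precisions. The bytes-per-parameter table is a common rule of thumb, not something from Meta's documentation, and it ignores activations and the KV cache, so treat the output as a lower bound.

```python
# Approximate memory needed just to hold the model weights.
# Rule-of-thumb sizes per parameter; real usage is higher at inference time.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}
MODEL_SIZES = {"7B": 7e9, "13B": 13e9, "70B": 70e9}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return the weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in MODEL_SIZES.items():
    row = ", ".join(
        f"{dtype}: {weight_memory_gb(params, dtype):.1f} GB"
        for dtype in BYTES_PER_PARAM
    )
    print(f"Llama 2 {name} -> {row}")
```

By this estimate the 7B model needs about 14 GB in fp16, while the 70B model needs about 140 GB, which is why the big one usually ends up split across several GPUs or quantized.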

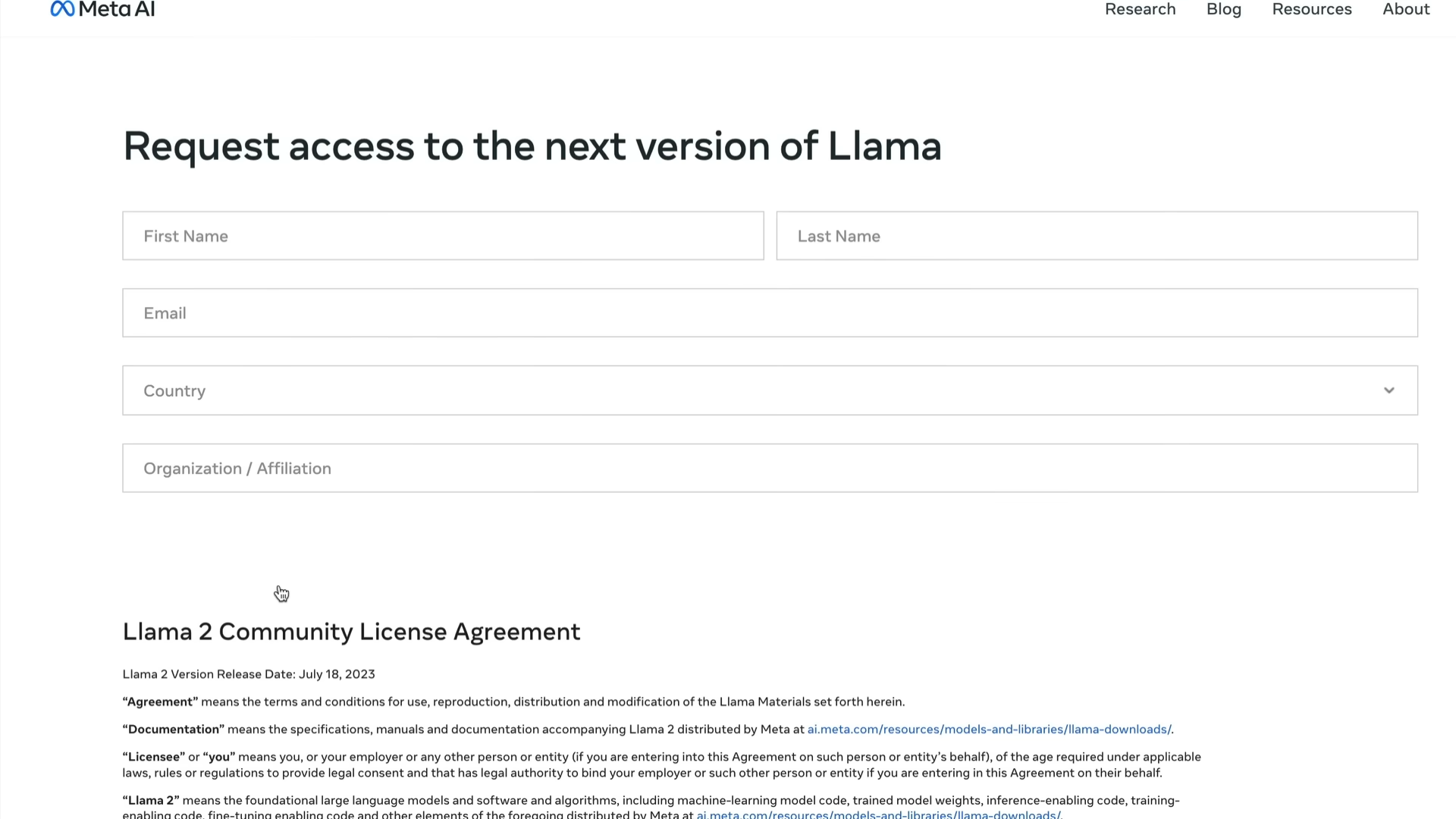

If you look at the technical details, you'll see that the model uses publicly available datasets for instruction fine-tuning, and the instruction-tuned version is what ships as the chat model. So the key points if you're going to use Llama 2: it's free for research and commercial use, Meta is releasing both the base Llama 2 model and the chat model, and the chat model isn't just any chat model, it's a model trained with RLHF (reinforcement learning from human feedback).

How do you access the model? All you have to do is click "Download the Model", which takes you to a request form. Enter your first name, last name, email address, country, and organization or affiliation. The form has a lot of detail about what you should not do with the model. Accept the terms and you get to download the model itself, which also comes with certain responsibilities. The download includes the model code, the model weights, the README, the Responsible Use Guide, the license, the Acceptable Use Policy, and the model card.

So all of these come with the model, and as I said before, you get two variants: Llama 2, the base model, and the fine-tuned Llama 2 Chat, both free for research and commercial purposes. Now let's go see live how the model performs. The playground is hosted by Andreessen Horowitz, which is quite surprising. To be honest, I was surprised to see a16z hosting a Meta AI model, though not entirely surprised. If you go to the partnerships section you see a lot of logos, such as Atlassian and Orange.

What I was surprised to see is Microsoft. Once again, anywhere AI is mentioned on the internet, Microsoft seems to be present. Microsoft has invested in OpenAI, Microsoft has invested in OpenAI's competitor, Microsoft is releasing its own large language model, and now Microsoft is also listed as a partner for Llama 2. I don't know in what capacity, maybe by providing Azure. Honestly, I'm surprised. It seems Microsoft is going all in and placing a bet wherever AI research is happening, and that might be a good thing for Microsoft as a company. So yeah, good luck Satya. You guys are doing amazing.

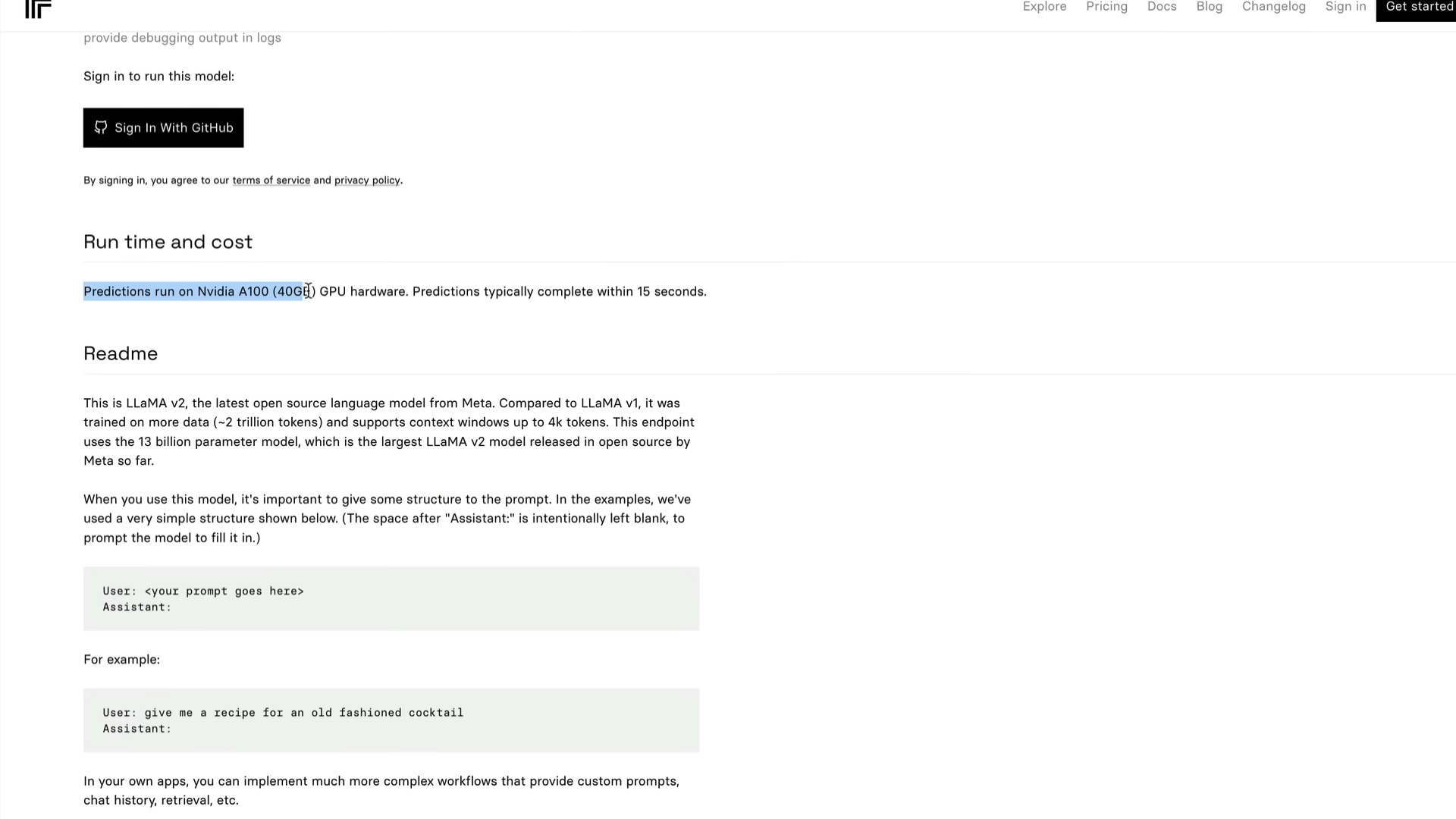

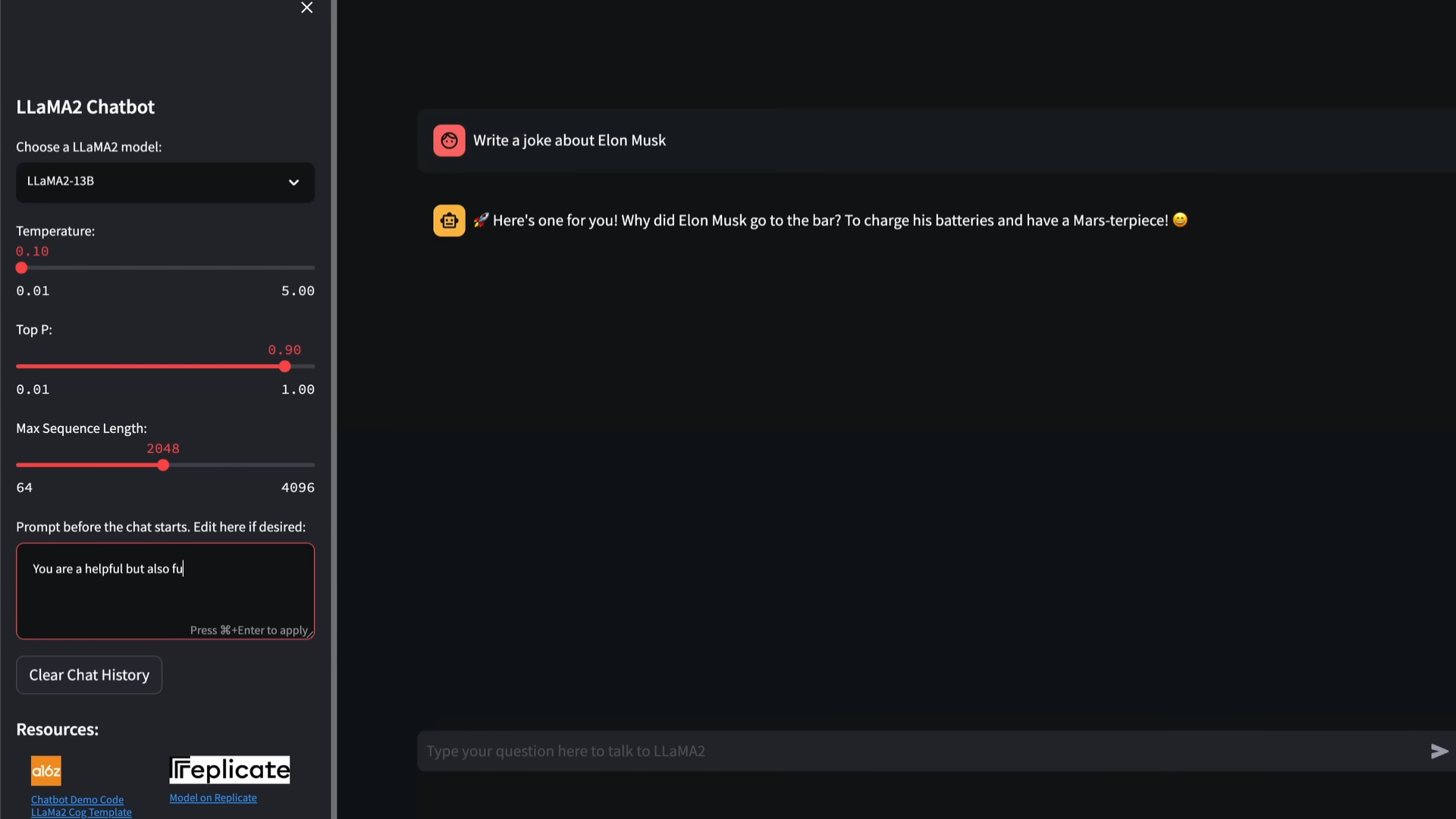

In the playground itself, llama2.ai, you can select the 13-billion-parameter model or the 7-billion-parameter model. Interestingly, you can also see how long it takes: according to Replicate, predictions run on NVIDIA A100 (40 GB) GPU hardware and typically complete within 15 seconds.

Let's ask the clichéd question we usually ask: "Write a joke about Elon Musk." Here is the answer: "Why did Elon go to the bar? To charge his batteries and have a masterpiece." It's strange.

Okay, let's change the context: "You are a helpful but also funny and sarcastic assistant. Try to answer every question like an Indian auntie." That's the context we're giving. Now the question: "Hey, I just scored 100 out of 100 in my exams. What do you think?" The running joke in my country is that Indian elders are not satisfied even if you get 100 marks, so let's see whether Llama 2 can actually make a sarcastic comment about it. The temperature is set at 0.10.

It says: "Oh no, you must be a genius, beta! Or maybe you just studied hard and ate a lot of chai and samosas. Either way, I'm proud of you." "Beta" in Hindi means child, or son, I think; my Hindi is quite bad. Oh, it's proud of me. So that's not quite the sarcastic answer I was looking for.

So we're going to change the context again: "You are a helpful assistant. If you do not know the answer, please say I don't know." And let's ask a programming question: "I want to fine-tune Llama models. How do I do it in Python?" You can also change the context length to 4,000 tokens, which I'm not going to do now, but you can.

This is a free interface where you can go play with the model. I'm not sure how long it's going to stay free, but given that it's backed by Andreessen Horowitz (a16z), I guess it might stay for quite a long time, and it gives us a Claude-style playground for trying out the model. Another good thing here is that most free playgrounds, like ChatGPT or Claude, do not let you play with the model parameters. I think being able to do that is very important.
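The "context" we keep changing in the playground is what is usually called a system prompt. As a minimal sketch, this is how a single-turn prompt can be assembled in the `[INST]` / `<<SYS>>` format used by Meta's reference chat code for Llama 2. How the playground builds its prompts internally isn't shown here, so take `build_prompt` as a hypothetical helper for illustration.

```python
# Single-turn Llama 2 chat prompt in the [INST] / <<SYS>> format.
# build_prompt is a hypothetical helper, not part of any official library.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_prompt(system_prompt: str, user_message: str) -> str:
    """Wrap a system prompt and one user message in Llama 2 chat markers."""
    return (
        f"{B_INST} {B_SYS}{system_prompt.strip()}{E_SYS}"
        f"{user_message.strip()} {E_INST}"
    )


prompt = build_prompt(
    "You are a helpful assistant. If you do not know the answer, "
    "please say I don't know.",
    "I want to fine-tune Llama models. How do I do it in Python?",
)
print(prompt)
```

The tokenizer normally adds the beginning-of-sequence token on its own, so it is left out of the string here.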

If you're looking at Llama not just for personal use but from a developer's perspective, playing with these parameters can give you a wide variety of answers. I appreciate the openness, and I appreciate Meta AI giving us these parameters to play with. The answer was about Llama itself, where I half expected something about LoRA instead, so that's a good thing here. So this is the interface. If you want the chatbot demo code, you can click through and get it. The code is available, the Dockerfile is available, and they also explain how to start using it if you have a GPU available. That's another amazing thing: the entire code is there for you to use, and if you want the Cog template, you can click through and get that as well.
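To see why a parameter like temperature changes the answers so much, here is a small self-contained sketch of temperature-scaled softmax, the standard way sampling temperature is applied to a model's output scores. The logits are made-up numbers for three candidate tokens, not real Llama 2 outputs.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next tokens.
example_logits = [2.0, 1.0, 0.5]

for temp in (0.1, 1.0, 2.0):
    probs = softmax_with_temperature(example_logits, temp)
    formatted = ", ".join(f"{p:.3f}" for p in probs)
    print(f"temperature={temp}: [{formatted}]")
```

At a low temperature like the playground's 0.10, almost all the probability lands on the top token, so answers are nearly deterministic. Higher values spread the probability out and give more varied responses.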

This first one says Llama is for research purposes only and is not intended for commercial use. I think that's talking about the first version, if you want to run that. Anyway, the second version has all the details if you want to use it. I appreciate how straightforward it is to download the model: it's available for free and commercial use, and it ranges from 7 billion to 70 billion parameters. I'm happy that a company has decided to spend a lot of money on RLHF and then release the model as open source. Having a strong open-source community around my blog, I'm quite happy to see Meta AI trying to be the poster boy of open-source AI here. I'm looking forward to seeing how we can run this code on Google Colab, including the free tier, and also to the fine-tuning side of things. Let me know in the comment section after playing around with this model.

How do you feel about it?

I didn't get much time to play around with this model, but that is my next video: exploring Llama 2 and comparing Llama 2 and ChatGPT responses to see how it fares against the holy grail, GPT-4, the king of them all from OpenAI.
