The Magic of Effortless Software Deployment with Docker!

Jagan Balasubramanian

Hello, everyone! I am Jagan Balasubramanian. In this blog, I am thrilled to demystify the magic of Docker. We'll embark on a journey through various essential topics, uncovering the evolution of software deployment, the challenges faced by traditional approaches, and the breakthroughs Docker offers to overcome these obstacles.

Let's dive right in and explore the fascinating world of software deployment. Here's a glimpse of the topics we'll be covering:

What is Software Deployment?

Software Deployment: Before Docker's Rise

Why Docker?

Stay tuned as we uncover the mystery behind Docker and step into the revolutionary world of containerization.

Now, let's unlock the true potential of software deployment together!

What is Software Deployment?

Software deployment is one of the most important aspects of the software development process. Deployment is the mechanism through which applications are delivered from developers to users. In short, software deployment is the process of making an application work on a target environment, whether that is a test server, a production environment, a user's computer, or a mobile device.

Software Deployment: Before Docker's Rise

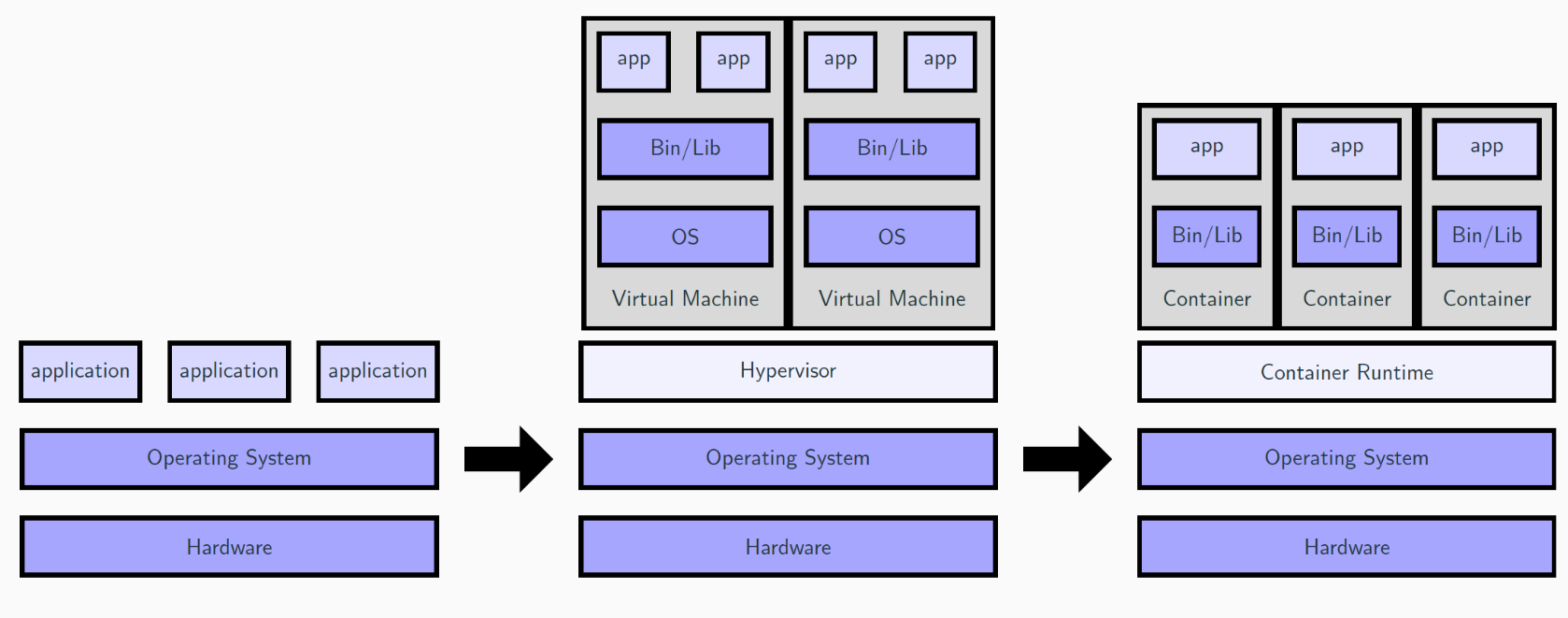

In the early days of software deployment, each application required its own dedicated physical server (computer). This traditional approach was costly, resource-intensive, and wasteful: applications often used only a fraction of the server's resources, leaving much of it idle. When an application needed more resources than anticipated, adding new servers was the only option, which led to over-provisioning and unnecessary expenditure. Scaling down was equally difficult, making resource optimization a complex task. This is what we call "Traditional Deployment."

The Advent of Virtualization: To overcome the limitations of traditional deployment, virtualization emerged as a game-changing technique. Virtualization allowed multiple "mini-computers" (virtual machines or VMs) to coexist within a single physical server. Each VM acted as a self-contained environment, running its own operating system and applications in isolation. This improved resource utilization, as applications could be distributed across VMs based on their resource needs. Scaling became more flexible, with the ability to add or remove VMs as required. While virtualization provided some advantages, it introduced new challenges.

Disadvantages of Virtualization: Despite better resource utilization, VMs came with their own overhead. Running a separate operating system in each VM consumed significant memory and CPU, limiting how many VMs a single server could host. VMs also took longer to start, because each one had to boot its own OS instance, which slowed how quickly applications became available. Finally, VMs had large storage footprints, since each VM included a full OS and its dependencies.
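To make the density point concrete, here is a small back-of-the-envelope sketch. The numbers are hypothetical (a 64 GiB host, an application needing 1 GiB, and an assumed 1 GiB of memory per guest OS), and it looks only at memory while treating per-container overhead as negligible; real capacity planning also has to account for CPU, disk, and the hypervisor or container runtime itself. The function name `instances_per_host` is purely illustrative.

```python
def instances_per_host(host_mem_gib: float, app_mem_gib: float,
                       per_instance_overhead_gib: float) -> int:
    """Number of app copies that fit in host memory when each copy also
    carries a fixed overhead (for a VM, its own guest operating system)."""
    per_instance = app_mem_gib + per_instance_overhead_gib
    if per_instance <= 0:
        raise ValueError("each instance must need some memory")
    # Floor division: a partially fitting instance does not count.
    return int(host_mem_gib // per_instance)


# Hypothetical numbers, for illustration only.
HOST_GIB = 64      # memory on one physical server
APP_GIB = 1        # memory the application itself needs
GUEST_OS_GIB = 1   # assumed extra memory for each VM's own operating system

vms = instances_per_host(HOST_GIB, APP_GIB, GUEST_OS_GIB)
containers = instances_per_host(HOST_GIB, APP_GIB, 0)  # container overhead treated as negligible
print(f"VMs: {vms}, containers: {containers}")  # VMs: 32, containers: 64
```

Under these assumptions, the per-VM operating system alone halves how many copies of the application one server can hold.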

The Rise of Containerization: To address the limitations of virtualization, containerization entered the scene as a more efficient alternative. Containers do not run a full operating system of their own; instead, they share the host system's kernel, which makes them much more lightweight than virtual machines.

Containers are created from images that package only the libraries and dependencies the application needs (often on top of a slim base OS userland, but without a kernel, which comes from the host). These lightweight images provide the application with its required runtime environment. As a result, containers start almost instantly, and their images are much smaller than VM images, which improves storage efficiency.

Why Docker?

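In the world of software deployment, we have explored three approaches: traditional deployment, virtualization, and containerization. Among these, containerization has become the preferred choice because it isolates applications without the overhead of a full guest operating system per application. (Virtual machines still offer a stronger isolation boundary, since containers share the host kernel.) Containerization achieves this with two Linux kernel features: namespaces, which restrict what a process can see (its own process IDs, network interfaces, filesystem mounts, hostname, and so on), and cgroups, which limit and account for how much CPU, memory, and I/O it can use.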
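On Linux you can see namespaces at work without any container tooling: each process's namespace memberships appear as symbolic links under `/proc/<pid>/ns`, with values such as `uts:[4026531838]`, and two processes are in the same namespace when those link values match. The sketch below reads them; it assumes Linux and Python 3.10+, and the helper names (`namespace_ids`, `parse_ns_link`, `shared_namespaces`) are hypothetical.

```python
import os
import re

# A namespace link looks like "<type>:[<inode number>]", e.g. "net:[4026531840]".
NS_LINK = re.compile(r"^(\w+):\[(\d+)\]$")


def parse_ns_link(link: str) -> tuple[str, int]:
    """Split a /proc/<pid>/ns link value into (namespace type, inode number)."""
    match = NS_LINK.match(link)
    if match is None:
        raise ValueError(f"unexpected namespace link: {link!r}")
    return match.group(1), int(match.group(2))


def namespace_ids(pid: str = "self") -> dict[str, str]:
    """Map each entry in /proc/<pid>/ns to its link value.

    Returns an empty dict when /proc is unavailable (e.g. not on Linux).
    """
    ns_dir = f"/proc/{pid}/ns"
    try:
        names = sorted(os.listdir(ns_dir))
    except OSError:
        return {}
    ids = {}
    for name in names:
        try:
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # some links can be unreadable (permissions, pid_for_children)
    return ids


def shared_namespaces(pid_a: str, pid_b: str) -> list[str]:
    """Namespace entries in which both processes belong to the same namespace."""
    a, b = namespace_ids(pid_a), namespace_ids(pid_b)
    return [name for name in a if name in b and a[name] == b[name]]


if __name__ == "__main__":
    for name, link in namespace_ids().items():
        print(f"{name:<18} {link}")
```

If you run the script on the host and again inside a container, you would expect different identifiers for the namespaces Docker creates by default, such as `mnt`, `pid`, `net`, `ipc`, and `uts`.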

Let's use an analogy to simplify containerization's magic.

Imagine your house as your computer, and each room inside the house as a container. The namespaces give each room its own isolated space, restricting what it can see and access. Meanwhile, the engineer (cgroups) decides how much of the house's resources, such as electricity and water, each room may use, so that no single room starves the others.

The container runtime containerd uses namespaces and cgroups (through a low-level OCI runtime, runc by default) to create containers. Docker builds on containerd's capabilities and adds higher-level functionality such as networking and volumes.

Can we create containers and isolate applications manually using namespaces and cgroups?

Absolutely! But here comes the beauty of Docker: it streamlines the process, sparing us the pain of doing it ourselves.

Have you tried using containerization in your software development journey? How has Docker's simplicity influenced your containerization experience?

Written by Jagan Balasubramanian, an open source contributor and aspiring DevOps engineer who loves writing blogs and exploring with the community.