The Art of Prompt Engineering

padmanabha reddy

padmanabha reddy

Prompt engineering is a relatively new discipline which involves developing and optimizing prompts to efficiently use language models (LMs) for a wide variety of applications and research topics. Prompt engineering skills help to better understand the capabilities and limitations of large language models (LLMs). If you’re still wondering what prompt engineering is all about, let’s just ask ChatGPT!

Regardless of whether we’re utilizing prompt engineering for simple daily queries, finding recipe inspirations, or planning out next trip using the web user interface (e.g. ChatGPT UI), or if we’re involved in developing applications that access large language model APIs (e.g. ChatGPT API), improving our prompt writing skills will significantly enhance the quality of responses we get. Understanding prompt engineering also provides useful insights into the workings of large language models and their capabilities and limitations.

Large Language Models(LLM's):

Large language models are highly sophisticated natural language processing (NLP) algorithms powered by deep learning techniques and trained on massive datasets. These models, such as GPT-3 and BERT, have the ability to understand, generate, and manipulate human language with remarkable accuracy and versatility. They leverage vast amounts of text data to capture complex language patterns, context, and semantics, making them powerful tools for a wide range of NLP tasks and applications.

Getting started with Prompt Engineering:-

Effective engineering of prompts significantly enhances the performance of large language models(LLM's), leading to responses that exhibit higher accuracy, relevance, and overall usefulness. The prompt itself acts as an input to the model, which signifies the impact on the model output. A good prompt will get the model to produce desirable output, whereas working iteratively from a bad prompt will help us understand the limitations of the model and how to work with it.

Guidelines/principles for prompting:-

Isa Fulford and Andrew Ng in the ChatGPT Prompt Engineering for Developers course mentioned two main principles of prompting:

Principle 1: Write clear and specific instructions:

This principle emphasizes the importance of providing unambiguous and precise instructions to the language model. When crafting prompts, it is essential to be clear about what information you want the model to generate and the format you expect the answer in. Avoid using vague language or open-ended questions that might lead to ambiguous responses. Instead, use explicit instructions, examples, and context to guide the model towards the desired output. Well-defined prompts help the model understand the task at hand and produce more accurate and relevant responses.

Principle 2: Give the model time to “think”:

The principle acknowledges that language models, despite their vast capabilities, still need time to process information and generate responses. Asking complex questions or expecting immediate answers might overwhelm the model and lead to inaccurate or incomplete outputs. Therefore, it is crucial to give the model sufficient time to "think" and process the prompt effectively. One way to achieve this is by breaking down complex questions into smaller sub-tasks and providing clear step-by-step instructions. Additionally, specifying a delay or a "wait" command in the prompt can allow the model to process information more thoroughly and generate better responses.

"""Prompting is akin to guiding a curious and intelligent child. While the child has vast potential, it requires clear instructions, examples, and a defined output format to understand what is expected. We need to allow the child some time to process and solve the problem, offering step-by-step instructions if needed. Sometimes, the child's imagination may lead to creative responses, similar to how large language models can generate hallucinations. To prevent this, it is crucial to grasp the context and craft appropriate prompts that guide the model in providing accurate answers.

"""

Prompt Engineering techniques:-

Prompt engineering is a growing field, with research on this topic rapidly increasing from 2022 onwards. Some of the state-of-the-art prompting techniques commonly used include n-shot prompting, chain-of-thought (CoT) prompting, and generated knowledge prompting.

1. N-shot prompting (Zero-shot prompting, Few-shot prompting):

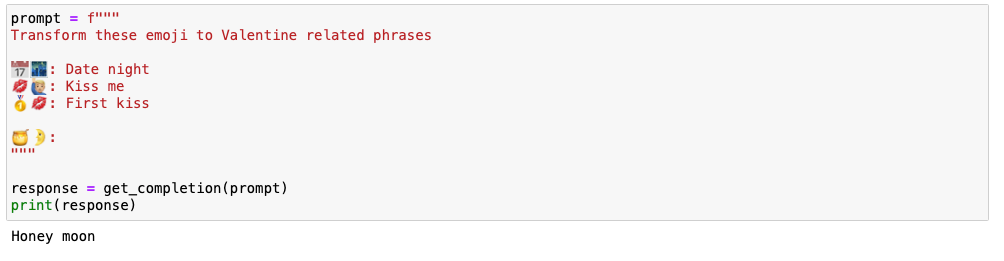

"N" refers to the number of training examples or clues provided to the model to make predictions. Zero-shot prompting is a special case where the model makes predictions without any additional training data.

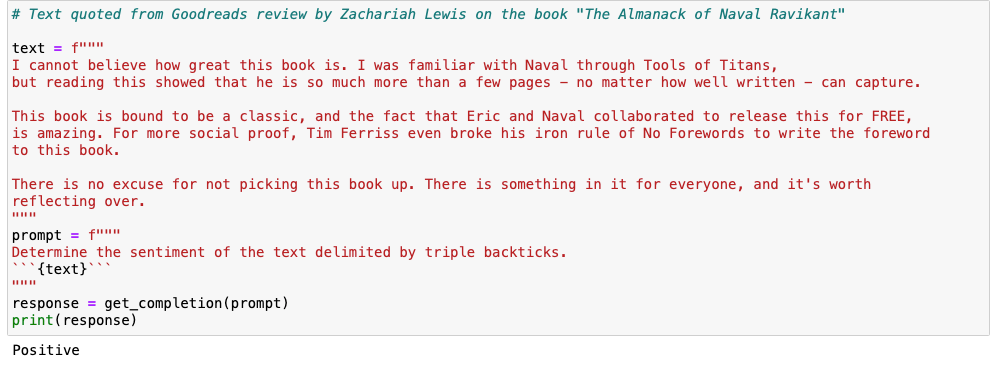

In Zero-shot prompting, the model can handle common and straightforward tasks such as classification (e.g., sentiment analysis, spam classification), text transformation (e.g., translation, summarizing, expanding), and simple text generation. This is possible because the model has been extensively trained on a wide range of language tasks and has learned to generalize well to new tasks without specific fine-tuning.

The flexibility of zero-shot prompting makes it a powerful tool for various natural language processing tasks, allowing users to leverage the model's pre-existing knowledge to perform predictions without the need for task-specific training data.

Few-shot prompting uses a small amount of data (typically between two and five) to adapt its output based on these small examples. These examples are meant to steer the model to better performance for a more context-specific problem.

2. Chain-of-Thought (CoT) prompting:-

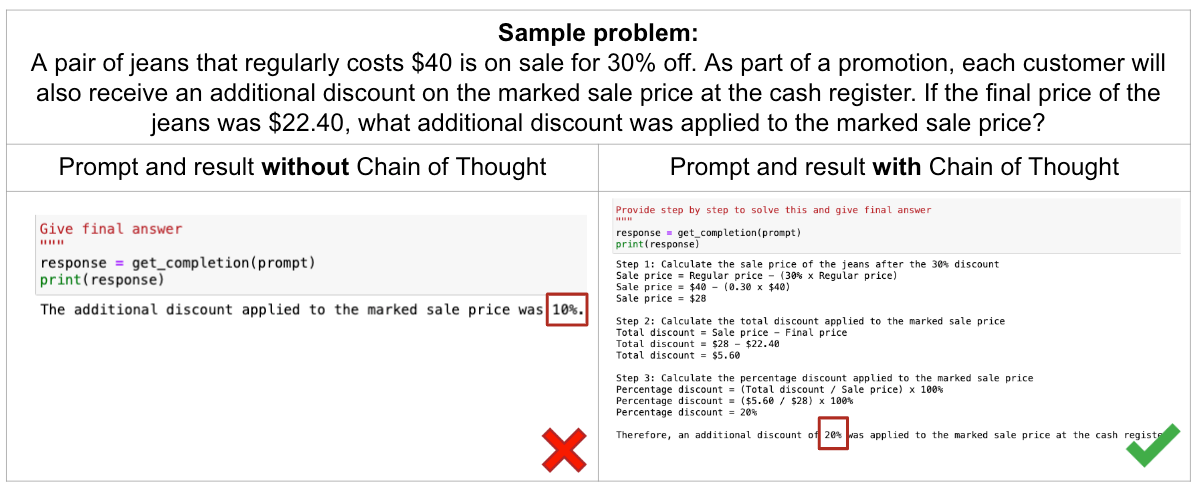

In 2022, Google researchers introduced "Chain-of-Thought" prompting, a novel approach that involves prompting a language model to provide intermediate reasoning steps along with the final answer for multi-step problems. The concept behind this technique is to emulate an intuitive thought process similar to how humans work through complex reasoning tasks.

Instead of just asking for a direct answer, the model is encouraged to generate a sequence of logical steps or explanations that lead to the final solution. This can help in understanding the model's reasoning process and ensure that it arrives at the correct answer through logical and coherent steps, enhancing its interpretability and reliability for intricate problem-solving tasks.

This method enables models to decompose multi-step problems into intermediate steps, enabling them to solve complex reasoning problems that are not solvable with standard prompting methods.

3. Generated knowledge prompting:-

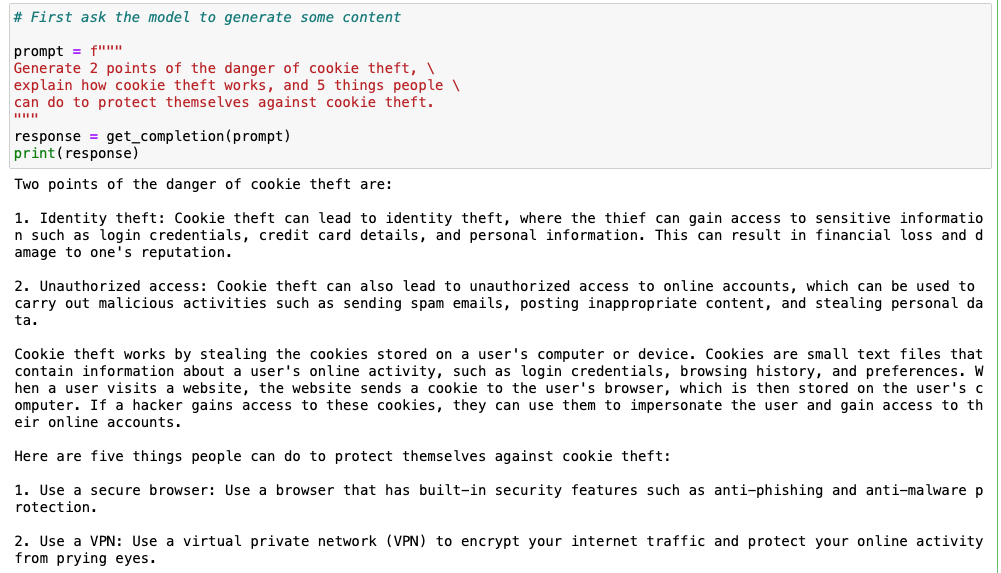

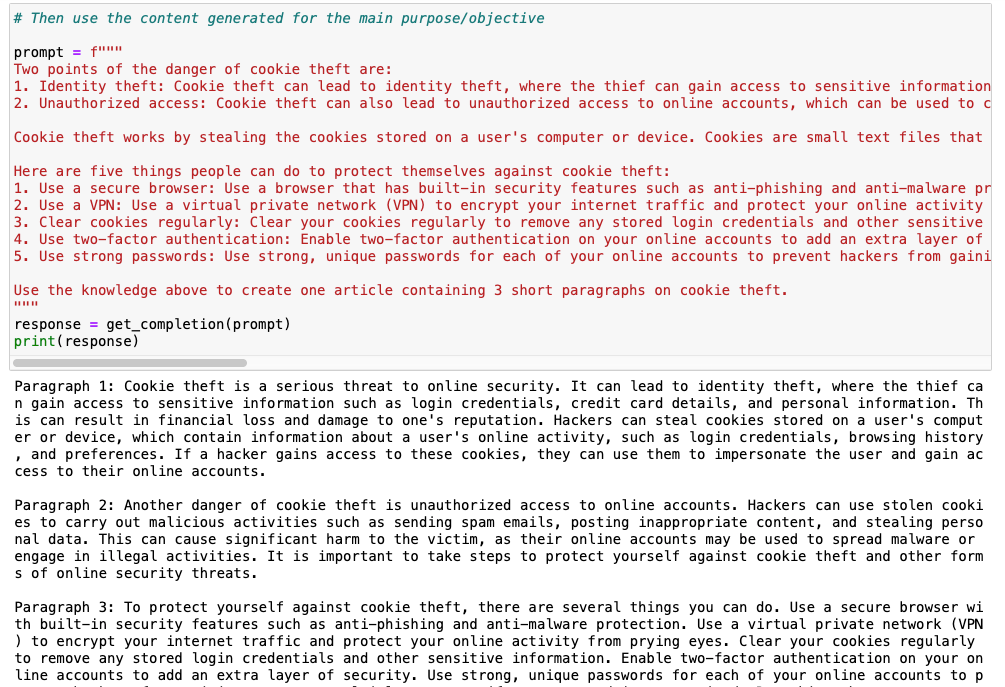

Generated knowledge prompting involves asking a large language model (LLM) to generate relevant and valuable information based on a specific question or prompt. The idea is to utilize the generated knowledge as additional input to enhance the LLM's response to a subsequent inquiry.

For instance, if you want the LLM to write an article about cybersecurity, specifically focusing on cookie theft, you can first prompt it to generate potential risks and protective measures related to cookie theft. By doing so, the LLM gains valuable insights and can produce a more informative and comprehensive blog post on the topic.

Additional tactics:-

On top of the above-specified techniques, you can also use these tactics below to make the prompting more effective

Use delimiters like triple backticks (```), angle brackets (<>), or tags (<tag> </tag>) to indicate distinct parts of the input, making it cleaner for debugging and avoiding prompt injection.

Ask for structured output (i.e. HTML/JSON format), this is useful for using the model output for another machine processing.

Specify the intended tone of the text to get the tonality, format, and length of model output that you need. For example, you can instruct the model to formalize the language, generate not more than 50 words, etc.

Modify the model’s temperature parameter to play around the model’s degree of randomness. The higher the temperature, the model’s output would be random than accurate, and even hallucinate.

sources:-

https://learn.deeplearning.ai/chatgpt-prompt-eng/lesson/1/introduction

Subscribe to my newsletter

Read articles from padmanabha reddy directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by