Tiny PowerShell Project 7 - SQLite & CRUD

Hooman Pegahmehr

Hooman Pegahmehr

Although we can download and install System.Data.SQLite .NEt provider, load the libraries, and go about using SQLite that way, PSSQLite module exists for interacting with SQLite databases without having to deal with .NET classes.

Here's how to use PSSQLite:

Install the PSSQLite Module:

If you're using PowerShellGet (available in PowerShell 5 and later, including PowerShell Core), you can install the module directly from the PowerShell Gallery:

Install-Module -Name PSSQLite -Scope CurrentUserThe

-Scope CurrentUserswitch ensures the module is installed only for the current user, avoiding the need for elevated permissions.Use PSSQLite cmdlets:

After installation, you can use the provided cmdlets to work with SQLite. Here's a quick example:

# Create or open a SQLite database $databasePath = "./MyDatabase.sqlite" # Run a query against the SQLite database Invoke-SqliteQuery -DataSource $databasePath -Query @" CREATE TABLE IF NOT EXISTS users ( id INTEGER PRIMARY KEY, name TEXT NOT NULL, age INTEGER ); INSERT INTO users (name, age) VALUES ('John Doe', 30); "@ # Retrieve data $users = Invoke-SqliteQuery -DataSource $databasePath -Query "SELECT * FROM users" $users | ForEach-Object { Write-Output "Name: $($_.name), Age: $($_.age)" }Explore Additional Cmdlets:

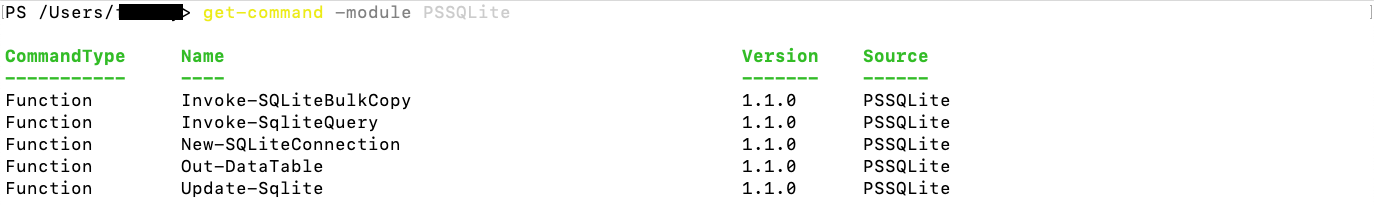

get-command -module PSSQLite

As you see above, we can use this command to see the available cmdlet to use. This module makes SQLite interactions in PowerShell more intuitive, especially for those already familiar with PowerShell cmdlets.

Here's CRUD example using PSSQLite module:

- Create:

$databasePath = "./MyDatabase.sqlite"

try {

# Create a table (if not exists) and insert a new user

Invoke-SqliteQuery -DataSource $databasePath -Query @"

CREATE TABLE IF NOT EXISTS users (

id INTEGER PRIMARY KEY,

name TEXT NOT NULL,

age INTEGER

);

INSERT INTO users (name, age) VALUES ('John Doe', 30);

"@

}

catch {

Write-Error "Error encountered during setup: $_"

}

- Read:

try {

# Fetch all users

$users = Invoke-SqliteQuery -DataSource $databasePath -Query "SELECT * FROM users"

$users | ForEach-Object {

Write-Output "ID: $($_.id), Name: $($_.name), Age: $($_.age)"

}

}

catch {

Write-Error "Error encountered during read operation: $_"

}

- Update with Exception Handling:

try {

# Update John Doe's age

Invoke-SqliteQuery -DataSource $databasePath -Query "UPDATE users SET age = 31 WHERE name = 'John Doe'"

}

catch {

Write-Error "Error encountered during update operation: $_"

}

- Delete:

try {

# Delete John Doe from the database

Invoke-SqliteQuery -DataSource $databasePath -Query "DELETE FROM users WHERE name = 'John Doe'"

}

catch {

Write-Error "Error encountered during delete operation: $_"

}

Here are a few key points:

$_in thecatchblock refers to the current exception. It provides detailed information about the error.If you have resources that need to be cleaned up or closed (like open files or network connections), you can use the

finallyblock, which will execute regardless of whether an exception was thrown.

With this approach, you can gracefully handle errors and ensure that your script provides meaningful feedback in the event of unexpected issues. Now let's focus on our tiny project, I start by creating the following table:

CREATE TABLE SystemMetrics (

id INTEGER PRIMARY KEY AUTOINCREMENT,

CPUUsage INTEGER,

MemoryUsage INTEGER,

createdAt DATETIME DEFAULT CURRENT_TIMESTAMP

);

Here's a breakdown of the SQL command:

id: An integer column that is the primary key for the table. TheAUTOINCREMENTkeyword ensures that each new record gets a unique ID that is incremented from the last one.CPUUsageandMemoryUsage: Both are integer columns to store the respective usage metrics.createdAt: ADATETIMEcolumn that defaults to the current timestamp whenever a new record is created. TheDEFAULT CURRENT_TIMESTAMPkeyword sets the default value for this column to the current date and time whenever a new row is added, so you don't have to manually input the timestamp.

To execute this in PowerShell using the PSSQLite module:

$databasePath = "./MyDatabase.sqlite"

try {

# Create the SystemMetrics table

Invoke-SqliteQuery -DataSource $databasePath -Query @"

CREATE TABLE SystemMetrics (

id INTEGER PRIMARY KEY AUTOINCREMENT,

CPUUsage INTEGER,

MemoryUsage INTEGER,

createdAt DATETIME DEFAULT CURRENT_TIMESTAMP

);

"@

}

catch {

Write-Error "Error encountered during table creation: $_"

}

This will create a table SystemMetrics in the SQLite database with the described columns and constraints.

Now, how do we capture/capture the available CPU and Memory usage?

$cpuCounter = "\Processor(_Total)\% Processor Time"

$cpuLoad = Get-Counter -Counter $cpuCounter -SampleInterval 1 -MaxSamples 1

$cpuUtilization = $cpuLoad.CounterSamples[0].CookedValue

$roundedCpuUtilization = [Math]::Ceiling($cpuUtilization)

Write-Output "Rounded CPU Utilization: $roundedCpuUtilization%"

$memory = Get-CimInstance -ClassName Win32_OperatingSystem

$totalMemory = $memory.TotalVisibleMemorySize

$freeMemory = $memory.FreePhysicalMemory

$usedMemory = $totalMemory - $freeMemory

$memoryUtilization = ($usedMemory / $totalMemory) * 100

$roundedMemoryUtilization = [Math]::Ceiling($memoryUtilization)

Write-Output "Memory Utilization: $roundedMemoryUtilization%"

Now have a simple script that can calculate the utilization, let's think about how we can run this every hour. To run the provided script every hour, you have a few different methods available. Here are two primary methods you can use: Scheduled Jobs and loops. Scheduled jobs are ideal for production scenarios, but to keep the project tiny and experimental we're going to use a loop. You can keep a PowerShell script running indefinitely that sleeps for an hour between executing your commands:

while ($true) {

$cpuCounter = "\Processor(_Total)\% Processor Time"

$cpuLoad = Get-Counter -Counter $cpuCounter -SampleInterval 1 -MaxSamples 1

$cpuUtilization = $cpuLoad.CounterSamples[0].CookedValue

$roundedCpuUtilization = [Math]::Ceiling($cpuUtilization)

Write-Output "Rounded CPU Utilization: $roundedCpuUtilization%"

$memory = Get-CimInstance -ClassName Win32_OperatingSystem

$totalMemory = $memory.TotalVisibleMemorySize

$freeMemory = $memory.FreePhysicalMemory

$usedMemory = $totalMemory - $freeMemory

$memoryUtilization = ($usedMemory / $totalMemory) * 100

$roundedMemoryUtilization = [Math]::Ceiling($memoryUtilization)

Write-Output "Memory Utilization: $roundedMemoryUtilization%"

Start-Sleep -Seconds 3600 # Sleep for 1 hour

}

You can run this script directly, and it will continue to fetch and display the metrics every hour. However, this method isn't ideal for long-term or production use because it relies on the PowerShell session remaining open and uninterrupted.

Now let's try to write the data into our SQLite database instead of writing it to the session.

$databasePath = "./MyDatabase.sqlite"

while ($true) {

$cpuCounter = "\Processor(_Total)\% Processor Time"

$cpuLoad = Get-Counter -Counter $cpuCounter -SampleInterval 1 -MaxSamples 1

$cpuUtilization = $cpuLoad.CounterSamples[0].CookedValue

$roundedCpuUtilization = [Math]::Ceiling($cpuUtilization)

$memory = Get-CimInstance -ClassName Win32_OperatingSystem

$totalMemory = $memory.TotalVisibleMemorySize

$freeMemory = $memory.FreePhysicalMemory

$usedMemory = $totalMemory - $freeMemory

$memoryUtilization = ($usedMemory / $totalMemory) * 100

$roundedMemoryUtilization = [Math]::Ceiling($memoryUtilization)

# Insert metrics into the SQLite table

try {

$query = @"

INSERT INTO SystemMetrics (CPUUsage, MemoryUsage)

VALUES ($roundedCpuUtilization, $roundedMemoryUtilization)

"@

Invoke-SqliteQuery -DataSource $databasePath -Query $query

}

catch {

Write-Error "Error encountered while inserting metrics: $_"

}

Start-Sleep -Seconds 3600 # Sleep for 1 hour

}

There you have it, this will continually gather the CPU and memory utilization, round them, and then insert them into the SQLite database every hour

Subscribe to my newsletter

Read articles from Hooman Pegahmehr directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Hooman Pegahmehr

Hooman Pegahmehr

Hooman Pegahmehr is a performance-driven, analytical, and strategic Technology Management Professional, employing information technology best practices to manage software and web development lifecycle in alignment with client requirements. He builds high-quality, scalable, and reliable software, systems, and architecture while ensuring secure technology service delivery as well as transcending barriers between technology, creativity, and business, aligning each to capture the highest potential of organization resources and technology investments. He offers 8+ years of transferable experience in creating scalable web applications and platforms using JavaScript software stack, including MongoDB, Express, React, and Node, coupled with a focus on back-end development, data wrangling, API design, security, and testing. He utilizes a visionary perspective and innovative mindset to collect and translate technical requirements into functionalities within the application while writing codes and producing production-ready systems for thousands of users. He designs, develops, and maintains fully functioning platforms using modern web-based technologies, including MERN Stack (MongoDB, Express, React, Node). As a dynamic and process-focused IT professional, Hooman leverages cutting-edge technologies to cultivate differentiated solutions and achieve competitive advantages while supporting new systems development lifecycle. He excels in creating in-house solutions, replacing and modernizing legacy systems, and eliminating outsourcing costs. He exhibits verifiable success in building highly responsive full-stack applications and incident management systems using advanced analytical dashboards while translating complex concepts in a simplified manner. Through dedication towards promoting a culture of collaboration, Hooman empowers and motivates diverse personnel to achieve technology-focused business objectives while administering coaching, training, and development initiatives to elevate personnel performance and achieve team synergy. He earned a winning reputation for transforming, revitalizing, streamlining, and optimizing multiple programs and web-based applications to drive consistent communications across cross-functional organization-wide departments. He manages multiple projects from concept to execution, utilizing prioritization and time management capabilities to complete deliverables on time, under budget, and in alignment with requirements.