Week 7,8 - GSOC 2023

Rohan Babbar

This blog post briefly describes the progress on the project achieved during the 7th and 8th weeks of GSOC.

Work Done

Added MPIVStack operator.

Added MPIHStack, which is the adjoint of MPIVStack.

Added a Least Squares Migration (LSM) tutorial using the new MPIVStack operator.

Added support for `_matvec` and `_rmatvec` to work with broadcasted arrays.

Added a method in DistributedArray to add ghost cells, which will later be used in the FirstDerivative and SecondDerivative classes.

Added MPIVStack Operator

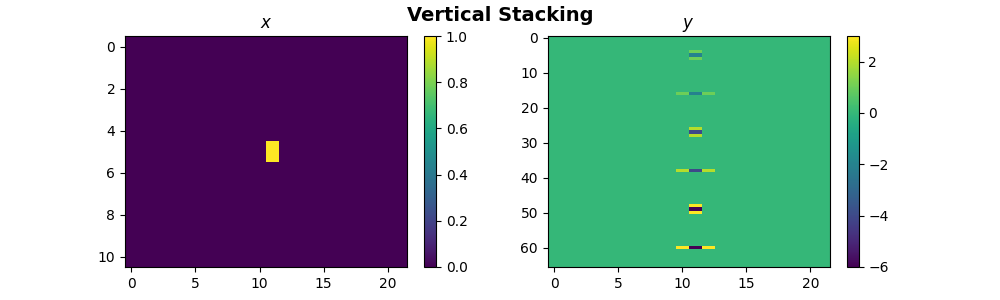

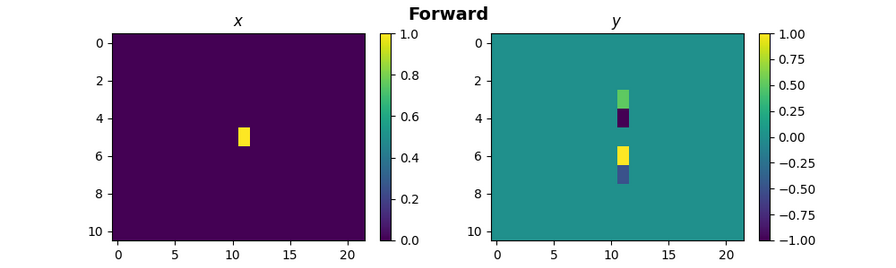

The pylops_mpi.basicoperators.MPIVStack Operator is used to vertically stack a set of pylops Linear operators using MPI. The model and data vectors are both pylops_mpi.DistributedArray. My mentors and I discussed the implementation of matrix-vector multiplication. We reached a consensus that for the forward operation, it would be ideal for the user to provide a broadcasted array as input, resulting in a scattered array as the output. Conversely, for the adjoint operation, the user would input a scattered array, and the output would be a broadcasted array. I also created visual examples to facilitate user understanding.

The example above was run on 3 processes with make run_examples and vertically stacks the linear operators held at the different ranks.
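
To make the broadcast-in, scatter-out convention concrete, here is a minimal sketch that emulates the ranks inside a single process with plain NumPy. It does not use pylops_mpi or MPI at all: the function names `vstack_matvec` and `vstack_rmatvec`, the dense matrices standing in for pylops operators, and the list-of-chunks representation of a scattered array are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_ranks, n_model, n_data = 3, 4, 5

# One dense matrix per emulated rank (stand-ins for pylops linear operators).
ops = [rng.standard_normal((n_data, n_model)) for _ in range(n_ranks)]


def vstack_matvec(ops, x_broadcast):
    """Forward: every rank applies its own operator to the same broadcasted model."""
    # The result stays distributed: one local data chunk per rank.
    return [op @ x_broadcast for op in ops]


def vstack_rmatvec(ops, y_scattered):
    """Adjoint: every rank applies its adjoint to its local chunk, then results are summed."""
    # The sum plays the role of an all-reduce, so every rank would hold the same model.
    return sum(op.conj().T @ y for op, y in zip(ops, y_scattered))


x = rng.standard_normal(n_model)   # broadcasted model
y = vstack_matvec(ops, x)          # scattered data, one chunk per rank
x_adj = vstack_rmatvec(ops, y)     # broadcasted again

# Compare against an explicit dense vertical stack.
dense = np.vstack(ops)
assert np.allclose(np.concatenate(y), dense @ x)
assert np.allclose(x_adj, dense.T @ (dense @ x))
```

In a real MPI run each list entry would live on a different process, and the final sum would need communication between ranks.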

Added MPIHStack Operator

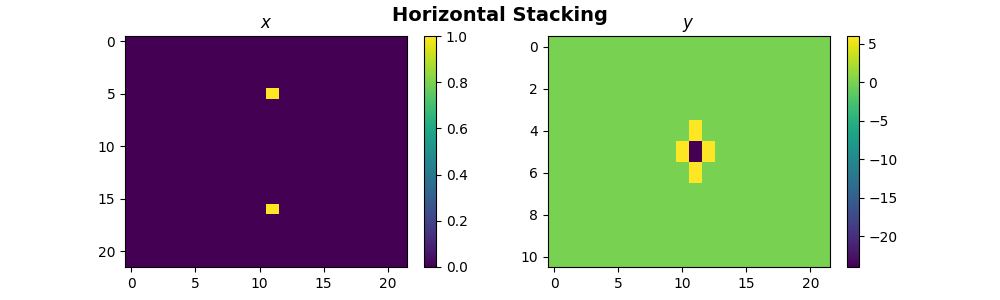

Since the pylops_mpi.basicoperators.MPIHStack is the adjoint of the already created MPIVStack operator, we just call the adjoint of MPIVStack to handle its matrix-vector multiplication, so that we do not need to handle the operator separately. The MPIHStack stacks the pylops linear operators horizontally.

The example above was run on 3 processes with make run_examples and horizontally stacks the linear operators held at the different ranks.
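
The adjoint relationship between the two stacks can be checked with a small dot test. The sketch below again emulates the ranks with NumPy in one process; `hstack_matvec`, `hstack_rmatvec`, and the dense blocks are hypothetical names and stand-ins, not the pylops_mpi implementation.

```python
import numpy as np

rng = np.random.default_rng(1)
n_ranks, n_rows, n_cols = 3, 4, 2

# Hypothetical per-rank blocks A_i, each mapping a local model chunk to the data space.
blocks = [rng.standard_normal((n_rows, n_cols)) for _ in range(n_ranks)]


def hstack_matvec(blocks, x_scattered):
    # [A_1 ... A_N] x = sum_i A_i x_i: scattered input, broadcasted output.
    # This is exactly the adjoint of a vertical stack built from the A_i^H.
    return sum(a @ xi for a, xi in zip(blocks, x_scattered))


def hstack_rmatvec(blocks, y_broadcast):
    # Adjoint of the horizontal stack: broadcasted input, scattered output.
    return [a.conj().T @ y_broadcast for a in blocks]


x = [rng.standard_normal(n_cols) for _ in range(n_ranks)]   # scattered model
y = rng.standard_normal(n_rows)                             # broadcasted data

# Dot test: <H x, y> must equal <x, H^H y>.
lhs = np.vdot(hstack_matvec(blocks, x), y)
rhs = sum(np.vdot(xi, zi) for xi, zi in zip(x, hstack_rmatvec(blocks, y)))
assert np.isclose(lhs, rhs)

# The emulated forward also matches an explicit dense horizontal stack.
assert np.allclose(hstack_matvec(blocks, x), np.hstack(blocks) @ np.concatenate(x))
```

Passing the dot test is what allows a horizontal stack to reuse the vertical stack's adjoint instead of needing its own matrix-vector code.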

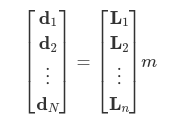

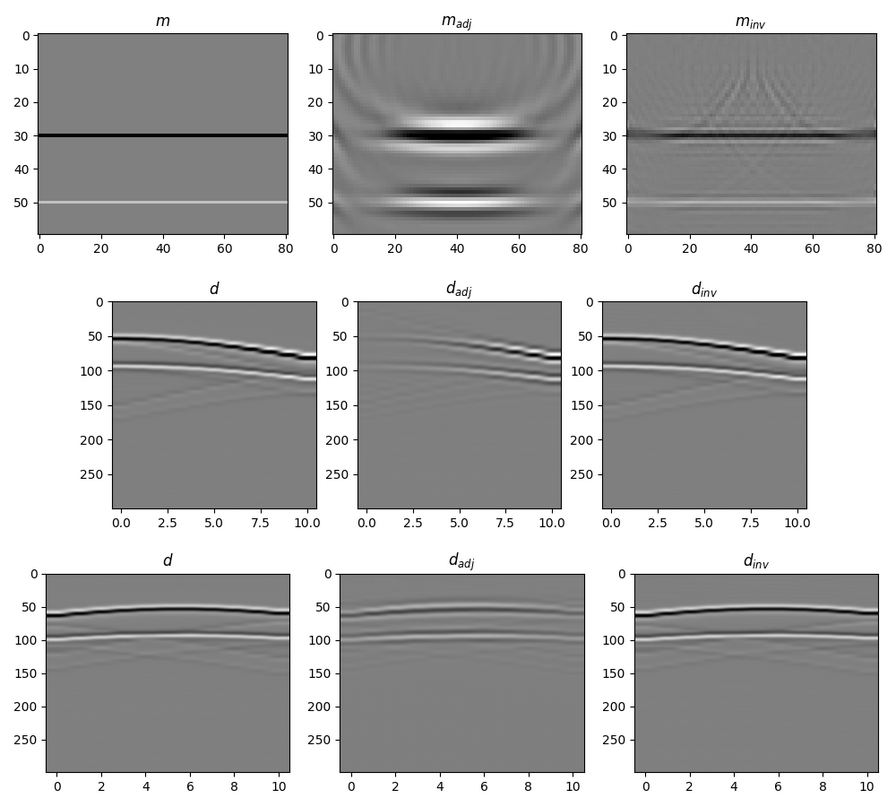

Added Least Squares Migration (LSM) tutorial

To see how the MPIVStack works on a real-life problem, we added a seismic migration tutorial using our newly created MPIVStack operator.
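
Written as a vertically stacked system with one block per rank, the problem can be sketched as follows. This is a reconstruction from the surrounding description; the symbol N for the number of ranks is an assumption introduced here.

```latex
\mathbf{d} =
\begin{bmatrix} \mathbf{d}_1 \\ \mathbf{d}_2 \\ \vdots \\ \mathbf{d}_N \end{bmatrix}
=
\begin{bmatrix} \mathbf{L}_1 \\ \mathbf{L}_2 \\ \vdots \\ \mathbf{L}_N \end{bmatrix}
\mathbf{m}
```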

In this problem, each L_i is a least-squares modeling operator, d_i is the corresponding data, and m is the broadcasted reflectivity at every location in the subsurface. We create a pylops.waveeqprocessing.LSM at each rank and push them into an MPIVStack, which performs the matrix-vector product with the broadcasted reflectivity. Some visual examples of the data and model are shown below.

Added support for `_matvec` and `_rmatvec` to work with broadcasted arrays.

By default, a DistributedArray uses the partition=SCATTER approach. To also support the partition=BROADCAST approach, we made slight adjustments to the `_matvec` and `_rmatvec` methods. This makes it possible to chain such operators with other pylops_mpi operators and build more advanced ones, as in the following example.

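    import numpy as np
    import pylops
    import pylops_mpi
    from mpi4py import MPI

    rank = MPI.COMM_WORLD.Get_rank()  # rank of this MPI process
    Ny, Nx = 11, 22
    # Wrap a pylops operator as an MPI linear operator, then stack on top of it
    Fop = pylops.FirstDerivative(dims=(Ny, Nx), axis=0, dtype=np.float64)
    Mop = pylops_mpi.asmpilinearoperator(Op=Fop)
    Sop = pylops.SecondDerivative(dims=(Ny, Nx), axis=0, dtype=np.float64)
    VStack = pylops_mpi.MPIVStack(ops=[(rank + 1) * Sop, ])
    FullOp = VStack @ Mop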

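Performing a matrix-vector multiplication in forward mode with FullOp takes a broadcasted array as input and, following the MPIVStack convention described earlier, returns a scattered array.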

Added add_ghost_cells method in the DistributedArray class

This method returns a ghosted numpy array: the local array of the DistributedArray padded with ghost cells taken from the preceding process, the succeeding process, or both. It is the key building block for the FirstDerivative and SecondDerivative operators, whose stencils need values that live on neighbouring ranks.
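
The idea can be sketched without MPI by splitting a NumPy array into chunks and borrowing boundary values from neighbouring chunks. The helper `add_ghost_cells_emulated` and its parameter names are hypothetical and only illustrate the concept; this is not the DistributedArray method itself.

```python
import numpy as np


def add_ghost_cells_emulated(chunks, rank, cells_front=0, cells_back=0):
    """Return the chunk of `rank` padded with ghost cells from its neighbours.

    Hypothetical single-process helper: `chunks` is a list holding every
    rank's local array, which a real MPI program would not have in one place.
    """
    parts = []
    if cells_front and rank > 0:
        parts.append(chunks[rank - 1][-cells_front:])   # tail of the previous rank
    parts.append(chunks[rank])
    if cells_back and rank < len(chunks) - 1:
        parts.append(chunks[rank + 1][:cells_back])     # head of the next rank
    return np.concatenate(parts)


x = np.arange(12.0) ** 2
chunks = np.array_split(x, 3)   # emulate an array scattered over 3 ranks

# Centered first derivative, computed chunk by chunk with one ghost cell per side.
local_derivs = []
for rank in range(3):
    ghosted = add_ghost_cells_emulated(chunks, rank, cells_front=1, cells_back=1)
    local_derivs.append(0.5 * (ghosted[2:] - ghosted[:-2]))

# The pieces together reproduce the derivative at all interior points of x.
assert np.allclose(np.concatenate(local_derivs), 0.5 * (x[2:] - x[:-2]))
```

The first and last ranks have only one neighbour, so they receive ghost cells on one side only, which is one reason the requirements differ from rank to rank.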

Challenges I ran into

Given my limited familiarity with seismic migration, I initially struggled to create an accurate example. Devising the logic for the add_ghost_cells method was also time-consuming, because different ranks have different ghost cell requirements. With guidance from my mentors, online resources, and some persistence, I eventually got it working.

To do in the coming weeks

With the add_ghost_cells method in place, my next step is to implement MPIFirstDerivative, followed by MPISecondDerivative.

Thank you for reading my post!
