Part 1: The Basics

urgensherpa

If you need to deploy multiple instances of containers, operate multiple services, and scale them up and down, Kubernetes (K8s) can be used.

Official doc for prod setup here.

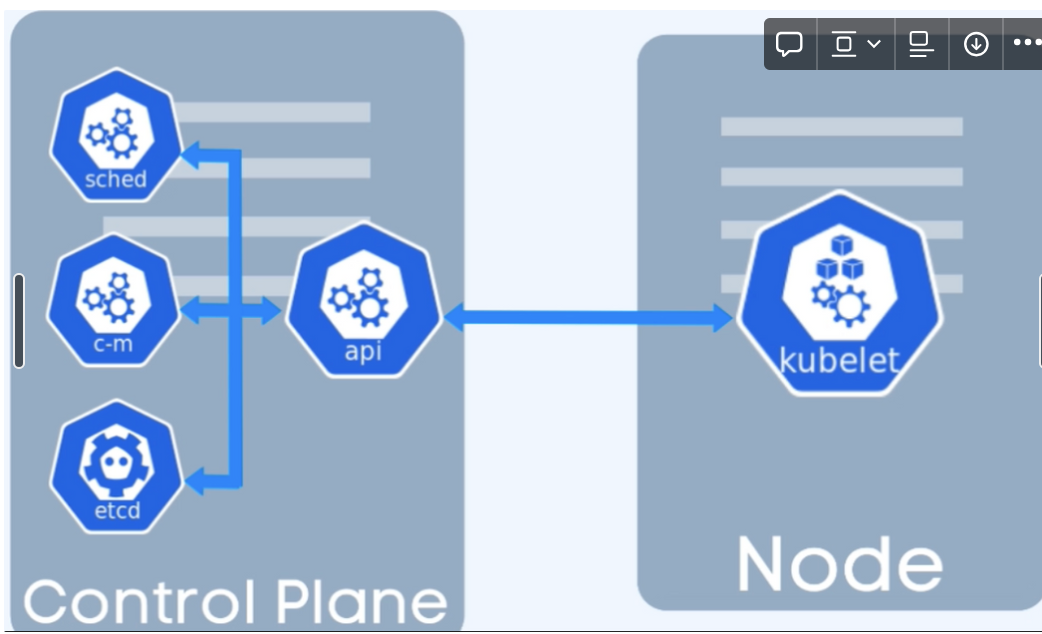

Static pods: they are scheduled directly by the kubelet daemon, without the API server or the scheduler. A static pod only needs a container runtime (CR) and the kubelet daemon running.

Regular Pod Scheduling

API server receives the request → scheduler decides which node → kubelet on that node starts the pod

Static pod scheduling

The kubelet watches a specific directory on the node it runs on, i.e. /etc/kubernetes/manifests (kubeadm places the control-plane component manifests there).

kubelet schedules the pod when it finds a Pod manifest
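As an illustration, the sketch below writes a minimal Pod manifest into the static pod directory. It assumes a kubeadm-provisioned node, where the kubelet's staticPodPath is /etc/kubernetes/manifests; the function name write_static_pod, the pod name and the nginx:1.25 image are placeholders, not anything the kubelet requires.

```shell
# Writes a minimal static Pod manifest into the kubelet's static pod directory.
# write_static_pod is a hypothetical helper; the default directory assumes a
# kubeadm-style node (staticPodPath: /etc/kubernetes/manifests).
# Usage: write_static_pod <name> <image> [manifest-dir]
write_static_pod() {
  local name="$1" image="$2" dir="${3:-/etc/kubernetes/manifests}"
  mkdir -p "$dir" || return 1
  # The kubelet watches this directory; a new file here is enough to start the pod.
  cat <<EOF > "$dir/$name.yaml"
apiVersion: v1
kind: Pod
metadata:
  name: $name
spec:
  containers:
  - name: $name
    image: $image
EOF
}
```

After calling `write_static_pod web nginx:1.25` as root on a node, the kubelet should start the pod on its own, and the API server shows a read-only mirror pod named `web-<node-name>`. Deleting the file stops the pod again.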

Communication between the components occurs over TLS.

PKI certificates are used for client authentication.
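To see which identity a component presents, its client certificate can be inspected with openssl. A minimal sketch; the helper name cert_summary is hypothetical, and the path in the usage line below is the default on kubeadm-joined nodes.

```shell
# Prints the subject, issuer and expiry date of a PEM-encoded certificate.
# cert_summary is a hypothetical helper name.
# Usage: cert_summary <cert.pem>
cert_summary() {
  openssl x509 -in "$1" -noout -subject -issuer -enddate
}
```

Running `cert_summary /var/lib/kubelet/pki/kubelet-client-current.pem` on a node should show a subject like `O = system:nodes, CN = system:node:<node-name>`. On the control plane, `kubeadm certs check-expiration` lists the expiry dates of the cluster certificates.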

The kubelet runs on both worker nodes and control-plane nodes, and talks to the container runtime to run containers.

Kubelet → CRI (Container Runtime Interface) → container runtime

Initially, Kubernetes supported only Docker → new container runtimes emerged → Kubernetes created a common Container Runtime Interface (CRI) that runtimes implement to run containers

Docker did not implement the Kubernetes CRI, so Kubernetes had to maintain an adapter called dockershim

Kubelet → CRI (Container Runtime Interface) → dockershim (bridge) → container runtime

dockershim was removed in Kubernetes v1.24.

Also, Docker is an entire stack, not just a runtime: it has a CLI, a UI, an API, and it is used for building images.
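With dockershim gone, the kubelet (and tools such as crictl) talk to the runtime through its CRI socket. The helper below is a hypothetical sketch that probes common default socket paths for containerd, CRI-O and cri-dockerd; the paths are assumptions about a default install (on most distributions /var/run is a symlink to /run).

```shell
# Prints the first well-known CRI socket that exists (hypothetical helper).
# The paths are common defaults for containerd, CRI-O and cri-dockerd.
# The optional root prefix only exists so it can be tried in a scratch directory.
# Usage: detect_cri_socket [root-prefix]
detect_cri_socket() {
  local root="${1:-}" sock
  for sock in /run/containerd/containerd.sock \
              /run/crio/crio.sock \
              /run/cri-dockerd.sock; do
    if [ -e "$root$sock" ]; then
      printf '%s\n' "$root$sock"
      return 0
    fi
  done
  echo "no CRI socket found" >&2
  return 1
}
```

For example, `sudo crictl --runtime-endpoint "unix://$(detect_cri_socket)" ps` lists the containers the kubelet has started, and the same endpoint can be passed to kubeadm via `--cri-socket` when more than one runtime is installed.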

Lightweight container runtimes emerged:

CRI-O

containerd (I'll be using this one for this series)

- containerd is compatible with Docker images: you can run your application's Docker image in containerd.
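One practical difference from the Docker CLI: Docker expands a short name such as nginx to docker.io/library/nginx:latest, while containerd's ctr tool expects the fully qualified reference. A sketch of that expansion under simplified rules (no validation of the reference; normalize_image is a hypothetical name):

```shell
# Expands a Docker-style short image name into a fully qualified reference.
# Simplified rules: a first path component containing "." or ":" (or equal to
# "localhost") is treated as a registry; anything else is on Docker Hub.
# Usage: normalize_image <image>
normalize_image() {
  local ref="$1" first last
  case "$ref" in
    */*)
      first="${ref%%/*}"
      case "$first" in
        *.*|*:*|localhost) ;;          # already names a registry host
        *) ref="docker.io/$ref" ;;     # Docker Hub user/organisation image
      esac ;;
    *) ref="docker.io/library/$ref" ;; # official Docker Hub image
  esac
  last="${ref##*/}"
  case "$last" in
    *:*|*@*) ;;                        # already has a tag or digest
    *) ref="$ref:latest" ;;
  esac
  printf '%s\n' "$ref"
}
```

For example, `sudo ctr image pull "$(normalize_image nginx)"`. Note that plain ctr works in the `default` namespace, whereas images pulled for Kubernetes live in the `k8s.io` namespace (`sudo ctr -n k8s.io images ls`).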

Install containerd (v1.7.3 was current at the time of writing), kubelet (1.28.2-1.1), kubeadm and kubectl on all 3 machines.

https://github.com/containerd/containerd/blob/main/docs/getting-started.md

You must disable swap, otherwise cluster initialization will fail (if swap has to stay on, use the related flags, e.g. the kubelet's --fail-swap-on=false).

Note: if you have less than 10 GB of disk space, pods will not run as expected.
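Both requirements can be checked before running kubeadm init. A minimal sketch, assuming the 10 GB figure above applies to the filesystem holding /var/lib (where containerd stores images by default); the function names are hypothetical, and the threshold is checked in GiB.

```shell
# Pre-flight checks before `kubeadm init` (helper names are hypothetical).

# Succeeds if any swap device is active; /proc/swaps always starts with a header line.
swap_enabled() {
  local swaps="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps")" -gt 1 ]
}

# Prints the free space, in whole GiB, of the filesystem that holds the given path.
free_gib() {
  df -Pk "${1:-/var/lib}" | awk 'NR == 2 { print int($4 / 1048576) }'
}

# Runs both checks; assumes containerd keeps its data under /var/lib (its default root).
preflight() {
  if swap_enabled; then
    echo "swap is enabled: run 'swapoff -a' and remove swap entries from /etc/fstab" >&2
    return 1
  fi
  if [ "$(free_gib /var/lib)" -lt 10 ]; then
    echo "less than 10 GiB free on the filesystem holding /var/lib" >&2
    return 1
  fi
  echo "preflight ok"
}
```

Run `preflight` on each machine. To turn swap off, `sudo swapoff -a` and comment out the swap entry in /etc/fstab so it stays off after a reboot.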

#!/bin/bash

mkdir /cri

cd /cri/

wget https://github.com/containerd/containerd/releases/download/v1.7.3/containerd-1.7.3-linux-amd64.tar.gz

wget https://github.com/opencontainers/runc/releases/download/v1.1.8/runc.amd64

install -m 755 runc.amd64 /usr/local/sbin/runc

tar Cxzvf /usr/local containerd-1.7.3-linux-amd64.tar.gz

wget https://github.com/containernetworking/plugins/releases/download/v1.3.0/cni-plugins-linux-amd64-v1.3.0.tgz

mkdir -p /opt/cni/bin

tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.3.0.tgz

mkdir -p /usr/local/lib/systemd/system/

cat <<EOF | tee /usr/local/lib/systemd/system/containerd.service

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target local-fs.target

[Service]

#uncomment to enable the experimental sbservice (sandboxed) version of containerd/cri integration

#Environment="ENABLE_CRI_SANDBOXES=sandboxed"

ExecStartPre=-/sbin/modprobe overlay

ExecStart=/usr/local/bin/containerd

Type=notify

Delegate=yes

KillMode=process

Restart=always

RestartSec=5

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNPROC=infinity

LimitCORE=infinity

LimitNOFILE=infinity

# Comment TasksMax if your systemd version does not supports it.

# Only systemd 226 and above support this version.

TasksMax=infinity

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

EOF

containerd uses a configuration file at /etc/containerd/config.toml for daemon-level options. A sample configuration file is documented at https://github.com/containerd/containerd/blob/main/docs/man/containerd-config.toml.5.md

mkdir -p /etc/containerd

cat <<EOF | tee /etc/containerd/config.toml

version = 2

root = "/var/lib/containerd"

state = "/run/containerd"

oom_score = 0

imports = ["/etc/containerd/runtime_*.toml", "./debug.toml"]

[grpc]

address = "/run/containerd/containerd.sock"

uid = 0

gid = 0

[debug]

address = "/run/containerd/debug.sock"

uid = 0

gid = 0

level = "info"

[metrics]

address = ""

grpc_histogram = false

[cgroup]

path = ""

[plugins]

[plugins."io.containerd.monitor.v1.cgroups"]

no_prometheus = false

[plugins."io.containerd.service.v1.diff-service"]

default = ["walking"]

[plugins."io.containerd.gc.v1.scheduler"]

pause_threshold = 0.02

deletion_threshold = 0

mutation_threshold = 100

schedule_delay = 0

startup_delay = "100ms"

[plugins."io.containerd.runtime.v2.task"]

platforms = ["linux/amd64"]

sched_core = true

[plugins."io.containerd.service.v1.tasks-service"]

blockio_config_file = ""

rdt_config_file = ""

[plugins."io.containerd.grpc.v1.cri"]

sandbox_image = "registry.k8s.io/pause:3.9"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

runtime_type = "io.containerd.runc.v2"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true

EOF

touch /etc/containerd/debug.toml

# journalctl -xeu containerd.service complained that debug.toml was missing

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# sysctl params required by setup, params persist across reboots

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# Apply sysctl params without reboot

sudo sysctl --system

systemctl daemon-reload

# Note: unmask containerd first if a previous installation came from Docker's package repository (a masked unit cannot be enabled or started)

systemctl unmask containerd.service

systemctl enable --now containerd

systemctl restart containerd
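Once the script has run, it is worth confirming that the kernel modules and sysctl values actually took effect. A sketch (check_kernel_prereqs is a hypothetical name) that reads /sys/module and /proc/sys directly; the optional root prefix exists only so it can be tried against a scratch directory:

```shell
# Reports OK/MISSING for the kernel modules and sysctl values the cluster needs.
# Usage: check_kernel_prereqs [root-prefix]
check_kernel_prereqs() {
  local root="${1:-}" mod key file
  for mod in overlay br_netfilter; do
    # Loaded modules appear as directories under /sys/module.
    if [ -d "$root/sys/module/$mod" ]; then
      echo "module $mod: OK"
    else
      echo "module $mod: MISSING"
    fi
  done
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward; do
    # sysctl keys map to files under /proc/sys, with dots replaced by slashes.
    file="$root/proc/sys/$(printf '%s' "$key" | tr . /)"
    if [ "$(cat "$file" 2>/dev/null)" = "1" ]; then
      echo "$key: OK"
    else
      echo "$key: MISSING"
    fi
  done
}
```

The net.bridge.* keys only exist once br_netfilter is loaded, so a missing module usually explains missing bridge settings. `lsmod | grep br_netfilter` and `sysctl net.ipv4.ip_forward` give the same information interactively.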

If kubeadm reports this error: detected that the sandbox image "registry.k8s.io/pause:3.8" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.k8s.io/pause:3.9" as the CRI sandbox image, set sandbox_image in /etc/containerd/config.toml to the version kubeadm recommends (here sandbox_image = "registry.k8s.io/pause:3.9") and restart containerd.

To see the configuration containerd is actually using (the config file merged with the built-in defaults), run: containerd config dump

The default configuration can be generated via containerd config default > /etc/containerd/config.toml.
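Instead of writing the whole file by hand, one option is to start from the generated default and change only what Kubernetes needs: the systemd cgroup driver for runc and the pause image kubeadm expects. A sketch using sed (patch_containerd_config is a hypothetical name), assuming the line format of containerd 1.7's default output, i.e. `SystemdCgroup = false` and a single `sandbox_image = "..."` line; other versions may format these differently.

```shell
# Filters a containerd config from stdin to stdout: switches runc to the systemd
# cgroup driver and sets the CRI sandbox (pause) image.
# Assumes containerd 1.7-style `containerd config default` output.
# Usage: patch_containerd_config <pause-image> < in.toml > out.toml
patch_containerd_config() {
  local pause_image="$1"
  sed -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
      -e "s#sandbox_image = \".*\"#sandbox_image = \"$pause_image\"#"
}
```

For example: `containerd config default | patch_containerd_config registry.k8s.io/pause:3.9 | sudo tee /etc/containerd/config.toml`, followed by `sudo systemctl restart containerd`. `kubeadm config images list` prints the pause tag that kubeadm expects for its version.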

In Kubernetes, the pause/sandbox container serves as the “parent container” for all of the containers in your pod. The pause container has two core responsibilities.

It serves as the basis of Linux namespace sharing in the pod: it holds the pod's network namespace, and therefore the pod IP.

When PID (process ID) namespace sharing is enabled, it runs as PID 1 for the pod and reaps zombie processes.
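The shared namespaces are easy to observe with a two-container pod. A sketch (print_shared_pod, the pod name, the images and the sidecar command are all placeholders) that prints a manifest with shareProcessNamespace enabled:

```shell
# Prints a two-container Pod manifest. Both containers share the pause container's
# network namespace; shareProcessNamespace: true also gives them one PID namespace.
# Usage: print_shared_pod [pod-name]
print_shared_pod() {
  local name="${1:-shared-demo}"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: $name
spec:
  shareProcessNamespace: true
  containers:
  - name: web
    image: nginx:1.25
  - name: sidecar
    image: busybox:1.36
    # Same network namespace: the sidecar reaches nginx on localhost.
    command: ["sh", "-c", "sleep 5; wget -qO- http://localhost:80 >/dev/null && echo reached nginx; sleep 3600"]
EOF
}
```

After `print_shared_pod | kubectl apply -f -`, `kubectl logs shared-demo -c sidecar` should print `reached nginx`, and `kubectl exec shared-demo -c sidecar -- ps` should list `/pause` as PID 1 next to the nginx processes.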

kubeadm init --pod-network-cidr=10.244.0.0/16

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.50:6443 --token 8uetqr.l97boi95q4yu5bxo --discovery-token-ca-cert-hash sha256:d20dd3xx47


Important: join the worker nodes ONLY AFTER installing a CNI plugin such as flannel or Calico.

A new token, together with the complete join command, can also be generated manually with the command below:

kubeadm token create --print-join-command

kubeadm join 192.168.1.50:6443 --token b2mfng.zcm7afuv641lt49m --discovery-token-ca-cert-hash sha256:d20dd3166ca5542a5c0f6a075b880c6043298d74e93f90b28a57ad5abde6479a
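If you only have a token (for example from `kubeadm token list`), the --discovery-token-ca-cert-hash value can be recomputed from the cluster CA. The openssl pipeline below follows the one in the kubeadm join documentation, wrapped in a hypothetical helper; /etc/kubernetes/pki/ca.crt is kubeadm's default CA path.

```shell
# Computes the hex value for --discovery-token-ca-cert-hash (hypothetical helper).
# Usage: ca_cert_hash [/etc/kubernetes/pki/ca.crt]
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  # Extract the CA public key, convert it to DER, hash it with SHA-256,
  # and keep only the hex digest (the last field of the dgst output).
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Compute the hash on the control-plane node and use it on the worker as `--discovery-token-ca-cert-hash sha256:<output>` in the kubeadm join command.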

Note: make sure --pod-network-cidr is large enough and matches the pod network configured in the CNI (flannel's default is 10.244.0.0/16).
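The same setting can live in a kubeadm configuration file, where --pod-network-cidr corresponds to networking.podSubnet. A sketch that writes such a file (write_kubeadm_config is a hypothetical name); the kubeadm.k8s.io/v1beta3 API version matches the kubeadm 1.28 used in this series, newer releases may expect a newer version, and cgroupDriver: systemd is set here to match SystemdCgroup = true in the containerd config.

```shell
# Writes a kubeadm config equivalent to `kubeadm init --pod-network-cidr=<cidr>`,
# and pins the kubelet cgroup driver to systemd (matching SystemdCgroup = true).
# Usage: write_kubeadm_config [pod-cidr] [output-file]
write_kubeadm_config() {
  local cidr="${1:-10.244.0.0/16}" out="${2:-kubeadm-config.yaml}"
  cat <<EOF > "$out"
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
networking:
  podSubnet: $cidr
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}
```

Then `sudo kubeadm init --config kubeadm-config.yaml`. With a pod subnet set, the controller manager hands each node its own slice of the range (a /24 by default for IPv4), which is what the CNI reads as the node's podCIDR.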

kubeadm reset # use this to reset the node's cluster configuration (useful if the cluster doesn't work)

rm -rf /etc/cni/net.d # remove old CNI network configurations, which kubeadm reset does not clean up
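kubeadm reset deliberately leaves some state behind: CNI configuration, iptables/IPVS rules and kubeconfig files. A sketch of a fuller cleanup along the lines of the kubeadm reset documentation; the helper names run and reset_node are hypothetical, DRY_RUN=1 prints the commands instead of running them, and flushing iptables removes all rules on the host, not only those created by Kubernetes.

```shell
# Runs a command, or only prints it when DRY_RUN is set (hypothetical helper).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Resets the node and removes what `kubeadm reset` leaves in place.
reset_node() {
  run kubeadm reset -f
  run rm -rf /etc/cni/net.d            # CNI configuration
  run iptables -F                      # iptables rules (all of them)
  run iptables -t nat -F
  run iptables -t mangle -F
  run iptables -X
  run rm -f "$HOME/.kube/config"       # stale kubeconfig for the old cluster
}
```

Run `DRY_RUN=1 reset_node` first to review the commands, then run it as root without DRY_RUN. If kube-proxy ran in IPVS mode, `ipvsadm --clear` removes the IPVS tables as well.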

root@masterNode:~# kubectl get node --kubeconfig /etc/kubernetes/admin.conf


NAME         STATUS     ROLES           AGE     VERSION
masternode   NotReady   control-plane   4d11h   v1.27.4

By default, kubectl looks for a file named config in the $HOME/.kube directory. You can specify other kubeconfig files by setting the KUBECONFIG environment variable or by setting the --kubeconfig flag.

Instead of passing --kubeconfig /etc/kubernetes/admin.conf every time, you can set an environment variable (for example in the user's profile) or copy the file to the default location:

e.g. export KUBECONFIG=/etc/kubernetes/admin.conf OR

cat /etc/kubernetes/admin.conf > ~/.kube/config
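The lookup order is: the --kubeconfig flag, then the KUBECONFIG variable, then $HOME/.kube/config. A small sketch of that precedence (effective_kubeconfig is a hypothetical helper; it ignores the fact that KUBECONFIG may list several colon-separated files, which kubectl merges):

```shell
# Prints the kubeconfig kubectl would read, following the precedence
# --kubeconfig flag > KUBECONFIG environment variable > $HOME/.kube/config.
# Simplification: a colon-separated KUBECONFIG list is printed as-is, not merged.
# Usage: effective_kubeconfig [value-passed-to---kubeconfig]
effective_kubeconfig() {
  if [ -n "${1:-}" ]; then
    printf '%s\n' "$1"
  elif [ -n "${KUBECONFIG:-}" ]; then
    printf '%s\n' "$KUBECONFIG"
  else
    printf '%s\n' "$HOME/.kube/config"
  fi
}
```

`kubectl config view --minify` shows the configuration kubectl actually resolved for the current context.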

Note: the worker nodes have not joined the cluster yet, and the control-plane node stays NotReady until a CNI (pod network) plugin is installed.

Fundamental objects

Pod, Service, ConfigMap, Secret, Ingress, Deployment, StatefulSet, Namespace, Volume

Pod: The smallest deployable unit in Kubernetes. It represents a single instance of a running process in a cluster. Pods can host one or more containers that share the same network and storage.

Service: An abstraction that defines a set of Pods and a policy by which to access them. Services provide a stable IP address and DNS name to enable communication between different parts of an application.

ConfigMap: A Kubernetes resource used to store configuration data separately from application code. It allows you to decouple configuration settings from your application code and manage them more flexibly.

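Secret: Similar to ConfigMaps, but intended for sensitive information such as passwords, tokens, and keys. Secret values are stored base64-encoded, which is an encoding, not encryption; keeping them in Secrets rather than ConfigMaps lets you restrict access to them (e.g. with RBAC), and encryption at rest in etcd has to be enabled separately.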

Ingress: An API object that manages external access to services within a Kubernetes cluster. It provides rules for routing incoming HTTP and HTTPS traffic to different services based on the host or path.

Deployment: A higher-level resource that manages the deployment and scaling of applications. It provides declarative updates to applications, ensuring that the desired state is maintained over time.

StatefulSet: A higher-level resource similar to a Deployment, but designed for stateful applications. It maintains a unique identity for each Pod and ensures stable, predictable network identifiers and persistent storage.

Namespace: A virtual cluster within a Kubernetes cluster. It is used to create isolated environments, organize resources, and provide separation between different applications or teams.

Volumes: A way to provide storage to the containers in a pod. Data in a volume survives container restarts; data that must outlive the pod itself, such as a database's files, belongs on a PersistentVolume.
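To make the Pod, Deployment and Service definitions concrete, a sketch that prints a Deployment and a Service selecting its pods by label. The helper name print_web_app, the object names, the nginx:1.25 image and port 80 are placeholders:

```shell
# Prints a Deployment running <replicas> nginx pods and a Service that
# load-balances across them by matching the pods' "app" label.
# Usage: print_web_app [name] [replicas]
print_web_app() {
  local name="${1:-web}" replicas="${2:-3}"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
      - name: $name
        image: nginx:1.25
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: $name
spec:
  selector:
    app: $name
  ports:
  - port: 80
    targetPort: 80
EOF
}
```

`print_web_app | kubectl apply -f -` creates both objects. The Service finds the pods because its selector (app: web) matches the labels in the pod template, and `kubectl scale deployment web --replicas=5` changes the replica count.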

Install the flannel CNI (on the control-plane node)

kubectl apply -f kube-flannel.yml --kubeconfig /etc/kubernetes/admin.conf

kube-flannel.yml is available at https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml; the URL can also be passed to kubectl apply -f directly.

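Due to a flannel issue, the flannel pods were in CrashLoopBackOff state with this logged: "Error registering network: failed to acquire lease: node "ubuntu1" pod cidr not assigned". This means the node has no spec.podCIDR, typically because kubeadm init was run without --pod-network-cidr. This issue was resolved by creating the file /run/flannel/subnet.env on each node, with FLANNEL_SUBNET set to that node's own subnet (the values below are for the first node, 10.244.0.0/24). Keep in mind that /run is cleared on reboot; flanneld normally writes this file itself once the node has a podCIDR.

mkdir -p /run/flannel

cat <<EOF | tee /run/flannel/subnet.env

FLANNEL_NETWORK=10.244.0.0/16

FLANNEL_SUBNET=10.244.0.1/24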

FLANNEL_MTU=1450

FLANNEL_IPMASQ=true

EOF

Then run the patches below on the control-plane node (they also apply to the worker nodes). Each node needs its own, non-overlapping podCIDR inside the 10.244.0.0/16 cluster network:

kubectl patch node masternode1 -p '{"spec":{"podCIDR":"10.244.0.0/24"}}'

kubectl patch node workernode1 -p '{"spec":{"podCIDR":"10.244.1.0/24"}}'

kubectl patch node workernode2 -p '{"spec":{"podCIDR":"10.244.2.0/24"}}'
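With more nodes, the per-node subnets can be generated instead of typed. A sketch (pod_cidr_patches is a hypothetical helper) that prints one patch per node, assuming the 10.244.0.0/16 cluster network is split into /24s, i.e. at most 256 nodes:

```shell
# Prints one `kubectl patch` command per node, giving the Nth node (counting
# from 0) the subnet 10.244.N.0/24. Assumes a 10.244.0.0/16 cluster network.
# Usage: pod_cidr_patches <node>...
pod_cidr_patches() {
  local i=0 node
  for node in "$@"; do
    printf "kubectl patch node %s -p '{\"spec\":{\"podCIDR\":\"10.244.%d.0/24\"}}'\n" "$node" "$i"
    i=$((i + 1))
  done
}
```

Review the output of `pod_cidr_patches masternode1 workernode1 workernode2`, then pipe it to `sh`. The API server only accepts a podCIDR on a node that does not have one yet, so this cannot reassign a subnet that is already set.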

Some commands that can help with troubleshooting:

kubectl describe pod --namespace=kube-system <podname>

kubectl logs <podname> -n kube-system

tail /var/log/syslog
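When several system pods misbehave, it helps to list only the unhealthy ones and loop over them. A sketch (unhealthy_pods is a hypothetical helper) that filters the output of `kubectl get pods -A --no-headers`, whose fourth column is STATUS:

```shell
# Reads `kubectl get pods -A --no-headers` output on stdin and prints
# "<namespace> <pod>" for every pod whose STATUS (column 4) is neither
# Running nor Completed. Readiness (the READY column) is not checked.
unhealthy_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1, $2 }'
}
```

For example: `kubectl get pods -A --no-headers | unhealthy_pods | while read -r ns pod; do kubectl describe pod -n "$ns" "$pod"; kubectl logs -n "$ns" "$pod" --previous; done`. For problems on the node itself, `journalctl -u kubelet` shows the kubelet's side.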
