Build, Compile, and Fit Models in TensorFlow Part II

Arthur Kaza

Arthur Kaza

In this article, we will discuss how to fit a model, we will be using the Jenga illustration to make ideas clear. Fitting a model is the most important challenge for a Machine Learning Engineer on his building model journey. Ready to take place as an ML Engineer in fitting models?

Overview

A model must be trained on data to fit.

Similar to playing Jenga, fitting a model is a process. We begin with a shaky skyscraper made of blocks (the model). After feeding the model data, we begin removing bricks to determine if the tower still stands (the model is accurate in its predictions). We need to add some blocks back (change the model's parameters) if the tower collapses before trying again. The goal of fitting a model is to find a set of parameters that allows the model to make accurate predictions on unseen data. This is a trial-and-error process, and it can take some time to find the best set of parameters.

Fitting a model means training it on a dataset of data. The goal of training a model is to find the parameters that minimize the loss function. The loss function is a measure of how well the model is performing. Do you want to discover, how it works on TensorFlow? Let's talk about it now.

Fitting a model with TensorFlow

In TensorFlow, we can fit a model using the fit function. The fit function takes several arguments, including the dataset, the number of epochs, and the learning rate. To fit a model in TensorFlow, we can use the following code:

model.fit(dataset, epochs=100, learning_rate=0.001)

In this code, we are fitting the model on the dataset for 100 epochs with a learning rate of 0.001.

Note: The dataset is a collection of data points, each of which has a label. The number of epochs is the number of times the model will be trained on the dataset. The learning rate is a parameter that controls how much the model's parameters are updated each time it is trained.

Once the model has been fitted, we can evaluate its performance on a test dataset. The test dataset is a collection of data points that the model has not seen before. We can evaluate the model's performance by calculating the accuracy, which is the percentage of data points that the model predicts correctly. We can be compared with a unit test in classic coding, to see how good is our work.

Note that you evaluate your model on a test dataset, here is an example code:

model.evaluate(test_dataset)

To end with this part let me show you a great example of the process: build, compile and fit a model with naturally the evaluation at the end :

import tensorflow as tf

# Create a dataset of data points.

data = tf.data.Dataset.from_tensor_slices([[1, 2], [3, 4]])

# build a model that will predict the value of y given the value of x.

model = tf.keras.Sequential([

tf.keras.layers.Dense(1, input_shape=(1,))

])

# Compile a model with an adam optimizer and a loss function.

model.compile(optimizer='adam', loss='mse')

# Train or fit the model on the data.

model.fit(data, epochs=10)

# Evaluate the model on the data.

model.evaluate(data)

This code creates a dataset of data points, creates a model that will predict the value of y given the value of x, compiles the model with the adam optimizer and a loss function, trains the model on the data, and evaluates the model on the data.

The tf.data.Dataset.from_tensor_slices() function creates a dataset of data points from a list of tensors. In this case, the list of tensors is [[1, 2], [3, 4]], which represents two data points. The tf.keras.Sequential() function creates a sequential model, which is a type of model that consists of a series of layers. In this case, the model consists of a single Dense layer, which is a type of layer that performs linear regression. The model.compile() function compiles the model with an optimizer and a loss function. The optimizer is used to update the model's parameters during training, and the loss function is used to measure the model's performance. The model.fit() function trains the model on the data. The model is trained for 10 epochs, which means that the model is trained on the data 10 times. The model.evaluate() function evaluates the model on the data. The model is evaluated on the data to see how well it performs.

Challenges fitting with TensorFlow

For machine learning engineers, fitting is a particularly difficult process that also takes patience and all of your ML engineering talents; here is a short summary of the most typical ones:

Choosing the right optimizer. The optimizer is a function that updates the model's parameters to minimize the loss function. There are many different optimizers to choose from, and the right choice will depend on the specific problem you are trying to solve. Some of the most popular optimizers include Adam (it reminds you something I guess), SGD, and Adagrad.

Regularizing the model. Overfitting is a common problem in machine learning, where the model learns too much from the training data and does not generalize well to new data. There are a number of techniques for regularizing models, such as L1 and L2 regularization.

Tuning the hyperparameters. The hyperparameters of a model are the parameters that control the model's behavior, such as the learning rate and the number of hidden layers. Finding the right values for these hyperparameters can be a time-consuming process, but it is important to get them right in order to achieve good performance.

Dealing with imbalanced data. Imbalanced data is a problem where there are more examples of one class than another. This can make it difficult for the model to learn to predict the minority class. There are a number of techniques for dealing with imbalanced data, such as oversampling and undersampling.

Evaluating the model. Once you have trained a model, it is important to evaluate its performance on a held-out test set. This will give you an idea of how well the model will generalize to new data. There are several different metrics that can be used to evaluate a model, such as accuracy, precision, and recall.

These are just a few of the challenges that machine learning engineers face when fitting models with TensorFlow. By following some tips, you can overcome all this, in the next articles of this series, we will come over each challenge.

Review of concepts and conclusion

Imagine that you are trying to build a Jenga tower that is as tall as possible. You start by stacking the blocks randomly, but the tower quickly falls over. You then start to experiment with different ways of stacking the blocks. You might try stacking them in a spiral pattern, or you might try stacking them in a checkerboard pattern. You might also try adding more or fewer blocks to the tower.

As you experiment, you will start to learn what works and what doesn't work. You will learn that some patterns are more stable than others, and you will learn that the number of blocks in the tower affects its stability.

Eventually, you will find a pattern that allows you to build a Jenga tower that is very tall. This pattern is the set of parameters that allows the tower to be as stable as possible.

Fitting a model on TensorFlow is a similar process. We start with a model that is not very accurate, and we then experiment with different parameters. We might try changing the number of layers in the model, or we might try changing the activation functions in the model. We might also try using a different loss function or optimizer.

As we experiment, we will start to learn what works and what doesn't work. We will learn that some architectures are more accurate than others, and we will learn that the choice of loss function and optimizer affects the accuracy of the model.

Eventually, we will find a set of parameters that allows the model to be as accurate as possible. This set of parameters is the model that we will use to make predictions on new data.

Yes, during our journey in these articles, we focused on general concepts on how to build, compile and fit models with TensorFlow, the next one will focus on powerful tools and tricks to make it look easy, one of them is TensorBoard in Google Colab.

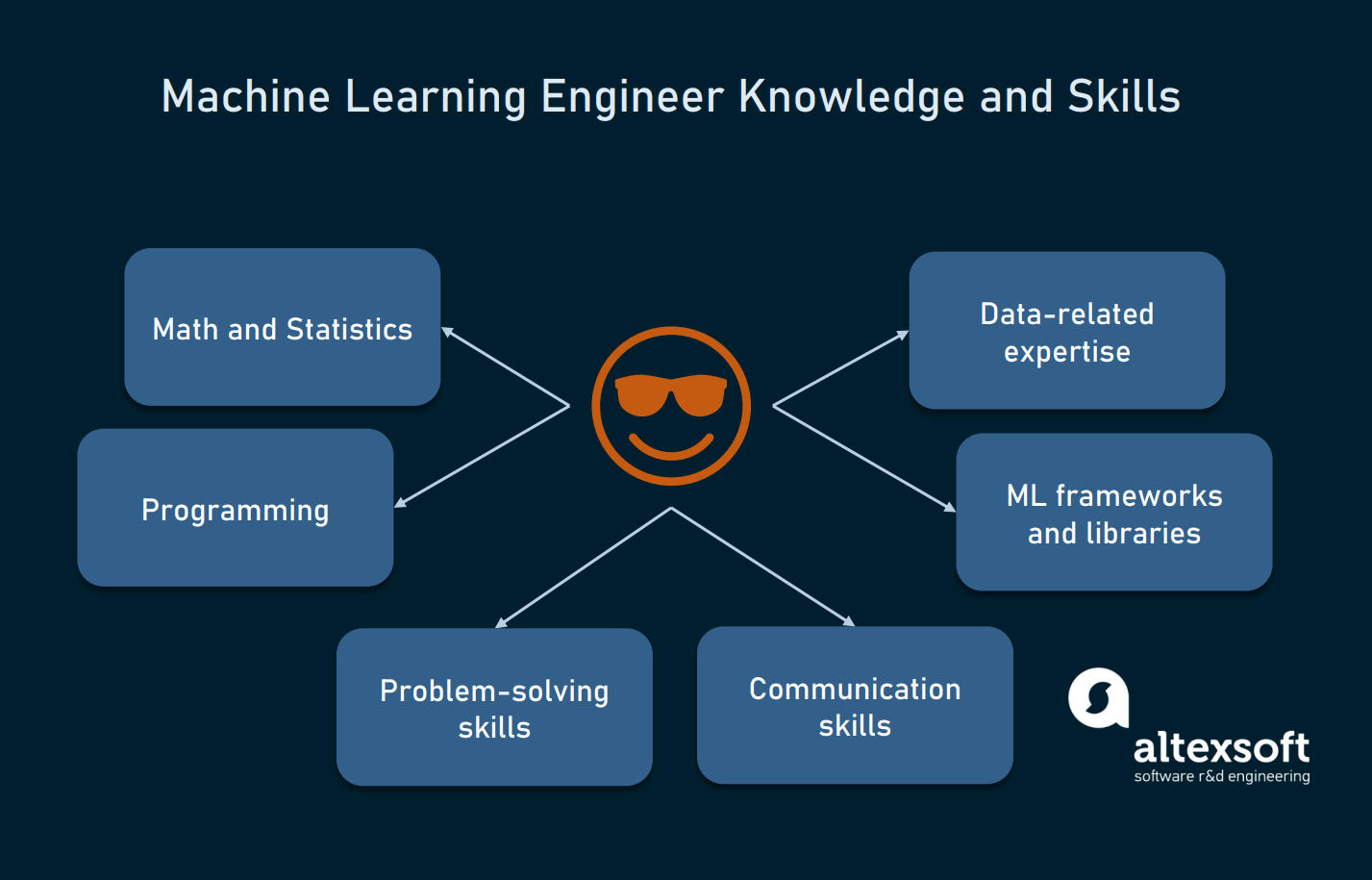

Before turning the page look at this, what an ML Engineer needs as hard and soft skills to perform:

Keep in mind that Machine learning engineers are the builders of the future. They use their skills to create algorithms that can learn from data and make predictions.

Remember, building machine learning models is like building a tower of blocks. It takes time and practice, but it can be lots of fun too! 😊

Arthur Kaza spartanwk@gmail.com @#PeaceAndLove @Copyright_by_Kaz’Art / @ArthurStarks

Subscribe to my newsletter

Read articles from Arthur Kaza directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Arthur Kaza

Arthur Kaza

Senior Data Scientist with over 7 years of international experience in Finance AI scoring systems and risk analysis, specializing in the design and optimization of information systems, loan scoring model, data science, and AI. As a AI researcher, he works on Computer Vision applications to Energy resize and Cache Generated Augmented Architecture applications for protected culture and languages. Arthur Kaza is recognized as a Google Developer Expert in Machine Learning (AI) by Google for his significant contributions to AI in Google communities in his region, with nearly 5 years of experience designing technological learning programs, hackathons, and mentorship. A true technology enthusiast and community leader, he has mentored over 100 young professionals in technological learning programs he managed across Central and East Africa.