The master data-lookup pattern

Kariuki George


Welcome back! This is part 2 of the "caching patterns with Redis" series. Click here to get to the first part.

Assume you are building the next multitenant e-commerce application like Shopify. You'd like some data, customizations, and configurations to be loaded into the shop before the customer can do pretty much anything. This data can include the shop's name, its currency, the supported payment platforms, and UI elements such as select values, dropdown options, and themes. Loading it must be very fast so that users aren't frustrated by slow load times or a high total blocking time.

Since this data changes infrequently compared to other data such as product stock levels, we can cache it ahead of time and keep it cached for longer periods. In practice, this data is called master data, especially when it also represents the organization's core data that is essential to its operations.
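To make "master data" concrete for the multitenant shop, here is a minimal sketch of what one tenant's master data might look like and how it could be keyed in Redis. The `ShopMasterData` shape and the `shop:<tenantId>:master` key format are hypothetical, chosen only for illustration. Namespacing keys per tenant keeps one shop's configuration from overwriting another's.

```typescript
// Hypothetical shape of one tenant's master data (illustrative only).
interface ShopMasterData {
  shopName: string;
  currency: string; // ISO 4217 code, e.g. "KES"
  paymentProviders: string[];
  theme: string;
  // Options for UI select/dropdown elements, keyed by field name.
  selectOptions: Record<string, string[]>;
}

// Hypothetical key convention: one Redis key per tenant.
function masterDataKey(tenantId: string): string {
  return `shop:${tenantId}:master`;
}

// Redis stores strings, so the master data round-trips through JSON.
function serializeMasterData(data: ShopMasterData): string {
  return JSON.stringify(data);
}

function deserializeMasterData(raw: string): ShopMasterData {
  return JSON.parse(raw) as ShopMasterData;
}

const example: ShopMasterData = {
  shopName: "Demo Shop",
  currency: "KES",
  paymentProviders: ["mpesa", "card"],
  theme: "light",
  selectOptions: { size: ["S", "M", "L"] },
};

console.log(masterDataKey("acme")); // prints "shop:acme:master"
```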

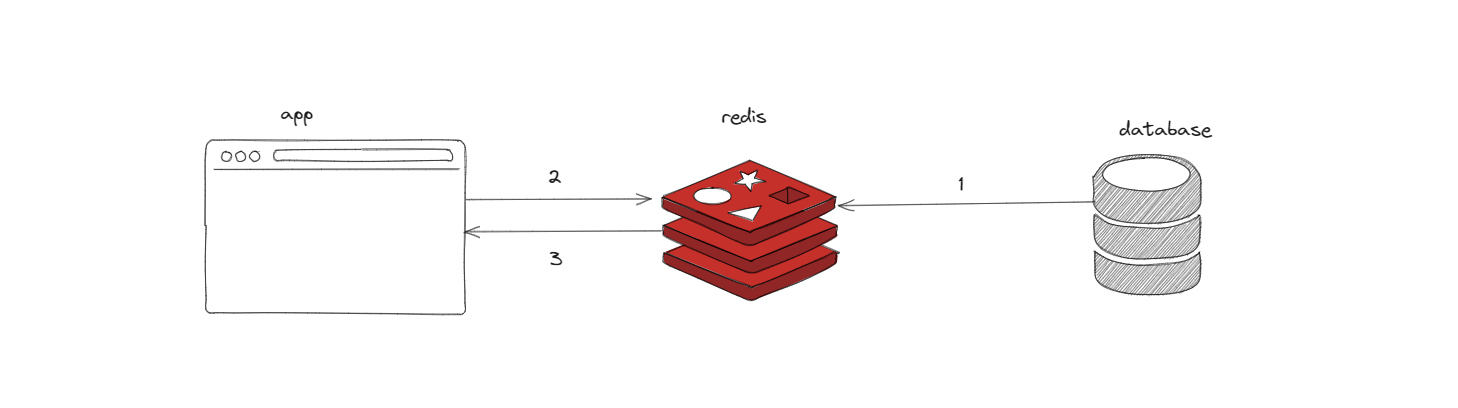

To see how this pattern works, consider the diagram above.

1. Read the master data from the database.
2. Store a copy of the data in Redis upfront. This pre-caches the data for fast retrieval.
3. Keep the copy fresh by reloading the master data into Redis periodically, using a cron job or a script.
4. The application requests the master data from the backend/API.
5. The backend/API serves the master data directly from Redis instead of the database, which keeps response times very low.
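The five steps can be sketched without a running Redis server by letting two in-memory `Map`s stand in for the database and the cache. This is a minimal, synchronous illustration under those assumptions, not production code; `fakeDb`, `fakeCache`, `refreshMasterData`, and `handleRequest` are hypothetical names. The property it demonstrates is that the request path (steps 4 and 5) never touches the database.

```typescript
// Stand-ins (assumptions): one Map plays the database, another plays Redis.
const fakeDb = new Map<string, string>([
  ["currency", "KES"],
  ["theme", "light"],
]);
const fakeCache = new Map<string, string>();

let dbReads = 0; // counts database reads, to show requests avoid the DB

// Steps 1-3: read master data from the DB and write a copy to the cache.
// In a real system a cron job or script would call this periodically.
function refreshMasterData(): void {
  const snapshot: Record<string, string> = {};
  fakeDb.forEach((value, key) => {
    dbReads++;
    snapshot[key] = value;
  });
  fakeCache.set("master", JSON.stringify(snapshot));
}

// Steps 4-5: a request is answered from the cache only.
function handleRequest(): Record<string, string> {
  const raw = fakeCache.get("master");
  return raw ? (JSON.parse(raw) as Record<string, string>) : {};
}

refreshMasterData();
const readsAfterRefresh = dbReads;
handleRequest();
handleRequest();
console.log(dbReads === readsAfterRefresh); // prints true: requests did not hit the DB
```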

When should one consider this pattern?

When serving master data at speed

Nearly all applications require access to master data, and precaching it in Redis delivers it to users at very high speed, keeping loading and blocking times low.

When supporting massive master tables

Some master tables have millions of records, and searching through them on every request can become a performance bottleneck. Preparing the necessary data ahead of time can improve performance.
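One way to prepare a large master table ahead of time is to index it by its lookup key once, during the refresh, so that each request becomes a single key lookup instead of a search. In Redis this would typically map to a hash (`HSET` while loading, `HGET` per request); the sketch below models that hash with a `Map` so it runs anywhere. The `Currency` row type and the sample rows are hypothetical.

```typescript
// Hypothetical master-table row.
interface Currency {
  code: string;
  name: string;
  decimals: number;
}

// Done once per refresh: turn the rows into field -> JSON pairs,
// the same layout you would write to a Redis hash with HSET.
function buildLookup(rows: Currency[]): Map<string, string> {
  const hash = new Map<string, string>();
  for (const row of rows) {
    hash.set(row.code, JSON.stringify(row));
  }
  return hash;
}

// Done per request: one field lookup (HGET in Redis) instead of a scan.
function lookupCurrency(hash: Map<string, string>, code: string): Currency | undefined {
  const raw = hash.get(code);
  return raw === undefined ? undefined : (JSON.parse(raw) as Currency);
}

const currencyRows: Currency[] = [
  { code: "KES", name: "Kenyan shilling", decimals: 2 },
  { code: "JPY", name: "Japanese yen", decimals: 0 },
];
const currencyHash = buildLookup(currencyRows);
console.log(lookupCurrency(currencyHash, "JPY")?.decimals); // prints 0
```

The cost of building the index is paid once per refresh rather than once per request, which is the same trade the pattern makes for the data as a whole.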

When avoiding expensive hardware and software investments

Defer costly infrastructure enhancements by using Redis. Get the performance and scaling benefits without asking the CFO to write a check.

Let's see how we can use the master data-lookup pattern to speed up our posts application. We can prefetch and cache posts every 10 minutes, so that any request for the list of posts is served straight from Redis with a very fast response time.

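```typescript
import cron from "node-cron";
import Redis from "ioredis";
import { Router } from "express";
import { db } from "./db"; // the app's database client (Prisma-style), assumed
import type { IPost } from "./types"; // the app's Post type, assumed

const redis = new Redis(); // connects to localhost:6379 by default
export const router = Router();

// Fetch up to 30 posts from the database and cache them in Redis
export const cachePosts = async (): Promise<void> => {
  const posts = await db.posts.findMany({ take: 30 });
  await redis.set("posts", JSON.stringify(posts));
};

// Cron job: refresh the cached posts every 10 minutes,
// and once immediately on startup (runOnInit)
export const prefetchTasks = cron.schedule(
  "*/10 * * * *",
  async () => {
    try {
      await cachePosts();
    } catch (error) {
      // Keep serving the previous cached copy if a refresh fails
      console.error("Failed to prefetch posts", error);
    }
  },
  { name: "prefetchPosts", scheduled: true, runOnInit: true }
);

// Read the pre-fetched posts from the cache (the master data-lookup)
export const getPosts = async (): Promise<IPost[]> => {
  const postsString = await redis.get("posts");
  // Cache miss (e.g. the first refresh has not finished yet): return an empty list
  if (!postsString || postsString === "null") {
    return [];
  }
  return JSON.parse(postsString) as IPost[];
};

// HTTP route: respond with the cached posts
router.get("/", async (_req, res, next) => {
  try {
    const posts = await getPosts();
    res.json(posts);
  } catch (error) {
    next(error);
  }
});
```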
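With the code above, `getPosts` returns an empty list whenever the `"posts"` key is missing, for example before the first cron run finishes or after Redis restarts. A common variation, which swaps in a cache-aside read on misses, falls back to the database once and repopulates the cache. The sketch below is a minimal version under stated assumptions: `PostsCache`, `getPostsWithFallback`, and `MemoryCache` are hypothetical names, and the cache interface is deliberately small so that an ioredis client (whose `get`/`set` return promises) or the in-memory stand-in can satisfy it.

```typescript
// Minimal cache interface (an assumption), small enough for an ioredis
// client or the in-memory stand-in below to satisfy.
interface PostsCache {
  get(key: string): Promise<string | null>;
  set(key: string, value: string): Promise<unknown>;
}

interface IPost {
  id: number;
  title: string;
}

// Serve from the cache; on a miss, load from the database once
// and write the result back so later requests hit the cache.
async function getPostsWithFallback(
  cache: PostsCache,
  loadFromDb: () => Promise<IPost[]>,
): Promise<IPost[]> {
  const cached = await cache.get("posts");
  if (cached !== null) {
    return JSON.parse(cached) as IPost[];
  }
  const posts = await loadFromDb();
  await cache.set("posts", JSON.stringify(posts));
  return posts;
}

// In-memory stand-in for Redis, for trying the function without a server.
class MemoryCache implements PostsCache {
  private store = new Map<string, string>();

  async get(key: string): Promise<string | null> {
    return this.store.get(key) ?? null;
  }

  async set(key: string, value: string): Promise<"OK"> {
    this.store.set(key, value);
    return "OK";
  }
}
```

This trades a little extra database load on a cold cache for never returning an empty list. Under heavy traffic, many requests could miss at the same moment and all query the database, so the cron-driven prefetch remains the main way the cache is filled.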

Thank you for reading this far; I hope you are enjoying the series. Feel free to share your thoughts in the comments and jump into the next post in the series.
