Weeks 9 and 10 - GSoC 2023

Rohan Babbar


This blog post briefly describes the progress made on the project during the 9th and 10th weeks of GSoC.

Work Done

- Added a decorator called reshaped that handles additional communication under the hood if the shapes provided by the user are not aligned.
- Added MPIFirstDerivative
- Added MPISecondDerivative
- Added MPILaplacian
- Added local_shapes as a new parameter to the DistributedArray class.
- Included important documentation covering installation, contribution guidelines, and more.

Added reshaped decorator

In my previous blog post, I discussed the add_ghost_cells method. This method transfers ghost (border) cells to the preceding process, the succeeding process, or both, so that the local array at each rank aligns with the operator shape.
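To make the data movement concrete, here is a single-process sketch that mimics a ghost-cell exchange with plain NumPy. Each "rank" is just an entry in a list, and the hypothetical helper add_ghost_cells_sim prepends the last cells_front rows of the previous chunk and appends the first cells_back rows of the next chunk. It is not the pylops-mpi implementation, which communicates through MPI and whose exact behaviour may differ.

```python
import numpy as np


def add_ghost_cells_sim(chunks, rank, cells_front=0, cells_back=0):
    """Return chunks[rank] padded with ghost rows from its neighbours.

    Hypothetical single-process stand-in for an MPI halo exchange:
    front ghosts are the last `cells_front` rows of the previous rank,
    back ghosts are the first `cells_back` rows of the next rank.
    """
    parts = []
    if cells_front and rank > 0:
        parts.append(chunks[rank - 1][-cells_front:])
    parts.append(chunks[rank])
    if cells_back and rank < len(chunks) - 1:
        parts.append(chunks[rank + 1][:cells_back])
    return np.concatenate(parts, axis=0)


x = np.arange(10)
chunks = np.array_split(x, 3)  # [0 1 2 3], [4 5 6], [7 8 9]
for r in range(3):
    print(r, add_ghost_cells_sim(chunks, r, cells_front=1, cells_back=1))
# 0 [0 1 2 3 4]
# 1 [3 4 5 6 7]
# 2 [6 7 8 9]
```

The first and last ranks only receive ghost cells on their inner side, since there is no neighbour beyond the global edges.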

The reshaped decorator operates behind the scenes, redistributing (reshaping) the local arrays of a DistributedArray at each rank so that they align with the operator. Note that this redistribution involves additional communication through MPI send/recv calls. For optimal performance, users are advised to choose local shapes that already align with the operator, which minimizes the need for communication.
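One way to picture the extra communication is to compute which global slices would have to move when the current partition along axis 0 differs from the one the operator expects. The sketch below, built around the hypothetical helper redistribution_plan, only intersects index ranges; it performs no MPI calls and is not taken from the pylops-mpi source.

```python
from itertools import accumulate


def offsets(sizes):
    """Global [start, stop) range owned by each rank along axis 0."""
    ends = list(accumulate(sizes))
    starts = [0] + ends[:-1]
    return list(zip(starts, ends))


def redistribution_plan(current, target):
    """List the (src, dst, start, stop) global ranges that must move.

    Hypothetical helper: it only computes which slices would need a
    send/recv pair between two ranks; it does not communicate.
    """
    if sum(current) != sum(target):
        raise ValueError("current and target sizes must cover the same global length")
    plan = []
    for src, (cs, ce) in enumerate(offsets(current)):
        for dst, (ts, te) in enumerate(offsets(target)):
            lo, hi = max(cs, ts), min(ce, te)
            if lo < hi and src != dst:
                plan.append((src, dst, lo, hi))
    return plan


# 100 elements currently split 34/33/33, but the operator expects 30/40/30
print(redistribution_plan([34, 33, 33], [30, 40, 30]))
# [(0, 1, 30, 34), (2, 1, 67, 70)]
```

When the user-provided partition already matches the target, the plan is empty, which is exactly the case where no communication is needed.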

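Example of how to use it:

```python
from pylops_mpi import DistributedArray
from pylops_mpi.utils.decorators import reshaped  # assumed import path

# The `_matvec` (forward) method of an operator can be simplified to:
@reshaped
def _matvec(self, x: DistributedArray):
    # x is already reshaped to the operator's N-D shape here;
    # do_things_to_reshaped is a placeholder for the operator's own logic
    y = do_things_to_reshaped(x)
    return y
```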

Here, x is reshaped into an N-D array according to the operator's dimensions, and y is raveled back into a 1-D DistributedArray as the output.
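The reshape-then-ravel pattern itself can be illustrated without MPI. The sketch below uses a hypothetical NumPy-only decorator, reshaped_local, and a toy operator, ScaleRows; it shows only the shape handling and leaves out the redistribution across ranks that the MPI version performs.

```python
import functools

import numpy as np


def reshaped_local(func):
    """Hypothetical NumPy-only analogue of a `reshaped` decorator.

    Reshapes the flat input to `self.dims` before calling `func`, then
    ravels the result so the operator always exposes a 1-D interface.
    """
    @functools.wraps(func)
    def wrapper(self, x):
        y = func(self, np.reshape(x, self.dims))
        return np.ravel(y)
    return wrapper


class ScaleRows:
    """Toy operator: multiplies row i of a 2-D model by (i + 1)."""

    def __init__(self, dims):
        self.dims = dims

    @reshaped_local
    def _matvec(self, x):
        # x arrives here already shaped as self.dims
        return x * np.arange(1, self.dims[0] + 1)[:, None]


op = ScaleRows(dims=(2, 3))
print(op._matvec(np.ones(6)))  # [1. 1. 1. 2. 2. 2.]
```

Keeping the reshaping in the decorator means each operator body can be written directly in terms of N-D arrays.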

The decorator is extensively employed throughout the repository in classes such as MPIBlockDiag, MPIVStack, MPIFirstDerivative, MPISecondDerivative and more.

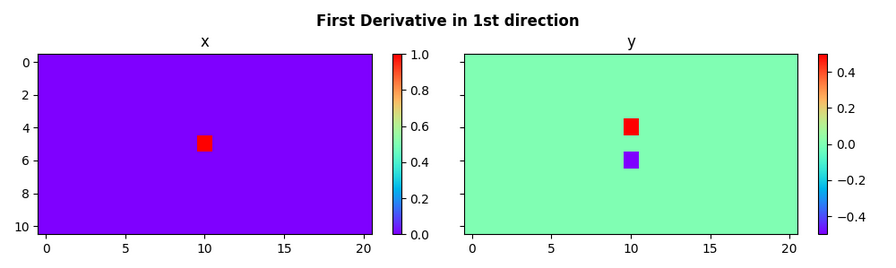

Added MPIFirstDerivative

With both the reshaped and add_ghost_cells functionalities in place, implementing the FirstDerivative operator became much simpler. The pylops_mpi.basicoperators.MPIFirstDerivative class applies a first derivative using forward, backward, or centered stencils to an N-D DistributedArray partitioned along axis=0.
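For reference, the three stencils can be written in a few lines of NumPy on a non-distributed array. This is a sketch of the textbook stencils with simplified edge handling (samples without a full stencil are set to zero); pylops' own edge options may treat the boundaries differently.

```python
import numpy as np


def first_derivative(x, sampling=1.0, kind="centered"):
    """First derivative along axis 0 with zero-valued edges.

    Sketch of forward, backward and centered stencils; works on N-D
    arrays because all slicing is along the first axis.
    """
    y = np.zeros_like(x, dtype=float)
    if kind == "forward":
        y[:-1] = (x[1:] - x[:-1]) / sampling
    elif kind == "backward":
        y[1:] = (x[1:] - x[:-1]) / sampling
    elif kind == "centered":
        y[1:-1] = 0.5 * (x[2:] - x[:-2]) / sampling
    else:
        raise ValueError(f"unknown kind: {kind}")
    return y


x = np.arange(6, dtype=float) ** 2  # 0, 1, 4, 9, 16, 25
print(first_derivative(x, kind="forward"))   # [1. 3. 5. 7. 9. 0.]
print(first_derivative(x, kind="centered"))  # [0. 2. 4. 6. 8. 0.]
```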

The cells at the borders of each rank are handled with the add_ghost_cells method, which selectively transfers border cells between processes. While MPIFirstDerivative behaves like pylops.FirstDerivative, it takes a DistributedArray as input and returns one as output, rather than the NumPy arrays used by pylops.
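Why one ghost cell is enough for the forward stencil can be checked on a single machine: split the data into chunks, give every chunk except the last one the first row of its successor, differentiate locally, and compare with the serial result. The helper forward_diff_local is hypothetical; a backward stencil would instead need one ghost cell from the preceding rank, and a centered stencil one on each side.

```python
import numpy as np


def forward_diff_local(chunk, ghost_back):
    """Forward difference of one rank's chunk, using a back ghost cell if given."""
    ext = chunk if ghost_back is None else np.concatenate([chunk, ghost_back])
    d = ext[1:] - ext[:-1]
    y = np.zeros(len(chunk))
    y[:len(d)] = d  # the last rank has no ghost, so its final sample stays zero
    return y


x = np.arange(10, dtype=float) ** 2
chunks = np.array_split(x, 3)

local_results = []
for rank, chunk in enumerate(chunks):
    # Only the first row of the next rank is needed (one back ghost cell)
    ghost = chunks[rank + 1][:1] if rank < len(chunks) - 1 else None
    local_results.append(forward_diff_local(chunk, ghost))
distributed = np.concatenate(local_results)

serial = np.zeros_like(x)
serial[:-1] = x[1:] - x[:-1]
print(np.allclose(distributed, serial))  # True
```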

I also created an example for ease of understanding:

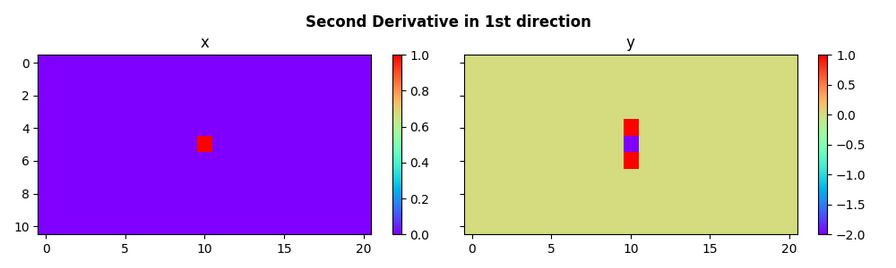

Added MPISecondDerivative

As with MPIFirstDerivative, the implementation of pylops_mpi.basicoperators.MPISecondDerivative relies on add_ghost_cells to communicate border cells between processes. The MPISecondDerivative class applies the second derivative using forward, backward, or centered stencils to an N-D DistributedArray partitioned along axis=0.
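For the centered second-derivative stencil, each rank needs one ghost cell from each neighbour. The sketch below (hypothetical helpers, unit sampling, zero-valued edges) pads every chunk accordingly, applies the stencil, drops the ghost positions, and checks the result against the serial computation. Forward and backward variants would need two ghost cells on one side instead.

```python
import numpy as np


def second_derivative(x, sampling=1.0):
    """Centered second derivative along axis 0; edge samples set to zero."""
    y = np.zeros_like(x, dtype=float)
    y[1:-1] = (x[2:] - 2 * x[1:-1] + x[:-2]) / sampling**2
    return y


def second_derivative_local(chunks, rank, sampling=1.0):
    """Apply the centered stencil to one chunk padded with one ghost cell per side."""
    first, last = rank == 0, rank == len(chunks) - 1
    empty = chunks[rank][:0]
    front = chunks[rank - 1][-1:] if not first else empty
    back = chunks[rank + 1][:1] if not last else empty
    ext = np.concatenate([front, chunks[rank], back])
    y = second_derivative(ext, sampling)
    # Drop the ghost positions so only locally owned samples remain
    return y[len(front): len(ext) - len(back)]


x = np.arange(10, dtype=float) ** 3
chunks = np.array_split(x, 3)
distributed = np.concatenate([second_derivative_local(chunks, r) for r in range(3)])
print(np.allclose(distributed, second_derivative(x)))  # True
```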

I also created an example for ease of understanding:

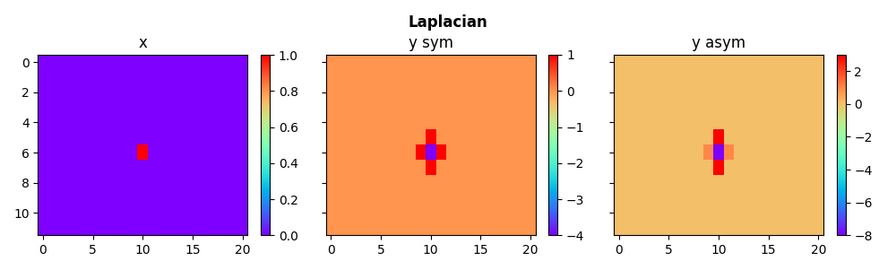

Added MPILaplacian

The pylops_mpi.basicoperators.MPILaplacian operator applies second derivatives along multiple directions of a DistributedArray. Along the first direction (axis=0), which is split across processes, I used the MPISecondDerivative operator. For the other axes, which lie entirely within each rank, the pylops.SecondDerivative operator is wrapped in the MPIBlockDiag operator, so that each rank applies it to its own portion of the distributed data during the matrix-vector multiplication.
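The split between the two kinds of axes can be checked with NumPy on one machine. In the sketch below (unit sampling and unit weights assumed, hypothetical helper d2), a 2-D array is split by rows into three "ranks": the axis-1 term computed chunk by chunk matches the global result, which is why a block-diagonal application suffices there, while the axis-0 term computed the same way is wrong at chunk boundaries and therefore needs the ghost-cell exchange.

```python
import numpy as np


def d2(x, axis):
    """Centered second derivative along `axis` with zero edges (unit sampling)."""
    x = np.moveaxis(x, axis, 0)
    y = np.zeros_like(x, dtype=float)
    y[1:-1] = x[2:] - 2 * x[1:-1] + x[:-2]
    return np.moveaxis(y, 0, axis)


rng = np.random.default_rng(0)
x = rng.standard_normal((9, 5))
chunks = np.array_split(x, 3, axis=0)  # rows split across 3 "ranks"

# Axis 1 lies entirely inside each rank: each chunk is processed independently
axis1_distributed = np.concatenate([d2(c, axis=1) for c in chunks], axis=0)
print(np.allclose(axis1_distributed, d2(x, axis=1)))  # True

# Axis 0 is split across ranks: without ghost cells the chunk boundaries are wrong
naive_axis0 = np.concatenate([d2(c, axis=0) for c in chunks], axis=0)
print(np.allclose(naive_axis0, d2(x, axis=0)))  # False

# Full Laplacian with unit weights: axis-0 term + axis-1 term
lap = d2(x, axis=0) + d2(x, axis=1)
print(lap.shape)  # (9, 5)
```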

I also created an example for ease of understanding:

Added local_shapes as a new parameter to the DistributedArray class

While experimenting with the library, my mentors and I recognized that the default local_split method, which automatically determines the shape of each local array, is useful and should remain the default behavior. However, users should also be able to specify their own local shapes. To support this, we introduced the local_shapes parameter to the DistributedArray class.
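As an illustration of what an automatic split along axis 0 might look like, here is a sketch that assumes an even split in which the first ranks absorb the remainder (the same rule numpy.array_split uses). The function default_local_sizes is hypothetical, and the exact rule inside local_split may differ.

```python
def default_local_sizes(global_len, size):
    """Split `global_len` rows over `size` ranks as evenly as possible.

    Assumption: the first `global_len % size` ranks get one extra row,
    as numpy.array_split does.
    """
    base, rem = divmod(global_len, size)
    return [base + 1 if r < rem else base for r in range(size)]


print(default_local_sizes(100, 3))  # [34, 33, 33]
```

A user who knows the operator expects 30/40/30 instead can pass those shapes explicitly through local_shapes.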

This feature is valuable because it lets users define, for each rank, local shapes that are already aligned with the operator at that rank. This alignment reduces the need for communication between processes and improves efficiency.

Note: The length of local_shapes must equal the number of processes, and the local shapes must be consistent with the global shape (for example, their sizes along the partitioned axis must add up to the global size along that axis).
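The two constraints in the note can be expressed as a small validation function. This is a hypothetical sketch (check_local_shapes is not part of pylops-mpi) that assumes partitioning along axis 0 and requires all other dimensions to match the global shape.

```python
def check_local_shapes(global_shape, local_shapes, n_procs, axis=0):
    """Raise ValueError if `local_shapes` cannot tile `global_shape` along `axis`."""
    if isinstance(global_shape, int):
        global_shape = (global_shape,)
    global_shape = tuple(global_shape)
    if len(local_shapes) != n_procs:
        raise ValueError(f"expected {n_procs} local shapes, got {len(local_shapes)}")
    expected = [s for i, s in enumerate(global_shape) if i != axis]
    for shape in local_shapes:
        other = [s for i, s in enumerate(shape) if i != axis]
        if len(shape) != len(global_shape) or other != expected:
            raise ValueError(f"local shape {shape} does not match {global_shape} off axis {axis}")
    total = sum(shape[axis] for shape in local_shapes)
    if total != global_shape[axis]:
        raise ValueError(f"sizes along axis {axis} sum to {total}, not {global_shape[axis]}")


check_local_shapes(100, [(30,), (40,), (30,)], n_procs=3)  # passes silently
```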

One example of how to use this feature (run with three processes, e.g. `mpiexec -n 3 python example.py`):

```python
from pylops_mpi import DistributedArray

arr = DistributedArray(global_shape=100, local_shapes=[(30, ), (40, ), (30, )])
print(arr.rank, arr)

# Output (the order of lines may vary between ranks)
# 0 <DistributedArray with global shape=(100,), local shape=(30,), dtype=<class 'numpy.float64'>, processes=[0, 1, 2])>
# 1 <DistributedArray with global shape=(100,), local shape=(40,), dtype=<class 'numpy.float64'>, processes=[0, 1, 2])>
# 2 <DistributedArray with global shape=(100,), local shape=(30,), dtype=<class 'numpy.float64'>, processes=[0, 1, 2])>
```

Added Documentation

With the main components in place and the package nearly ready for release, I added essential documentation. It provides detailed installation procedures for both users and developers, contribution guidelines for developers, and a comprehensive overview of the library's functionality.

Challenges I ran into

Implementing the logic for the reshaped decorator proved quite challenging and took nearly a week of thinking and coding. A top priority was ensuring that integrating the decorator did not break existing tests. For the MPI derivative operators, the original pylops documentation was a useful resource and helped me implement the _matvec and _rmatvec methods for all stencils.
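A standard way to check that a _matvec/_rmatvec pair is consistent is the dot test: for random x and y, the inner products ⟨Ax, y⟩ and ⟨x, Aᴴy⟩ must agree up to round-off. The sketch below applies it to a NumPy forward-difference stencil and its hand-derived adjoint; pylops provides a similar check as pylops.utils.dottest, which is not used here so the example stays self-contained.

```python
import numpy as np


def forward_matvec(x):
    """Forward-difference stencil (unit sampling), last sample set to zero."""
    y = np.zeros_like(x)
    y[:-1] = x[1:] - x[:-1]
    return y


def forward_rmatvec(y):
    """Adjoint of forward_matvec: scatter each difference back to its two inputs."""
    x = np.zeros_like(y)
    x[1:] += y[:-1]
    x[:-1] -= y[:-1]
    return x


rng = np.random.default_rng(42)
x, y = rng.standard_normal(50), rng.standard_normal(50)
# Dot test: <A x, y> must equal <x, A^H y> up to round-off
lhs = np.dot(forward_matvec(x), y)
rhs = np.dot(x, forward_rmatvec(y))
print(np.isclose(lhs, rhs))  # True
```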

As for the local_shapes parameter, it required modifications across multiple files where instances of DistributedArray were created. These changes demanded careful adjustments and took a decent amount of time.

To do in the coming weeks

As the GSoC program draws to a close, my next steps are to improve the documentation and refine the coding style. In parallel, I will work on publishing a test release of pylops-mpi on TestPyPI.

Thanks for reading my post!
