Docker Fundamentals

Manish Negi

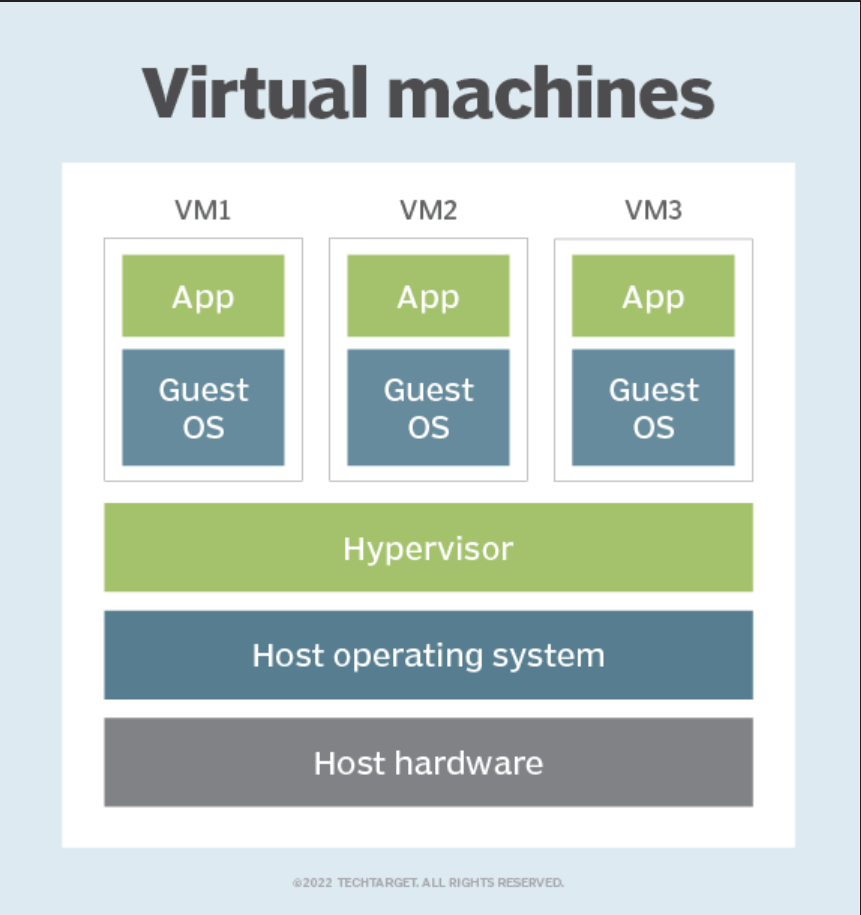

Virtual Machine vs Docker

Virtual machines (VMs) and Docker are both technologies used to isolate and manage software applications, but they do so in different ways and have distinct use cases. Let's explore the differences between them:

Virtual Machines (VMs):

Isolation: VMs provide hardware-level isolation. Each VM runs a complete operating system (OS) instance, and multiple VMs share the physical hardware of a host machine.

Resource Overhead: VMs have higher resource overhead since they require a full OS along with its binaries, libraries, and drivers. This can lead to significant memory and storage consumption.

Performance: VMs typically start more slowly and carry more runtime overhead than containers, because each VM boots and runs a complete guest OS.

Hypervisor: VMs are managed by a hypervisor (e.g., VMware, Hyper-V), which abstracts the physical hardware and allows multiple VMs to run on a single physical machine.

Isolation Level: VMs provide stronger isolation between applications because they run separate operating systems, reducing the risk of conflicts between applications.

Portability: VMs can be less portable since moving VMs between different hosts or environments may require dealing with OS compatibility issues.

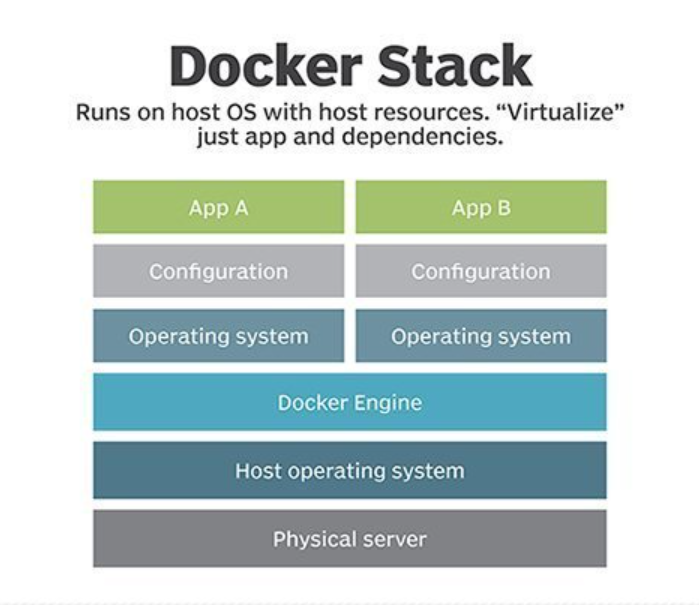

Docker:

Isolation: Docker uses containerization to isolate applications. Containers share the host OS's kernel, but they have their own isolated filesystem, runtime, and processes.

Resource Overhead: Containers have lower resource overhead since they don't require a full OS; they share the host OS's kernel and use a layered file system approach to share common components efficiently.

Performance: Docker containers are generally faster in terms of startup time and overall performance due to their lightweight nature.

Container Engine: Docker containers are managed by the Docker engine, which facilitates container creation, distribution, and management.

Isolation Level: Containers are more lightweight and efficient, but they provide weaker isolation than VMs because every container shares the host's OS kernel. A kernel vulnerability or a misconfigured container can therefore affect other containers or the host if not managed properly.

Portability: Docker containers are highly portable, as they encapsulate the application along with its dependencies in a consistent environment. The same image runs on any host that provides Docker and a compatible kernel and CPU architecture. On macOS and Windows, Docker Desktop runs Linux containers inside a lightweight Linux VM.

Use Cases:

VMs: VMs are well-suited for scenarios that require strong isolation, running different operating systems on a single host, or when running legacy applications that can't be easily containerized.

Docker: Docker is excellent for lightweight application deployment, microservices architecture, continuous integration, and continuous deployment (CI/CD) pipelines, and scenarios where portability and resource efficiency are crucial.

In summary, if you need strong isolation and compatibility with various operating systems, VMs might be the better choice. If you prioritize lightweight and efficient application deployment with portability across different environments, Docker containers are more appropriate. It's worth noting that the choice between VMs and Docker depends on the specific requirements of your project and the trade-offs you're willing to make.

Docker Engine:

The Docker Engine is the core component of the Docker platform. It consists of the Docker daemon (dockerd), the API that the daemon exposes, and the docker command-line client, although the name is often used loosely for the daemon alone. It is responsible for managing and running Docker containers on a host system. The Docker Engine enables containerization, a technology that allows applications and their dependencies to be packaged and isolated within lightweight, portable containers.

Here are the key components and responsibilities of the Docker engine:

Docker Daemon: The Docker daemon is a background process that manages Docker containers on a host system. It listens for Docker API requests and performs actions such as building, running, and stopping containers. The daemon also manages images, volumes, networks, and other resources associated with Docker containers.

Docker CLI (Command-Line Interface): The Docker CLI is a command-line tool that allows users to interact with the Docker engine. It provides commands for building, running, stopping, and managing containers, as well as working with images, networks, volumes, and other Docker resources.

Container Images: Container images are read-only templates that include the application code, runtime, libraries, and other dependencies required to run an application. Docker images are used to create Docker containers. The Docker engine fetches images from Docker registries, which are repositories that store and distribute images.

Docker Containers: Docker containers are instances of running applications that are isolated from each other and the host system. Containers are created from Docker images and provide a consistent environment for applications to run across different environments.

Networking: The Docker engine provides networking capabilities to allow containers to communicate with each other and with the external world. It can create and manage virtual networks that connect containers, and it also provides port mapping to expose container ports externally.

Volumes: Docker volumes are used for persistent data storage and sharing between containers. Volumes provide a way to store data separately from the container's filesystem, ensuring that data remains available even if the container is removed.

Container Lifecycle Management: The Docker engine is responsible for starting, stopping, and restarting containers. It manages container resource allocation, including CPU, memory, and other system resources.

Security and Isolation: The Docker engine enforces isolation between containers, preventing one container from accessing another container's files or processes. It also offers security features such as user namespaces, SELinux, and AppArmor to enhance container security.

Logging and Monitoring: The Docker engine supports the logging and monitoring of containerized applications. It provides options to capture container logs and statistics, allowing administrators to monitor and troubleshoot container behavior.

The Docker engine is the heart of the Docker ecosystem and has been instrumental in popularizing containerization and enabling microservices architectures. It simplifies application deployment, makes applications more portable, and improves resource utilization by providing a consistent environment for running applications across different environments.
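The split between the CLI and the daemon can be seen by talking to the daemon directly. The sketch below assumes a Linux host where the daemon listens on its default Unix socket, /var/run/docker.sock, and that the current user may read that socket. It sends the Docker Engine API request GET /version, which is the same kind of request the docker CLI sends. The names UnixHTTPConnection and daemon_version are illustrative helpers, not part of Docker.

```python
import http.client
import json
import os
import socket

# Default daemon socket on Linux (assumption: the daemon uses the default path).
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that runs over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def daemon_version(socket_path=DOCKER_SOCKET):
    """Return the daemon's /version response as a dict, or None if unreachable."""
    if not os.path.exists(socket_path):
        return None
    try:
        conn = UnixHTTPConnection(socket_path)
        conn.request("GET", "/version")
        response = conn.getresponse()
        body = response.read()
        conn.close()
        if response.status != 200:
            return None
        return json.loads(body)
    except (OSError, http.client.HTTPException, ValueError):
        # Covers a missing daemon, permission denied on the socket, or a bad reply.
        return None


if __name__ == "__main__":
    info = daemon_version()
    if info is None:
        print("Docker daemon not reachable on", DOCKER_SOCKET)
    else:
        print("Engine version:", info.get("Version"), "API version:", info.get("ApiVersion"))
```

If the function returns None while the daemon is running, the usual cause is that the user lacks permission on the socket.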

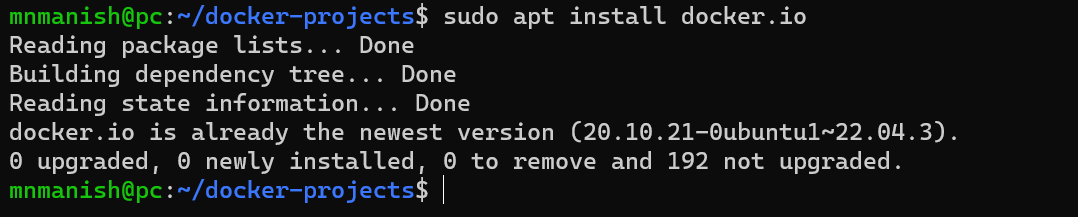

How to install Docker in Linux/Ubuntu?

sudo apt install docker.io: To install Docker on Ubuntu

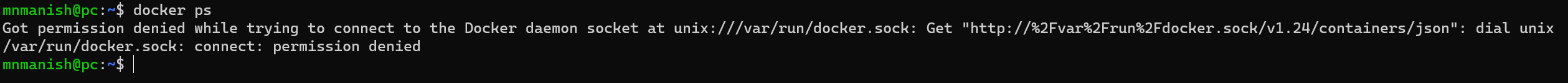

How to resolve permission denied error in docker?

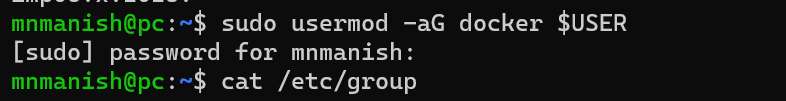

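docker ps: To list all the running containers

NOTE: Installing Docker on Linux creates a docker group. The Docker socket is owned by root and that group, so a user who is not a member gets the permission denied error shown above when running docker commands without sudo. Adding the user to the docker group resolves it.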

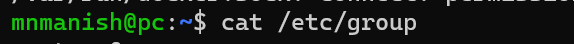

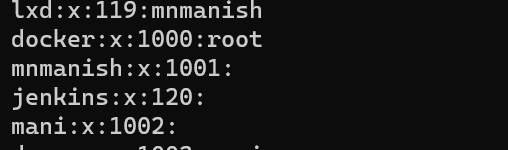

cat /etc/group: To list all the groups and their members

Add the user (mnmanish here) to the docker group to grant access and resolve the permission denied error.

sudo usermod -aG docker $USER: To add the current user to the docker group (-a appends, so the user's existing groups are kept)

cat /etc/group: To confirm the change (mnmanish now appears as a member of the docker group)

sudo reboot: To restart the system so that the new group membership takes effect (logging out and back in is also enough)
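The check done by eye with cat /etc/group can also be scripted. The following is a minimal sketch that parses text in the /etc/group format (name:password:GID:member,member) and reports whether a user is listed in a group. The function names and the sample GID 999 are illustrative assumptions. A user's primary group is not listed in the member field, so this only covers supplementary groups such as docker.

```python
import getpass
from pathlib import Path


def group_members(group_file_text, group_name):
    """Return the member list of group_name, or None if the group does not exist."""
    for line in group_file_text.splitlines():
        fields = line.strip().split(":")
        # Each entry has four fields: name, password placeholder, GID, members.
        if len(fields) == 4 and fields[0] == group_name:
            return [member for member in fields[3].split(",") if member]
    return None


def user_in_group(group_file_text, group_name, user):
    """True if user is listed as a member of group_name."""
    members = group_members(group_file_text, group_name)
    return members is not None and user in members


if __name__ == "__main__":
    group_file = Path("/etc/group")
    if group_file.exists():
        user = getpass.getuser()
        listed = user_in_group(group_file.read_text(), "docker", user)
        print(f"{user} listed in docker group: {listed}")
```

A user can be listed in /etc/group and still see the permission denied error, because a login session keeps the groups it started with. That is why a new login or a reboot follows usermod.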

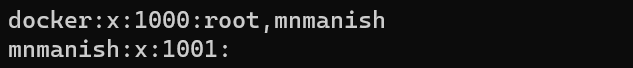

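-> If you get the error { Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running? }, the Docker daemon is not running.

-> On a standard Ubuntu host, sudo systemctl start docker is usually enough. The commands below are a workaround for environments where systemd is not running (for example WSL2); they start systemd and open a shell inside its namespace: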

sudo -b unshare --pid --fork --mount-proc /lib/systemd/systemd --system-unit=basic.target

sudo -E nsenter --all -t $(pgrep -xo systemd) runuser -P -l $USER -c "exec $SHELL"

docker ps: To list all the running containers

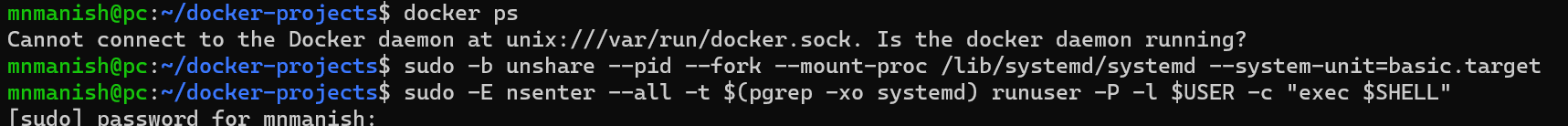

How to pull an image from the docker hub repository?

docker pull mysql:latest - To pull the latest MySQL image from Docker Hub.

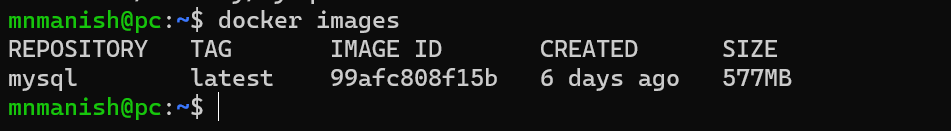

docker images: To view all the images

NOTE: Downloading an image from Docker Hub is called an image pull.

Creating an image from a Dockerfile is called an image build.

How to run an image (a running instance of an image is called a container)?

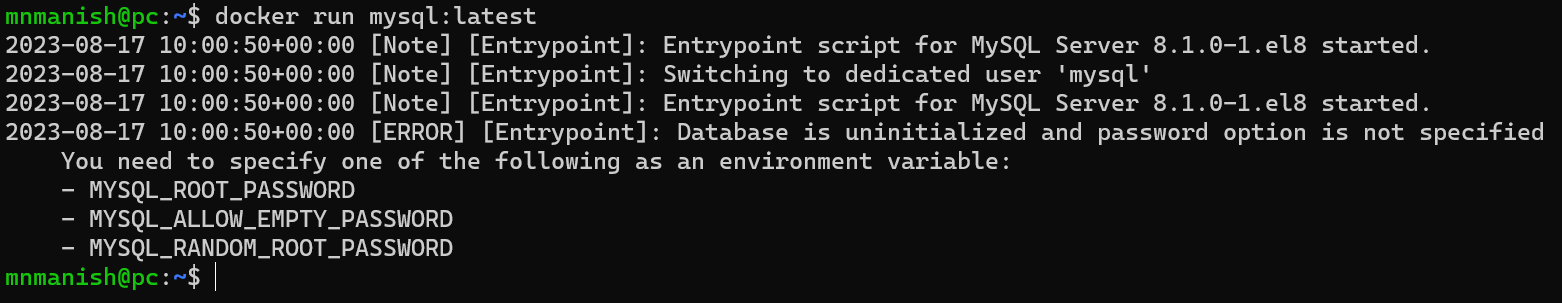

docker run mysql:latest - To run the mysql image (the container exits and asks for a password environment variable such as MYSQL_ROOT_PASSWORD to be specified)

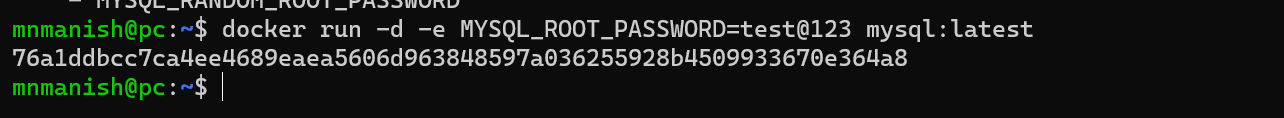

docker run -d -e MYSQL_ROOT_PASSWORD=test@123 mysql:latest: To run the mysql:latest image in detached mode (in the background) with the environment variable MYSQL_ROOT_PASSWORD set

-d: Detached mode (the container runs in the background)

-e: Sets an environment variable inside the container
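When the same docker run command has to be issued from a script, it helps to assemble the arguments as a list instead of a hand-written string. The sketch below builds the argument list for the flags described above (-d, -e, and the -p port mapping used later in this article). The function name docker_run_args and its parameters are illustrative, and the function only builds the command; it does not start a container.

```python
def docker_run_args(image, detach=False, env=None, ports=None):
    """Build the argument list for `docker run` from a few common options.

    env   maps variable names to values (one -e flag each).
    ports maps host ports to container ports (one -p flag each).
    """
    args = ["docker", "run"]
    if detach:
        args.append("-d")
    for name, value in (env or {}).items():
        args += ["-e", f"{name}={value}"]
    for host_port, container_port in (ports or {}).items():
        args += ["-p", f"{host_port}:{container_port}"]
    # The image name comes last, after all the options.
    args.append(image)
    return args


if __name__ == "__main__":
    command = docker_run_args(
        "mysql:latest",
        detach=True,
        env={"MYSQL_ROOT_PASSWORD": "test@123"},
    )
    print(command)
```

On a machine with Docker installed, the list can be passed to subprocess.run(command, check=True) to start the container. Passing a list avoids shell quoting problems with characters in the password.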

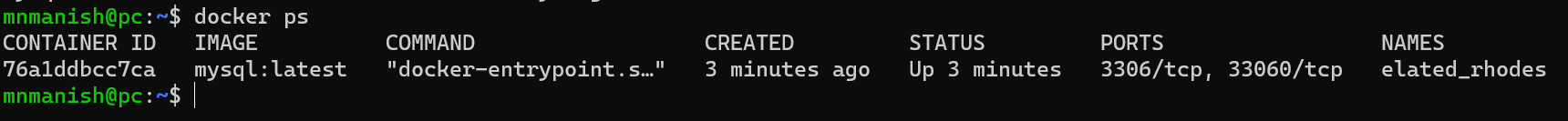

docker ps: To view all the running containers

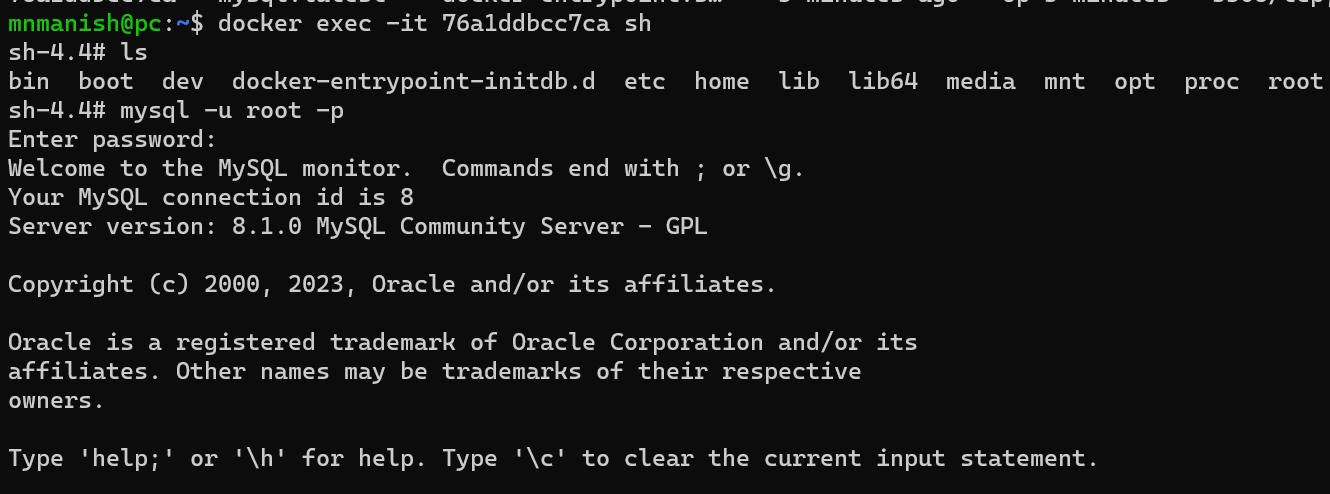

How to interact with the application inside the container?

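docker exec -it 76a1ddbcc7ca sh: To open a shell inside the running container

-it: -i keeps STDIN open (interactive) and -t allocates a terminal

76a1ddbcc7ca: container ID (taken from the docker ps output)

sh: the shell to run inside the container

mysql -u root -p: To connect to MySQL as the root user (-u); -p prompts for the password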

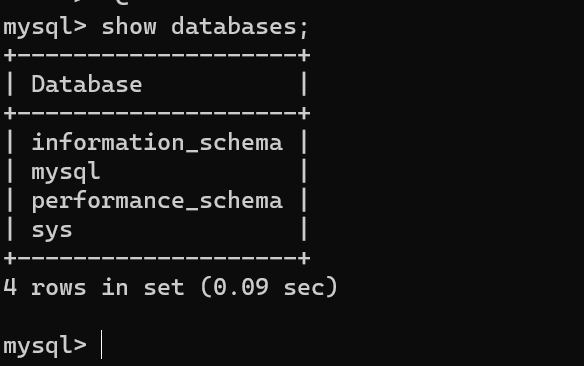

show databases; To list all the databases (SQL command)

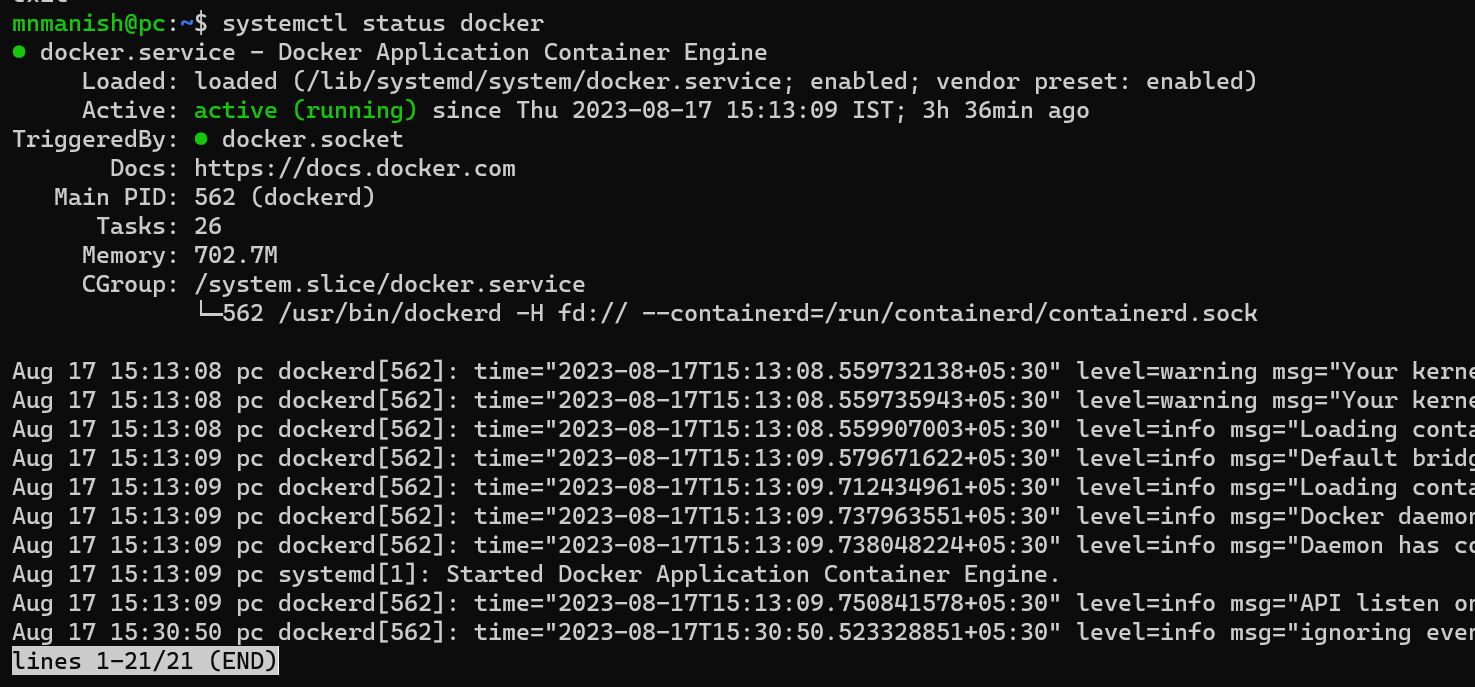

How to check the status of Docker?

systemctl status docker: To check the status of the Docker service

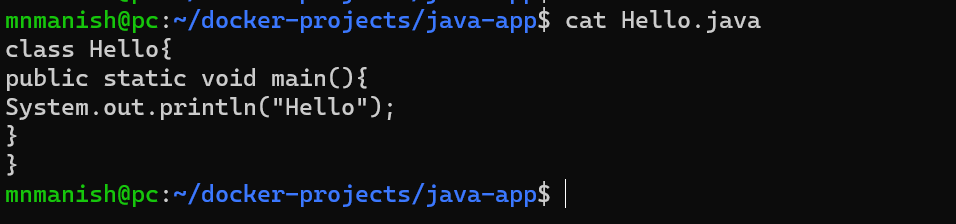

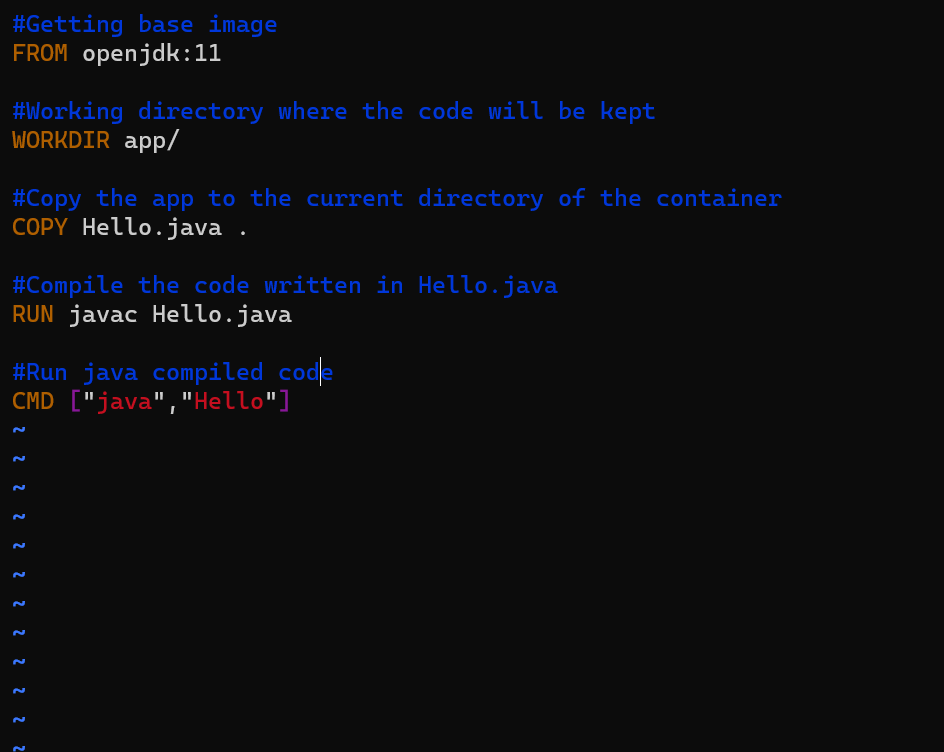

How to create a Dockerfile for a Java application?

vim Hello.java: To create the Java source file

cat Hello.java: To show the contents of the file

vim Dockerfile: To create the Dockerfile

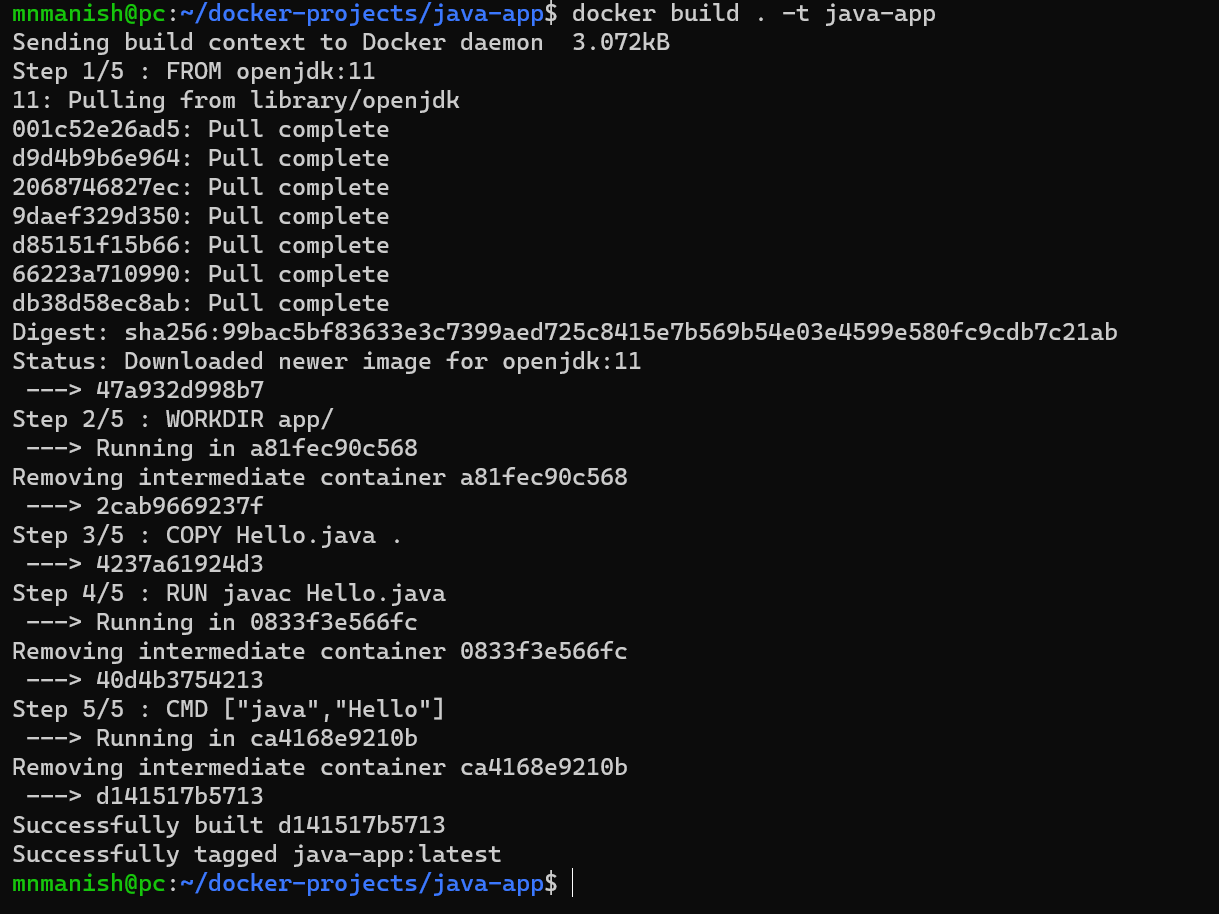
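The contents of the two files are only visible in the screenshot, so the following is a hypothetical reconstruction rather than the author's exact files. The sketch writes a minimal Hello.java and a matching Dockerfile into a directory, which then serves as the build context. The base image tag eclipse-temurin:17-jdk, the printed message, and the function name write_build_context are assumptions chosen for illustration.

```python
import tempfile
from pathlib import Path

# Hypothetical Java program: prints one line and exits.
HELLO_JAVA = """public class Hello {
    public static void main(String[] args) {
        System.out.println("Hello from Docker!");
    }
}
"""

# Hypothetical Dockerfile: compile at build time, run the class at start.
DOCKERFILE = """# Base image with a JDK (assumed tag; use one that matches your Java version)
FROM eclipse-temurin:17-jdk
WORKDIR /app
COPY Hello.java .
RUN javac Hello.java
CMD ["java", "Hello"]
"""


def write_build_context(directory):
    """Create Hello.java and Dockerfile in directory and return the file names."""
    directory = Path(directory)
    directory.mkdir(parents=True, exist_ok=True)
    (directory / "Hello.java").write_text(HELLO_JAVA)
    (directory / "Dockerfile").write_text(DOCKERFILE)
    return sorted(path.name for path in directory.iterdir())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(write_build_context(tmp))
```

Running docker build inside such a directory compiles the class during the build, and a container started from the resulting image runs java Hello once and exits.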

How to build an image from a Dockerfile?

docker build . -t java-app: To build an image named java-app from the Dockerfile in the current directory

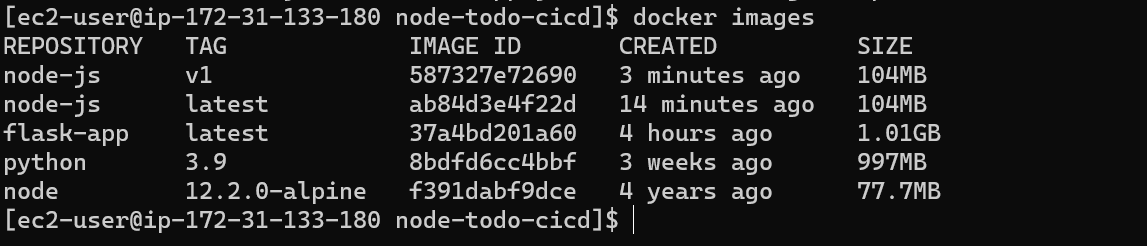

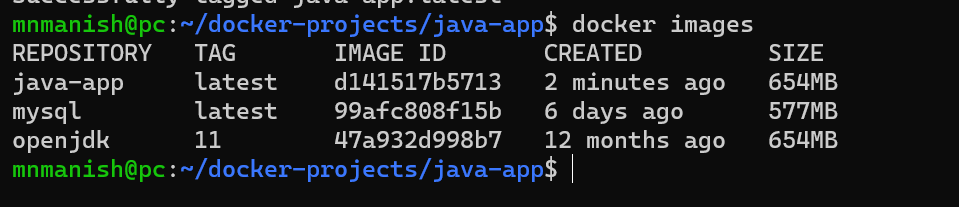

docker images: To list all the images(java-app image has been built)

How to make a container from a docker image?

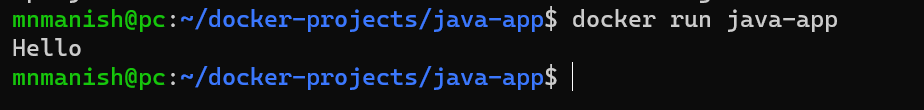

docker run java-app: To create and start a container from the java-app image

How to deploy a Flask app using docker and aws?

vim app.py: To create the Python file

NOTE: (You can ask ChatGPT for Flask app code that listens on port 5000 and binds to all network interfaces, and also ask which Python version is needed to run the application)

Code for flask app

from flask import Flask, render_template, request

app = Flask(__name__)

@app.route('/')
def home():
    return "Welcome to the Flask App!"

@app.route('/form', methods=['GET', 'POST'])
def form():
    # This route needs templates/form.html and templates/message.html next to app.py
    if request.method == 'POST':
        name = request.form['name']
        message = f"Hello, {name}!"
        return render_template('message.html', message=message)
    return render_template('form.html')

if __name__ == '__main__':
    # Binding to 0.0.0.0 makes the app reachable from outside the container
    app.run(host='0.0.0.0', port=5000, debug=True)

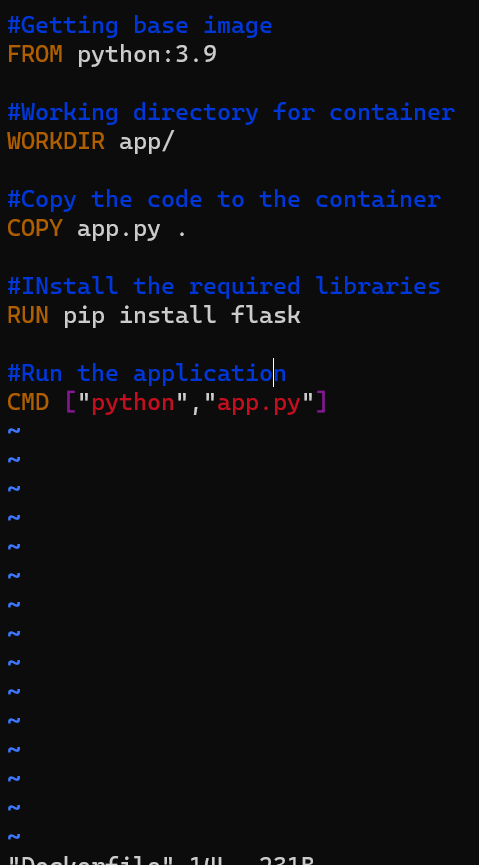

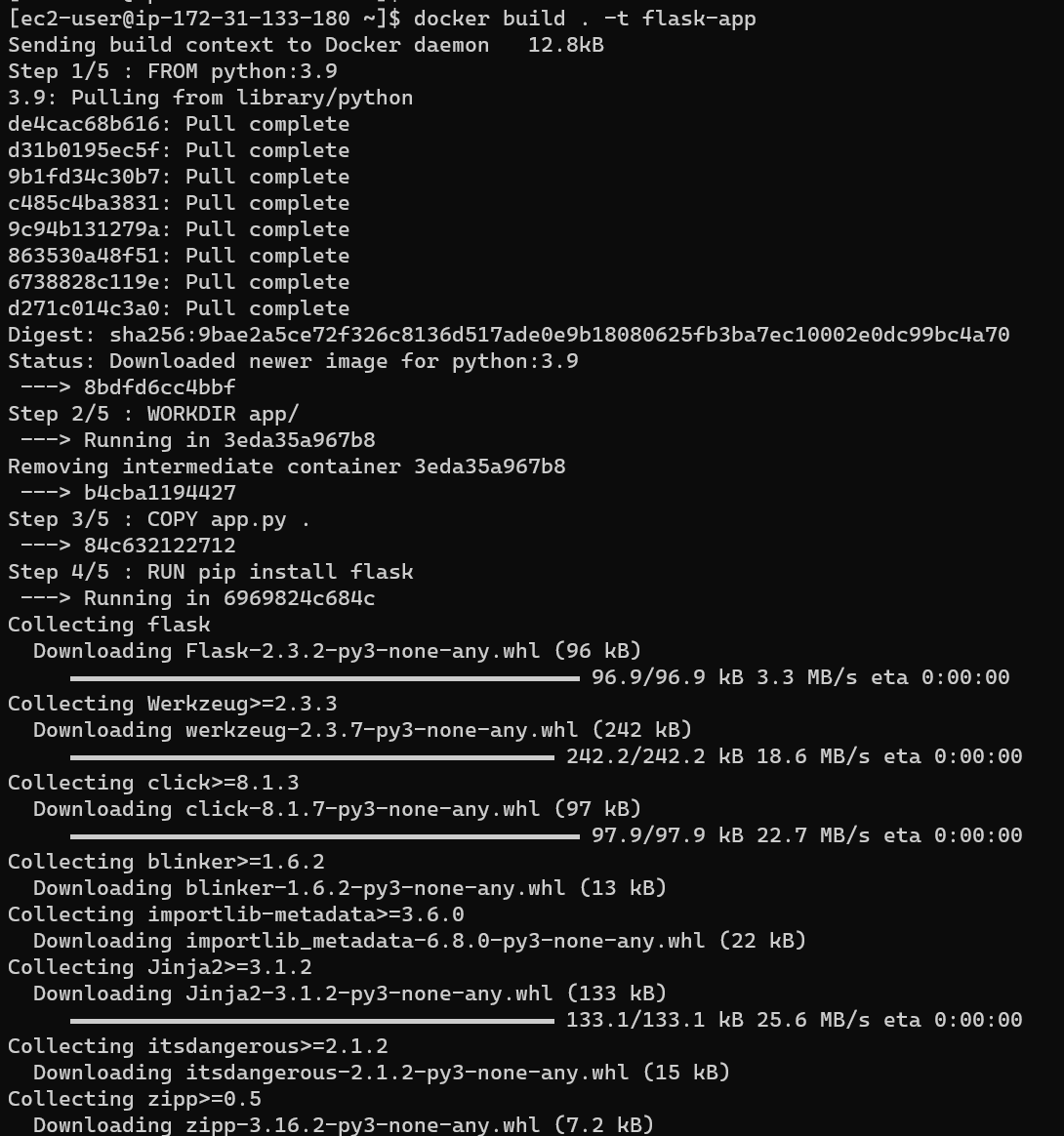

vim Dockerfile: To create Dockerfile for the application

#Get the base image

FROM python:3.9

#Set the working directory inside the container

WORKDIR /app

#Copy the code into the container

COPY app.py .

#Install the required libraries

RUN pip install flask

#Run the application

CMD ["python","app.py"]

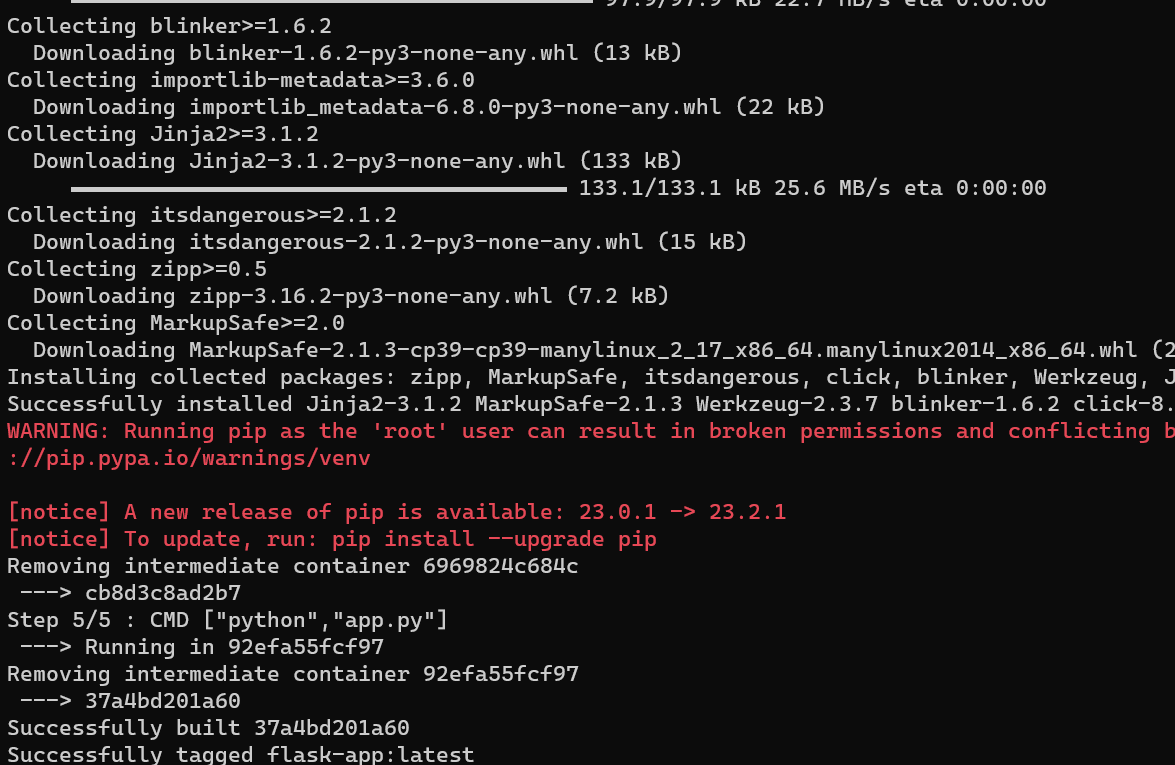

docker build . -t flask-app: To build the image from the Dockerfile

docker images: To view all the images

docker run -d -p 5000:5000 flask-app: To run the flask-app image in detached mode, mapping host port 5000 to container port 5000

docker ps: To view all the running containers

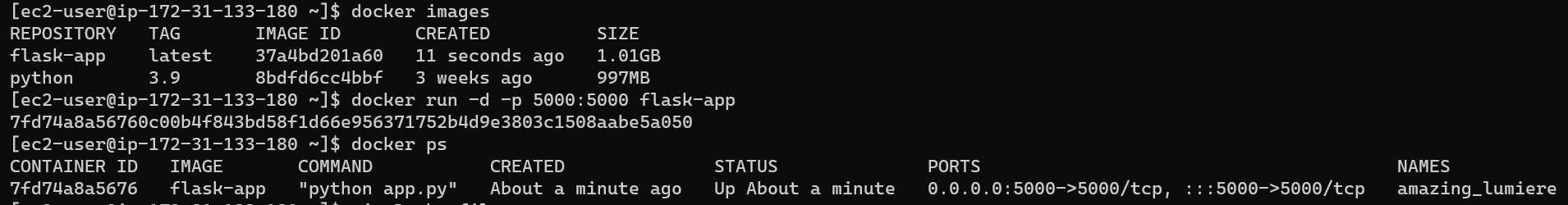

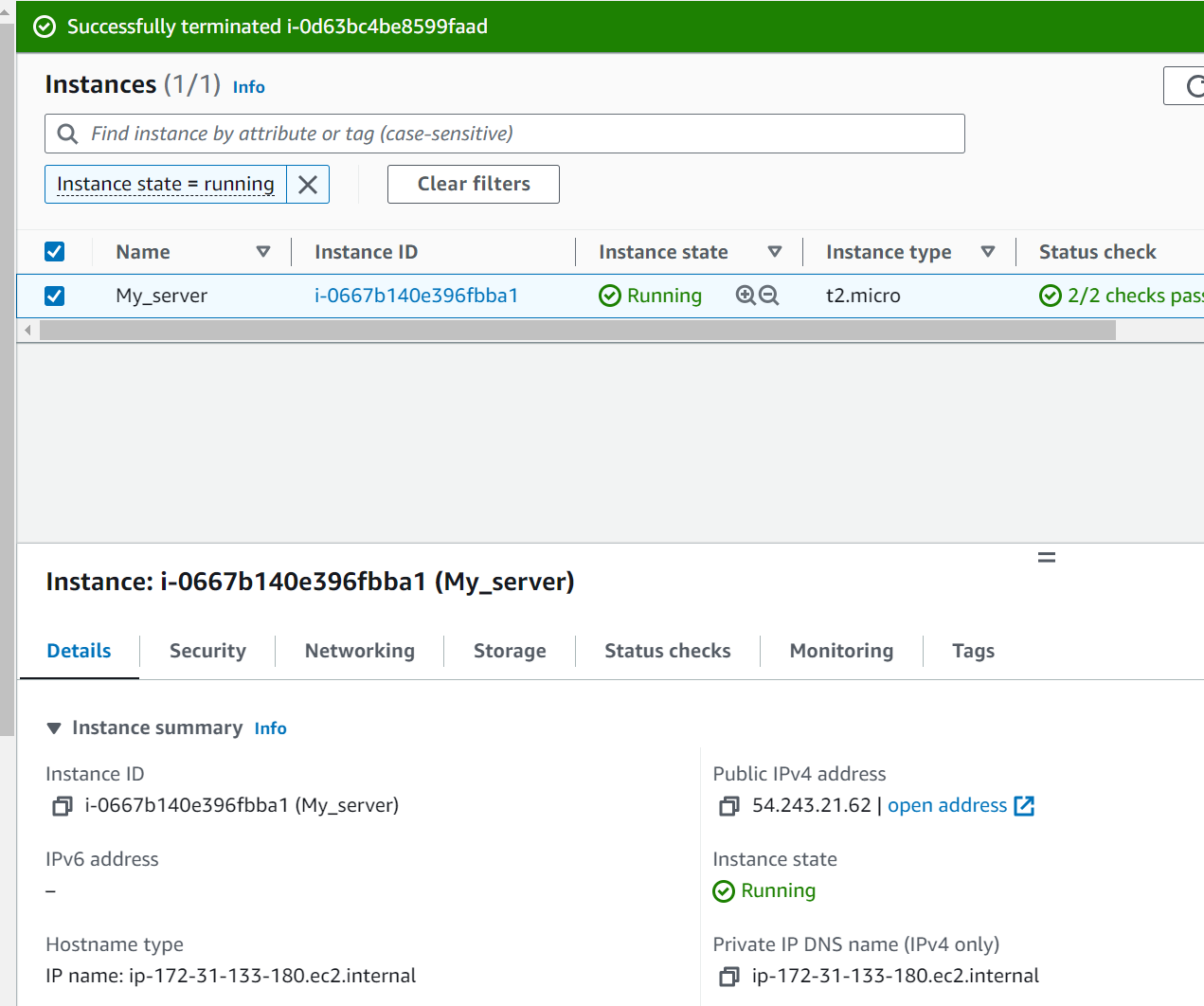

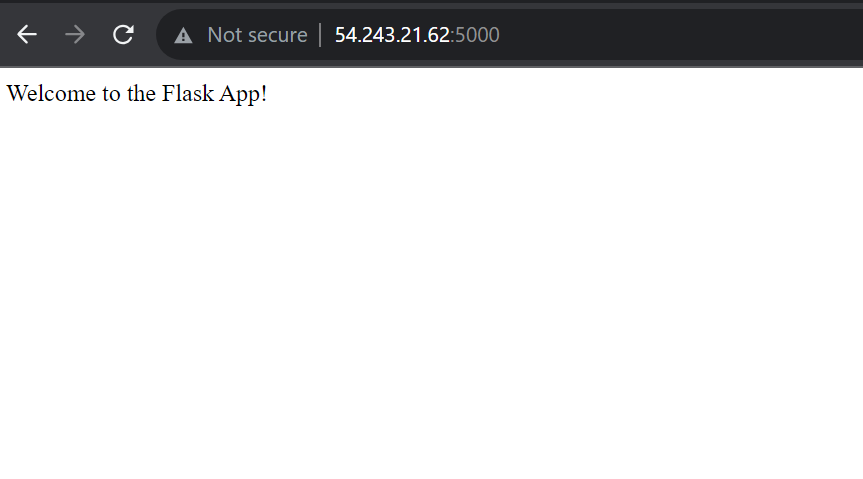

=> Now go to AWS, open the EC2 instance, copy its public IPv4 address, and open that address followed by :5000 (the mapped host port) in the browser.

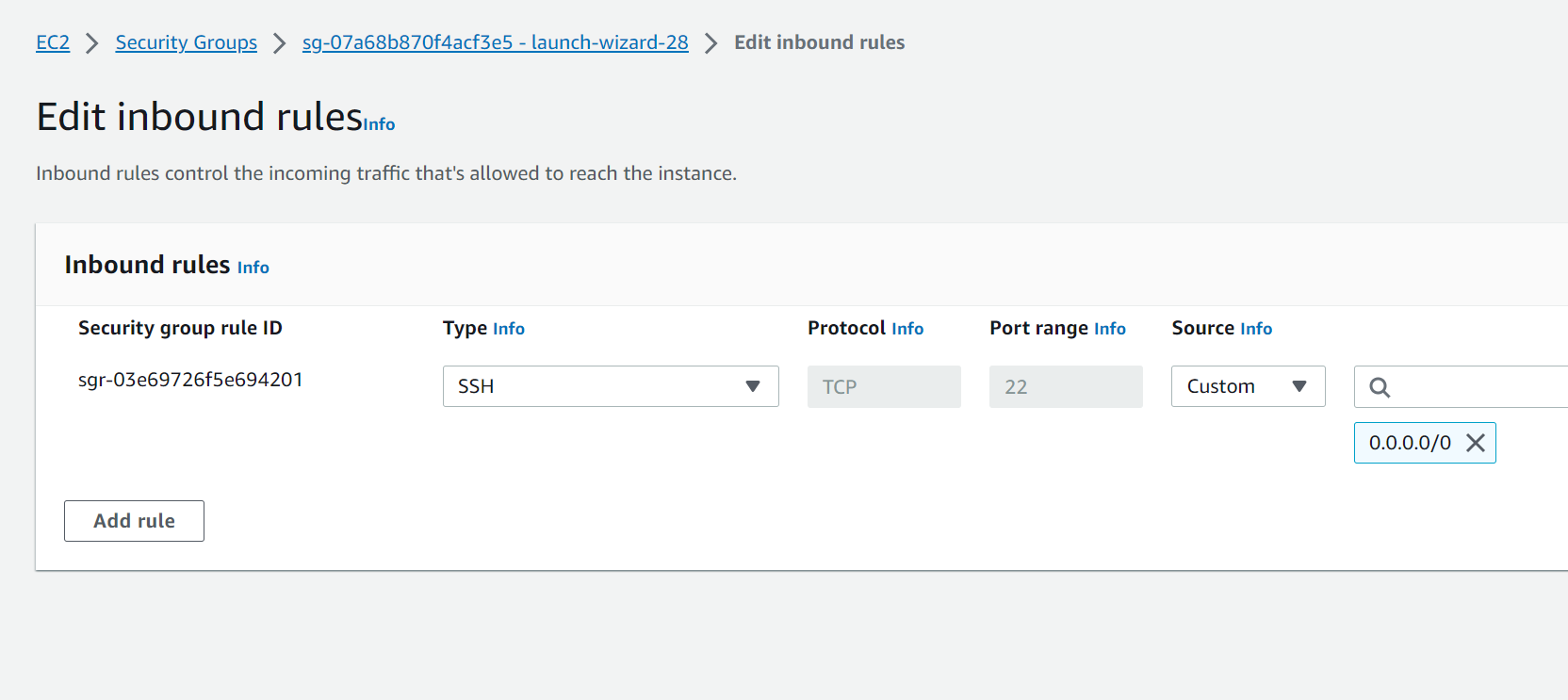

-> The application will still not load, because the security group of the EC2 instance must first allow inbound traffic on port 5000.

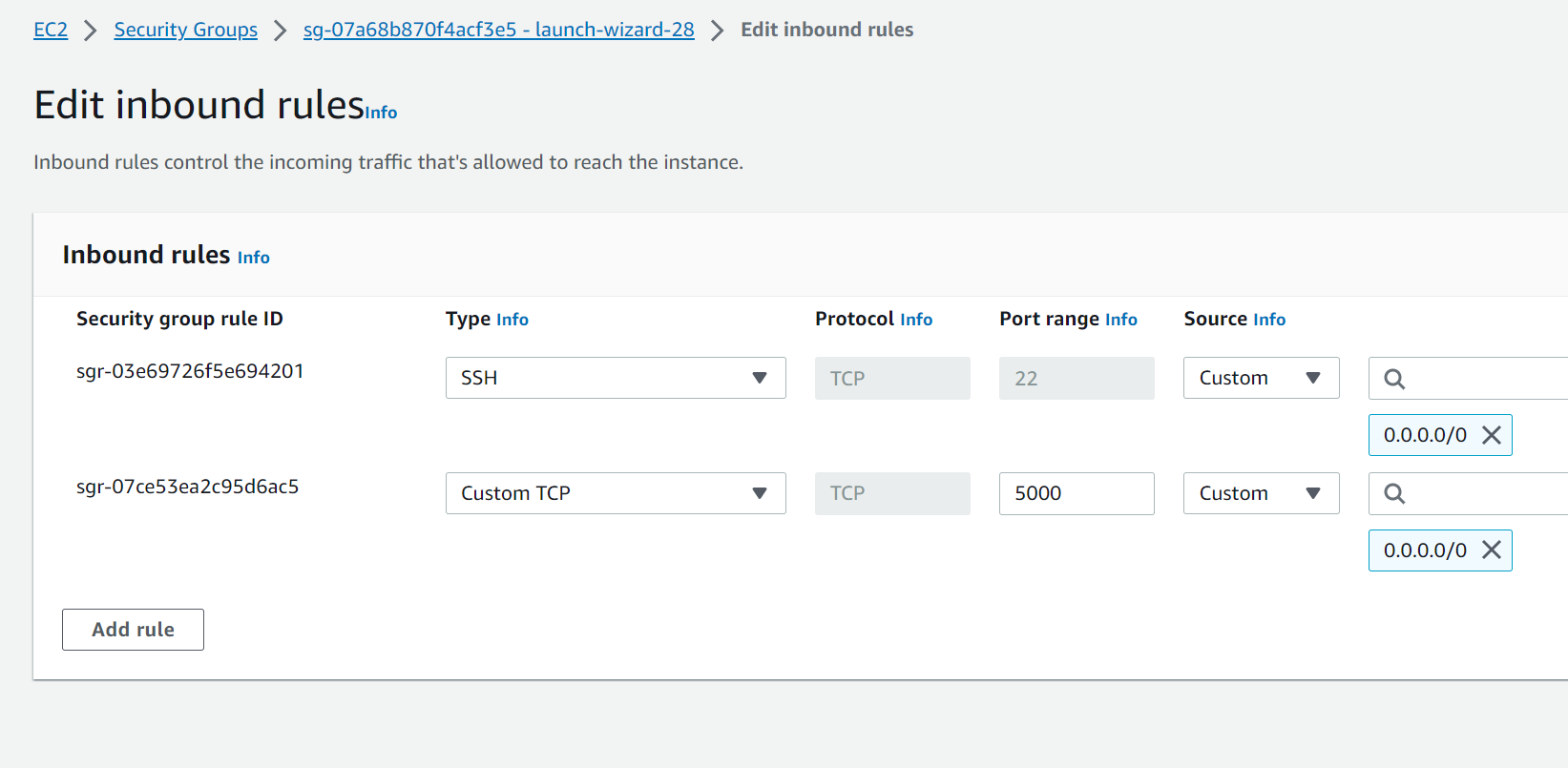

-> In the security group, add an inbound rule of type Custom TCP for port 5000 with source Anywhere, and save.

-> Now reload the EC2 public IP address in the browser; the application is reachable and has been deployed successfully.
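Whether the port is reachable can also be checked without a browser. The sketch below attempts a TCP connection to a host and port and reports whether it succeeded, which separates a blocked port (security group rule missing or container not published) from a problem inside the application. The function name port_is_open and the 3 second timeout are illustrative choices.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Raised for refused connections, timeouts, and unreachable hosts.
        return False


# Example call (replace the placeholder with the EC2 public IPv4 address):
# port_is_open("<ec2-public-ip>", 5000)
```

A False result before the inbound rule is added and True afterwards confirms that the security group was the blocker. A False result on the instance itself against 127.0.0.1 points to the container or the port mapping instead.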

INTERVIEW QUESTION

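What are the 3 scenarios in which the container is accessible or not accessible?

No EXPOSE and no -p: The application's port is not published on the host, so it cannot be reached through the host's ports. Containers on the same Docker network can still connect to it.

EXPOSE PORT: EXPOSE in the Dockerfile only documents which port the application listens on. It does not publish the port by itself, so the container is still reachable only from other containers on the same network, not through the host system (unless it is started with -P, which publishes every exposed port on a random host port).

-p 8000:8000 -> Publishes container port 8000 on host port 8000, so the application is reachable through the host system.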

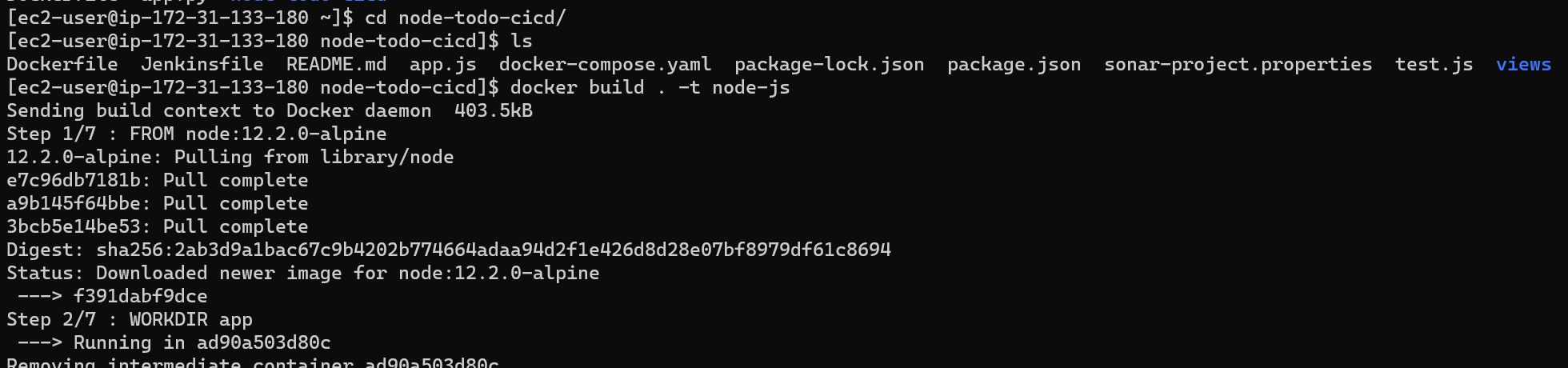

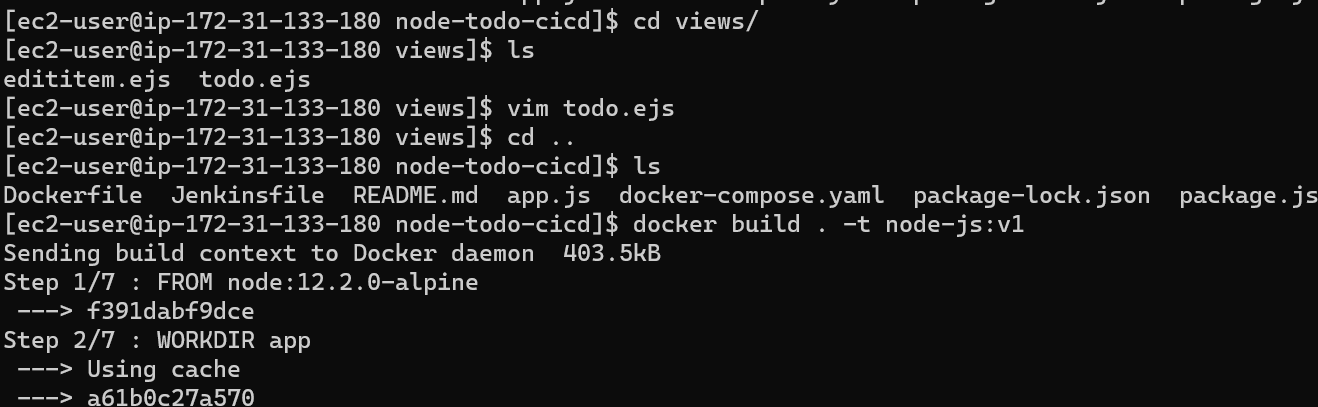

How to deploy node-js application using docker and aws?

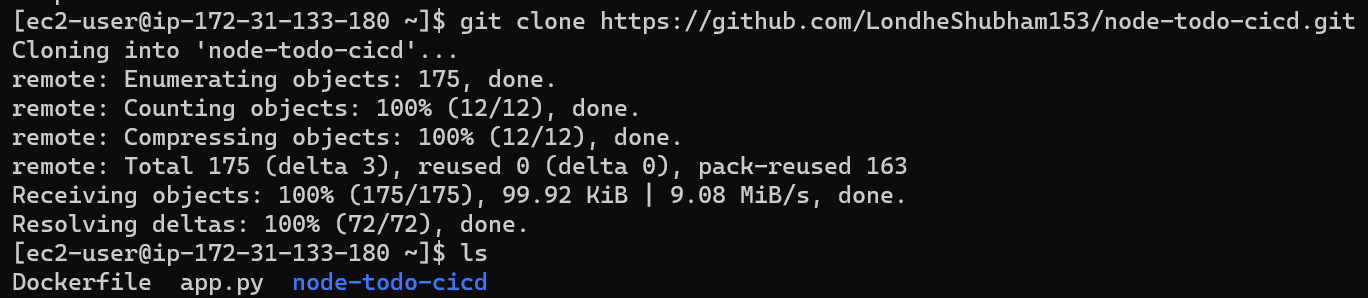

git clone https://github.com/LondheShubham153/node-todo-cicd.git

-> To copy the code to the local machine

docker build . -t node-js:latest -> To build the image of the Node.js application

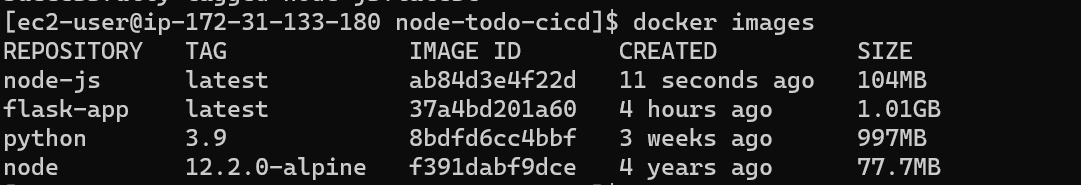

docker images: To view all the images

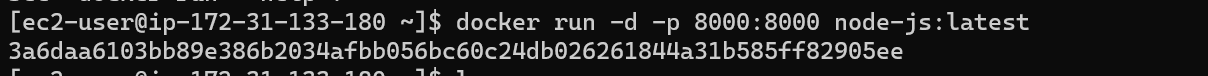

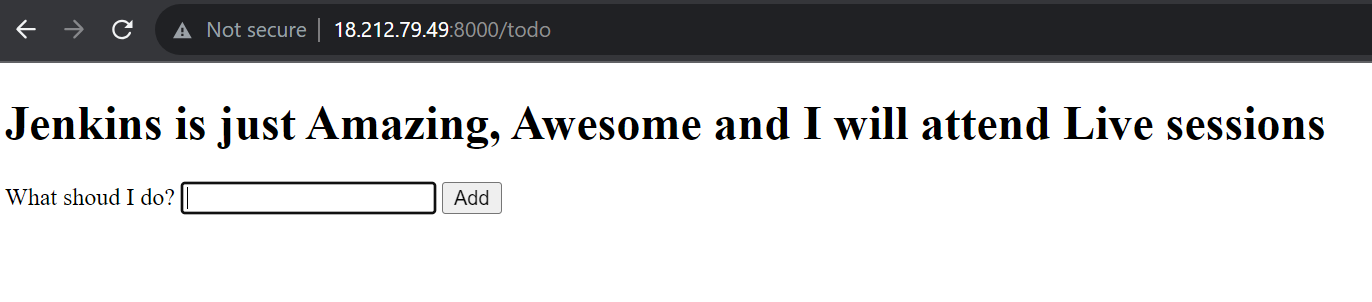

docker run -d -p 8000:8000 node-js:latest -> To create and run a container from the image of the Node.js application

-> Now go to the EC2 instance, add an inbound rule for port 8000 to its security group, and save.

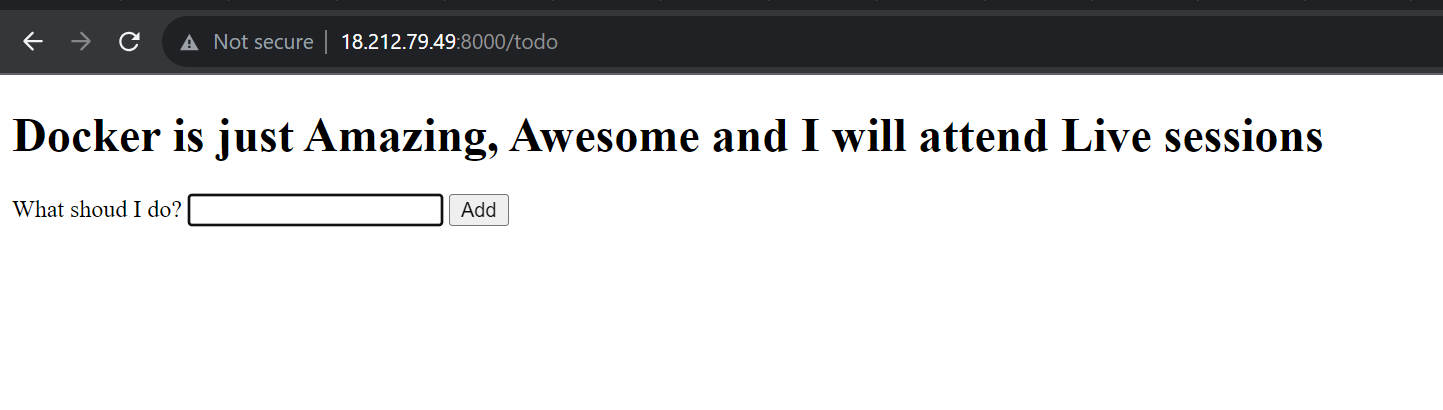

Go to the views directory and edit the file todo.ejs

vim todo.ejs: To edit the file (replace Jenkins with Docker)

docker build . -t node-js:v1 -> To build the image again under a new tag, because node-js:latest still contains the code that displays the "Jenkins" line

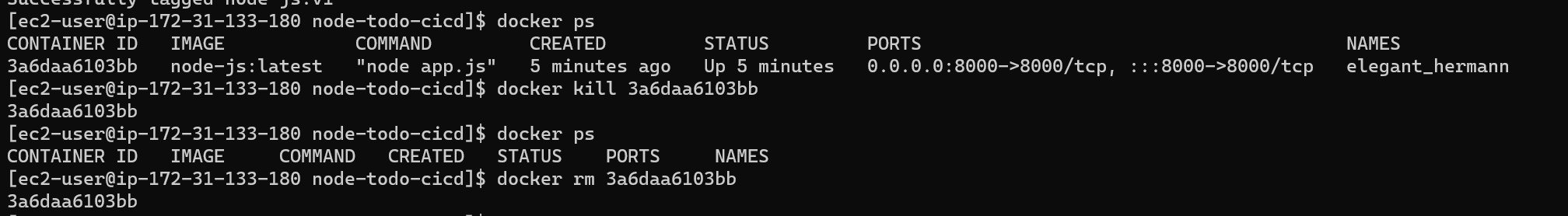

docker ps: To view all the running containers (node-js:latest is running)

docker kill 3a6daa6103bb: To kill the running container

docker rm 3a6daa6103bb: To remove the container

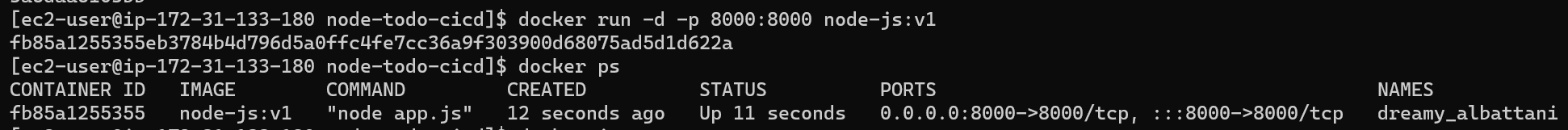

docker run -d -p 8000:8000 node-js:v1 -> To create and run a container from the new image, which displays the "Docker" line

docker images: To view all the images
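The update cycle above (rebuild under a new tag, kill and remove the old container, start a new one) follows the same order every time, so it can be written down as a sequence. The sketch below only generates the commands, in the order used in this article. The function name redeploy_commands and its parameters are illustrative, and nothing is executed.

```python
import shlex


def redeploy_commands(image, new_tag, old_container_id, port):
    """Return the docker commands for replacing a running container, in order."""
    tagged_image = f"{image}:{new_tag}"
    return [
        # 1. Build the changed code into an image with a new tag.
        ["docker", "build", ".", "-t", tagged_image],
        # 2. Kill and remove the old container so the host port is free.
        ["docker", "kill", old_container_id],
        ["docker", "rm", old_container_id],
        # 3. Start a container from the new image on the same port.
        ["docker", "run", "-d", "-p", f"{port}:{port}", tagged_image],
    ]


if __name__ == "__main__":
    for command in redeploy_commands("node-js", "v1", "3a6daa6103bb", 8000):
        print(shlex.join(command))
```

The old container has to go first because two containers cannot publish the same host port at once. Each list can be handed to subprocess.run(command, check=True) on a machine with Docker to run the steps for real.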
