Anything-LLM: Empowering Conversations with Your Documents

Ajay Ravi

Introduction

In AI tooling, the idea of "chatting with your documents" has gained significant traction. Anything-LLM is an open-source application that lets users hold conversations with their own documents. In this post, we will look at Anything-LLM's architecture, its key features, and the benefits it brings to users. By the end, you'll have a clear picture of how Anything-LLM changes the way you interact with documents.

Understanding LLM:

LLM stands for "Large Language Model." It refers to a class of artificial intelligence models that are designed to understand and generate human-like text. LLMs are trained on vast amounts of text data and can perform various language-related tasks, such as language translation, text generation, question answering, and more. These models can process and generate coherent and contextually relevant text, making them valuable tools for natural language processing and understanding. Prominent examples of LLMs include GPT-3 (Generative Pre-trained Transformer 3) and its successors, which have garnered attention for their impressive language capabilities.

Understanding Anything-LLM:

Anything-LLM is a full-stack application that transforms your documents into intelligent chatbots. It leverages advanced technologies like LangChain, Pinecone, and Chroma to facilitate seamless conversations. The primary objective of Anything-LLM is to provide an accessible and user-friendly interface for interacting with documents, regardless of the user's technical expertise.

Architecture and Technologies:

LangChain: LangChain is an open-source framework for building applications on top of large language models, and it forms the backbone of Anything-LLM. It does not understand or generate text by itself. Instead, it connects the pieces around a model, such as document loading, text splitting, prompt construction, and retrieval from a vector database, so that the model receives the relevant context and can produce natural, coherent responses.

Pinecone: Pinecone is a vector database that plays a crucial role in Anything-LLM. It allows for efficient similarity search, enabling quick retrieval of relevant information. Pinecone's speed and scalability make it an ideal choice for storing and retrieving document embeddings. By mapping high-dimensional vectors to a searchable index, Pinecone enables fast and accurate document retrieval, enhancing the conversational experience.

Chroma: Chroma is an open-source vector database that can be used with Anything-LLM as an alternative to Pinecone, offering flexibility in vector storage options. Because it can run locally, it suits users who prefer not to depend on a hosted service. Like Pinecone, it provides efficient similarity search, making it a suitable choice for storing and retrieving document embeddings.
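
To make "similarity search" concrete, here is a minimal sketch in plain Python of what a vector database does conceptually: it scores stored embeddings against a query embedding and returns the closest matches. It does not use the Pinecone or Chroma APIs, and the document names and three-dimensional vectors are made up for illustration; real embeddings have hundreds or thousands of dimensions, and real databases use approximate indexes rather than a full scan.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Brute-force search: score every stored vector, keep the k best."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical document embeddings (illustrative values only).
index = {
    "pricing.md": [0.9, 0.1, 0.0],
    "onboarding.md": [0.1, 0.8, 0.3],
    "changelog.md": [0.0, 0.2, 0.9],
}

# Hypothetical embedding of the user's question.
results = top_k([1.0, 0.2, 0.0], index, k=2)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```

The documents returned by this kind of search are what get passed to the language model as context for answering the question.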

Key Features of Anything-LLM:

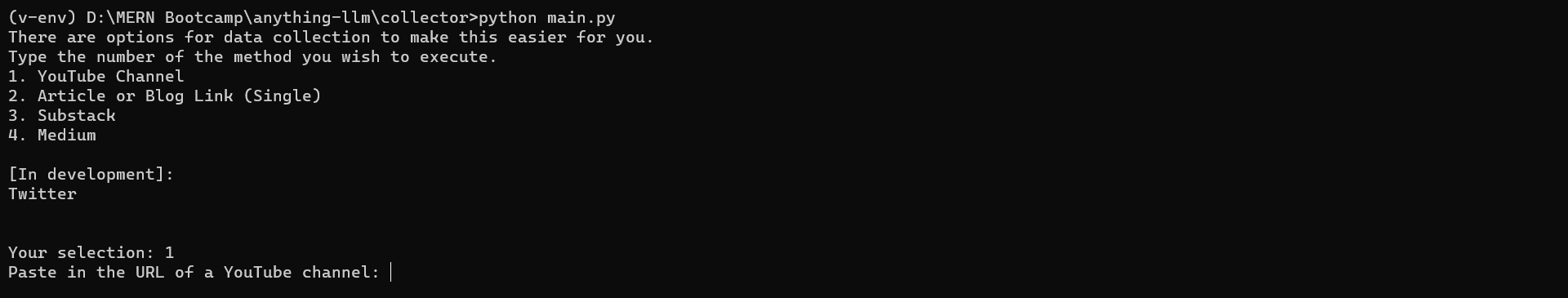

Document Collection: Anything-LLM provides tools to collect documents from various sources, including YouTube channels, Substack newsletters, Medium articles, GitBook sites, and more. This feature lets users bring their existing content into the chatbot and start conversing with it right away.

User-Friendly Interface: Anything-LLM has a clean, intuitive user interface for managing documents. It provides features for adding, removing, and organizing documents within the chatbot, so users can navigate their document collections and find relevant information easily.

Cost Optimization: Anything-LLM incorporates vector caching, which significantly reduces the costs associated with embedding documents. Once a document is embedded and paid for, subsequent embeddings of the same document are free. This cost-saving feature is particularly beneficial for users with large document collections, as it eliminates the need to pay for embedding the same document multiple times.
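
The idea behind vector caching can be sketched in a few lines of Python. This is only an illustration of the principle, not Anything-LLM's actual implementation: the `EmbeddingCache` class, the content-hash key, and the `fake_embed` function (a stand-in for a paid embedding API) are all assumptions made for the example.

```python
import hashlib


class EmbeddingCache:
    """Caches embeddings by content hash so identical text is embedded once."""

    def __init__(self, embed_fn):
        self._embed_fn = embed_fn  # the (possibly paid) embedding function
        self._store = {}           # content hash -> embedding vector
        self.paid_calls = 0        # how many times embed_fn was really called

    def embed(self, text):
        key = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if key not in self._store:
            # Cache miss: this is the only path that costs money.
            self._store[key] = self._embed_fn(text)
            self.paid_calls += 1
        return self._store[key]


def fake_embed(text):
    """Hypothetical stand-in for a paid embedding API call."""
    return [len(text), sum(map(ord, text)) % 97]


cache = EmbeddingCache(fake_embed)
first = cache.embed("Quarterly report")
second = cache.embed("Quarterly report")  # served from the cache
print(cache.paid_calls)
```

In this sketch the second request for the same text never reaches the embedding function, which is the behavior that makes re-embedding an already processed document free.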

Logical Partitions: Anything-LLM introduces the concept of workspaces, which act as logical partitions for organizing documents. Workspaces enable users to group related documents and maintain separate memories for different contexts or topics. This feature enhances organization and flexibility, allowing users to manage and access their documents more efficiently.
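
As a rough mental model, a workspace can be thought of as a named bucket that holds its own documents and its own chat memory. The Python sketch below illustrates that separation; the `Workspaces` class and its method names are hypothetical and do not reflect Anything-LLM's internal data model.

```python
from collections import defaultdict


class Workspaces:
    """Keeps documents and chat history separate per workspace name."""

    def __init__(self):
        self._documents = defaultdict(set)  # workspace -> document names
        self._history = defaultdict(list)   # workspace -> (question, answer) pairs

    def add_document(self, workspace, doc_name):
        self._documents[workspace].add(doc_name)

    def record_chat(self, workspace, question, answer):
        self._history[workspace].append((question, answer))

    def documents(self, workspace):
        return sorted(self._documents[workspace])

    def history(self, workspace):
        return list(self._history[workspace])


ws = Workspaces()
ws.add_document("legal", "contract.pdf")
ws.add_document("marketing", "launch-plan.md")
ws.record_chat("legal", "Who signs the contract?", "The vendor and the client.")

print(ws.documents("legal"))
print(ws.history("marketing"))
```

A question asked in the "legal" workspace only sees the legal documents and the legal conversation, which is what keeps contexts from bleeding into each other.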

Persistent Storage: Anything-LLM stores all documents, embeddings, and chats locally using an SQLite database. This ensures that data is preserved even if the application is shut down, allowing for seamless continuation of conversations upon restarting. The persistent storage feature ensures that users can easily resume their interactions with their documents without any loss of data.
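
The following sketch shows, using Python's built-in `sqlite3` module, how chat history written to an SQLite file survives an application restart. The `chats` table, its columns, and the helper function names are assumptions made for this example and are not Anything-LLM's real schema.

```python
import os
import sqlite3
import tempfile


def open_store(path):
    """Open (or create) the database file and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS chats ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "workspace TEXT NOT NULL, "
        "role TEXT NOT NULL, "
        "message TEXT NOT NULL)"
    )
    conn.commit()
    return conn


def save_message(conn, workspace, role, message):
    conn.execute(
        "INSERT INTO chats (workspace, role, message) VALUES (?, ?, ?)",
        (workspace, role, message),
    )
    conn.commit()


def load_history(conn, workspace):
    """Return (role, message) rows for one workspace in insertion order."""
    cursor = conn.execute(
        "SELECT role, message FROM chats WHERE workspace = ? ORDER BY id",
        (workspace,),
    )
    return cursor.fetchall()


# Hypothetical database location in a temporary directory.
db_path = os.path.join(tempfile.mkdtemp(), "chat-store.db")

conn = open_store(db_path)
save_message(conn, "research", "user", "Summarize chapter 2.")
save_message(conn, "research", "assistant", "Chapter 2 covers data collection.")
conn.close()  # simulate shutting the application down

conn = open_store(db_path)  # simulate restarting it
restored = load_history(conn, "research")
conn.close()
print(restored)
```

Because the data lives in a file on disk rather than in memory, reopening the same file brings the earlier conversation back.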

Benefits of Anything-LLM:

Accessibility: Anything-LLM aims to be accessible to users of all technical backgrounds. By providing a user-friendly interface and simplifying the document integration process, it enables a broader audience to engage in meaningful conversations with their documents. The intuitive interface and straightforward workflows make it easy for users to navigate and interact with their document collections.

Enhanced Document Interaction: Anything-LLM empowers users to have dynamic and interactive conversations with their documents. Whether it's extracting insights, seeking information, or generating ideas, Anything-LLM facilitates a seamless and engaging experience. Users can ask questions, receive relevant responses, and conversationally explore their documents, enhancing their understanding and utilization of the information.

Cost Savings: The vector caching feature of Anything-LLM significantly reduces costs associated with embedding documents. This makes it an economical choice for users with extensive document collections, potentially saving them thousands of dollars. By eliminating the need to pay for embedding the same document multiple times, Anything-LLM provides a cost-effective solution for document interaction.

Organization and Flexibility: With workspaces and logical partitions, Anything-LLM offers a structured approach to document management. Users can easily organize and categorize their documents, enabling efficient retrieval and context-specific conversations. The logical partitions feature allows users to maintain separate memories for different workspaces, facilitating focused and relevant interactions with their documents.

What are Private LLMs and Local LLMs?

Private LLMs and local LLMs are language model deployments that prioritize privacy and local processing, respectively. Let's explore each concept and see where Anything-LLM can have an advantage over them.

Private LLM: Private LLM refers to a language model that operates with a focus on privacy. It aims to address concerns related to data privacy and security by ensuring that user data remains confidential and is not shared with external entities. Private LLMs typically run on local devices or private servers, allowing users to have more control over their data.

Local LLM: Local LLM, on the other hand, refers to a language model that runs entirely on the user's local device, without relying on external servers or cloud-based infrastructure. This approach offers benefits such as reduced latency, improved data privacy, and the ability to operate offline. Local LLMs are particularly useful in scenarios where internet connectivity may be limited or when users prefer to keep their data local.

Now, let's discuss where Anything-LLM can outperform these setups:

Accessibility: While Private LLMs prioritize privacy, they often require significant technical expertise and resources to set up and maintain. Anything-LLM, on the other hand, provides a user-friendly interface and simplifies the process of interacting with documents. It offers a comprehensive package that allows users to collect, process, and chat with their documents seamlessly, making it more accessible to a wider range of users.

Scalability: Private LLMs and Local LLMs may face limitations in terms of scalability. Processing large document collections or handling high volumes of queries can be challenging for these models, especially when running on resource-constrained devices. Anything-LLM, with its efficient vector caching and scalable technologies like Pinecone and Chroma, can handle large document collections and simultaneous queries effectively, ensuring optimal performance and responsiveness.

Cost Efficiency: Private LLMs often require dedicated infrastructure and resources, which can be costly to set up and maintain. Local LLMs may also incur high computational costs, especially when processing large amounts of data. Anything-LLM addresses these concerns by optimizing costs through vector caching. Once a document is embedded and paid for, subsequent embeddings of the same document are free, resulting in significant cost savings for users with extensive document collections.

Enhanced User Experience: Anything-LLM offers a clean interface with intuitive features for managing and interacting with documents. It provides logical partitions (workspaces) that allow users to organize their documents effectively and maintain separate memories for different contexts. This makes it easier to navigate and engage with the documents.

Conclusion:

Anything-LLM represents a significant step forward in document interaction. By building on technologies such as LangChain, Pinecone, and Chroma and providing a user-friendly interface, it lets users hold dynamic conversations with their documents. With features like document collection, cost optimization, logical partitions, and persistent storage, Anything-LLM offers a comprehensive solution for document management and interaction. With its focus on accessibility, user experience, and cost savings, it helps users get more out of their ever-expanding knowledge base. Anything-LLM aims to be the most user-centric open-source document chatbot, with upcoming integrations for Google Drive, GitHub repos, and more.

Link to repository: https://github.com/Mintplex-Labs/anything-llm

Written by

Ajay Ravi

Unleashing Web Development Superpowers while Diving into the Exciting Realms of Blockchain and DevOps