Kubeadm Installation on Master and Worker Nodes: Day31 & Day32

Namrata Kumari

What is a Deployment in k8s?

A Deployment provides declarative updates for Pods and ReplicaSets.

You describe a desired state in a Deployment, and the Deployment Controller changes the actual state to the desired state at a controlled rate. You can define Deployments to create new ReplicaSets, or to remove existing Deployments and adopt all their resources with new Deployments.

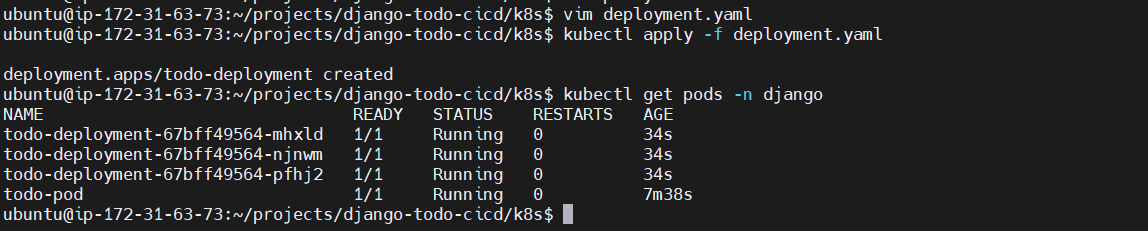

Create a Deployment file to deploy a sample todo-app on K8s using the "Auto-healing" and "Auto-Scaling" features.

Add a deployment.yml file (a sample is kept in the folder for your reference).

Apply the deployment to your k8s (minikube) cluster with the command:

kubectl apply -f deployment.yml

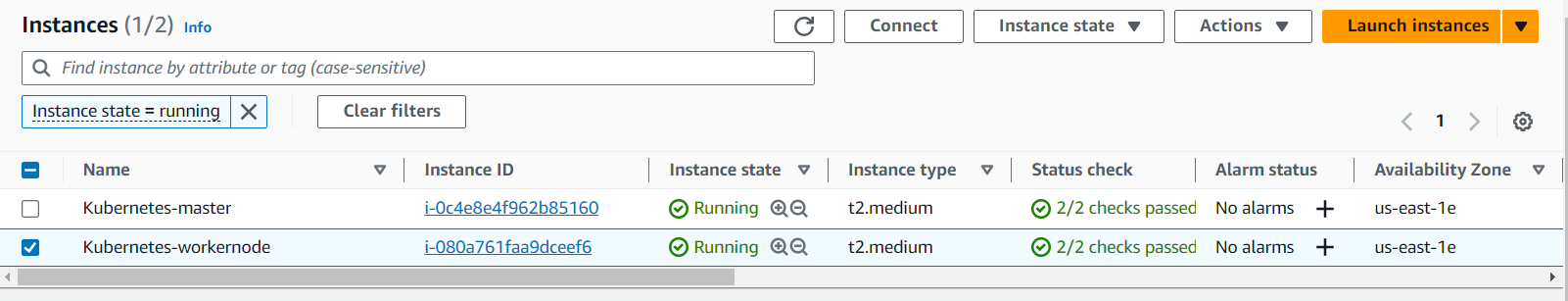

Pre-requisites:

Ubuntu OS (Xenial or later)

sudo privileges

Internet access

t2.medium instance type or higher
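kubeadm's installation docs ask for at least 2 GB of RAM per machine and 2 CPUs on control-plane machines, which is why a t2.medium (2 vCPUs, 4 GiB) is the smallest instance listed. As a minimal sketch, the hypothetical `check_node_resources` function below (not part of kubeadm, which runs its own pre-flight checks during `init` and `join`) compares a node against those figures. The 1800 MiB default is an assumption that leaves some headroom, because `MemTotal` reads slightly below the nominal RAM size.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a kubeadm command): compare this machine's CPU
# count and total memory against minimums passed as arguments.
# Defaults: 2 CPUs and 1800 MiB (an assumed margin below 2 GiB, since
# MemTotal excludes memory reserved by the kernel and firmware).
check_node_resources() {
  local min_cpus="${1:-2}"
  local min_mem_mib="${2:-1800}"
  local cpus mem_kib mem_mib

  cpus=$(nproc)
  # MemTotal in /proc/meminfo is reported in kB (KiB).
  mem_kib=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  mem_mib=$(( mem_kib / 1024 ))

  echo "CPUs: ${cpus} (need ${min_cpus}), memory: ${mem_mib} MiB (need ${min_mem_mib})"

  if [ "$cpus" -lt "$min_cpus" ] || [ "$mem_mib" -lt "$min_mem_mib" ]; then
    echo "This node is below the requested minimums." >&2
    return 1
  fi
  return 0
}
```

For example, run `check_node_resources` on the master node and `check_node_resources 1` on a worker, since the 2-CPU requirement applies to control-plane machines.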

Master Node & Worker Node Setup:

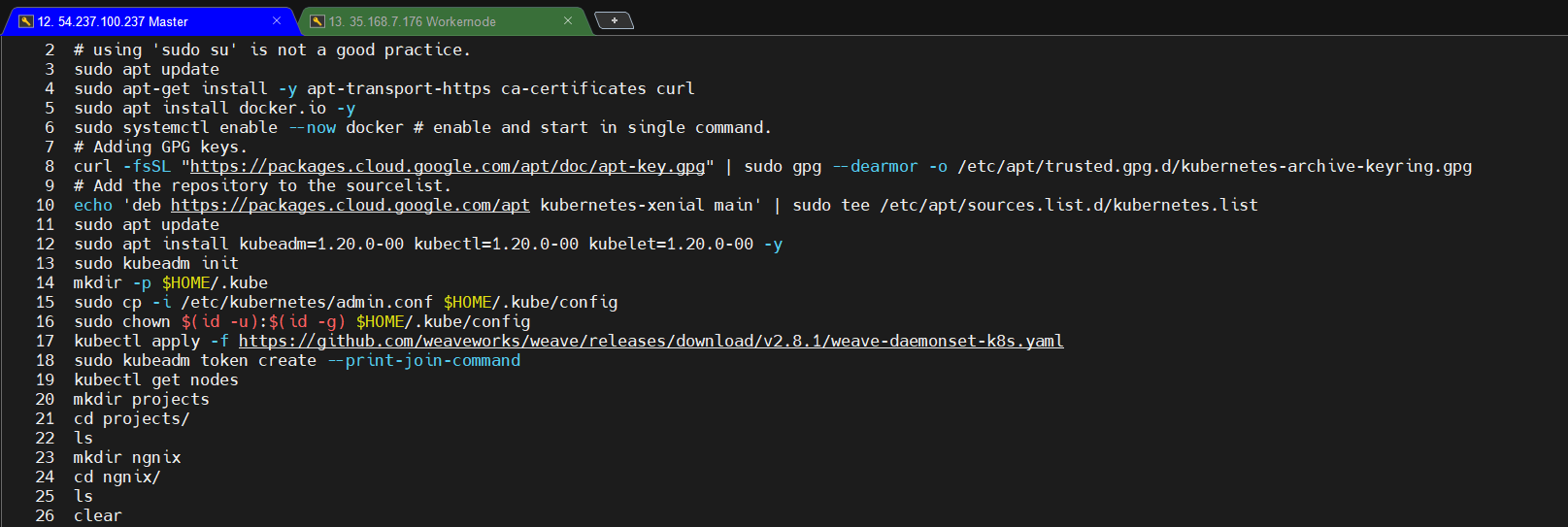

Run the following commands on both the master and worker nodes to prepare them for kubeadm.

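Using 'sudo su' is not a good practice, so each command below uses sudo instead.

sudo apt update

sudo apt-get install -y apt-transport-https ca-certificates curl

sudo apt install docker.io -y

sudo systemctl enable --now docker  # enable and start Docker in a single command

Add the GPG key for the Kubernetes package repository:

curl -fsSL "https://packages.cloud.google.com/apt/doc/apt-key.gpg" | sudo gpg --dearmor -o /etc/apt/trusted.gpg.d/kubernetes-archive-keyring.gpg

Add the repository to the sources list, then install pinned versions of kubeadm, kubectl and kubelet:

echo 'deb https://packages.cloud.google.com/apt kubernetes-xenial main' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt update

sudo apt install kubeadm=1.20.0-00 kubectl=1.20.0-00 kubelet=1.20.0-00 -y

Note: this Google-hosted repository was deprecated and frozen in September 2023 in favour of the community-owned pkgs.k8s.io repositories, which only carry Kubernetes v1.24 and later, so the 1.20.0 packages above may no longer be installable.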

Master Node

Initialize the Kubernetes master node:

sudo kubeadm init

Set up the local kubeconfig (for both the root user and a normal user):

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Apply the Weave network add-on:

kubectl apply -f https://github.com/weaveworks/weave/releases/download/v2.8.1/weave-daemonset-k8s.yaml

Generate a token for worker nodes to join:

sudo kubeadm token create --print-join-command

Open port 6443 in the master node's security group (inbound rules) so the worker can connect to the master node:

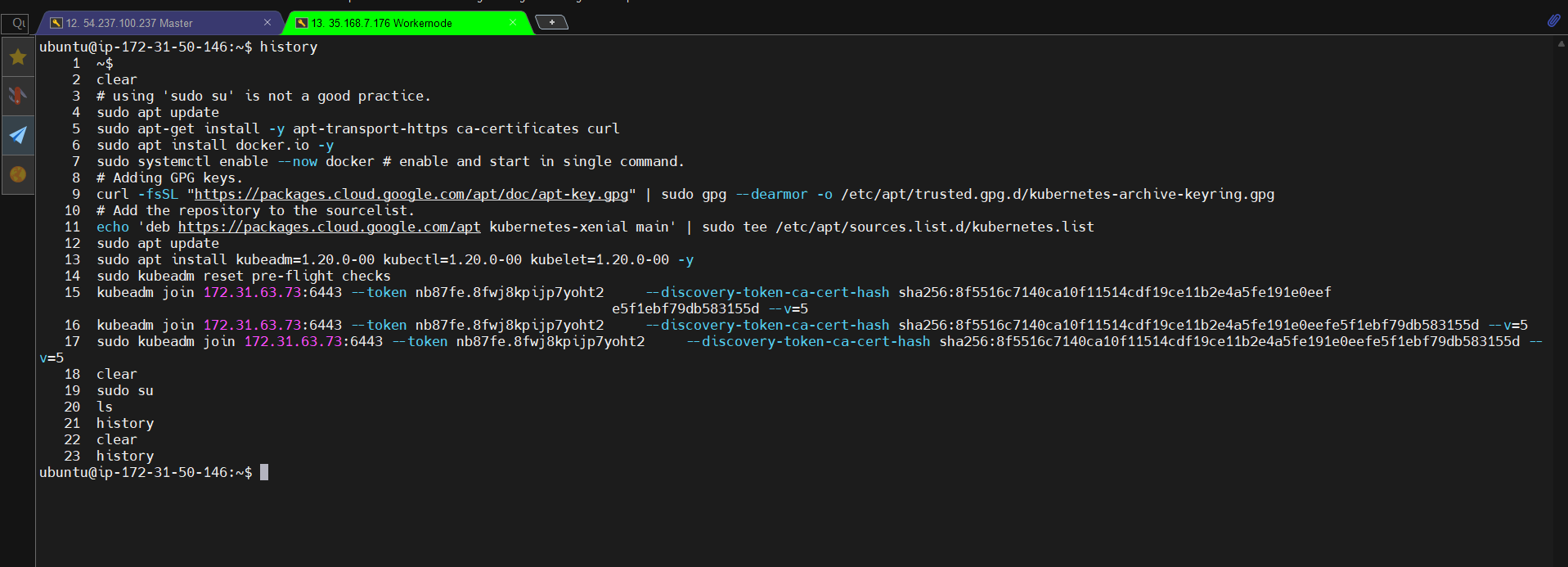
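Before running the join command, it can help to confirm from the worker that the master's API server port is reachable. A minimal sketch using bash's built-in `/dev/tcp` redirection; the `check_tcp_port` name is hypothetical, and `<master-private-ip>` in the usage line stands for your master node's address.

```shell
#!/usr/bin/env bash
# Hypothetical helper: test whether a TCP port on a host accepts connections.
# Relies on bash's /dev/tcp redirection, so the inner command runs under bash.
check_tcp_port() {
  local host="$1" port="$2" timeout_secs="${3:-3}"
  if timeout "$timeout_secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "${host}:${port} is reachable"
    return 0
  fi
  echo "${host}:${port} is NOT reachable (check the security group and that the API server is up)" >&2
  return 1
}
```

Usage on the worker node: `check_tcp_port <master-private-ip> 6443`. If it fails, the join command will also fail to reach the API server.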

Worker-Node

Run the following commands on the worker node.

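Reset any previous kubeadm state so the join's pre-flight checks pass:

sudo kubeadm reset

Paste the join command you got from the master node and append --v=5 (verbose logging) at the end. Make sure you are either working as the root user or put sudo before the command. After a successful join, verify the cluster connection.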

Verify Cluster Connection

On Master Node: kubectl get nodes

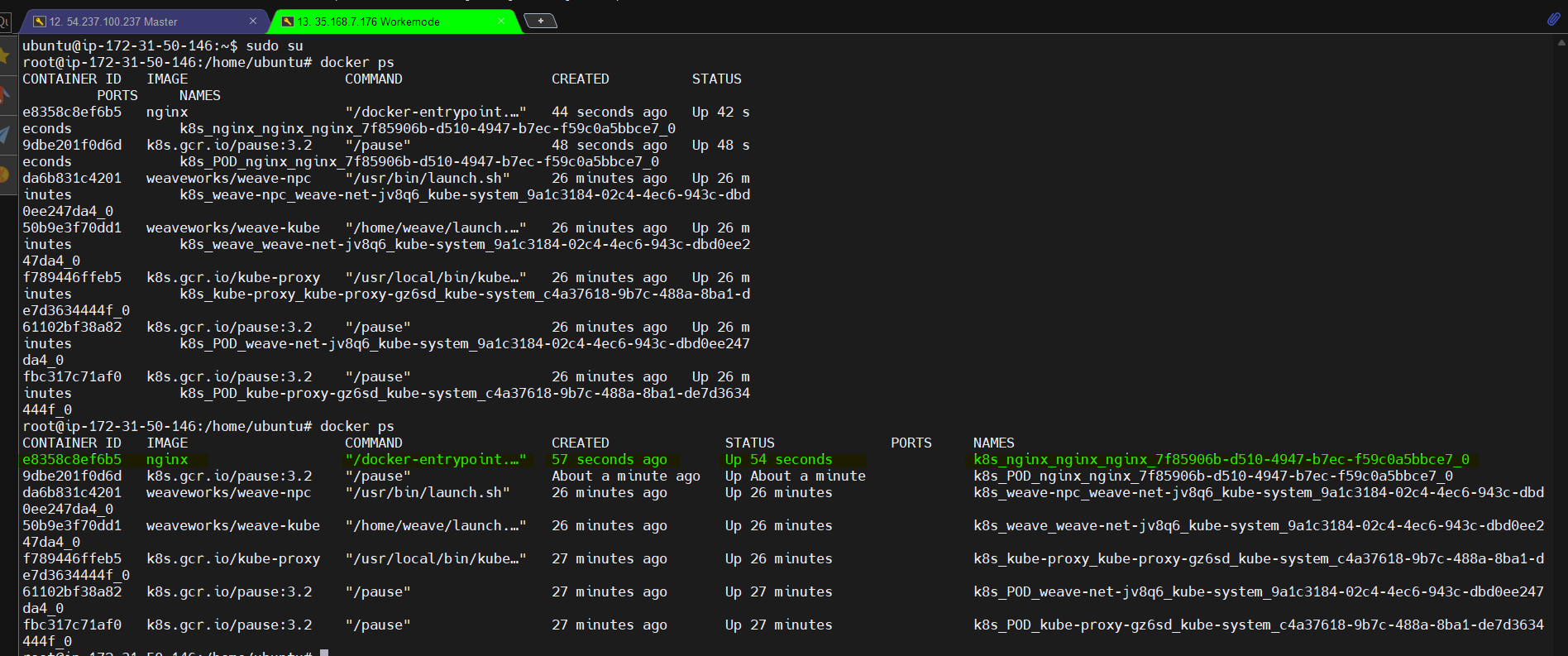

On Worker Node: docker ps
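On the master, nodes usually report `NotReady` until the pod network add-on (Weave here) is running on them. A minimal sketch that lists any node whose STATUS is not `Ready`; the `not_ready_nodes` name is hypothetical, and it assumes the default column order of `kubectl get nodes --no-headers` (NAME, STATUS, ROLES, AGE, VERSION).

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the NAME of every node whose STATUS is not exactly "Ready".
# A status such as "Ready,SchedulingDisabled" is also listed.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Only query the cluster when kubectl is installed and can reach it.
if command -v kubectl >/dev/null 2>&1 && nodes=$(kubectl get nodes --no-headers 2>/dev/null); then
  pending=$(printf '%s\n' "$nodes" | not_ready_nodes)
  if [ -z "$pending" ]; then
    echo "All nodes are Ready"
  else
    echo "Nodes not Ready yet:"
    echo "$pending"
  fi
fi
```

Run it on the master node after the worker has joined; an empty result means both nodes are `Ready`.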

Running Nginx Pod on K8s: Part-1

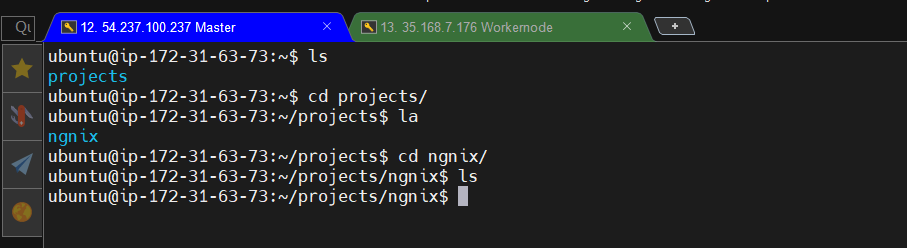

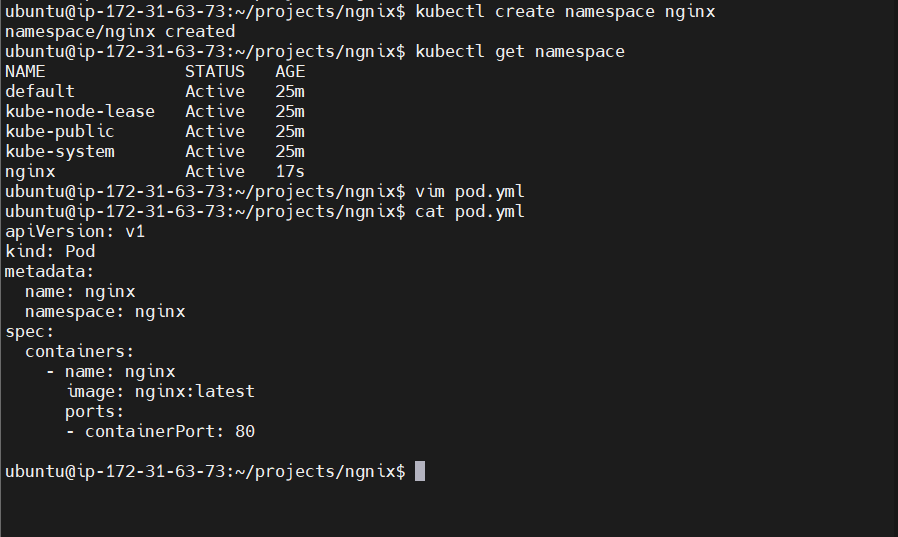

Making a directory 'Projects > nginx' to create and store all required manifest files.

Creating a namespace and writing the pod.yml manifest file

[Purpose of creating a Namespace: Namespaces are used to create isolated environments within a cluster. They help organize and segment resources like pods, services, and deployments. By using namespaces, multiple projects or teams can share the same cluster without interfering with each other's resources. Resource names only need to be unique within a namespace, which prevents naming conflicts, and namespaces are also the scope for resource quotas and access control.]

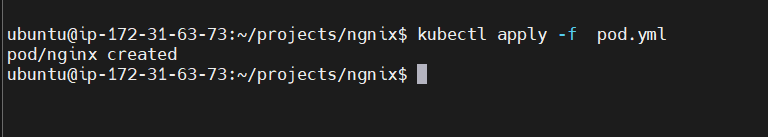

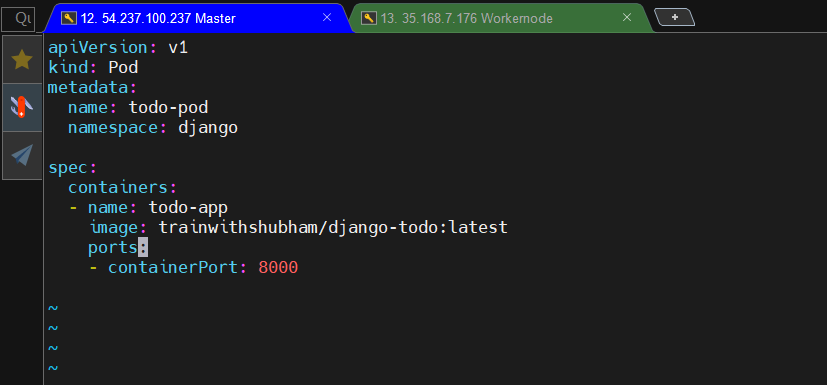

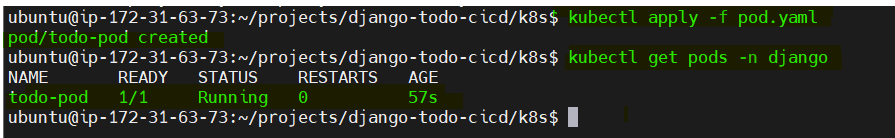
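The manifests above are only shown as screenshots, so here is a minimal text sketch of a Namespace and a Pod for this step. The directory is created relative to the current directory, and the pod name, label and image tag (`nginx:1.14.2`) are assumptions; adjust them to match your own files.

```shell
#!/usr/bin/env bash
# Write a Namespace manifest and a Pod manifest for the nginx example.
# ASSUMPTIONS: pod name "nginx", label app=nginx and image tag nginx:1.14.2.
dir=Projects/nginx
mkdir -p "$dir"

cat > "$dir/namespace.yml" <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: nginx
EOF

cat > "$dir/pod.yml" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  namespace: nginx
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginx:1.14.2
      ports:
        - containerPort: 80
EOF
```

Apply namespace.yml first (or run `kubectl create namespace nginx`), because the pod cannot be created in a namespace that does not exist yet.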

After writing the pod.yml file, create the pod by running: kubectl apply -f pod.yml

You can verify on the worker node that the nginx container was created with: docker ps

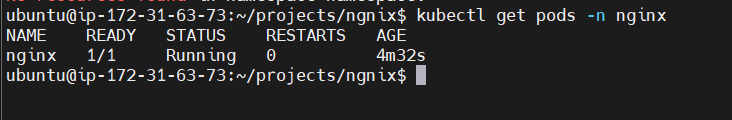

We can check the same on the master node as well with: kubectl get pods -n nginx

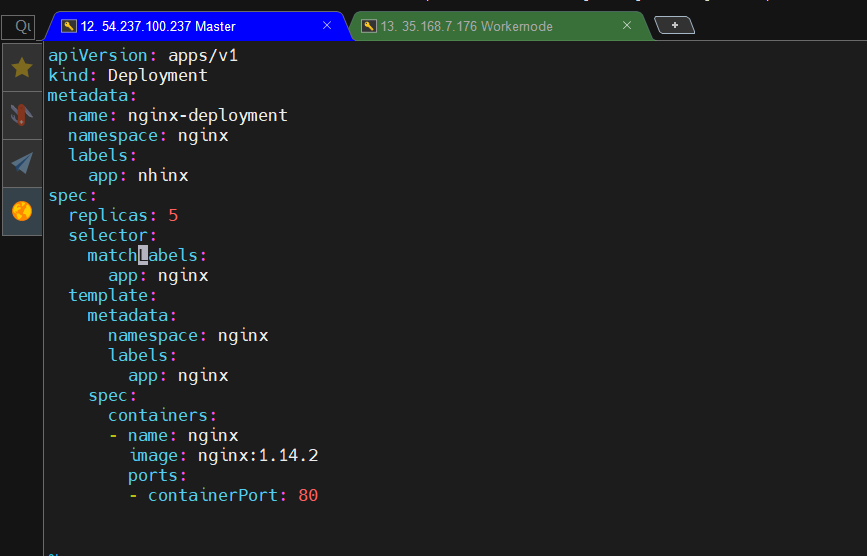

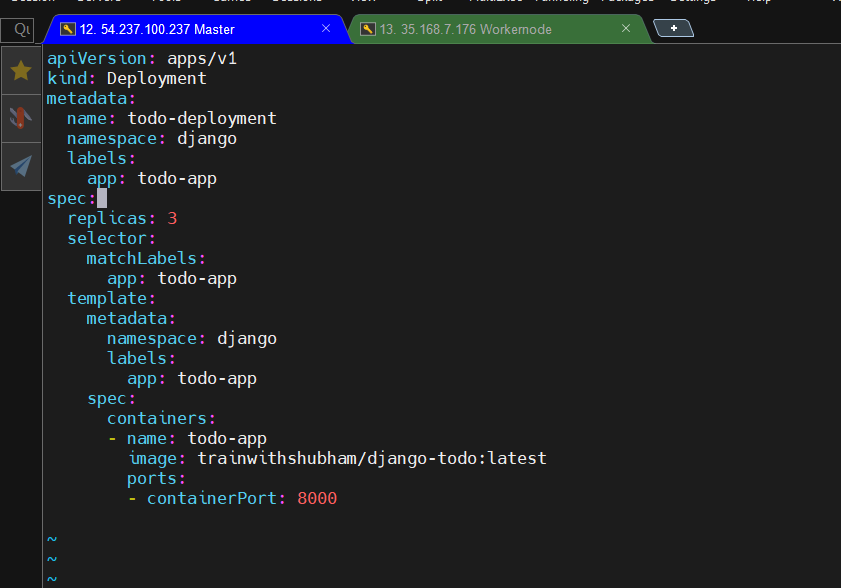

Creating the Deployment manifest file: [We create a deployment manifest file to manage the deployment and scaling of a set of pods. The deployment manifest file serves as a blueprint for creating and maintaining the desired number of identical pods running your application.]

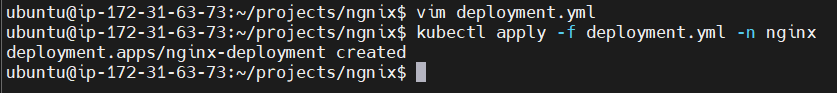
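A minimal text sketch of a deployment.yml consistent with the `nginx-deployment` name and `nginx` namespace used in the commands that follow. The replica count, labels, image tag and the explicit rolling-update settings are assumptions, not a copy of the screenshot.

```shell
#!/usr/bin/env bash
# Write a Deployment manifest named nginx-deployment in the nginx namespace.
# ASSUMPTIONS: 3 replicas, label app=nginx, image nginx:1.14.2, and the
# rollingUpdate values shown (Kubernetes defaults both to 25% if omitted).
dir=Projects/nginx
mkdir -p "$dir"

cat > "$dir/deployment.yml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: nginx
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx          # must match the pod template labels below
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1         # at most one pod above the replica count during an update
      maxUnavailable: 0   # never go below the desired replica count
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80
EOF
```

Because the Deployment keeps `replicas` pods running through its ReplicaSet, a deleted or crashed pod is replaced automatically, which is the auto-healing behaviour mentioned in the task.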

Creating the deployment from the manifest file: kubectl apply -f deployment.yml -n nginx

You can see the pods created by the deployment with: kubectl get pods -n nginx

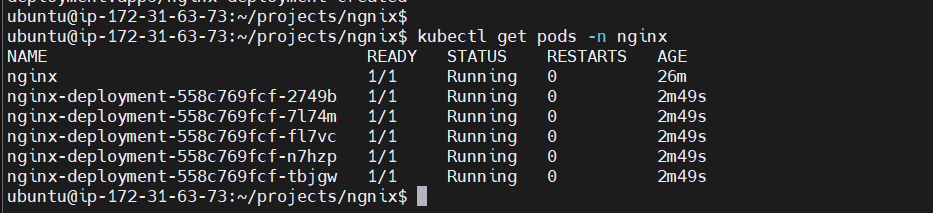

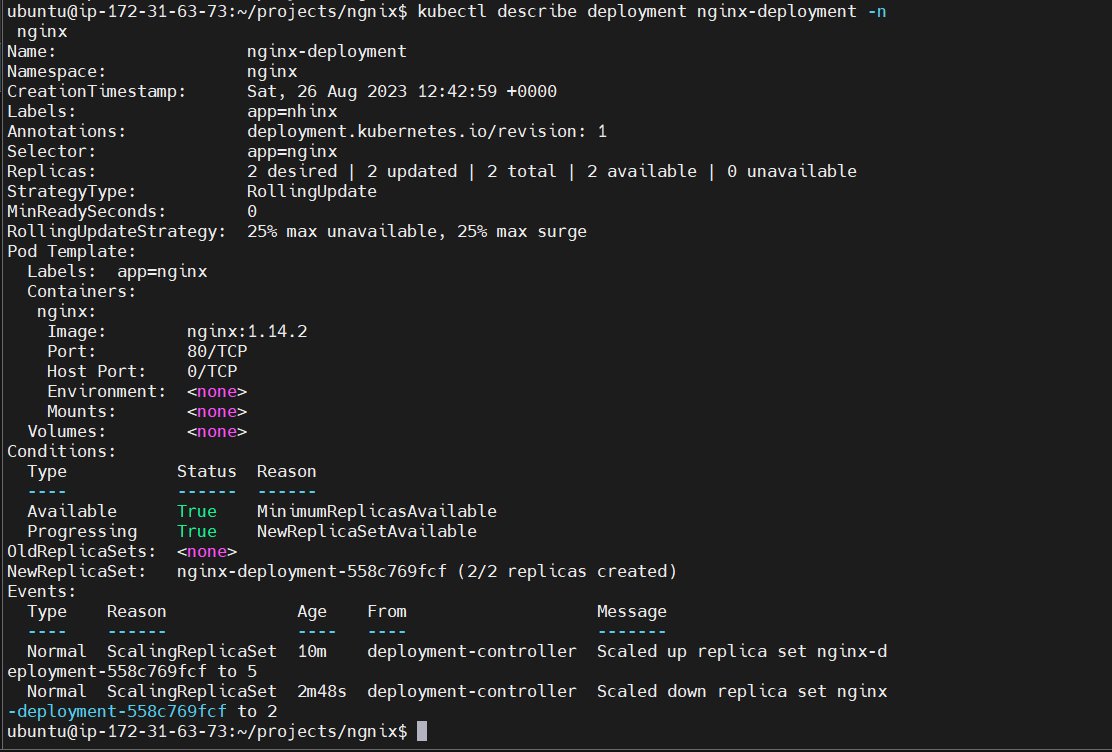

To get more information about the deployment, run: kubectl describe deployment nginx-deployment -n nginx

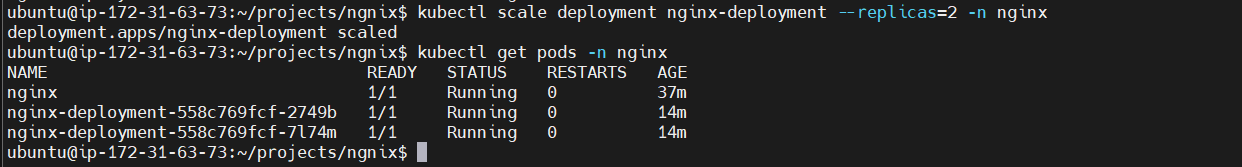

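Scaling the deployment: kubectl scale deployment nginx-deployment --replicas=2 -n nginx

[Scaling only changes the number of replicas. A rolling update, by contrast, is triggered when the pod template changes (for example, a new container image); the Deployment then replaces pods gradually so the application is updated without service disruption.]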
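During a rolling update the Deployment keeps the pod count inside a window set by `maxSurge` and `maxUnavailable`, which both default to 25%. Per the Kubernetes Deployment docs, a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down. A small sketch of that arithmetic; the `rollout_bounds` name is hypothetical and it only handles percentage values, not absolute numbers.

```shell
#!/usr/bin/env bash
# Hypothetical helper: given a replica count and percentage values for
# maxSurge and maxUnavailable (default 25 each), print
#   <minimum available pods> <maximum total pods>
# allowed while a rolling update is in progress.
rollout_bounds() {
  local replicas="$1" surge_pct="${2:-25}" unavail_pct="${3:-25}"
  # maxSurge percentages round up (integer ceiling division).
  local surge=$(( (replicas * surge_pct + 99) / 100 ))
  # maxUnavailable percentages round down (integer floor division).
  local unavail=$(( replicas * unavail_pct / 100 ))
  echo "$(( replicas - unavail )) $(( replicas + surge ))"
}
```

For example, `rollout_bounds 4` prints `3 5`: with 4 replicas and the defaults, at least 3 pods stay available and at most 5 exist at once. With 2 replicas (`rollout_bounds 2`) it prints `2 3`, because 25% of 2 rounds down to 0 unavailable and up to 1 extra pod.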

Although nginx is running, it is not yet accessible through port 80 from outside the cluster.

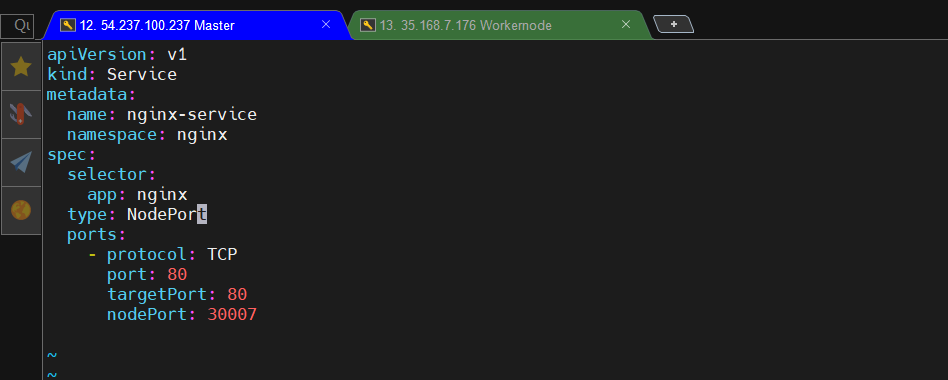

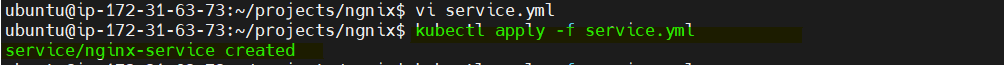

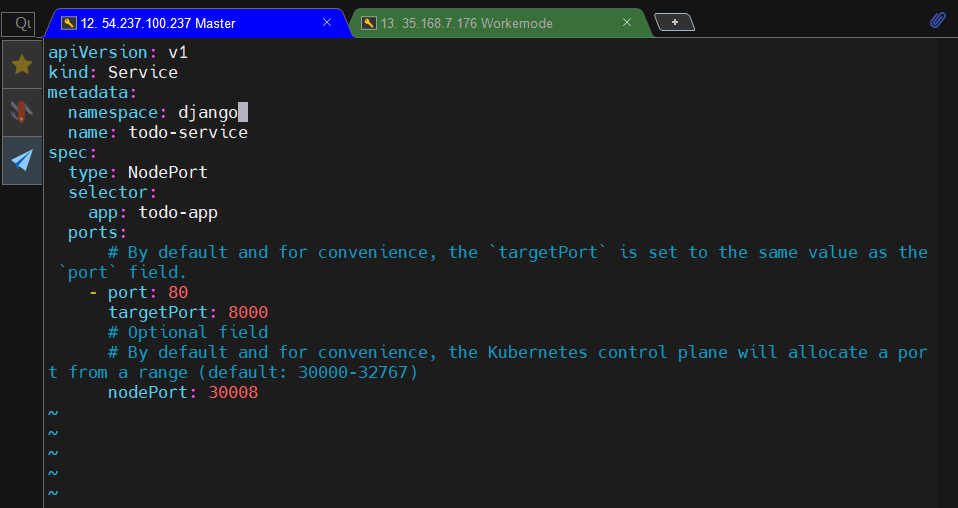

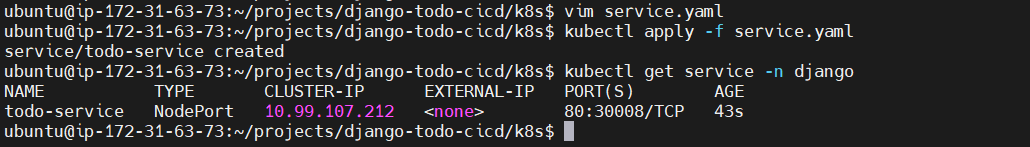

To access nginx in the Kubernetes cluster, we need to create a service manifest file.

[A Service is an abstraction that provides a stable IP address and a DNS name for a set of pods in a deployment, making it easy to expose those pods to other services within or outside the cluster. The Service manifest file defines the networking rules for how your pods can be accessed by other parts of the application or by external users.]

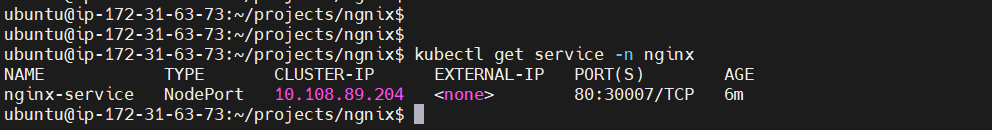
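A minimal text sketch of a service.yml that exposes the nginx pods on port 30007, matching the port opened in the next step. The service name is hypothetical, and the selector assumes the pods carry the label `app: nginx`.

```shell
#!/usr/bin/env bash
# Write a NodePort Service manifest for the nginx pods.
# ASSUMPTIONS: service name "nginx-service" and pod label app=nginx.
dir=Projects/nginx
mkdir -p "$dir"

cat > "$dir/service.yml" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  namespace: nginx
spec:
  type: NodePort
  selector:
    app: nginx            # routes traffic to pods carrying this label
  ports:
    - port: 80            # port of the Service inside the cluster
      targetPort: 80      # containerPort on the nginx pods
      nodePort: 30007     # must fall in the NodePort range (30000-32767 by default)
EOF
```

After `kubectl apply -f service.yml`, every node listens on port 30007 and forwards traffic to the nginx pods, so the page is reachable at the worker's public IP on that port once the security group allows it.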

To check the created service in Kubernetes: kubectl get service -n nginx

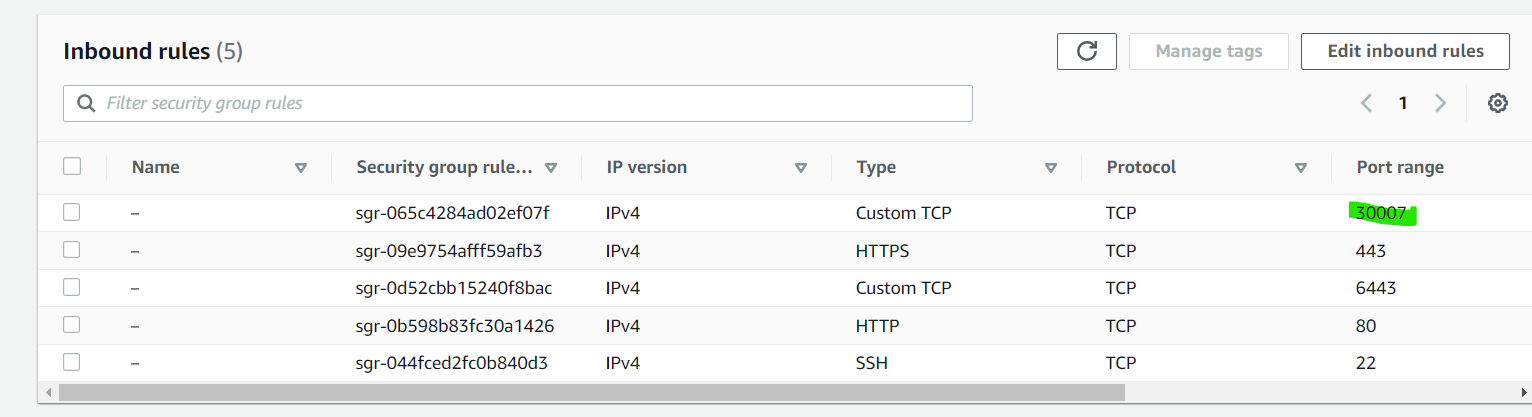

Open port 30007 in the worker node's security group inbound rules

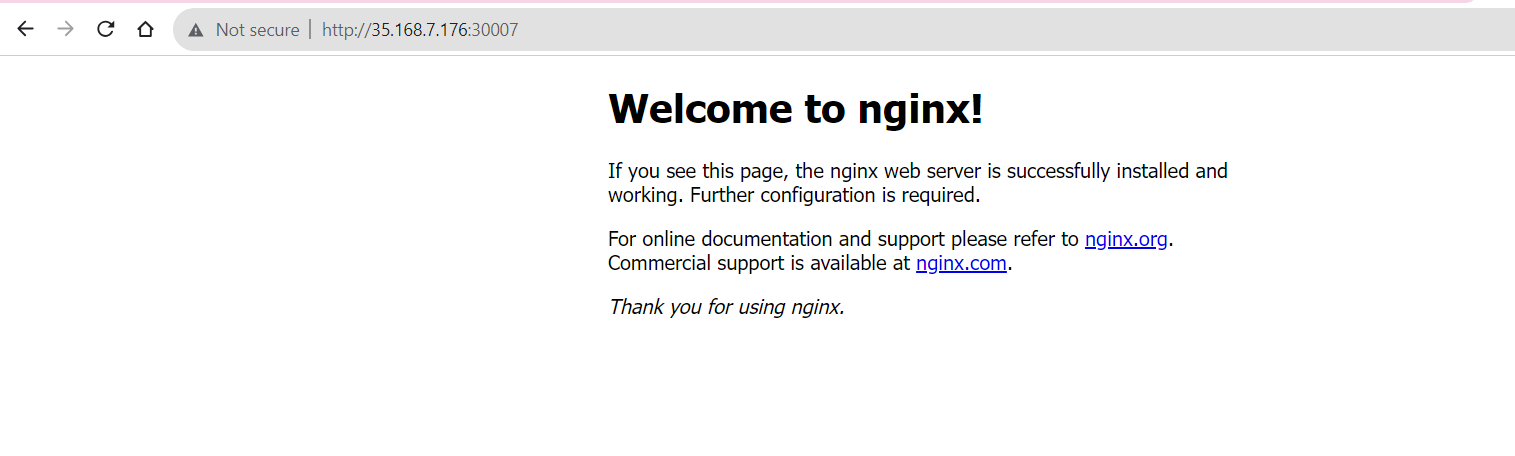

Accessing Nginx pod

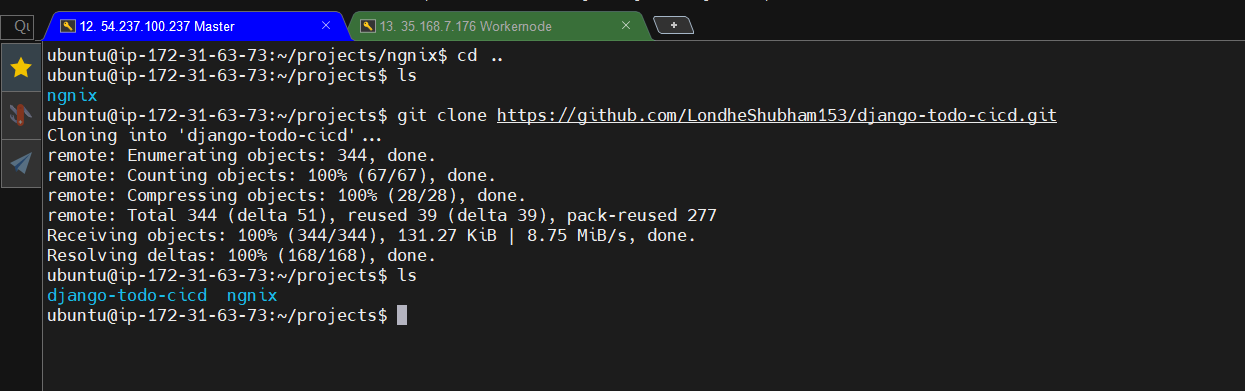

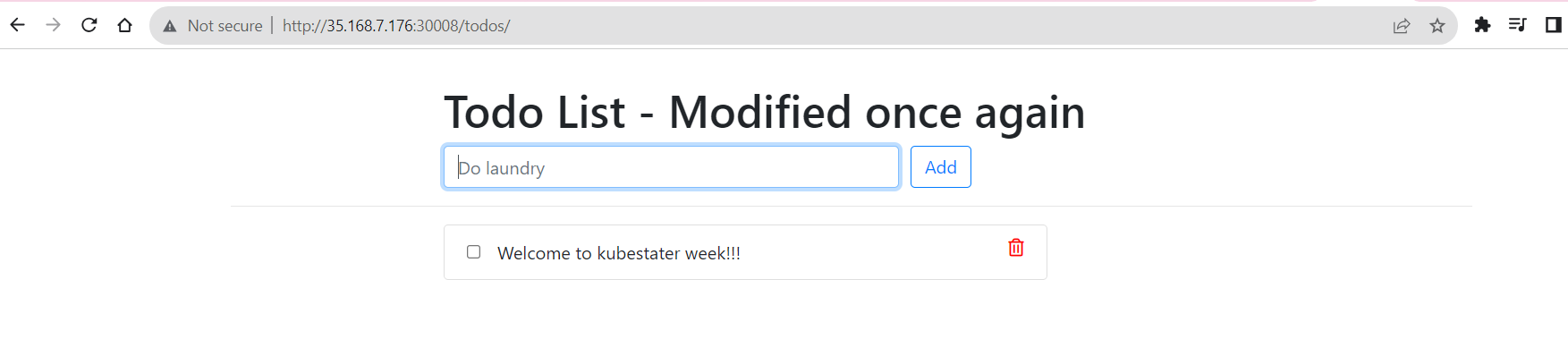

Running the django-todo-cicd Pod: Part-2

Clone the django-todo-cicd app from this repo: https://github.com/LondheShubham153/django-todo-cicd

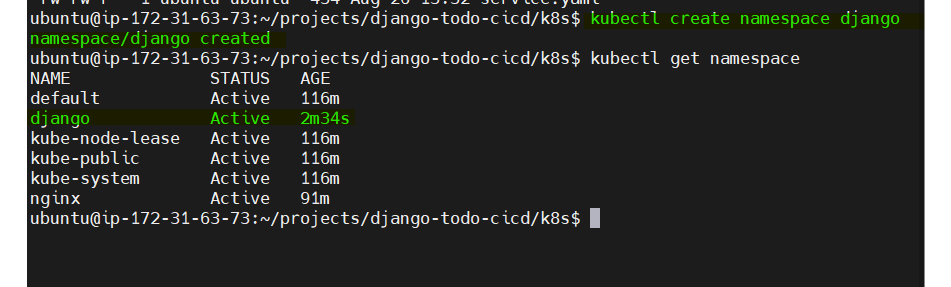

Creating a namespace for the Django app

Updating pod.yaml

Updating deployment.yaml

Updating service.yaml file
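A minimal sketch of the three manifests for the Django app, since the updated files are not shown in text. Several values here are assumptions: the namespace and resource names are hypothetical, the image name is a placeholder for an image you would build from the cloned repository and push yourself, the app is assumed to listen on port 8000, and NodePort 30008 is a hypothetical choice (nodePort values are cluster-wide, so it cannot reuse 30007 while the nginx service exists).

```shell
#!/usr/bin/env bash
# Write namespace, deployment and service manifests for the django todo app.
# ASSUMPTIONS: placeholder image name, app listening on port 8000,
# hypothetical names and NodePort 30008 (open it in the security group too).
dir=Projects/django-todo
mkdir -p "$dir"

cat > "$dir/namespace.yml" <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: django-todo
EOF

cat > "$dir/deployment.yml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: django-todo-deployment
  namespace: django-todo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: django-todo
  template:
    metadata:
      labels:
        app: django-todo
    spec:
      containers:
        - name: django-todo
          image: your-dockerhub-user/django-todo:latest   # placeholder image
          ports:
            - containerPort: 8000
EOF

cat > "$dir/service.yml" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: django-todo-service
  namespace: django-todo
spec:
  type: NodePort
  selector:
    app: django-todo
  ports:
    - port: 8000
      targetPort: 8000
      nodePort: 30008
EOF
```

Apply namespace.yml first, then deployment.yml and service.yml with `kubectl apply -f`.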

Thank you for reading my blog! Happy Learning!!!😊

Stay tuned for more DevOps articles and follow me on Hashnode and connect on LinkedIn (https://www.linkedin.com/in/namratakumari04/) for the latest updates and discussions.

Written by

Namrata Kumari

Proficient in a variety of DevOps technologies, including AWS, Linux, Python, Shell Scripting, Docker, Terraform, Kubernetes and Computer Networking.