Deploying 2048 Application on AWS EKS Cluster

Aditya Dhopade


What are we going to do?

We will deploy the 2048 application on an EKS cluster. The cluster runs in a VPC with public and private subnets, and the application runs in the private subnets.

What is EKS?

A Kubernetes cluster has two components: the Control Plane (aka the master nodes) and the Data Plane (aka the worker nodes).

Several combinations are possible in this setup, such as:

Single master, Single Worker

Single master, Multiple Worker

Multiple master, Multiple Worker

NOTE: For high availability, a three-node control plane (master nodes) is generally used.

There are multiple ways to create a cluster.

First Way

Create EC2 instances for both the master and the worker nodes ourselves. On the masters we have to configure the API server, etcd, the scheduler, the controller manager, and the cloud controller manager; on the workers, the kubelet, the container runtime, kube-proxy, the CNI plugin, and DNS.

But this is error-prone, as it requires setting up many layers by hand.

Second Way

Use kOps: we give kOps our AWS credentials, it creates the master and worker nodes for us, and we can hand the cluster over to the dev team.

But we still operate the control plane ourselves. If a master node breaks because a certificate expired, the API server went down, or etcd crashed, getting it working again is very difficult, and when we run many clusters, any of them can run into these issues.

Whenever there is manual, repetitive work like this, AWS usually offers a managed solution (in the context of the cloud).

EKS

Amazon Elastic Kubernetes Service (Amazon EKS) is a managed Kubernetes service that makes it easy for you to run Kubernetes on AWS and on-premises.

Amazon EKS automatically manages the availability and scalability of the Kubernetes control plane nodes responsible for scheduling containers, managing application availability, storing cluster data, and other key tasks.

NOTE: EKS is a managed control plane, not a managed data plane; that is, AWS manages the master nodes, NOT the worker nodes.

However, worker nodes can easily be attached to the control plane (master nodes), either as EC2 instances or through Fargate.

Third Way [Preferred Way]

Using Fargate; AWS Fargate is a technology that you can use with Amazon ECS, and Amazon EKS to run containers without having to manage servers or clusters of Amazon EC2 instances. With Fargate, you no longer have to provision, configure, or scale clusters of virtual machines to run containers.

EKS + Fargate gives us a robust, highly stable, and highly available architecture.

Here EKS manages the Control Plane and Fargate manages the Data Plane.

This reduces the operational overhead for the DevOps engineer, who can focus on other work.

We also need an Ingress Controller: whenever it sees an Ingress resource, it automatically creates a load balancer, so that external users can access the 2048 game application.

Enough theory; let us get hands-on with the EKS cluster.

[MUST VISIT: repository with installation steps for the required tools]

Refer to Prerequisites.md in the repository for the installations.

To create the EKS cluster (this takes roughly 10 to 20 minutes):

```bash
eksctl create cluster --name 2048-cluster --region us-east-1 --fargate

# update the local kubeconfig so that kubectl can talk to the new cluster
aws eks update-kubeconfig --name 2048-cluster --region us-east-1
```
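Later commands in this walkthrough mix up cluster names (`2048-cluster`, `demo-cluster`, `<your-cluster-name>`), so it can help to keep the name and region in one place. The sketch below is a hypothetical helper, not part of the original setup: the variable names `CLUSTER_NAME`/`REGION` and the function name are assumptions, and the defaults simply mirror the values used above.

```bash
#!/usr/bin/env bash
# Hypothetical defaults; override them in the environment if your names differ.
CLUSTER_NAME="${CLUSTER_NAME:-2048-cluster}"
REGION="${REGION:-us-east-1}"

# Creates the EKS control plane with a default Fargate profile
# (namespaces: default, kube-system) and points kubectl at it.
create_fargate_cluster() {
  eksctl create cluster --name "$CLUSTER_NAME" --region "$REGION" --fargate || return 1
  # Adds/updates the cluster entry in ~/.kube/config.
  aws eks update-kubeconfig --name "$CLUSTER_NAME" --region "$REGION" || return 1
  # Sanity check: the kube-system pods (e.g. CoreDNS) should get scheduled on Fargate.
  kubectl get pods -n kube-system
}
```

Usage: save it as a file, `source` it, and run `create_fargate_cluster`; the same two variables can then be reused in every later command.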

Once the cluster is created, its page in the AWS console has several tabs and lists the Pods, ReplicaSets, Deployments, StatefulSets, and so on, so we can browse these resources without using the CLI.

The Overview tab shows the OpenID Connect (OIDC) provider URL. Through OIDC, the cluster we created can be integrated with an identity provider such as Okta or Keycloak, which acts like an LDAP directory holding all the users of the organization.

AWS lets us attach any identity provider to the cluster, so we can manage access to this EKS cluster through IAM or another identity provider whenever we need it.

Suppose a pod needs to talk to an S3 bucket, CloudWatch, some other AWS service, or the EKS control plane. If we do not integrate with an IAM identity provider, how do we give that pod access?

Whenever an AWS resource needs to talk to an S3 bucket (or any other service), we use IAM roles.

Similarly, for Kubernetes pods we can bind an IAM role to a Kubernetes service account; pods using that service account can then talk to other AWS services. The cluster's OIDC provider is what allows IAM to trust these service accounts.

On the Compute tab, the Fargate profiles are listed in the bottom section.

By default, the Fargate profile covers only two namespaces, default and kube-system, so pods can be scheduled only in those two namespaces. To use another namespace, we add another Fargate profile for it.

The 2048 application pods run in the game-2048 namespace, so we need to create a new Fargate profile for that namespace:

```bash
eksctl create fargateprofile --cluster 2048-cluster --region us-east-1 --name alb-sample-app --namespace game-2048
```
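To confirm the new profile exists next to the default one, `eksctl get fargateprofile` lists the profiles and their namespace selectors. The sketch below wraps both steps in a hypothetical function; the function name and the `CLUSTER_NAME`/`REGION` defaults are assumptions that mirror the values used in this walkthrough.

```bash
#!/usr/bin/env bash
# Hypothetical defaults matching the cluster created above.
CLUSTER_NAME="${CLUSTER_NAME:-2048-cluster}"
REGION="${REGION:-us-east-1}"

# Adds a Fargate profile selecting the game-2048 namespace, then lists all
# profiles so you can check that game-2048 appears among the selectors.
add_game_fargate_profile() {
  eksctl create fargateprofile --cluster "$CLUSTER_NAME" --region "$REGION" \
    --name alb-sample-app --namespace game-2048 || return 1
  eksctl get fargateprofile --cluster "$CLUSTER_NAME" --region "$REGION"
}
```

Pods in a namespace that no profile selects stay Pending on a Fargate-only cluster, so this check is worth running before applying the manifest.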

For reference, the manifest we will deploy is linked below. It contains all the configuration for the namespace, deployment, service, and ingress:

https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.5.4/docs/examples/2048/2048_full.yaml

```bash
# kubectl apply creates the resources in the cluster (or updates them if they already exist)
kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.5.4/docs/examples/2048/2048_full.yaml
```

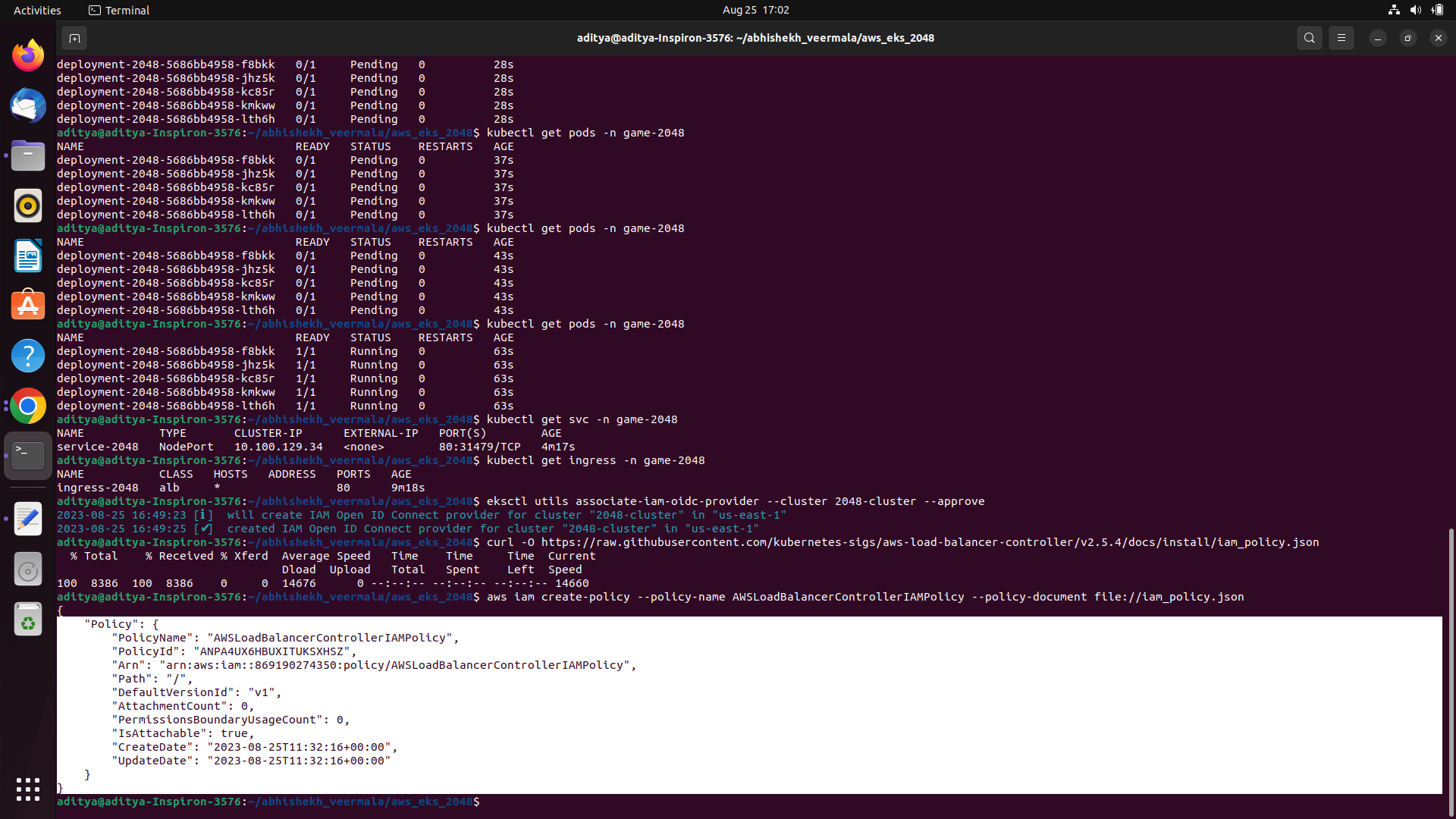

```bash
# check that the pods are up
kubectl get pods -n game-2048

# check that the service is created
kubectl get svc -n game-2048
```
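Instead of re-running `kubectl get pods` until everything is Running, `kubectl rollout status` blocks until the deployment's replicas are ready. This is a minimal sketch; the deployment name `deployment-2048` is an assumption about what `2048_full.yaml` defines (check with `kubectl get deployments -n game-2048`), and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Waits until every replica of the 2048 deployment is ready, or the timeout expires.
# Arguments (both optional): namespace, deployment name (assumed: deployment-2048).
wait_for_game_rollout() {
  local ns="${1:-game-2048}"
  local deploy="${2:-deployment-2048}"
  # Fargate provisions capacity for each pod on demand, so allow a generous timeout.
  kubectl rollout status "deployment/${deploy}" -n "$ns" --timeout=300s || return 1
  kubectl get pods -n "$ns" -o wide
}
```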

We have not created the Ingress Controller yet, and without it the Ingress resource does nothing on its own.

```bash
# list all ingress resources in the namespace
kubectl get ingress -n game-2048
```

| NAME | CLASS | HOSTS | ADDRESS | PORTS | AGE |
|------|-------|-------|---------|-------|-----|
| ingress-2048 | alb | * | | 80 | 9m12s |

The ingress is created with class alb. The host is `*`, so any request that reaches the application load balancer is accepted, whatever hostname it uses.

The ADDRESS field is still empty; it will be filled in once we deploy the ingress controller.

But before creating the Ingress Controller, we need to configure the IAM OIDC provider. Why?

The Ingress Controller (ALB Controller) is just a set of Kubernetes pods, and those pods need to call AWS services. They therefore need IAM permissions, and the IAM OIDC provider is what lets us grant those permissions to pods.

Configure the IAM OIDC provider:

```bash
eksctl utils associate-iam-oidc-provider --cluster 2048-cluster --region us-east-1 --approve
```
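To check whether the association worked (or whether a provider already exists before running it), you can compare the cluster's OIDC issuer ID with the IAM OIDC providers in the account. This sketch follows the check described in the AWS EKS docs; the function names and the `CLUSTER_NAME`/`REGION` defaults are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical defaults matching this walkthrough.
CLUSTER_NAME="${CLUSTER_NAME:-2048-cluster}"
REGION="${REGION:-us-east-1}"

# The issuer looks like https://oidc.eks.<region>.amazonaws.com/id/<ID>;
# splitting on "/" makes <ID> the fifth field.
oidc_id_from_issuer() {
  printf '%s\n' "$1" | cut -d '/' -f 5
}

# Returns 0 if an IAM OIDC provider for this cluster's issuer already exists.
oidc_provider_exists() {
  local issuer id
  issuer="$(aws eks describe-cluster --name "$CLUSTER_NAME" --region "$REGION" \
    --query 'cluster.identity.oidc.issuer' --output text)" || return 1
  id="$(oidc_id_from_issuer "$issuer")"
  # Guard against an empty ID, which would make grep match anything.
  [ -n "$id" ] || return 1
  aws iam list-open-id-connect-providers | grep -q "$id"
}
```

Usage: `oidc_provider_exists && echo "OIDC provider is configured"`.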

Next we need to install the ALB Controller. In Kubernetes, every controller is just a pod, and we are going to grant this one access to AWS services such as ALB (Application Load Balancers).

```bash
# download the IAM policy for the AWS Load Balancer Controller
curl -O https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.5.4/docs/install/iam_policy.json
```

The policy comes from the ALB Controller documentation itself:

https://github.com/kubernetes-sigs/aws-load-balancer-controller/blob/main/docs/install/iam_policy.json

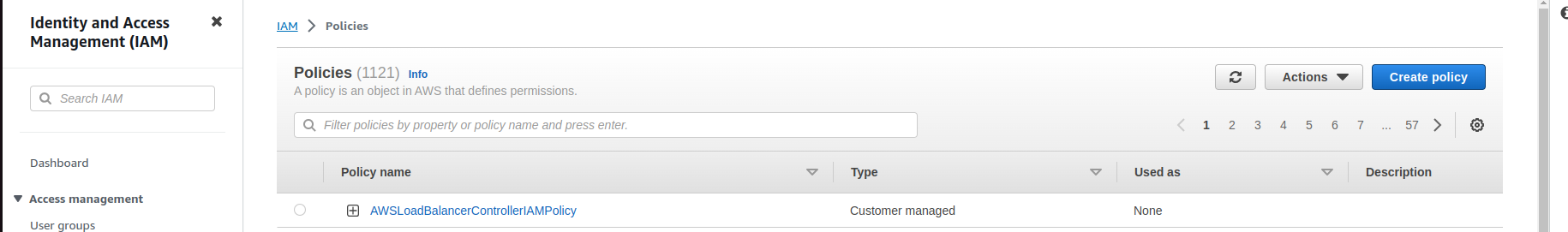

Create the IAM policy:

```bash
aws iam create-policy --policy-name AWSLoadBalancerControllerIAMPolicy --policy-document file://iam_policy.json
```
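The next step needs the full ARN of the policy created above, which embeds your account ID. Rather than typing it by hand (a stray space after `policy/` breaks the ARN), you can build it from `aws sts get-caller-identity`. A small sketch; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Builds an IAM policy ARN from an account ID and a policy name.
# Note: there is no space between "policy/" and the policy name.
policy_arn() {
  printf 'arn:aws:iam::%s:policy/%s\n' "$1" "$2"
}

# Looks up the current account ID and prints the ARN of the controller policy.
lbc_policy_arn() {
  local account_id
  account_id="$(aws sts get-caller-identity --query Account --output text)" || return 1
  policy_arn "$account_id" AWSLoadBalancerControllerIAMPolicy
}
```

Usage: `eksctl create iamserviceaccount ... --attach-policy-arn="$(lbc_policy_arn)" ...`. Alternatively, adding `--query 'Policy.Arn' --output text` to the `aws iam create-policy` call prints the ARN directly when the policy is first created.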

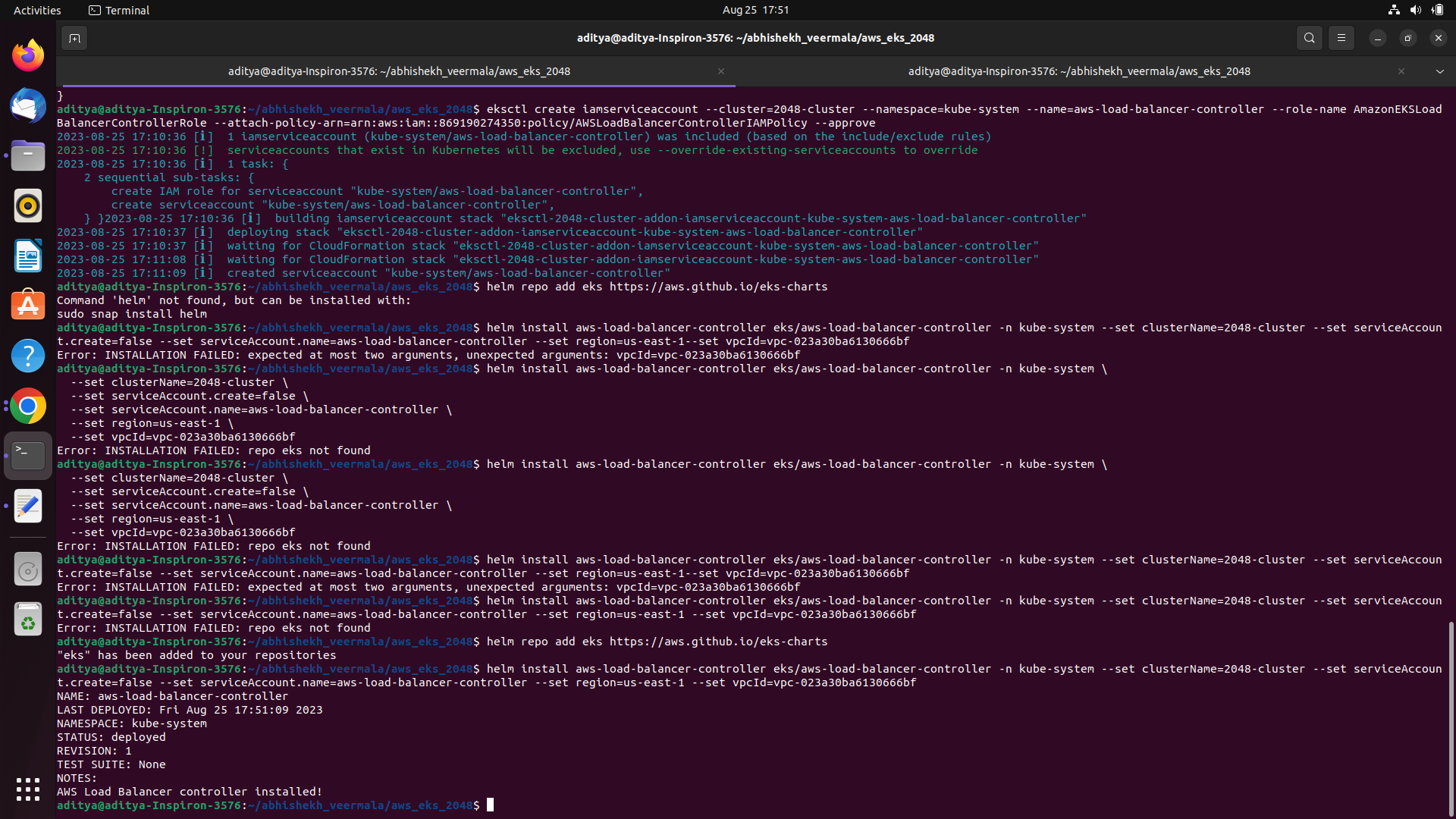

Create the IAM role and bind it to a Kubernetes service account. Replace `<your-cluster-name>` and `<your-aws-account-id>` with your own values:

```bash
eksctl create iamserviceaccount \
  --cluster=<your-cluster-name> \
  --namespace=kube-system \
  --name=aws-load-balancer-controller \
  --role-name AmazonEKSLoadBalancerControllerRole \
  --attach-policy-arn=arn:aws:iam::<your-aws-account-id>:policy/AWSLoadBalancerControllerIAMPolicy \
  --approve
```
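With IAM roles for service accounts, the link between the Kubernetes service account and the IAM role is the `eks.amazonaws.com/role-arn` annotation that eksctl puts on the service account. A quick way to verify it, sketched as a hypothetical helper:

```bash
#!/usr/bin/env bash
# Prints the IAM role ARN annotated on the controller's service account.
# An empty annotation means the role is not wired up, and the controller
# pods would not be able to call the AWS APIs.
check_lbc_service_account() {
  local arn
  arn="$(kubectl get serviceaccount aws-load-balancer-controller -n kube-system \
    -o jsonpath='{.metadata.annotations.eks\.amazonaws\.com/role-arn}')" || return 1
  if [ -z "$arn" ]; then
    echo "service account has no eks.amazonaws.com/role-arn annotation" >&2
    return 1
  fi
  printf '%s\n' "$arn"
}
```

The printed ARN should end in `role/AmazonEKSLoadBalancerControllerRole`, the role name passed to `eksctl` above.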

Let us now install the ALB Controller (our Ingress Controller).

We are going to use its Helm chart. The chart runs the controller pods, and because we set `serviceAccount.create=false`, those pods run with the service account we just created (and therefore with its IAM role).

Add the Helm repo:

```bash
helm repo add eks https://aws.github.io/eks-charts
helm repo update
```

Install the ALB Controller with Helm. Replace `<your-cluster-name>`, `<region>` (us-east-1 in this walkthrough), and `<your-vpc-id>` with your own values:

```bash
helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
  -n kube-system \
  --set clusterName=<your-cluster-name> \
  --set serviceAccount.create=false \
  --set serviceAccount.name=aws-load-balancer-controller \
  --set region=<region> \
  --set vpcId=<your-vpc-id>
```

The output will look like this:

```text
NAME: aws-load-balancer-controller
LAST DEPLOYED: Fri Aug 25 17:51:09 2023
NAMESPACE: kube-system
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
AWS Load Balancer controller installed!
```

We can get the VPC ID from the AWS console: open the EKS cluster and look in the Networking tab.
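The VPC ID can also be read with the AWS CLI instead of the console. Passing `region` and `vpcId` explicitly matters on Fargate, because the controller cannot look them up from EC2 instance metadata there. The sketch below combines the lookup with the Helm install from above; the function names and the `CLUSTER_NAME`/`REGION` defaults are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical defaults matching this walkthrough.
CLUSTER_NAME="${CLUSTER_NAME:-2048-cluster}"
REGION="${REGION:-us-east-1}"

# Prints the ID of the VPC the EKS cluster runs in.
cluster_vpc_id() {
  aws eks describe-cluster --name "$CLUSTER_NAME" --region "$REGION" \
    --query 'cluster.resourcesVpcConfig.vpcId' --output text
}

# Installs the controller, reusing the service account created by eksctl.
install_lbc() {
  local vpc_id
  vpc_id="$(cluster_vpc_id)" || return 1
  helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
    -n kube-system \
    --set clusterName="$CLUSTER_NAME" \
    --set serviceAccount.create=false \
    --set serviceAccount.name=aws-load-balancer-controller \
    --set region="$REGION" \
    --set vpcId="$vpc_id"
}
```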

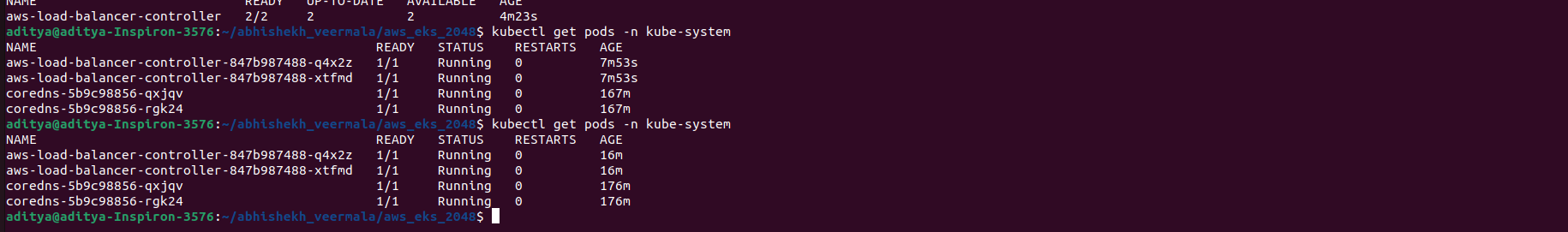

One final check: make sure the AWS Load Balancer Controller deployment is running. By default the Helm chart runs 2 replicas of the controller, so it stays available if one pod fails.

```bash
kubectl get deployment -n kube-system aws-load-balancer-controller
kubectl get pods -n kube-system
```
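Rather than polling `kubectl get deployment` by hand, `kubectl wait` can block until the controller Deployment reports the `Available` condition. A minimal sketch; the function name and the 180-second timeout are arbitrary choices.

```bash
#!/usr/bin/env bash
# Waits until the controller Deployment is Available, then shows its READY count.
wait_for_lbc() {
  kubectl wait --for=condition=Available deployment/aws-load-balancer-controller \
    -n kube-system --timeout=180s || return 1
  # With the chart's default of two replicas, READY should show 2/2.
  kubectl get deployment aws-load-balancer-controller -n kube-system
}
```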

Now let us see if the ALB Controller has created an ALB or not


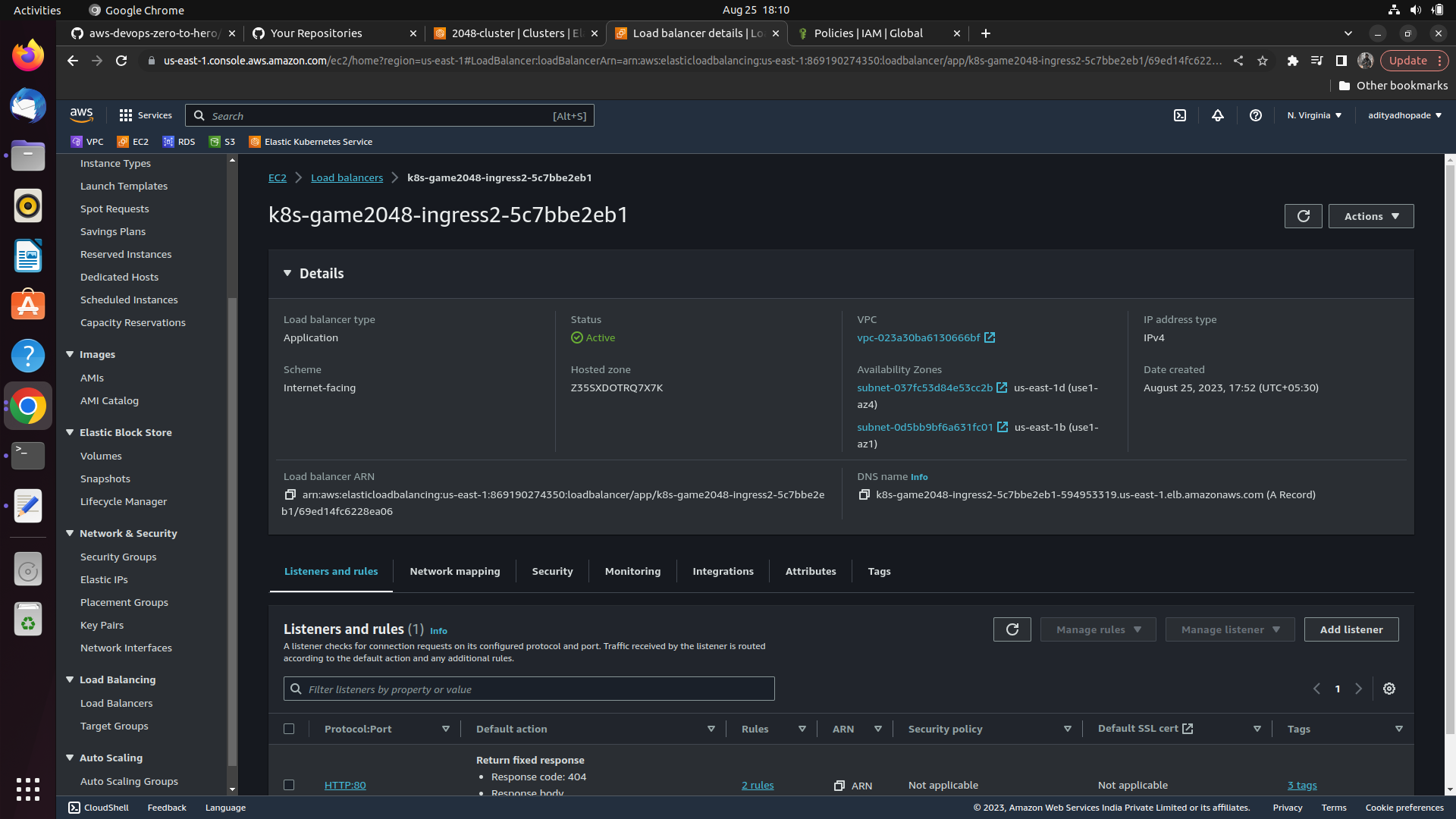

Go to the AWS console => EC2 => Load Balancers. We can see that a load balancer named k8s-game2048-ingress2-5c7bbe2eb1 has been created; the ALB Controller created it.

Now check the ingress resource again:

```bash
kubectl get ingress -n game-2048
```

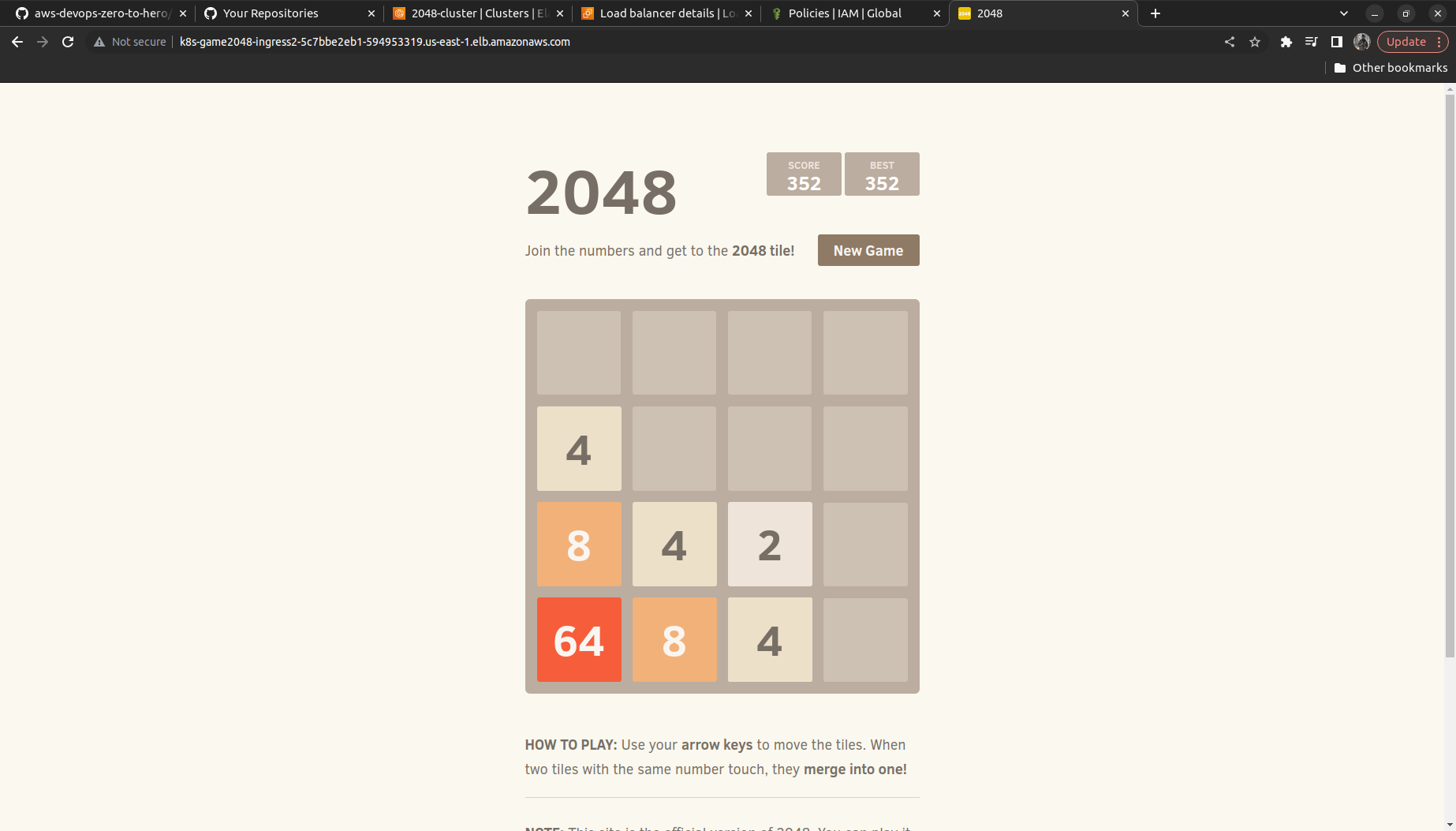

Now the ADDRESS field contains a value: the DNS name of the Application Load Balancer. External users can use this DNS name to reach the application running in the pods.

Go to the (newly created) load balancer in the Load Balancers section, copy its DNS name, and compare it with the address shown in the ingress.

They are the same, which means the ingress controller has read the ingress resource and created the load balancer for it.

Wait until the load balancer's state becomes Active, then open the DNS name in the browser.
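The wait can be scripted: the controller writes the ALB hostname into the ingress status, so polling that field tells you when the address is assigned. This is a sketch with hypothetical names; `ingress-2048` and `game-2048` match the `kubectl get ingress` output shown earlier, and the 30 tries of 10 seconds are arbitrary.

```bash
#!/usr/bin/env bash
# Polls the ingress until its status contains the ALB hostname, then prints it.
# Arguments (all optional): namespace, ingress name, number of tries.
wait_for_ingress_hostname() {
  local ns="${1:-game-2048}" name="${2:-ingress-2048}" tries="${3:-30}" host="" i
  for ((i = 0; i < tries; i++)); do
    host="$(kubectl get ingress "$name" -n "$ns" \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"
    if [ -n "$host" ]; then
      printf '%s\n' "$host"
      return 0
    fi
    sleep 10
  done
  echo "timed out waiting for $ns/$name to get an address" >&2
  return 1
}
```

Usage: `host="$(wait_for_ingress_hostname)" && curl -I "http://$host"`. A hostname in the status does not guarantee the ALB is already Active and its DNS record has propagated, so the first requests may still fail for a few minutes.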


Written by

Aditya Dhopade


A passionate DevOps Engineer with 2+ years of hands-on experience on various DevOps tools. Supporting, automating, and optimising deployment process, leveraging configuration management, CI/CD, and DevOps processes.