Parallel Programming Paradigms - Part 2

Krishna N Mehta

Krishna N MehtaWelcome back to our exploration of parallel programming paradigms. In our previous articles, we delved into shared memory architecture and its intricacies. Now, let's continue by learning distributed memory systems and delve into some key concepts that shape the world of parallel programming.

Explicit Communication: The Essence of Distributed Memory Systems

In the realm of distributed memory systems, communication takes a different approach. Unlike shared memory systems, where processors share a common memory space, distributed memory systems require programmers to explicitly call upon send and receive functions for data transfer between processors. This clear and direct communication is like messages passing between neighbours in a vast digital community.

Scalability and Understanding Memory Behavior

One of the standout benefits of distributed memory architecture is its superior scalability. As more processors are added to the system, its performance doesn't buckle under the increased load. However, this scalability isn't without its prerequisites. Programmers must grasp the nuances of local and non-local memory behaviour. It's akin to knowing your way around different neighbourhoods in a bustling city.

Data Parallelism and Task Parallelism

As we dive deeper into parallel programming paradigms, two distinct approaches emerge: data parallelism and task parallelism.

In data parallel programming, the same function is executed across different data items. Each processor processes its own set of data, harmoniously contributing to the whole computation. It's like a synchronized dance where each dancer performs the same steps in harmony but on their distinct path.

Task parallel programming, on the other hand, involves different functions assigned to each processor. These functions communicate through shared memory or direct communication. It's a bit like a choreographed performance, where each dancer has their unique routine but contributes to the collective artistic expression.

Managing Dependencies

In parallel programming, the name of the game is efficiency. Breaking down a problem into tasks and distributing them among processors is just the beginning. Managing dependencies among tasks is crucial. The goal? To design tasks that have minimal interdependence, optimizing the flow of computation. Think of it as orchestrating a symphony where each musician's contribution is essential for a harmonious result.

Analyzing Latency, Throughput, and Scalability

Efficiency isn't just about raw power; it's about how effectively a program processes data. To gauge this, we need to consider two key metrics: latency and throughput.

Latency measures the time it takes to process a single piece of data. It's like timing how quickly a chef prepares a single dish. On the other hand, throughput measures how many times a program can process data in a fixed time frame. Imagine counting how many dishes that chef can churn out in an hour.

Scalability is another vital factor in parallel programming. It examines how well a program's performance scales up when more processors are added to the mix. Efficient algorithms exhibit scalability by achieving speedup proportional to the number of processors.

Speedup and Efficiency: The Dual Goals

Parallel programming is a quest for speedup and efficiency. Speedup is the measure of how much faster a program runs on multiple processors compared to a single processor. It's the engine that drives the appeal of parallel computing – the promise of accomplishing tasks at breathtaking speeds.

Efficiency, however, is the true jewel. Efficiency is the ratio of speedup to the number of processors used. It answers the question: How effectively are we utilizing our resources? We strive for high efficiency because it ensures that our parallelized efforts are making the most of the available computational power.

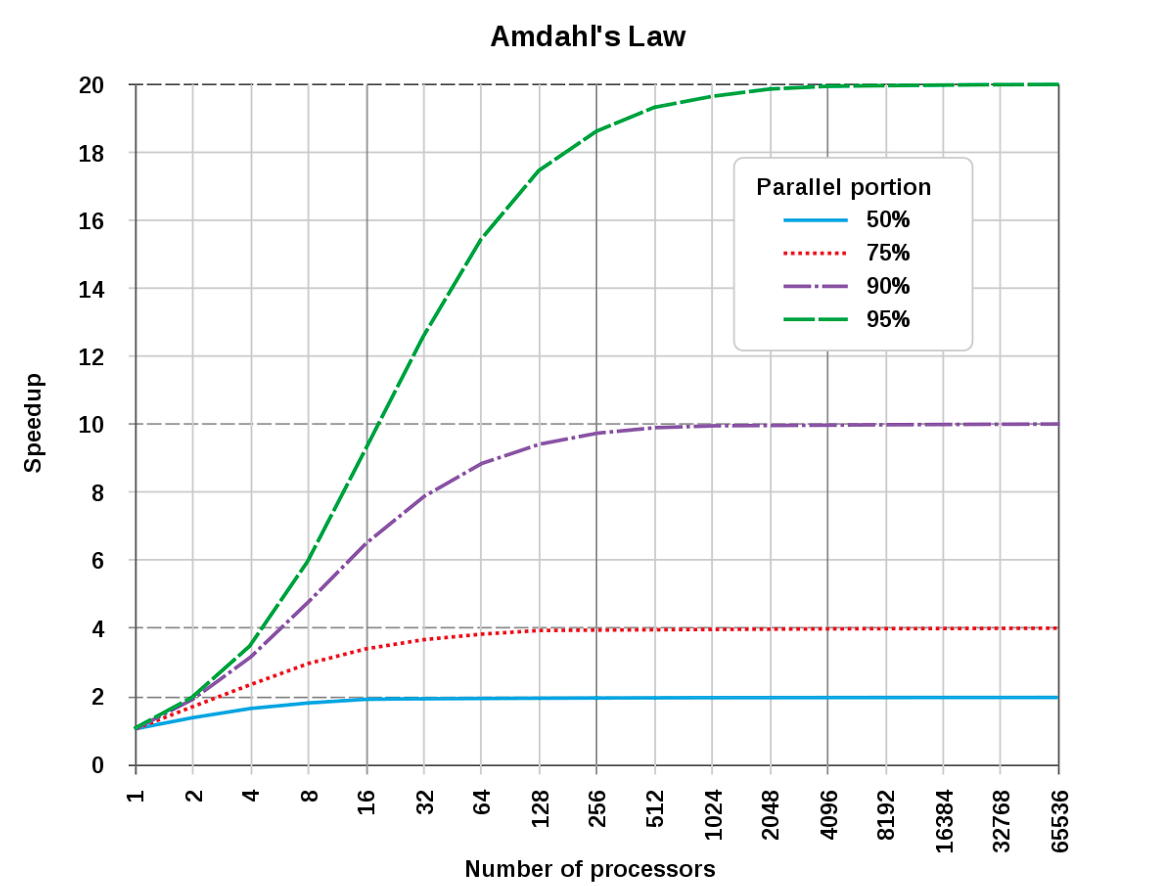

Amdahl's Law

As we venture deeper, we encounter Amdahl's Law, a foundational principle in parallel programming. This law reminds us that the maximum speedup a program can achieve is limited by the sequential portion of the code. If even a fraction of the program remains sequential, the speedup is capped. Amdahl's Law serves as a reality check, reminding us that dependencies can't be disregarded.

Our journey into parallel programming paradigms continues to evolve, enriching our understanding of this intricate field. In our upcoming article, we'll explore the nuances of performance issues, architectural styles, and the diverse landscape of parallel machines. Stay tuned as we journey further into the captivating world of parallel computing!

Subscribe to my newsletter

Read articles from Krishna N Mehta directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Krishna N Mehta

Krishna N Mehta

I am a developer passionate about building websites. I love learning new things and always up for a meaningful conversation.