Kubernetes Fundamentals:

Alok Raj

What is Kubernetes:

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Containers are a lightweight way to package and run applications, and Kubernetes takes over the work of running, scaling, and replacing those containers across a cluster of machines.

Key features and functions of Kubernetes include:

Container Orchestration: Kubernetes manages the deployment of containers, ensuring that the desired number of containers is running at all times and automatically replacing failed ones.

Scaling: It can automatically scale applications up or down based on load or resource utilization, allowing for efficient resource allocation.

Load Balancing: Kubernetes can distribute incoming network traffic across multiple instances of an application, ensuring high availability and even distribution of requests.

Self-Healing: If a container or node fails, Kubernetes can automatically reschedule and restart containers to maintain the desired state.

Configuration Management: It allows you to manage application configurations and store them as code, making it easier to maintain consistent environments.

Rolling Updates: Kubernetes supports rolling updates, allowing you to update applications without downtime by gradually replacing old containers with new ones.

Resource Management: You can define resource limits and requests for containers, ensuring efficient resource utilization within the cluster.

Service Discovery and Routing: Kubernetes provides built-in mechanisms for service discovery and routing, making it easy for containers to communicate with each other.

Storage Orchestration: It manages the lifecycle of storage resources, allowing containers to use persistent storage volumes.

Multi-Cloud and Hybrid Cloud Support: Kubernetes can be used on various cloud providers or on-premises data centers, making it highly versatile.

Kubernetes has become the de facto standard for container orchestration and is widely used in modern application development and deployment. It simplifies the management of containerized applications, making them more reliable, scalable, and easier to maintain.
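To make the Scaling feature above more concrete, here is a minimal Python sketch of the ratio-based calculation that the Horizontal Pod Autoscaler documentation describes: the replica count grows or shrinks in proportion to how far the observed metric is from the target. The function name, the parameter names, and the default bounds are assumptions chosen for illustration, and the real autoscaler also applies a tolerance and stabilization behavior that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Suggest a replica count from the ratio of observed load to target load.

    Toy model only: min_replicas and max_replicas are illustrative defaults.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale proportionally and round up so the target is not exceeded.
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))
```

For example, 4 replicas averaging 90% CPU against a 60% target would be scaled to 6, while the same 4 replicas at 30% would be scaled down to 2.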

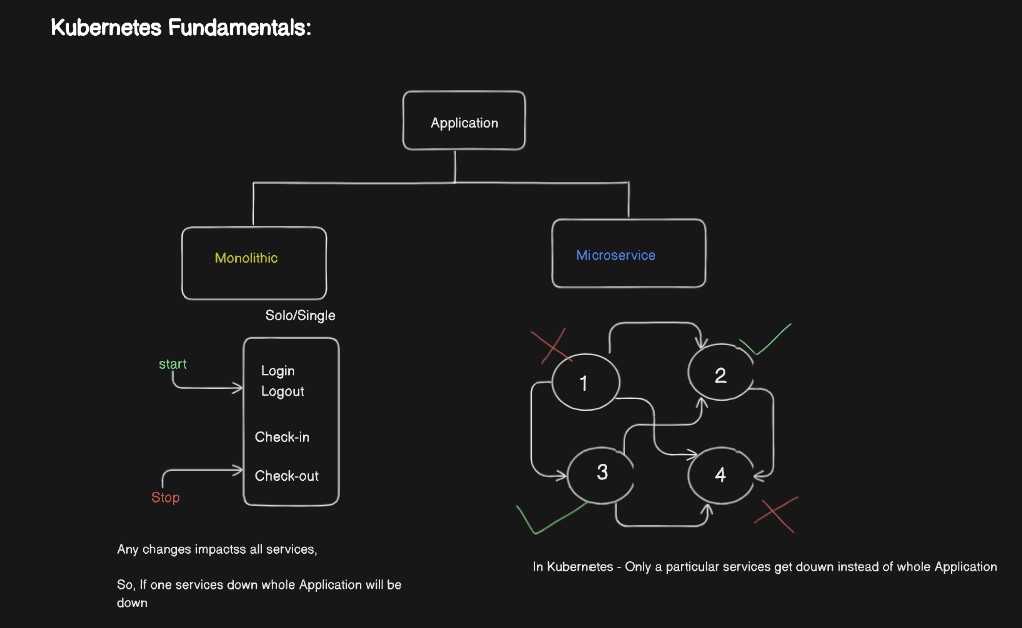

To see the impact of Kubernetes in practice, let's look at the example of microservices:

🏗️ Microservices are like building blocks in Kubernetes. Instead of one big connected building (monolithic app), picture lots of standalone houses (microservices). Each house serves a specific purpose and can be managed separately.

🔧 Kubernetes manages these houses efficiently. It handles their deployment, scaling, and load balancing. Each house (microservice) runs in its own container for isolation. This modular approach makes developing, testing, and maintaining complex apps easier.

💪 Microservices in Kubernetes mean modern app-building. They're flexible, scalable, and resilient because they're smaller, standalone units that work together seamlessly. 🚀

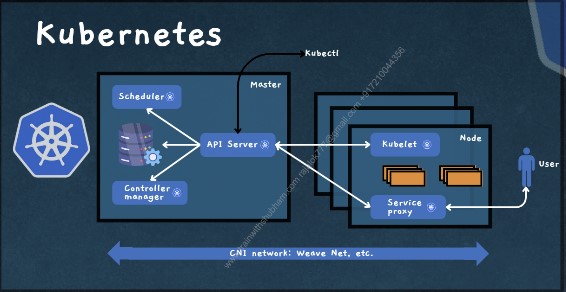

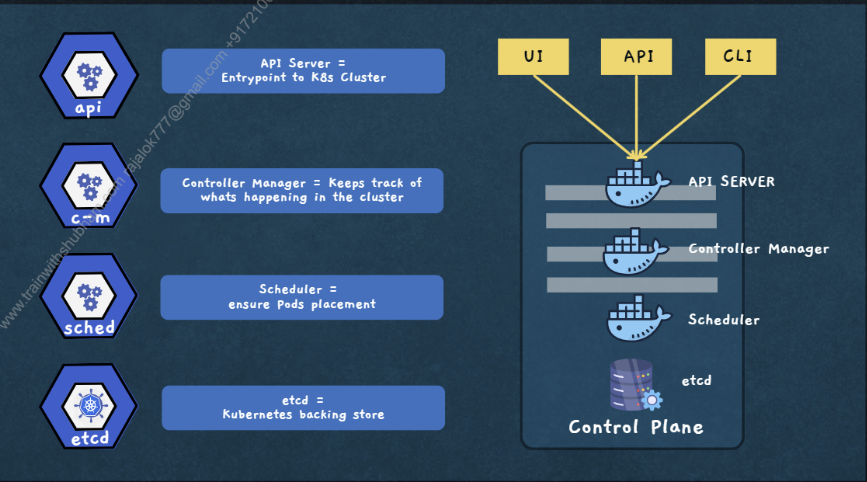

The architecture of Kubernetes:

Master Node (Control Plane):

API Server: This is the central control point for the entire Kubernetes cluster. It serves as the entry point for all administrative tasks and user interactions. When you issue commands or make requests to the cluster (e.g., deployment requests), they go through the API server. It validates and processes these requests and persists the resulting objects in etcd, where they describe the desired state of the cluster.

etcd: This is a distributed key-value store that acts as the cluster's "database." It stores all the configuration data for the cluster, including information about nodes, pods, services, and more. The etcd database is crucial for maintaining the cluster's state and ensuring consistency.

Scheduler: The scheduler is responsible for deciding where pods run within the cluster. When you create a new pod (a group of one or more containers that are always placed together), the scheduler selects an appropriate worker node for it, considering factors like resource availability and constraints.
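The scheduler's decision can be pictured as two steps: filter out nodes that cannot fit the pod, then score the remaining ones and pick the best. The Python sketch below is a toy model of that idea, not the real kube-scheduler. The `Node` and `PodRequest` classes, their fields, and the scoring rule (prefer the node with the most CPU left over) are all assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod: PodRequest, nodes: List[Node]) -> Optional[Node]:
    """Return the node chosen for the pod, or None if no node fits."""
    # Filter: keep only nodes with enough free CPU and memory for the pod.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # in a real cluster the pod would stay Pending
    # Score: an arbitrary toy rule that prefers the most CPU headroom.
    return max(feasible, key=lambda n: n.free_cpu_millicores - pod.cpu_millicores)
```

A pod that requests more CPU or memory than any node has free gets no node at all, which mirrors how an unschedulable pod waits until capacity appears.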

Controller Manager: This component includes various controllers that continuously monitor the cluster's desired state and the actual state. If there are any discrepancies (e.g., a pod crashes), the controller manager takes corrective actions to bring the cluster back to the desired state. Examples of controllers include the Replication Controller and the ReplicaSet Controller.
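The "compare desired state with actual state, then correct the difference" idea behind controllers can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how any real Kubernetes controller is written: the `reconcile` function, the pod naming scheme, and the `name_prefix` parameter are hypothetical.

```python
from typing import List


def reconcile(desired: int, running: List[str], name_prefix: str = "web") -> List[str]:
    """Return the pod names that should exist after one reconciliation pass."""
    pods = list(running)
    counter = 0
    # Too few pods: create replacements until the count matches the desired state.
    while len(pods) < desired:
        candidate = f"{name_prefix}-{counter}"
        if candidate not in pods:
            pods.append(candidate)
        counter += 1
    # Too many pods: drop the surplus so the count matches the desired state.
    return pods[:desired]
```

If `web-1` crashes while three replicas are desired, calling `reconcile(3, ["web-0", "web-2"])` adds a replacement so that three pods exist again. A real controller runs this kind of comparison continuously rather than once.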

Cloud Controller Manager (Optional): In cloud environments like AWS or Azure, this component provides integration with the cloud provider's APIs. It allows Kubernetes to manage cloud-specific resources such as load balancers and persistent storage.

Worker Node:

kubelet: This is the agent that runs on each worker node in the cluster. It communicates with the control plane (API server) and ensures that the containers running on its node are in the desired state. It takes care of tasks like starting, stopping, and monitoring containers.

kube-proxy: Responsible for network proxying on the worker nodes. It maintains network rules on the node and handles network communication for services that need to connect to other services or pods. Essentially, it acts as a "traffic cop" for network requests.

Container Runtime: This is the software responsible for running containers. Kubernetes works with runtimes that implement the Container Runtime Interface (CRI), such as containerd and CRI-O. Docker Engine can still be used, but since Kubernetes 1.24 removed the built-in dockershim, it needs the cri-dockerd adapter. The runtime pulls images and starts and stops the containers that make up your pods.

Pods:

- Pods are the smallest deployable units in Kubernetes. They can contain one or more containers that share the same network namespace, storage volumes, and IP address. Pods are the atomic unit of scheduling, meaning the scheduler places pods on worker nodes, not individual containers.

Services:

- Services provide network abstraction to expose a set of pods as a network service. They allow for load balancing and automatic service discovery within the cluster. There are different types of services, including ClusterIP, NodePort, and LoadBalancer, each with specific use cases.
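As a rough picture of what a Service does, the Python sketch below puts one stable name in front of a set of pod addresses and hands out backends in turn. It is a toy model: the `ToyService` class and the IP addresses are invented for this example, and the real distribution strategy depends on how kube-proxy is configured rather than being a strict round robin.

```python
from itertools import cycle
from typing import List


class ToyService:
    """A stable front for a changing set of pod addresses (illustrative only)."""

    def __init__(self, name: str, endpoints: List[str]) -> None:
        if not endpoints:
            raise ValueError("a service needs at least one endpoint to route to")
        self.name = name
        self._endpoints = list(endpoints)
        # Walk through the endpoints in order, starting over at the end.
        self._next_endpoint = cycle(self._endpoints)

    def route(self) -> str:
        """Return the pod address that should receive the next request."""
        return next(self._next_endpoint)
```

Clients only need to know the service name. Which pod answers a given request is decided behind it, so pods can come and go without clients noticing.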

Volumes:

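- Volumes give the containers in a pod a place to store and share data that survives container restarts. Some volume types (such as emptyDir) are deleted along with the pod, while PersistentVolumes, requested through PersistentVolumeClaims, keep data beyond the life of any single pod. Kubernetes supports various volume types and can be integrated with cloud storage solutions.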

Ingress:

- Ingress manages external access to services within the cluster. It acts as a "front door" that routes HTTP and HTTPS traffic to the appropriate services based on rules such as hostnames and URL paths. The rules only take effect when an ingress controller is running in the cluster.
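Rule-based routing of this kind can be sketched as a small lookup: match the host, match the path prefix one path segment at a time, and let the longest matching prefix win. The Python below is only an illustration of that idea. The hostnames, service names, the `RULES` table, and both function names are hypothetical, and a real ingress controller supports more match types than this.

```python
from typing import List, Optional, Tuple

# (host, path prefix, backend service name); every value here is made up.
RULES: List[Tuple[str, str, str]] = [
    ("shop.example.com", "/api", "api-service"),
    ("shop.example.com", "/", "frontend-service"),
]


def path_matches(prefix: str, path: str) -> bool:
    """Segment-wise prefix match: "/api" matches "/api/v1" but not "/apiary"."""
    prefix_parts = [p for p in prefix.split("/") if p]
    path_parts = [p for p in path.split("/") if p]
    return path_parts[:len(prefix_parts)] == prefix_parts


def route(host: str, path: str,
          rules: List[Tuple[str, str, str]] = RULES) -> Optional[str]:
    """Return the backend service for a request, or None if no rule matches."""
    matches = [
        (prefix, service)
        for rule_host, prefix, service in rules
        if rule_host == host and path_matches(prefix, path)
    ]
    if not matches:
        return None
    # The most specific (longest) prefix takes precedence.
    return max(matches, key=lambda m: len(m[0]))[1]
```

With these rules, a request for `/api/v1/items` goes to `api-service`, while `/apiary` falls through to `frontend-service` because `/api` only matches whole path segments.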

Namespace:

- Namespaces provide a way to logically divide a single physical cluster into multiple virtual clusters. They are often used to isolate resources and control access, making it easier to manage multi-tenant environments or different stages of development.

Annotations and Labels:

- Kubernetes allows you to add metadata in the form of labels and annotations to various resources (e.g., pods, services). Labels are used to select and filter resources, while annotations provide additional information about resources.
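Selecting resources by label boils down to checking that every key and value in a selector appears in a resource's labels. The Python sketch below shows that equality-based matching on plain dictionaries. The pod names, the label keys, and the `matches` and `select` helpers are assumptions made for this example, not part of any Kubernetes client library.

```python
from typing import Dict, List


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """True if every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(pods: List[dict], selector: Dict[str, str]) -> List[str]:
    """Return the names of the pods whose labels satisfy the selector."""
    return [pod["name"] for pod in pods if matches(selector, pod["labels"])]


# Hypothetical pods, each with a name and a set of labels.
PODS = [
    {"name": "web-1", "labels": {"app": "web", "tier": "stable"}},
    {"name": "web-2", "labels": {"app": "web", "tier": "canary"}},
    {"name": "db-1", "labels": {"app": "db", "tier": "stable"}},
]
```

Here `select(PODS, {"app": "web"})` picks both web pods, and adding `"tier": "canary"` to the selector narrows the result to `web-2`. Annotations play no part in selection, which is why they suit free-form notes.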

In summary, the Kubernetes architecture is a distributed system designed to manage containerized applications across a cluster of nodes. It provides a robust framework for deploying, scaling, and managing applications, allowing developers and administrators to focus on defining the desired state of their applications while Kubernetes takes care of the underlying infrastructure and automation.

Written by

Alok Raj

DevOps Enthusiast. SysOps Administrator with 8+ years of technical experience in AWS, Azure, CI/CD pipelines, Docker, Jenkins, and infrastructure design DevOps.