Sandbox for learning Druid + Kafka

Adam Whitter

Objective today

In this article I want to give you the tools you need to get started with Apache Druid and Apache Kafka, so you can jump in and start learning how well these two work together.

By the end of this article you'll have a Druid + Kafka sandbox that you can start up and shut down whenever you like, and in which you can do your learning.

What you are going to need

Not much. You're going to need Docker installed wherever you want your sandbox to run; Docker Desktop includes Docker Compose, which we'll use to start everything. If, like me, you're using a Mac, you can follow these instructions.

You're going to need command line access to this environment. That's pretty easy, right?

And you're going to need a mug of tea and, of course, a few biscuits. I'd estimate this to be a two-biscuit tutorial, so I'll take you through the steps one biscuit at a time.

Biscuit #1

From the command line, create yourself a new directory (maybe call it something like test). Then download these two files, kd.yml and environment, and copy them into it:

```
mkdir test
cp ~/Downloads/kd.yml test/
cp ~/Downloads/environment test/
cd test
```
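If you'd like to script this step, here's a minimal sketch that does the same thing and complains if either download is missing. It assumes the files landed in ~/Downloads, as in the commands above; `setup_sandbox_dir` is just a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch only: setup_sandbox_dir is a hypothetical helper, not part of any tool.
# Copies the two sandbox files from a download folder into a new directory.
setup_sandbox_dir() {
  local src="${1:-$HOME/Downloads}"   # assumed download location
  local dest="${2:-test}"             # sandbox directory to create
  local f
  mkdir -p "$dest" || return 1
  for f in kd.yml environment; do
    if [ ! -f "$src/$f" ]; then
      echo "missing $src/$f, download it first" >&2
      return 1
    fi
    cp "$src/$f" "$dest/" || return 1
  done
}

# Usage: setup_sandbox_dir && cd test
```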

Quick sip of tea.

Biscuit #2

Let's use Docker Compose to start Druid and Kafka up.

```
docker-compose -f kd.yml up -d
docker-compose -f kd.yml ps
```

The ps command lists each container and its state. Once everything is running you should see something like this:

```
WARNING: Compose V1 is no longer supported and will be removed from Docker Desktop in an upcoming release. See https://docs.docker.com/go/compose-v1-eol/

Name            Command                          State   Ports
----------------------------------------------------------------------------------------
broker          /druid.sh broker                 Up      0.0.0.0:8082->8082/tcp
coordinator     /druid.sh coordinator            Up      0.0.0.0:8081->8081/tcp
historical1     /druid.sh historical             Up      0.0.0.0:8083->8083/tcp
kafkabroker     /etc/confluent/docker/run        Up      0.0.0.0:9091->9091/tcp, 9092/tcp, 0.0.0.0:9101->9101/tcp
middlemanager1  /druid.sh middleManager          Up      0.0.0.0:8091->8091/tcp, 0.0.0.0:8100->8100/tcp, 0.0.0.0:8101->8101/tcp, 0.0.0.0:8102->8102/tcp, 0.0.0.0:8103->8103/tcp, 0.0.0.0:8104->8104/tcp, 0.0.0.0:8105->8105/tcp
postgres        docker-entrypoint.sh postgres    Up      5432/tcp
router          /druid.sh router                 Up      0.0.0.0:8888->8888/tcp
zookeeper       /docker-entrypoint.sh zkSe ...   Up      0.0.0.0:2181->2181/tcp, 2888/tcp, 3888/tcp, 8080/tcp
```
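That WARNING line is Docker telling you that docker-compose is Compose V1. On newer installs Compose V2 is invoked as a docker subcommand, `docker compose` (with a space), and the old command may be missing altogether. Here's a small sketch of a wrapper that uses V2 when it's available and falls back to V1 otherwise; the function name `compose` is a hypothetical choice for this example.

```shell
#!/usr/bin/env bash
# Sketch: run Compose V2 ("docker compose") if present, else fall back to V1.
# "compose" is a hypothetical wrapper name chosen for this example.
compose() {
  if docker compose version >/dev/null 2>&1; then
    docker compose "$@"      # Compose V2 plugin
  else
    docker-compose "$@"      # legacy Compose V1 binary
  fi
}

# Usage:
#   compose -f kd.yml up -d
#   compose -f kd.yml ps
```

The flags after the command name are the same in both versions, so the rest of this tutorial works unchanged through the wrapper.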

Proof of the pudding...

That's it. You're done: Apache Druid is up and running, Apache Kafka is working, and they are both on the same network and able to talk to each other (we'll confirm this with more biscuits in later tutorials).

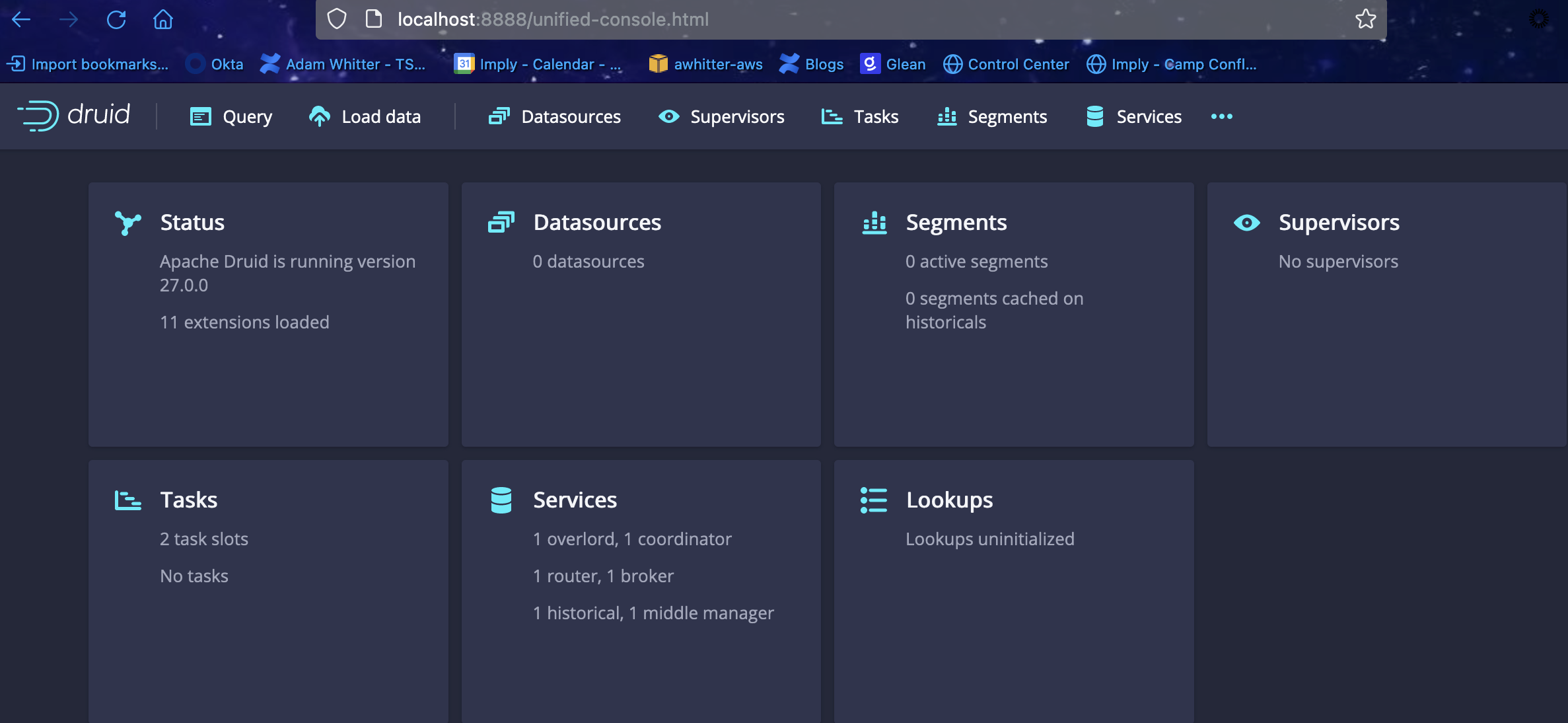

First, let's show that Druid is working. Point your browser at http://localhost:8888 (that's the router, which serves the Druid web console).

You should see the console shown above. It can take a few minutes for everything to start up, so be patient.
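If you'd rather not keep refreshing the browser, you can poll Druid from the command line instead. Druid services answer GET /status/health with true once they're healthy, so this minimal sketch asks the router on port 8888 until it does. It assumes curl is installed; `wait_for_druid` and its retry defaults are hypothetical choices.

```shell
#!/usr/bin/env bash
# Sketch: poll the Druid router's health endpoint until it reports "true".
# wait_for_druid and its defaults (60 tries, 5s apart) are hypothetical.
wait_for_druid() {
  local url="${1:-http://localhost:8888/status/health}"
  local tries="${2:-60}"   # how many times to ask
  local delay="${3:-5}"    # seconds to wait between attempts
  local i=1
  while [ "$i" -le "$tries" ]; do
    # -s hides curl's progress output; a refused connection just prints nothing
    if [ "$(curl -s --max-time 5 "$url")" = "true" ]; then
      echo "Druid is up"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "Druid did not report healthy at $url" >&2
  return 1
}

# Usage: wait_for_druid
```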

Next, let's check that Kafka is working. There's an easy command for that, which lists the topics on the broker:

```
docker exec kafkabroker kafka-topics --bootstrap-server localhost:9091 --list
```

You should see a list of internal topics like this:

```
__consumer_offsets
_confluent-command
_confluent-metrics
_confluent-telemetry-metrics
_confluent_balancer_api_state
```
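The same idea works for Kafka. Here's a sketch that keeps running the kafka-topics command above until the broker answers with a non-empty topic list. It assumes, as in the output above, that the broker already has its internal topics by the time it's ready; `wait_for_kafka` and its defaults are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: retry "kafka-topics --list" inside the kafkabroker container until
# it succeeds with some output. wait_for_kafka and its defaults are hypothetical.
wait_for_kafka() {
  local tries="${1:-30}"   # how many times to ask
  local delay="${2:-5}"    # seconds to wait between attempts
  local i=1 topics
  while [ "$i" -le "$tries" ]; do
    # The assignment's exit status is the docker command's exit status.
    if topics=$(docker exec kafkabroker kafka-topics \
        --bootstrap-server localhost:9091 --list 2>/dev/null) \
       && [ -n "$topics" ]; then
      echo "Kafka is up"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "Kafka did not answer on localhost:9091" >&2
  return 1
}
```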

And there you go. Druid's working. Kafka's running. Our work here is done!
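The sandbox should shut down as easily as it starts up. Here's a sketch using the standard Compose subcommands: stop just halts the containers so the next up -d resumes them, while down removes the containers and the network Compose created (named volumes are left alone unless you add -v). The helper names are hypothetical, and it assumes you run them from the test directory.

```shell
#!/usr/bin/env bash
# Sketch: pause or tear down the sandbox. Run from the directory holding kd.yml.
# sandbox_stop and sandbox_down are hypothetical helper names.
sandbox_stop() {
  # Stops the containers but keeps them, so "up -d" brings them straight back.
  docker-compose -f "${1:-kd.yml}" stop
}

sandbox_down() {
  # Removes the containers and the default network; named volumes survive
  # unless you also pass -v.
  docker-compose -f "${1:-kd.yml}" down
}
```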

What's next?

In the next tutorial we'll make this environment a bit more useful: we'll start generating some data in Kafka, ingest it into Druid, and put some pretty visualisations on top. Watch this space!
