Data Engineering Best Practices: Why Extract, Transform, and Load (ETL) Should Be Decoupled

Kailash Sukumaran

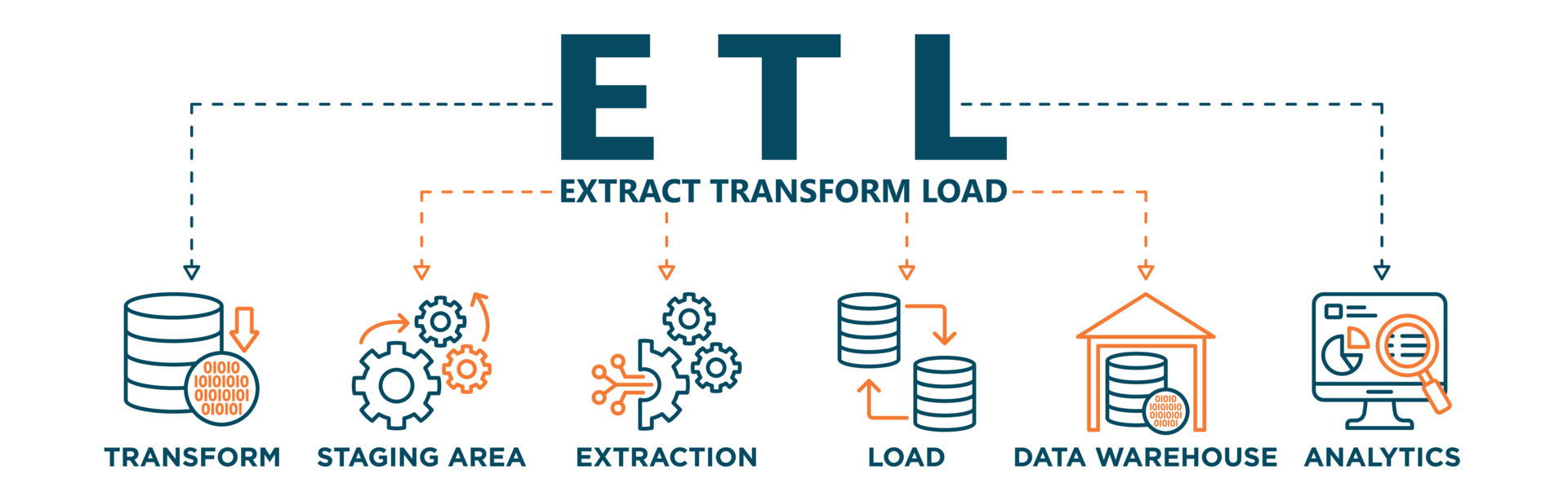

In data management and analytics, data drives informed decision-making. That data is often dispersed across many sources and formats, so it must be collected, transformed, and loaded into a target system for analysis. This process, known as Extract, Transform, and Load (ETL), has traditionally been built as a monolithic operation in which all three steps run as a single job. The trend today is to decouple ETL into distinct stages that run independently and hand data to each other through an intermediate store or queue. In this post, we will explore the reasons why ETL should be decoupled.

1. Scalability and Parallel Processing

Decoupling ETL lets each stage scale independently, so you can allocate resources where they are needed. For example, if extraction needs more capacity after a sudden increase in data volume, you can scale it up without touching the transformation or loading stages. Independent stages can also work in parallel on different batches: while one batch is being loaded, the next can already be transformed. The result is better resource utilization and shorter processing times, especially with large datasets.
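As a minimal sketch of independent scaling, the example below gives each stage its own worker pool size. The stage functions, the `STAGE_WORKERS` mapping, and the `run_stage` helper are hypothetical names chosen for illustration, and a thread pool stands in for whatever compute a real platform would provide.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical per-stage parallelism: extraction gets more workers than
# transformation because (in this sketch) it is the bottleneck.
STAGE_WORKERS = {"extract": 4, "transform": 2}


def extract(source_id: int) -> list[int]:
    # Stand-in for reading one partition from a source system.
    return [source_id * 10 + i for i in range(3)]


def transform(rows: list[int]) -> list[int]:
    # Stand-in for a real transformation.
    return [row * 2 for row in rows]


def run_stage(func, inputs, workers: int) -> list:
    # Each stage runs in its own pool, so its size can change
    # without touching any other stage.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(func, inputs))


extracted = run_stage(extract, range(4), STAGE_WORKERS["extract"])
transformed = run_stage(transform, extracted, STAGE_WORKERS["transform"])
```

Raising `STAGE_WORKERS["extract"]` changes only how extraction runs; the transformation stage is unaffected because it depends only on the extracted output, not on how it was produced.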

2. Fault Tolerance

ETL pipelines often span multiple systems and networks, making them susceptible to failures at various points. Decoupling the process enables better fault tolerance. When one stage encounters an issue, it can be isolated and retried without rerunning the entire ETL flow, provided the output of the preceding stage has been kept. This improves the overall reliability of the pipeline and makes it far more likely that data is processed and loaded successfully despite transient errors.
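The idea of retrying a single stage can be sketched as follows. `TransientError`, `run_with_retry`, and the flaky loader are assumptions made up for this illustration; a production pipeline would typically rely on its orchestrator's retry settings and use a backoff delay.

```python
import time


class TransientError(Exception):
    """Hypothetical error type for failures that are worth retrying."""


def run_with_retry(stage, payload, attempts: int = 3, delay_seconds: float = 0.0):
    # Retry only the failing stage; earlier stages are not rerun.
    for attempt in range(1, attempts + 1):
        try:
            return stage(payload)
        except TransientError:
            if attempt == attempts:
                raise
            time.sleep(delay_seconds)


calls = {"extract": 0, "load": 0}


def extract(_):
    calls["extract"] += 1
    return [1, 2, 3]


def flaky_load(rows):
    # Simulates a target system that is unavailable for the first two attempts.
    calls["load"] += 1
    if calls["load"] < 3:
        raise TransientError("target unavailable")
    return len(rows)


rows = run_with_retry(extract, None)
loaded = run_with_retry(flaky_load, rows)
```

After this runs, `calls["extract"]` is 1 and `calls["load"]` is 3: the load stage was retried twice while the extracted rows were reused rather than fetched again.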

3. Flexibility and Maintenance

In a coupled ETL process, a change to one stage can cascade through the entire pipeline, requiring extensive testing and potentially causing downtime. Decoupling reduces this risk. As long as the format of the data passed between stages stays the same, each stage can be modified, upgraded, or replaced without disrupting the others. This means faster development cycles, easier debugging, and less risk of unintended side effects.
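A small sketch of this: two versions of a transform stage that accept and return the same row shape, so either can be plugged in without editing extraction or loading. All function names and the sample rows are hypothetical.

```python
def extract() -> list[dict]:
    # Stand-in for a real source; returns raw string fields.
    return [{"name": " ada ", "amount": "10"}, {"name": "grace", "amount": "5"}]


def transform_v1(rows: list[dict]) -> list[dict]:
    return [{"name": r["name"].strip(), "amount": int(r["amount"])} for r in rows]


def transform_v2(rows: list[dict]) -> list[dict]:
    # Replacement stage: same input and output shape, different logic.
    return [
        {"name": r["name"].strip().title(), "amount": int(r["amount"])}
        for r in rows
    ]


def load(rows: list[dict], target: list) -> int:
    target.extend(rows)
    return len(rows)


def run(transform) -> list[dict]:
    # The pipeline depends only on the stage's interface, not its internals.
    target: list[dict] = []
    load(transform(extract()), target)
    return target


old = run(transform_v1)
new = run(transform_v2)
```

Swapping `transform_v1` for `transform_v2` required no change to `extract` or `load`, which is the property that keeps testing and rollout scoped to a single stage.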

4. Real-time and Stream Processing

As businesses increasingly demand real-time insights, decoupled ETL provides a foundation for streaming data processing. Data can be extracted, transformed, and loaded as it arrives, enabling organizations to make decisions in near real-time. This is especially valuable in domains such as financial services, where timely data is critical for trading decisions and risk management.
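To illustrate record-at-a-time processing, the sketch below chains three Python generators so that each event is extracted, transformed, and loaded before the next one is read. The event fields and the `trace` list are assumptions for the example; a real streaming pipeline would read from a message broker rather than an in-memory list.

```python
from typing import Iterable, Iterator

trace: list[tuple[str, int]] = []  # records the order in which stages touch events


def extract(events: Iterable[dict]) -> Iterator[dict]:
    for event in events:
        trace.append(("extract", event["id"]))
        yield event


def transform(records: Iterable[dict]) -> Iterator[dict]:
    for record in records:
        trace.append(("transform", record["id"]))
        yield {**record, "notional": record["price"] * record["qty"]}


def load(records: Iterable[dict], sink: list) -> None:
    for record in records:
        trace.append(("load", record["id"]))
        sink.append(record)


events = [
    {"id": 1, "price": 100, "qty": 2},
    {"id": 2, "price": 50, "qty": 3},
]
sink: list[dict] = []
load(transform(extract(events)), sink)
```

The `trace` list shows event 1 passing through all three stages before event 2 is extracted, so the first result is available without waiting for the whole batch.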

5. Better Resource Management

With decoupled ETL, resource allocation becomes more efficient because resources can be sized, and dynamically adjusted, to the requirements of each stage. You don't need to provision the same resources for extraction, transformation, and loading: a memory-heavy transformation can run on large machines while a lightweight load step runs on small ones, which saves cost and improves performance.
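The arithmetic behind this can be sketched with a per-stage resource plan. The `StageResources` type and every number below are hypothetical, chosen only to show the comparison between sizing each stage separately and giving all three stages the resources of the most demanding one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageResources:
    cpu_cores: int
    memory_gb: int


# Hypothetical sizing: transformation is the heavy stage in this sketch.
per_stage = {
    "extract": StageResources(cpu_cores=2, memory_gb=4),
    "transform": StageResources(cpu_cores=8, memory_gb=32),
    "load": StageResources(cpu_cores=2, memory_gb=8),
}


def total_cores(plan: dict[str, StageResources]) -> int:
    return sum(r.cpu_cores for r in plan.values())


def total_memory_gb(plan: dict[str, StageResources]) -> int:
    return sum(r.memory_gb for r in plan.values())


# Uniform provisioning: every stage gets the peak stage's allocation.
peak = max(per_stage.values(), key=lambda r: r.cpu_cores)
uniform = {name: peak for name in per_stage}
```

With these illustrative figures, `total_cores(per_stage)` is 12 against 24 for the uniform plan, and memory is 44 GB against 96 GB.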

6. Improved Monitoring and Logging

Decoupled ETL pipelines allow for granular monitoring and logging at each stage. Metrics such as row counts, duration, and error rates can be recorded per stage, which makes it easier to pinpoint where a failure or slowdown occurred and supports debugging, performance tuning, and error handling. A per-stage record of what was processed also helps meet data governance and auditing requirements.
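One way to capture stage-level metrics is a small decorator around each stage function. This is a sketch using Python's standard `logging` module; the `monitored` decorator, the `metrics` dictionary, and the stage functions are hypothetical names, and a real deployment would more likely send these values to a monitoring system.

```python
import logging
import time
from functools import wraps

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("etl")

metrics: dict[str, dict] = {}  # per-stage metrics, keyed by stage name


def monitored(stage_name: str):
    def decorator(func):
        @wraps(func)
        def wrapper(rows):
            start = time.perf_counter()
            try:
                result = func(rows)
            except Exception:
                # Log which stage failed, then let the error propagate.
                logger.exception("stage=%s failed", stage_name)
                raise
            metrics[stage_name] = {
                "rows_in": len(rows),
                "rows_out": len(result),
                "seconds": time.perf_counter() - start,
            }
            logger.info("stage=%s %s", stage_name, metrics[stage_name])
            return result
        return wrapper
    return decorator


@monitored("extract")
def extract(rows):
    return list(rows)


@monitored("transform")
def transform(rows):
    # Drops negative values, so rows_out can be smaller than rows_in.
    return [r for r in rows if r >= 0]


@monitored("load")
def load(rows):
    return rows


result = load(transform(extract([3, -1, 7])))
```

Here the transform stage's metrics show three rows in and two rows out, which identifies the stage where a record was dropped without inspecting the others.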

Conclusion

Decoupling the ETL process is a strategic move that aligns with the demands of modern data management. It offers benefits in scalability, fault tolerance, flexibility, real-time processing, resource management, and monitoring. By adopting this approach, organizations can get more value from their data while reducing complexity, improving efficiency, and staying agile as requirements change. As you start or refine your data integration work, consider decoupling your ETL processes.

Written by

Kailash Sukumaran

Experienced Data Engineer with a track record of six years, specializing in PySpark/Python and Azure technologies. Proficient in designing and implementing robust Data Engineering architectures and creating data-marts to address complex business challenges. Skilled in end-to-end development, adept at requirement gathering, and known for building strong client relationships across diverse industries, such as insurance, travel, and utilities.