Diving Deep into Node.js Streams

Dr. Alwin Simon

In the digital realm, we often find practical parallels with the real world.

One of the most powerful tools in the world of web development, particularly within the Node.js ecosystem, is streams.

But what exactly are streams and why are they so transformative?

If you've ever watched a movie online, you've experienced a form of streaming.

Platforms like Netflix allow you to watch the movie as it's being downloaded, chunk by chunk.

This process of handling data in manageable pieces is the essence of streams in Node.js.

Breaking Down the Basics

At its core, streaming is the act of processing data piece by piece, rather than waiting for the entire dataset.

In Node.js, a stream hands your code each chunk as soon as it is available instead of waiting for all the data to arrive. Only a small buffer has to sit in memory at any moment, which makes streams especially efficient for large datasets and real-time data.
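To make the chunk-by-chunk idea concrete, here is a minimal sketch. It assumes an in-memory source built with Readable.from as a stand-in for a large file or network response, and countChunks is a hypothetical helper name, not part of Node.js. The helper listens for 'data' events and keeps only running totals, never the whole payload.

```javascript
const { Readable } = require('stream');

// Hypothetical helper: consume a stream chunk by chunk and report totals.
// Only the current chunk is referenced at any time; nothing is accumulated.
function countChunks(readable) {
  return new Promise((resolve, reject) => {
    let chunks = 0;
    let bytes = 0;

    readable.on('data', (chunk) => {
      chunks += 1;
      bytes += chunk.length;
    });
    readable.on('end', () => resolve({ chunks, bytes }));
    readable.on('error', reject);
  });
}

// Demo: three small buffers standing in for chunks of a much larger source.
const source = Readable.from([
  Buffer.from('first '),
  Buffer.from('second '),
  Buffer.from('third'),
]);

countChunks(source).then(({ chunks, bytes }) => {
  // prints: received 3 chunks, 18 bytes in total
  console.log(`received ${chunks} chunks, ${bytes} bytes in total`);
});
```

The same helper should work unchanged with fs.createReadStream, since it relies only on the 'data', 'end', and 'error' events that every readable stream emits.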

The Power and Need for Streams

Imagine trying to transfer a large set of encyclopedias from one room to another. Instead of waiting to move them all at once, wouldn't it be more efficient to transfer a few books at a time?

This "piece-by-piece" approach is not only manageable but also allows you to start organizing the books in the new room even before you've moved them all.

This is the efficiency that streams introduce:

Performance: Only a few chunks need to be in memory at a time, so memory use stays low even when the full dataset is very large.

Real-time Processing: For real-time data applications, streams let you process each piece of data as soon as it arrives.

Error Handling: A failure surfaces at the chunk where it occurs, so you can stop or recover early instead of discovering the problem after the whole dataset has been loaded.

Stream Types

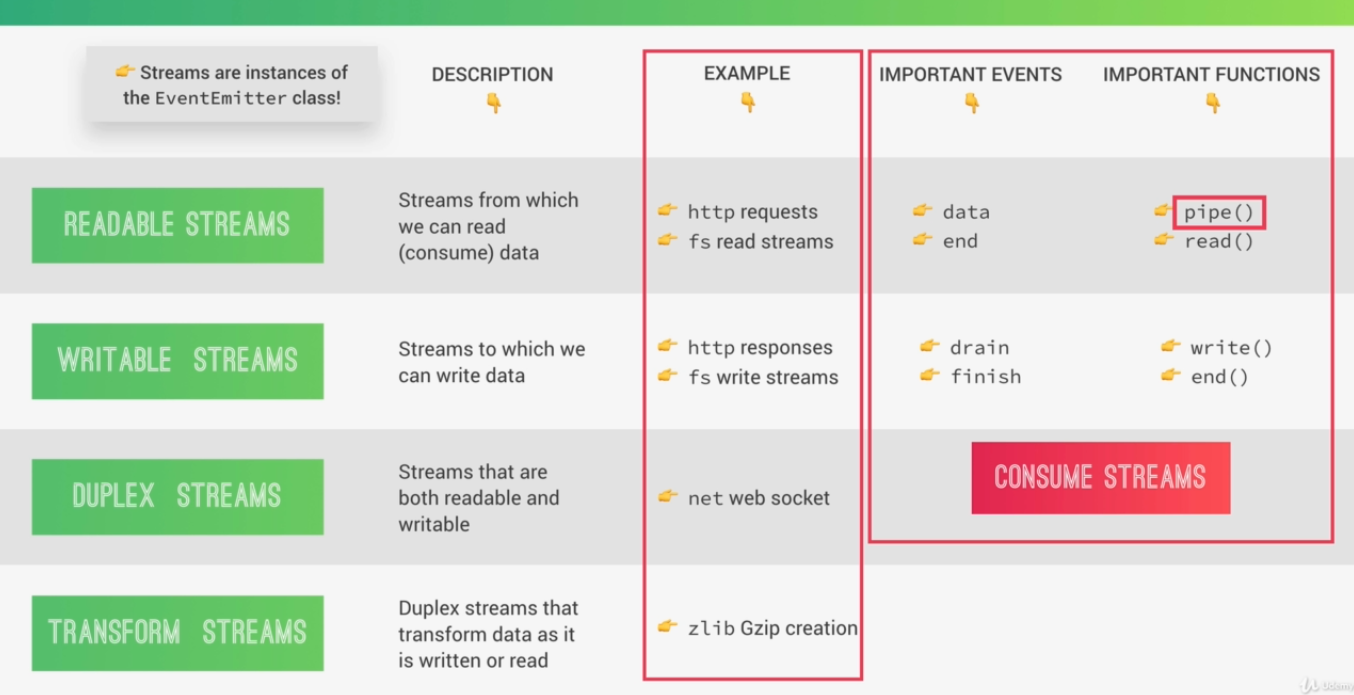

Node.js categorizes streams into four primary types:

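Readable Streams: Analogous to a book you're reading. A readable stream is a source of data you can consume but not modify directly, such as the stream returned by fs.createReadStream.

Writable Streams: The opposite of readable streams, akin to writing in a journal where you're producing content. A writable stream is a destination for data, such as the stream returned by fs.createWriteStream.

Duplex Streams: Think of a two-way walkie-talkie, where you can both listen (read data) and talk (write data). A TCP socket is a typical example.

Transform Streams: A kind of duplex stream that takes data, processes it, and outputs the modified data, much like a live translator in a conversation. The gzip stream returned by zlib.createGzip is one example.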
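The four types are easier to tell apart in code. The following is a small sketch, not a canonical pattern: createUppercase, createCollector, and shout are illustrative names chosen for this example. It connects a readable source to a custom Transform and then to a custom Writable. A Duplex stream is not built separately here because Transform is itself a specialised Duplex.

```javascript
const { Readable, Transform, Writable } = require('stream');

// Transform: receives chunks and emits modified chunks (upper-cased text).
function createUppercase() {
  return new Transform({
    transform(chunk, encoding, callback) {
      callback(null, chunk.toString().toUpperCase());
    },
  });
}

// Writable: a sink that appends everything written to it to `parts`.
function createCollector(parts) {
  return new Writable({
    write(chunk, encoding, callback) {
      parts.push(chunk.toString());
      callback();
    },
  });
}

// Readable -> Transform -> Writable; resolves with the collected text.
function shout(words) {
  return new Promise((resolve, reject) => {
    const parts = [];
    Readable.from(words)
      .pipe(createUppercase())
      .pipe(createCollector(parts))
      .on('finish', () => resolve(parts.join('')))
      .on('error', reject);
  });
}

// prints: STREAMS ARE COMPOSABLE
shout(['streams ', 'are ', 'composable']).then((text) => console.log(text));
```

Because each stage only knows about chunks, the uppercase step could be swapped for any other Transform without touching the source or the sink.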

Streams in Action: Practical Scenarios

1. File Manipulation: Reading and writing large files can be memory-intensive. With streams, you handle files chunk by chunk, reducing memory overhead.

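```javascript
const fs = require('fs');

// Read the source file in chunks instead of loading it all into memory.
const readableStream = fs.createReadStream('largeInputFile.txt');
const writableStream = fs.createWriteStream('outputFile.txt');

// pipe() forwards each chunk to the destination as it is read.
readableStream.pipe(writableStream);
```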

2. Data Compression: If you need to compress a large file, streams can handle this seamlessly.

```javascript
const fs = require('fs');
const zlib = require('zlib');

const readableStream = fs.createReadStream('largeFile.txt');
const writableStream = fs.createWriteStream('compressedFile.gz');
const gzip = zlib.createGzip(); // Transform stream that gzips each chunk

// Read -> compress -> write, one chunk at a time.
readableStream.pipe(gzip).pipe(writableStream);
```

3. Real-time Data Processing: Streams shine in applications that require real-time data processing, such as stock trading platforms or live chat applications.
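As a rough illustration of the real-time case, the sketch below simulates a live price feed and reacts to each tick as it arrives. Everything about the feed is an assumption made for the example: createTickFeed, trackHighestPrice, the 'DEMO' symbol, and the timer-based source are hypothetical stand-ins for a real market data connection. The consumer uses for await, which readable streams support through async iteration.

```javascript
const { Readable } = require('stream');

// Hypothetical live feed: emits one tick object every `intervalMs` ms.
function createTickFeed(prices, intervalMs = 10) {
  async function* generate() {
    for (const price of prices) {
      await new Promise((resolve) => setTimeout(resolve, intervalMs));
      yield { symbol: 'DEMO', price };
    }
  }
  // Readable.from accepts async generators and produces an object-mode stream.
  return Readable.from(generate());
}

// Handle each tick as soon as it arrives, keeping only a running maximum.
async function trackHighestPrice(feed) {
  let highest = -Infinity;
  for await (const tick of feed) {
    if (tick.price > highest) {
      highest = tick.price;
      console.log(`new high for ${tick.symbol}: ${tick.price}`);
    }
  }
  return highest;
}

trackHighestPrice(createTickFeed([101.5, 100.9, 102.3]));
```

The loop body runs once per tick without waiting for the feed to finish, which is the property that matters for chat messages or trading data.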

Best Practices and Common Pitfalls

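Error Handling: Always attach error handlers to your streams to manage unexpected issues. Keep in mind that pipe() does not forward errors from one stream to the next, so each stream in a chain needs its own 'error' handler.

Backpressure: This happens when data production outpaces consumption, so unconsumed chunks pile up in memory. pipe() manages this for you; if you call write() directly, stop writing when it returns false and resume once the 'drain' event fires.

Clean Up: Ensure streams are closed or destroyed when an operation fails or is abandoned, so that file descriptors and buffers are released rather than leaked.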
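One way to cover all three practices at once is stream.pipeline, which is part of Node.js core. The sketch below is an illustration under assumptions rather than a recommended production setup: createNumberParser, createSlowSink, and sumNumbers are hypothetical names, and the slow sink with a one-item buffer exists only to force backpressure. pipeline reports the first error from any stage through a single callback and destroys the streams that are still open when a stage fails.

```javascript
const { Readable, Transform, Writable, pipeline } = require('stream');

// Transform that parses each chunk as a number and fails on invalid input.
function createNumberParser() {
  return new Transform({
    objectMode: true,
    transform(text, encoding, callback) {
      const value = Number(text);
      if (Number.isNaN(value)) {
        callback(new Error(`not a number: ${text}`));
      } else {
        callback(null, value);
      }
    },
  });
}

// Deliberately slow consumer with a one-item buffer, so upstream must wait.
function createSlowSink(received, delayMs = 5) {
  return new Writable({
    objectMode: true,
    highWaterMark: 1,
    write(value, encoding, callback) {
      setTimeout(() => {
        received.push(value);
        callback();
      }, delayMs);
    },
  });
}

// Wire the stages together; one callback receives success or the first error.
function sumNumbers(source) {
  return new Promise((resolve, reject) => {
    const received = [];
    pipeline(source, createNumberParser(), createSlowSink(received), (err) => {
      if (err) {
        reject(err);
      } else {
        resolve(received.reduce((total, value) => total + value, 0));
      }
    });
  });
}

sumNumbers(Readable.from(['1', '2', '3']))
  .then((total) => console.log('total:', total))
  .catch((err) => console.error('pipeline failed:', err.message));
```

Compared with chaining pipe() calls, this keeps error handling in one place, which is why it is often a reasonable default for multi-stage chains.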

Conclusion

Streams are a cornerstone in the Node.js world.

They tackle challenges posed by data-heavy applications by breaking data into chunks and processing it progressively.

With the insights from this guide, you're poised to leverage the power of streams in your Node.js endeavors.

For further exploration, the official Node.js documentation is an invaluable resource. Dive in, and happy streaming! 🌊

