🤖 Day 3 of #30DaysOfAIwithAadi

Aaditya Champaneri

Journey into Neural Networks and Backpropagation

Today, let's delve into the fascinating world of neural networks and the ingenious technique called backpropagation. These concepts are the backbone of modern machine learning and deep learning, driving innovations like image recognition, natural language processing, and more.

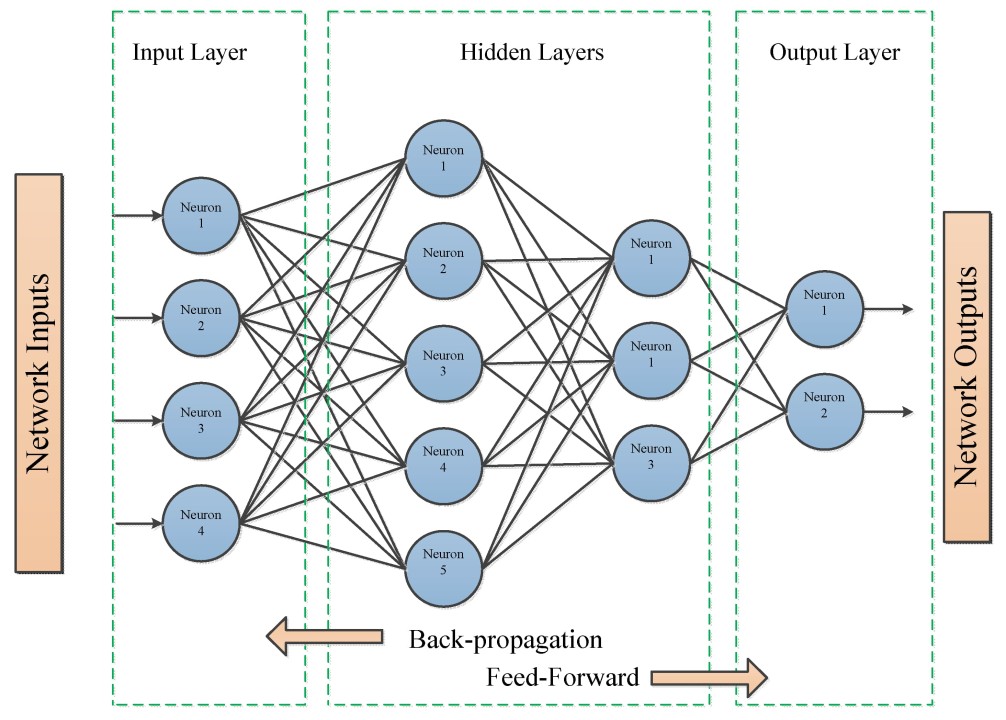

Neural Networks: At its core, a neural network is inspired by the human brain's structure. It's composed of interconnected nodes, or neurons, organized into layers. The three primary layers are the input layer, hidden layers (one or more), and the output layer. Neurons in each layer process information and transmit it to the next layer, allowing the network to learn complex patterns from data.
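To make the layer structure concrete, here is a minimal sketch of information flowing from an input layer through one hidden layer to an output layer. The layer sizes, the weight values, and the choice of a sigmoid activation are all assumptions made for illustration; they are not taken from any particular model.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Each neuron takes a weighted sum of its inputs, adds a bias, applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


# Hypothetical network: 3 inputs -> 2 hidden neurons -> 1 output neuron.
# The numbers below are arbitrary illustrative values, not trained weights.
hidden_weights = [[0.2, -0.4, 0.1], [0.7, 0.3, -0.5]]
hidden_biases = [0.0, 0.1]
output_weights = [[0.6, -0.3]]
output_biases = [0.05]


def forward(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer_forward(inputs, hidden_weights, hidden_biases)
    return layer_forward(hidden, output_weights, output_biases)


prediction = forward([1.0, 0.5, -1.0])
print(prediction)  # a list with one value between 0 and 1
```

Each layer only sees the outputs of the layer before it, which is what lets later layers build on the simpler features found earlier.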

For example, consider image recognition. Each neuron might analyze a specific feature, like edges or colors. As you move through the layers, neurons collectively recognize higher-level patterns, ultimately identifying objects in the image.

- Example: handwritten digit recognition

Now, let's understand how it works:

Imagine you want to build a neural network that can recognize handwritten digits, like those in postal codes on envelopes. This application is a classic example of how neural networks can be used for image classification.
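As a rough sketch of how a digit image could be handed to such a network: the pixels are flattened into one list of numbers for the input layer, and the label becomes a list of ten values, one per digit. The tiny 5x3 grid below is a made-up stand-in for a real scanned digit, and the helper names are hypothetical.

```python
# Hypothetical 5x3 black-and-white "image" of the digit 1 (1 = ink, 0 = blank).
# Real digit images are larger; this grid is only for illustration.
image = [
    [0, 1, 0],
    [1, 1, 0],
    [0, 1, 0],
    [0, 1, 0],
    [1, 1, 1],
]
label = 1


def flatten(grid):
    """Turn a 2D pixel grid into the flat list the input layer expects."""
    return [float(pixel) for row in grid for pixel in row]


def one_hot(digit, num_classes=10):
    """Encode a digit 0-9 as ten values with a single 1.0 at the digit's index."""
    return [1.0 if i == digit else 0.0 for i in range(num_classes)]


def predicted_digit(output_scores):
    """The network's answer is the index of the highest output score."""
    return max(range(len(output_scores)), key=lambda i: output_scores[i])


inputs = flatten(image)   # 15 input values, one per pixel
target = one_hot(label)   # the output the network should learn to produce
```

With this encoding, the input layer has one neuron per pixel and the output layer has ten neurons, one per possible digit.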

Backpropagation: Now, imagine teaching a neural network to perform a task, like recognizing handwritten digits. You provide it with labeled data (images of digits along with their actual values) to learn from. This is where backpropagation comes in.

Backpropagation is a fundamental technique in training artificial neural networks. It is most often used in supervised learning, where it works out how the network's internal parameters, such as weights and biases, should change so that the network's performance improves.

Here's how it works:

1. Forward Pass: During the training process, input data is fed forward through the neural network. Each neuron in a layer processes its input and passes the result to the neurons in the next layer. This continues through the layers until the network produces an output.

2. Error Calculation: The output generated by the network is compared to the expected or target output (ground truth). The difference between the predicted output and the target output, as measured by a loss function, is known as the error or loss.

3. Backward Pass (Backpropagation): Backpropagation begins with the computed error. It then works backward through the network, layer by layer. For each layer, it calculates how much each neuron in that layer contributed to the error.

4. Gradient Calculation: Using calculus and the chain rule, backpropagation calculates the gradient of the error with respect to every weight and bias. Each gradient indicates the direction and rate at which the error changes when that parameter changes.

5. Gradient Descent (Weight and Bias Updates): The calculated gradients are used to update the weights and biases of the neurons in each layer. Each parameter is moved a small step in the opposite direction of its gradient, with the step size set by a learning rate, which reduces the error.

6. Repeat: Steps 1 to 5 are repeated for many iterations, often over multiple epochs (full passes through the training data), gradually refining the network's parameters. As the training progresses, the network's ability to make accurate predictions improves.

The key idea behind backpropagation is to iteratively fine-tune the neural network's parameters to minimize the prediction error. This process continues until the network reaches a point where further adjustments result in negligible improvements.
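The forward pass, error calculation, backward pass, and parameter updates described above can be sketched in plain Python. This is a minimal illustration, not a production implementation: the XOR toy dataset, the sigmoid activation, the squared-error loss, the hidden layer size, and the learning rate are all assumptions chosen to keep the example small.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(epochs=5000, learning_rate=0.5, hidden_size=4, seed=0):
    """Train a tiny 2 -> hidden_size -> 1 network on XOR; return the loss per epoch."""
    rng = random.Random(seed)
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

    # The network starts with random weights and biases.
    w_hidden = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden_size)]
    b_hidden = [rng.uniform(-1, 1) for _ in range(hidden_size)]
    w_out = [rng.uniform(-1, 1) for _ in range(hidden_size)]
    b_out = rng.uniform(-1, 1)

    losses = []
    for _ in range(epochs):
        epoch_loss = 0.0
        for x, target in data:
            # Forward pass: input -> hidden layer -> output.
            h = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
                 for ws, b in zip(w_hidden, b_hidden)]
            y = sigmoid(sum(w * hi for w, hi in zip(w_out, h)) + b_out)

            # Error calculation: squared error for this sample.
            epoch_loss += 0.5 * (y - target) ** 2

            # Backward pass: chain rule, starting at the output neuron.
            delta_out = (y - target) * y * (1.0 - y)
            delta_hidden = [delta_out * w_out[j] * h[j] * (1.0 - h[j])
                            for j in range(hidden_size)]

            # Gradient descent: step each parameter against its gradient.
            for j in range(hidden_size):
                w_out[j] -= learning_rate * delta_out * h[j]
                b_hidden[j] -= learning_rate * delta_hidden[j]
                for i in range(2):
                    w_hidden[j][i] -= learning_rate * delta_hidden[j] * x[i]
            b_out -= learning_rate * delta_out
        losses.append(epoch_loss / len(data))
    return losses


losses = train_xor()
print("first epoch loss:", losses[0])
print("last epoch loss: ", losses[-1])
```

Printing the first and last epoch losses shows the average error shrinking as the loop repeats, which is the "repeat" step in action.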

To recap, backpropagation lets a neural network adjust its internal parameters (weights and biases) during training to improve its performance:

The network makes predictions, and you compare them to the actual labels to calculate an error.

Backpropagation propagates this error backward through the network, layer by layer, to determine how much each neuron contributed to it.

Using calculus, the algorithm then adjusts each weight and bias slightly in the opposite direction of its gradient, aiming to minimize the error.

This process repeats over many data samples and multiple passes through the dataset (epochs), gradually refining the network's ability to make accurate predictions.
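The update rule itself is easiest to see with a single weight. In this sketch, a one-weight "network" predicts `weight * x`, the error is the squared difference from the target, and each step moves the weight against the gradient. The function name, the input, the target, and the learning rate are illustrative assumptions.

```python
def gradient_descent_single_weight(x, target, weight=0.0, learning_rate=0.1, steps=50):
    """Repeatedly nudge one weight so that weight * x approaches the target."""
    for _ in range(steps):
        prediction = weight * x
        # Derivative of (weight * x - target) ** 2 with respect to weight.
        gradient = 2.0 * (prediction - target) * x
        # Step in the opposite direction of the gradient.
        weight -= learning_rate * gradient
    return weight


# With x = 2 and target = 4, the error is zero when the weight equals 2.
learned = gradient_descent_single_weight(x=2.0, target=4.0)
print(learned)
```

A real network applies the same rule to every weight and bias at once, using the gradients that backpropagation computes.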

Backpropagation is the foundation for training deep neural networks and has enabled significant advancements in various fields, including image and speech recognition, natural language processing, and autonomous systems. It's a critical tool for harnessing the power of neural networks and making them effective at solving complex real-world problems.

Let's look at a real-world example: Imagine you're building a spam email filter using a neural network. During training, the network examines thousands of emails, some labeled as spam and others as not spam. The network starts with random weights and biases. As it processes emails, backpropagation identifies which neurons contributed to correct and incorrect classifications. It then tweaks the parameters to reduce errors. Over time, the network becomes adept at distinguishing spam from legitimate emails.
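Here is a deliberately tiny sketch of that idea. To stay short it uses a single output neuron rather than a multi-layer network, so the "backward pass" is just one gradient step per email. The vocabulary, the four training emails, and the function names are all made up for illustration.

```python
import math
import random

# Hypothetical vocabulary: each word becomes one input feature.
VOCABULARY = ["free", "prize", "winner", "meeting", "report", "project"]


def featurize(text):
    """Mark with 1.0 each vocabulary word that appears in the email."""
    words = text.lower().split()
    return [1.0 if word in words else 0.0 for word in VOCABULARY]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def spam_probability(text, weights, bias):
    """Forward pass: weighted sum of the features, squashed into (0, 1)."""
    x = featurize(text)
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_spam_filter(emails, epochs=200, learning_rate=0.5, seed=0):
    rng = random.Random(seed)
    # Start with small random weights and a small random bias.
    weights = [rng.uniform(-0.1, 0.1) for _ in VOCABULARY]
    bias = rng.uniform(-0.1, 0.1)
    for _ in range(epochs):
        for text, is_spam in emails:
            x = featurize(text)
            error = spam_probability(text, weights, bias) - is_spam
            # Cross-entropy gradient for a sigmoid neuron is error * input.
            weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * error
    return weights, bias


# Made-up training emails: 1.0 = spam, 0.0 = not spam.
training_emails = [
    ("free prize inside", 1.0),
    ("you are a winner claim your free prize", 1.0),
    ("project meeting at noon", 0.0),
    ("quarterly report for the project", 0.0),
]
weights, bias = train_spam_filter(training_emails)
print(spam_probability("claim your free prize", weights, bias))
print(spam_probability("meeting about the report", weights, bias))
```

After training, words that appeared only in spam end up with positive weights and words that appeared only in legitimate emails end up with negative ones, so the first probability comes out high and the second low.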

Understanding neural networks and backpropagation is like having the keys to a world of AI possibilities. From predicting stock prices to translating languages, these concepts empower you to tackle a myriad of complex tasks, for they are the engines propelling AI innovations into the future. 🚀🧠
