Linux wget and curl Commands for Efficient File Retrieval and Data Transfer

Subash Neupane

In this post on my DevOps journey, we will learn about the wget and curl commands.

wget and curl are two powerful command-line utilities for retrieving files and data from the internet. They are versatile and easy to use, and they are essential for tasks such as web scraping, downloading large files, and automating data transfers. In this post, we'll go through the fundamentals of wget and curl and walk through real-world examples that show how to get the most out of them.

What is wget?

wget is a command-line utility for non-interactive downloading of files from the web: it can run in the background or inside scripts without waiting for user input. It supports the HTTP, HTTPS, and FTP protocols, which makes it a handy tool for many downloading tasks.

Basic Usage

The basic syntax for using wget is simple:

wget [options] [URL]

Here are some common options:

-P: Specify the directory to save downloaded files in.

-O: Save the download under a different file name.

-r: Download recursively, following the links on downloaded pages.

-np: Don't ascend to the parent directory during a recursive download.

-nc: Skip the download if the file already exists (no-clobber).

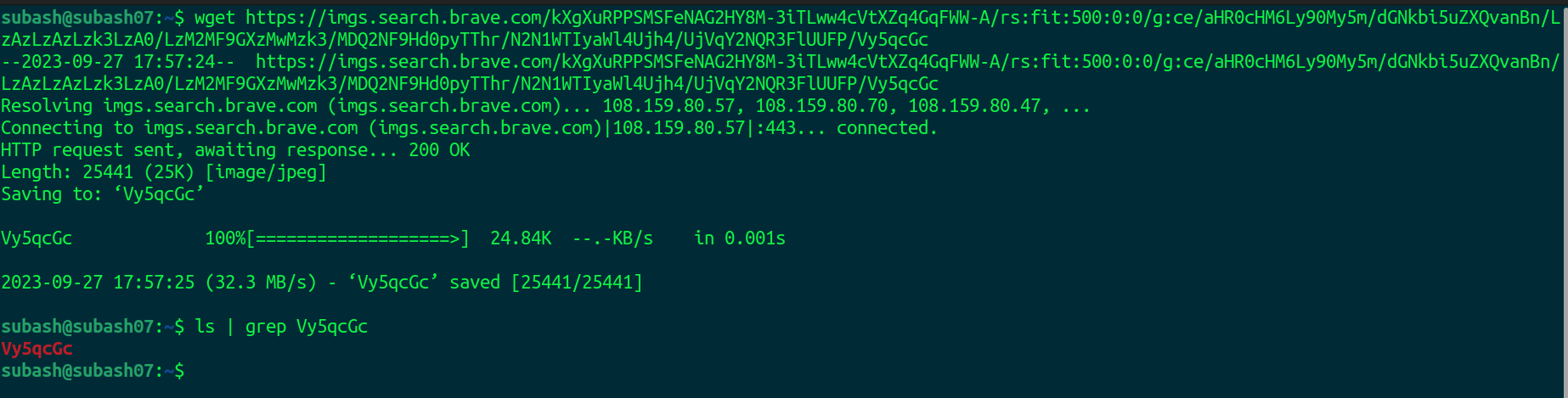

Here, the image is downloaded with a basic wget command.

To Save to a Specific Directory

wget -P /directory_path [url]
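The -P and -nc options combine well when a script may run more than once. The sketch below wraps them in a small shell function; the name fetch_once and the example URL are hypothetical, chosen only for illustration. wget creates the target directory if it does not exist yet.

```shell
# fetch_once is a hypothetical helper name used for illustration.
# Usage: fetch_once DIR URL
fetch_once() {
    # -nc: skip the download if DIR already contains a file with that name
    # -P:  save the file into DIR instead of the current directory
    wget -nc -P "$1" "$2"
}
```

For example, fetch_once ./images https://example.com/photo.jpg saves photo.jpg into ./images on the first run and skips it on later runs.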

To Download Entire Websites

wget -r [url]

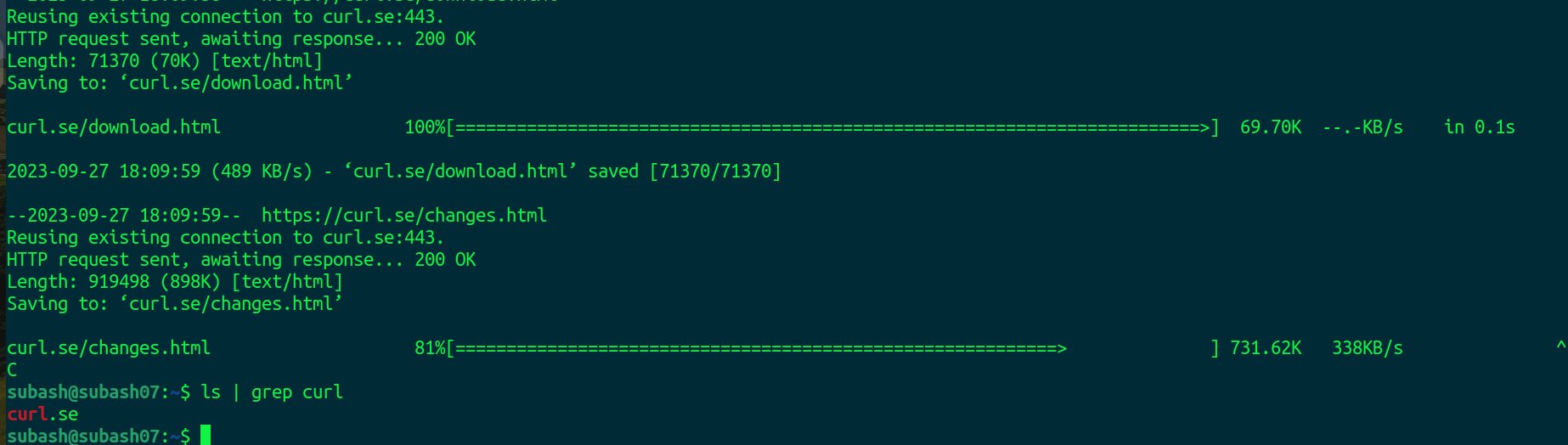

Using the wget -r https://curl.se/ command, we started downloading the entire website. I stopped it with Ctrl+C because we didn't need the whole site. As the image shows, the downloaded content is stored in a directory named curl.se.
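Because -r follows links up to five levels deep by default, a full recursive download can grow large quickly, which is why it had to be stopped with Ctrl+C above. Here is a minimal sketch of a gentler variant, assuming a hypothetical helper named mirror_shallow: it limits the depth with -l, stays below the starting directory with -np, and pauses between requests with --wait.

```shell
# mirror_shallow is a hypothetical helper name used for illustration.
# Usage: mirror_shallow URL [DEPTH]
mirror_shallow() {
    # Follow links only one level deep unless a depth is given.
    depth=${2:-1}
    # -r: recursive download; -l: maximum recursion depth
    # -np: never ascend to the parent directory
    # --wait=1: pause one second between requests to go easy on the server
    wget -r -l "$depth" -np --wait=1 "$1"
}
```

For example, mirror_shallow https://curl.se/docs/ fetches the docs page and the pages it links to directly, then stops.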

To Rename the Downloaded File

wget -O new_name [url]

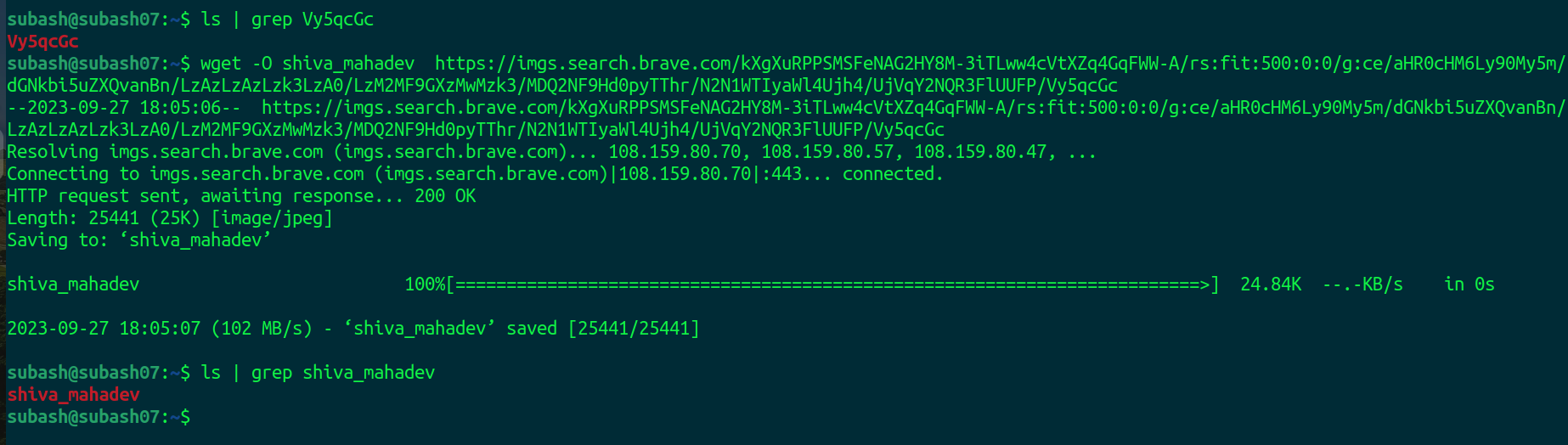

Previously, the image was saved as "Vy5qcGc", but this time the -O option saved the same image as shiva_mahadev.
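-O also accepts - as the file name, in which case wget writes the download to standard output instead of a file. That is handy for piping a page straight into another command. A sketch, with print_page as a hypothetical helper name:

```shell
# print_page is a hypothetical helper name used for illustration.
# Usage: print_page URL
print_page() {
    # -q: hide wget's progress messages so only the content is printed
    # -O -: write the downloaded content to standard output
    wget -q -O - "$1"
}
```

For example, print_page https://example.com | head -n 5 shows the first five lines of the page without saving anything to disk.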

What is curl?

curl stands for "Client URL." It is a versatile command-line tool for transferring data with URLs. curl supports a wide range of protocols, including HTTP, HTTPS, FTP, SCP, and more.

Basic Usage

The basic syntax for using curl is:

curl [options] [URL]

Here are some common options:

-o: Save the output to the given file name.

-O: Save the file under its original (remote) name.

-L: Follow redirects.

-H: Add a custom HTTP header to the request.

-d: Send data in an HTTP POST request.
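-H and -d are often used together to send JSON to an API. The sketch below assumes a hypothetical helper named post_json; https://httpbin.org/post in the usage example is a public test endpoint that echoes the request back, used here only as a placeholder. When -d is given, curl sends a POST request automatically, so -X POST is not needed.

```shell
# post_json is a hypothetical helper name used for illustration.
# Usage: post_json URL JSON_BODY
post_json() {
    # -s: silent mode, no progress meter
    # -H: replace the default form Content-Type so the server parses the body as JSON
    # -d: send JSON_BODY in the body of a POST request
    curl -s -H 'Content-Type: application/json' -d "$2" "$1"
}
```

For example: post_json https://httpbin.org/post '{"tool": "curl"}'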

Download a File

curl -O [url]

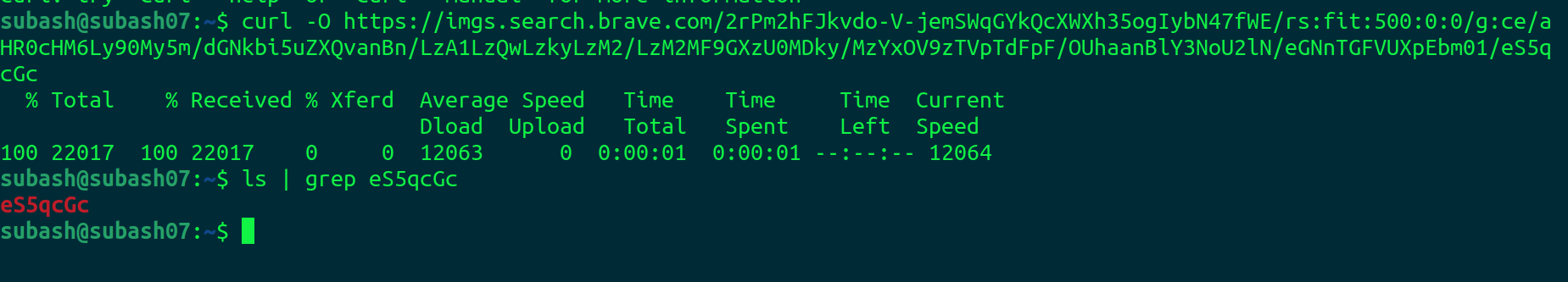

The -O option saves the file under its original name. Here, we downloaded eS5qcGc, which is an image.
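In scripts, -O is usually paired with -L and -f: without -L, curl saves the redirect response instead of the real file, and without -f, an HTTP error page (for example, a 404) would be saved under the expected file name. A sketch with a hypothetical helper named fetch:

```shell
# fetch is a hypothetical helper name used for illustration.
# Usage: fetch URL
fetch() {
    # -f: exit with an error on HTTP 4xx/5xx instead of saving the error page
    # -L: follow redirects to the final location
    # -O: name the local file after the last part of the URL given here
    curl -f -L -O "$1"
}
```

Note that -O takes the file name from the URL you pass, not from the redirect target.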

Download Multiple Files

To download multiple files at once, use one -O option per URL, each followed by the URL of a file you want to download:

curl -O [url1] -O [url2]
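Repeating -O gets tedious for long lists. curl's --remote-name-all option applies -O to every URL on the command line, so a helper can accept any number of URLs. The function name fetch_all is hypothetical.

```shell
# fetch_all is a hypothetical helper name used for illustration.
# Usage: fetch_all URL [URL...]
fetch_all() {
    # --remote-name-all: save every URL under its remote file name, as if each had -O
    # -f -L: fail on HTTP errors and follow redirects
    curl -f -L --remote-name-all "$@"
}
```

For example, fetch_all https://example.com/a.png https://example.com/b.png saves a.png and b.png in the current directory.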

Resume a Download

You can resume a download with the -C - option. The dash after -C tells curl to work out where to resume from the size of the partially downloaded file. This is useful if your connection drops while downloading a large file: instead of starting from scratch, you can continue the previous download.

# start the download

curl -O [url]

# the download suddenly stopped; resume it where it left off

curl -C - -O [url]

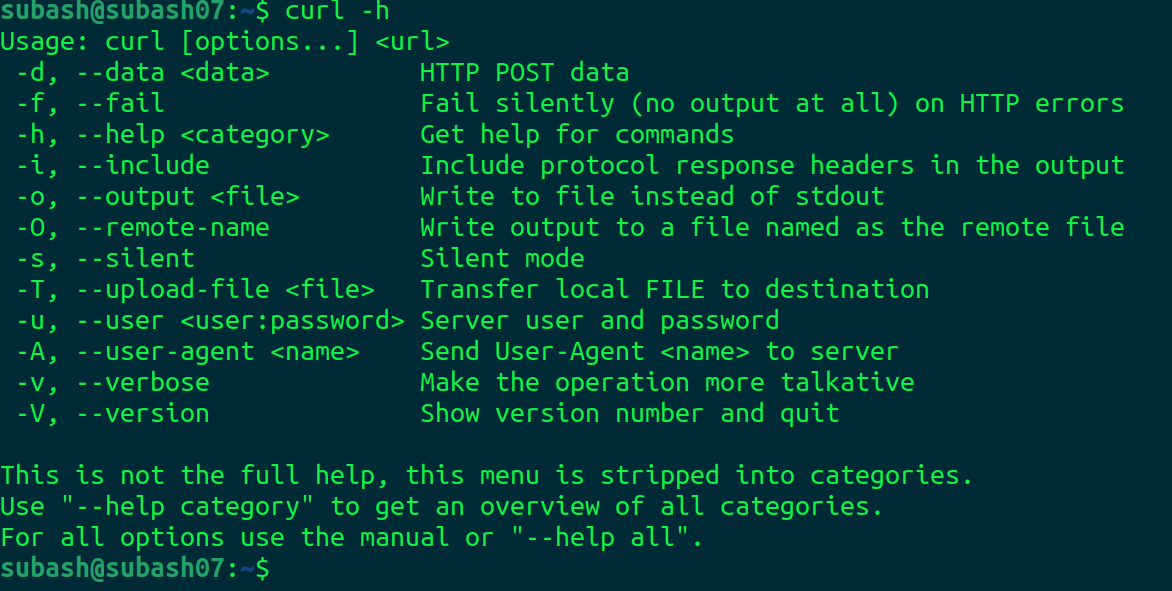
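On an unreliable connection, the resume step can be repeated automatically. Below is a minimal sketch, assuming a hypothetical helper named resume_until_done and an arbitrary default of five attempts: it reruns curl with -C - until the transfer succeeds, so each attempt continues from the partial file left by the previous one. curl also has a built-in --retry option; the explicit loop simply makes the resume behaviour visible.

```shell
# resume_until_done is a hypothetical helper name used for illustration.
# Usage: resume_until_done URL [MAX_TRIES]
resume_until_done() {
    url=$1
    max=${2:-5}   # arbitrary default number of attempts
    try=1
    while [ "$try" -le "$max" ]; do
        # -C -: continue from the size of the partial file already on disk
        # -f -L -O: fail on HTTP errors, follow redirects, keep the remote name
        if curl -f -L -C - -O "$url"; then
            return 0
        fi
        echo "attempt $try of $max failed" >&2
        try=$((try + 1))
        sleep 2   # short pause before the next attempt
    done
    return 1
}
```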

curl Usage Help

Run curl -h (or curl --help) to quickly get a list of useful command-line options with short descriptions.
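The full help output is long, so filtering it with grep is a quick way to look up a single option. A sketch with a hypothetical helper named find_option; it assumes curl 7.73.0 or newer, where plain curl -h shows only the most important options and curl --help all lists every option.

```shell
# find_option is a hypothetical helper name used for illustration.
# Usage: find_option curl|wget PATTERN
# curl --help all prints every curl option (curl 7.73.0 and newer);
# wget --help already lists all of wget's options.
find_option() {
    case $1 in
        curl) curl --help all | grep -e "$2" ;;
        wget) wget --help | grep -e "$2" ;;
        *) echo "unsupported tool: $1" >&2; return 2 ;;
    esac
}
```

For example, find_option wget --limit-rate prints the help line for wget's --limit-rate option.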

Both wget and curl also offer advanced features such as authentication and rate limiting, and curl (version 7.66.0 and later) can run several downloads in parallel.
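A few of these advanced options are sketched below as hypothetical helper functions (the names, the 500k speed cap, and the arguments are placeholders for illustration). wget takes credentials with --user and --password, curl with -u USER:PASSWORD; both tools accept --limit-rate; and curl 7.66.0 or newer can run several transfers at once with -Z (--parallel). Keep in mind that a password typed on the command line can be visible to other users on the machine, for example through ps; curl prompts for the password instead if you pass only -u USER.

```shell
# Hypothetical helper names used for illustration.
# Usage: wget_auth_limited USER PASSWORD URL
wget_auth_limited() {
    # --user/--password: HTTP or FTP credentials
    # --limit-rate=500k: cap the download speed at about 500 kilobytes per second
    wget --user="$1" --password="$2" --limit-rate=500k "$3"
}

# Usage: curl_auth_limited USER PASSWORD URL
curl_auth_limited() {
    # -u USER:PASSWORD: credentials; --limit-rate 500k: same speed cap as above
    curl -u "$1:$2" --limit-rate 500k -f -L -O "$3"
}

# Usage: curl_parallel URL [URL...]   (requires curl 7.66.0 or newer)
curl_parallel() {
    # -Z: run the transfers in parallel instead of one after another
    # --remote-name-all: save each URL under its remote file name
    curl -Z --remote-name-all "$@"
}
```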

wget and curl are essential programs for downloading files and sending data from the command line. Learning how to use them will greatly increase your productivity, whether you need to automate data retrieval, carry out web scraping, or just download a file. To get the most out of wget and curl, start experimenting with them right away.

Happy Learning!!

Written by

Subash Neupane

Computer Science graduate