Adaboost: The Secret Sauce Behind High-Performance Models

Saurabh Naik

Introduction:

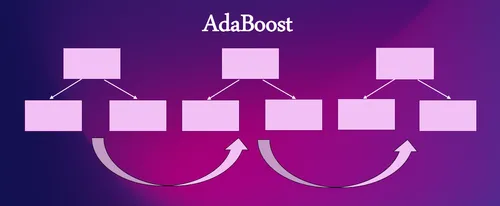

In the ever-evolving landscape of machine learning algorithms, there exists a powerful ensemble method known as Adaboost (Adaptive Boosting). Adaboost is a technique that combines the predictions of multiple weak learners into a robust and accurate ensemble model. In this technical blog post, we will embark on a journey to demystify Adaboost, exploring its inner workings, advantages, and real-world applications.

The Essence of Adaboost

At its core, Adaboost is a boosting algorithm designed to improve the performance of weak learners. Weak learners are models that perform slightly better than random guessing. Adaboost, through an iterative process, gives more weight to the misclassified data points, allowing subsequent weak learners to focus on the errors made by their predecessors.

The Adaboost Algorithm

The Adaboost algorithm can be summarized in these key steps:

Initialization: Assign equal weights to all data points.

Iterative Learning: Train a weak learner on the weighted dataset, so that samples with higher weights count more toward its error.

Update Weights: Increase the weights of misclassified samples, giving them more importance in the next iteration.

Repeat: Repeat the training and weight update for a predefined number of iterations or until a certain level of accuracy is achieved.

Weighted Voting: Once training ends, combine the predictions of all weak learners, with each learner's vote weighted by its accuracy.
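The steps above can be sketched in plain Python. This is a minimal illustration, not a reference implementation: it assumes a single numeric feature, labels encoded as +1 and -1 instead of True and False, and one-level stumps as weak learners. The function names (`stump_predict`, `fit_stump`, `adaboost_fit`, `adaboost_predict`) and the toy data are hypothetical and chosen only for this example.

```python
import math

def stump_predict(x, threshold, polarity):
    """Predict `polarity` when x is above the threshold, `-polarity` otherwise."""
    return polarity if x > threshold else -polarity

def fit_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) for the best 1-D stump."""
    values = sorted(set(xs))
    # Candidate thresholds: one below the minimum, then midpoints between neighbours.
    thresholds = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])]
    best = None
    for threshold in thresholds:
        for polarity in (1, -1):
            # Weighted error = total weight of the samples this stump gets wrong.
            error = sum(
                w for x, y, w in zip(xs, ys, weights)
                if stump_predict(x, threshold, polarity) != y
            )
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best

def adaboost_fit(xs, ys, n_rounds):
    """Train `n_rounds` stumps; return a list of (vote_weight, threshold, polarity)."""
    n = len(xs)
    weights = [1.0 / n] * n                          # Initialization: equal weights
    ensemble = []
    for _ in range(n_rounds):                        # Repeat
        error, threshold, polarity = fit_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)    # guard against log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # learner's vote weight
        ensemble.append((alpha, threshold, polarity))
        # Update Weights: y * prediction is +1 when correct and -1 when wrong,
        # so correct samples shrink by e^-alpha and wrong ones grow by e^alpha.
        weights = [
            w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
            for x, y, w in zip(xs, ys, weights)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]       # normalize to sum to 1
    return ensemble

def adaboost_predict(ensemble, x):
    """Weighted Voting: sign of the vote-weighted sum of stump predictions."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1

# Toy data that no single stump can classify perfectly (assumed for illustration).
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]
ensemble = adaboost_fit(xs, ys, n_rounds=3)
predictions = [adaboost_predict(ensemble, x) for x in xs]
print(predictions)  # [1, 1, -1, -1, 1, 1]
```

On this toy data a single stump can do no better than 4 correct out of 6, while three boosted stumps classify every training point correctly.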

Sample Example:

- Consider a dataset with 7 records. Every record starts with the same weight, so each weight is 1/7.

| Feature 1 | Feature 2 | Output | Weight |
| --- | --- | --- | --- |
| x1 | x8 | True | 1/7 |
| x2 | x9 | False | 1/7 |
| x3 | x10 | False | 1/7 |
| x4 | x11 | False | 1/7 |
| x5 | x12 | True | 1/7 |
| x6 | x13 | True | 1/7 |
| x7 | x14 | True | 1/7 |

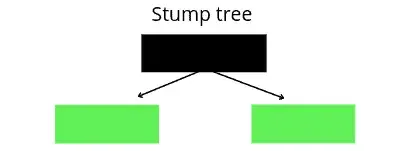

We will use a stump (a decision tree with a depth of 1) as the weak learner, and we pass the above data into this stump.

Now suppose the stump predicts 1 record incorrectly. We have to make sure the next model focuses on this record. But how?

To answer this question, we use a 3-step process:

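Find the total error (TE), which is the sum of the weights of the misclassified records: TE = 1/7

Find the performance of the stump (PS):

\(PS = \frac{1}{2} \log_e\left(\frac{1-TE}{TE}\right)\)

Find the new sample weights by scaling each old weight:

For a correctly classified record: \(\text{new weight} = \text{old weight} \times e^{-PS}\)

For a misclassified record: \(\text{new weight} = \text{old weight} \times e^{PS}\)

Since PS is positive whenever TE is below 1/2, the weight of the misclassified record goes up and the weights of the correct records go down. The new weights are then divided by their sum so that they add up to 1 again.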
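The 3 steps can be checked numerically for the 7-record example. The sketch below assumes the standard Adaboost update, in which each old weight is multiplied by the exponential factor and the result is normalized to sum to 1. Which record the stump gets wrong is not stated above, so the choice of index 2 is an arbitrary assumption, and the variable names are illustrative.

```python
import math

n_records = 7
weights = [1 / n_records] * n_records

# Assumption for illustration: the stump misclassifies only the record at index 2.
misclassified = [False, False, True, False, False, False, False]

# Step 1: total error = sum of the weights of the misclassified records.
total_error = sum(w for w, wrong in zip(weights, misclassified) if wrong)

# Step 2: performance of the stump.
performance = 0.5 * math.log((1 - total_error) / total_error)

# Step 3: scale each old weight up (wrong) or down (correct), then normalize.
scaled = [
    w * math.exp(performance if wrong else -performance)
    for w, wrong in zip(weights, misclassified)
]
total = sum(scaled)
new_weights = [w / total for w in scaled]

print(round(total_error, 4))               # 0.1429
print(round(performance, 4))               # 0.8959
print([round(w, 4) for w in new_weights])  # [0.0833, 0.0833, 0.5, 0.0833, 0.0833, 0.0833, 0.0833]
```

After normalization the single misclassified record carries a weight of 1/2, while each of the 6 correct records drops from 1/7 to 1/12.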

Now that we have a new weight column, we can replace the old weight column with it.

| Feature 1 | Feature 2 | Output | New Weights |
| --- | --- | --- | --- |
| x1 | x8 | True | Wnew1 |
| x2 | x9 | False | Wnew2 |
| x3 | x10 | False | Wnew3 |
| x4 | x11 | False | Wnew4 |
| x5 | x12 | True | Wnew5 |
| x6 | x13 | True | Wnew6 |
| x7 | x14 | True | Wnew7 |

- The next model in the boosting algorithm is trained with these new weights, so the misclassified record, which now carries the largest weight, gets the most attention.

The Power of Weak Learners:

Adaboost can utilize a variety of weak learners, such as decision trees with limited depth (stumps), linear models, or any other model that performs slightly better than random guessing. The diversity of weak learners contributes to Adaboost's strength.

Adaboost's Adaptive Nature

The term "Adaptive" in Adaboost refers to its ability to adapt to difficult-to-classify data points. By increasing the weights of misclassified samples in each iteration, Adaboost focuses on the samples that previous weak learners found challenging, effectively improving the overall model's accuracy.
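A short worked derivation shows how strongly this reweighting pushes the next learner to adapt. It is a sketch under two assumptions: the sample weights sum to 1 before the update, and each old weight is multiplied by \(e^{PS}\) (misclassified) or \(e^{-PS}\) (correct) before normalizing, where TE is the total error and \(PS = \frac{1}{2} \log_e\left(\frac{1-TE}{TE}\right)\) is the performance of the stump.

Total weight of the misclassified samples after scaling:

\(TE \cdot e^{PS} = TE \cdot \sqrt{\frac{1-TE}{TE}} = \sqrt{TE\,(1-TE)}\)

Total weight of the correctly classified samples after scaling:

\((1-TE) \cdot e^{-PS} = (1-TE) \cdot \sqrt{\frac{TE}{1-TE}} = \sqrt{TE\,(1-TE)}\)

The two totals are equal, so after normalization the misclassified samples hold exactly half of the total weight. With 7 records and 1 mistake, that single record ends up with a weight of 1/2 and each of the 6 correct records with 1/12. On the reweighted data the previous stump would have a weighted error of exactly 50%, so the next weak learner has to find a different split to do better than chance.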

Real-World Applications

Adaboost finds applications in a wide range of domains:

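Face Detection: Adaboost is widely used in face detection systems. The classic Viola-Jones detector, for example, uses it to select and combine simple Haar-like image features.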

Object Recognition: It plays a crucial role in identifying objects within images.

Text Classification: Adaboost can enhance the accuracy of text classification tasks.

Anomaly Detection: Detecting rare events or anomalies in data.

Medical Diagnosis: Improving disease diagnosis by combining the expertise of multiple weak classifiers.

Advantages of Adaboost

High Accuracy: Adaboost often achieves high accuracy by combining multiple weak learners.

Versatility: It can work with various weak learner algorithms.

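Feature Selection: Adaboost can implicitly perform feature selection. With stumps as weak learners, each stump splits on a single feature, so features that never produce a useful split contribute little or nothing to the final vote.

Robustness: It is often resistant to overfitting in practice when the data has little noise. It can, however, be sensitive to noisy labels and outliers, because repeatedly misclassified points keep gaining weight.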

Conclusion

Adaboost stands as a testament to the power of ensemble learning in machine learning. Its ability to adapt and learn from its mistakes, along with its impressive accuracy, makes it a valuable addition to any data scientist's toolbox. By harnessing the strength of multiple weak learners, Adaboost continues to elevate the performance of machine learning models across diverse applications, proving its worth in both theory and practice.
