Demystifying Kubernetes Architecture: A Comprehensive Guide

NILESH GUPTA

In today's ever-evolving world of containerization and orchestration, Kubernetes has emerged as the de facto standard for managing containerized applications at scale. Whether you're a seasoned developer or new to container orchestration, this blog will give you a comprehensive understanding of Kubernetes, along with practical examples to help you get started.

What is Kubernetes?

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform originally developed by Google. It automates the deployment, scaling, and management of containerized applications. Kubernetes abstracts the underlying infrastructure and provides a consistent API for managing containers, making it easier to deploy and scale applications across different environments.

Beyond deployment, Kubernetes can automatically scale applications and heal them (for example, by restarting failed containers) based on their requirements. It is written in the Go language.

"K8s" is simply shorthand for Kubernetes: the number 8 represents the eight letters skipped between the "K" and the "s" in the word "Kubernetes".

Kubernetes grew out of Google's experience running Borg, its internal cluster management system. Google open-sourced Kubernetes in 2014 and donated it to the Cloud Native Computing Foundation (CNCF) in 2015.

Why use Kubernetes?

Orchestration: Coordinates any number of containers running across different machines in a cluster.

Scalability: Kubernetes can autoscale your applications up or down based on demand. It supports both horizontal scaling (adding or removing Pod replicas) and vertical scaling (changing the CPU and memory given to a Pod). Horizontal scaling is the more common approach.

High Availability: It keeps your applications highly available by distributing them across multiple nodes.

Portability: Kubernetes is cloud-agnostic, so you can run your applications on various cloud providers or on-premises without being tied to one platform.

Resource Efficiency: Kubernetes places containers on nodes according to their resource requests, improving utilization and reducing costs.

Self-healing: It automatically restarts failed containers and replaces Pods from failed nodes, improving application reliability.

Rollback: If a new release misbehaves, you can roll a Deployment back to a previous version.

Monitoring: Kubernetes tracks container health through liveness and readiness probes.

Batch execution: Jobs and CronJobs run batch workloads one time, sequentially, or in parallel.
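For horizontal scaling, the Horizontal Pod Autoscaler documentation gives the core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The Python sketch below applies only that rule. The function name `desired_replicas` is hypothetical, and the 0.1 tolerance mirrors the documented default. Real HPA behaviour, such as min/max replica bounds, stabilization windows, and missing metrics, is not modelled.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA scaling rule (simplified sketch)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count unchanged.
        return current_replicas
    # desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)
    return math.ceil(current_replicas * ratio)


# 4 replicas averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6
print(desired_replicas(4, 90, 60))   # 6
# 10 replicas at 30% against a 60% target: ceil(10 * 0.5) = 5
print(desired_replicas(10, 30, 60))  # 5
```

Because the ratio is recomputed on every evaluation, the same rule handles both scaling up and scaling down.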

Kubernetes Architecture:

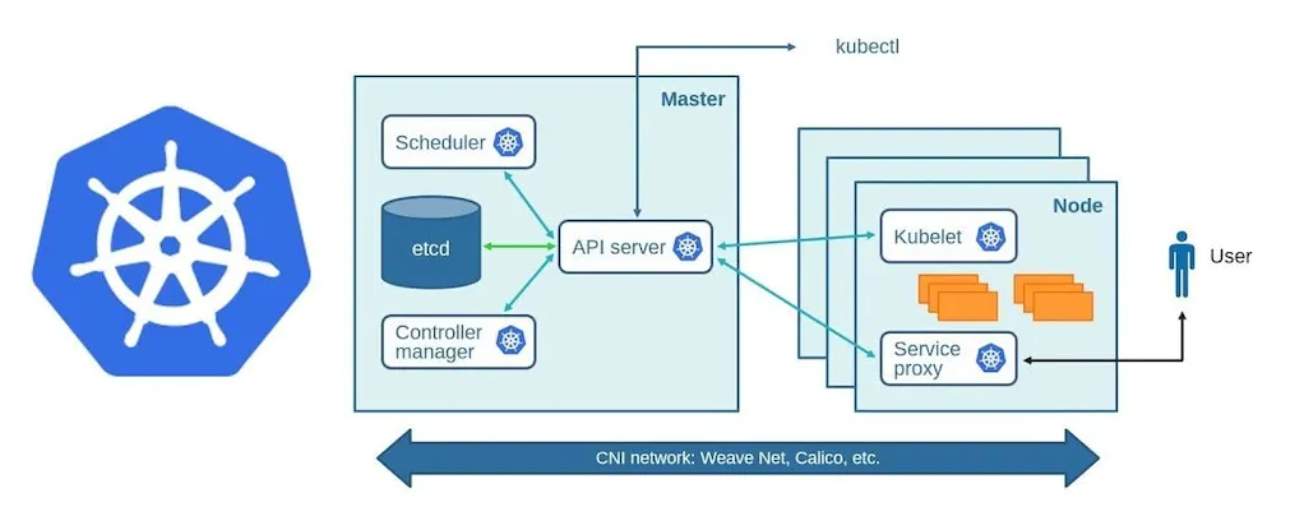

The diagram above shows the components of a Kubernetes cluster.

Master Node: The master node acts as the control center of the cluster. It manages the desired state of the cluster and ensures that the actual state matches it. It is also called the control plane.

Worker Nodes: Worker nodes are where your application containers run. They communicate with the Master node to receive instructions and report their status.

Kubernetes works on a client-server (master-worker) model. A cluster is a group of servers, usually at least two (a master and a worker), although tools like Minikube run both roles on a single machine. In Kubernetes we can have setups like:

a. Single Master - Single Node
b. Single Master - Multiple Nodes
c. Multiple Masters - Multiple Nodes (multiple masters are used for high availability)

Nodes can run multiple Pods, and Pods can run multiple containers (ideally, we run only one container per Pod). Kubernetes is mostly used by companies with a microservice application architecture.

etcd: etcd is a distributed key-value store that stores the configuration data for the cluster. It acts as Kubernetes' brain, ensuring data consistency and reliability. The entire cluster state, including configuration, object metadata, and current cluster conditions, is stored in etcd.
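To make the key-value idea concrete, here is a minimal Python sketch. It uses a hypothetical in-memory `TinyKVStore` class, not the real etcd client API. The keys follow the layout the API server uses for Pods by default (objects under the `/registry` prefix, for example `/registry/pods/<namespace>/<name>`). In a real cluster the values are serialized API objects, not Python dicts.

```python
from __future__ import annotations


class TinyKVStore:
    """Hypothetical in-memory stand-in for etcd; not the real etcd client API."""

    def __init__(self) -> None:
        self._data: dict[str, dict] = {}

    def put(self, key: str, value: dict) -> None:
        self._data[key] = value

    def get(self, key: str) -> dict | None:
        return self._data.get(key)

    def list_prefix(self, prefix: str) -> list[str]:
        # etcd can read a whole key range by prefix; emulate that with a scan.
        return sorted(k for k in self._data if k.startswith(prefix))


def object_key(resource: str, namespace: str, name: str) -> str:
    # Illustrative layout (Pods are stored like this by default): /registry/<resource>/<namespace>/<name>
    return f"/registry/{resource}/{namespace}/{name}"


store = TinyKVStore()
for ns, name in [("default", "web-1"), ("default", "web-2"), ("kube-system", "coredns")]:
    store.put(object_key("pods", ns, name), {"kind": "Pod", "metadata": {"name": name, "namespace": ns}})

print(store.list_prefix("/registry/pods/default/"))
# ['/registry/pods/default/web-1', '/registry/pods/default/web-2']
```

Grouping keys by resource and namespace lets a single prefix read answer a question like "list all Pods in `default`".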

etcd has the following features:

Fully replicated: The entire state is available on every member of the etcd cluster.

Secure: It implements automatic TLS with optional client-certificate authentication.

Fast: It is benchmarked at 10,000 writes per second.

API Server: The API Server is the main interface for communication between the master node and the worker nodes. It exposes the Kubernetes API, which is used to manage the cluster and deploy applications, and acts as the entry point for all cluster operations. Clients, including the kubectl command-line tool, interact with the API server to create, update, and query cluster resources.

Controller Manager: The Controller Manager runs the background control loops (controllers) that regulate the state of the cluster, such as the replication controller and the endpoints controller. It includes controllers for resources such as ReplicaSets, Deployments, and Services, and ensures they are maintained according to their desired specifications.
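The controllers above all follow the same pattern: compare the desired state with the actual state and act on the difference. Below is a minimal Python sketch of one pass of a ReplicaSet-style loop. The `reconcile` function, the `web-N` naming scheme, and the choice to delete the last Pods first are illustrative assumptions. They are not how the real controller is implemented.

```python
from __future__ import annotations


def reconcile(desired: int, pods: list[str], prefix: str = "web") -> list[str]:
    """One pass of a hypothetical ReplicaSet-style control loop."""
    pods = list(pods)  # copy: the observed (actual) state is not mutated in place
    # Too few Pods: create replacements, reusing the lowest free name suffix.
    n = 1
    while len(pods) < desired:
        name = f"{prefix}-{n}"
        if name not in pods:
            pods.append(name)
        n += 1
    # Too many Pods: delete the surplus (here simply the last ones in the list).
    del pods[desired:]
    return pods


state = reconcile(3, [])      # ['web-1', 'web-2', 'web-3']
state.remove("web-2")         # simulate a crashed Pod
state = reconcile(3, state)   # ['web-1', 'web-3', 'web-2']
state = reconcile(1, state)   # ['web-1']
print(state)
```

The loop never needs to know *why* the state drifted (a crash, a scale request, a deleted Pod). It only compares counts, which is why the same pattern covers self-healing and scaling.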

Scheduler: The Scheduler is responsible for assigning pods to worker nodes based on resource requirements and constraints.
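The kube-scheduler works in two phases. It first filters out nodes that cannot run the Pod, then scores the remaining ones. This Python sketch shows that idea with hypothetical `Node` and `PodRequest` types. It considers only CPU and memory requests. The scoring rule (prefer the node with the most free CPU, then memory, after placement) is a simplified stand-in for the scheduler's real scoring plugins.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores
    free_mem_mi: int  # free memory in MiB
    ready: bool = True


@dataclass
class PodRequest:
    cpu_m: int   # requested CPU in millicores
    mem_mi: int  # requested memory in MiB


def schedule(pod: PodRequest, nodes: list[Node]) -> str | None:
    # Phase 1, filtering: drop nodes that are not ready or cannot fit the requests.
    feasible = [n for n in nodes
                if n.ready and n.free_cpu_m >= pod.cpu_m and n.free_mem_mi >= pod.mem_mi]
    if not feasible:
        return None  # no node fits: the Pod stays Pending
    # Phase 2, scoring: prefer the most free CPU (then memory) left after placement.
    best = max(feasible, key=lambda n: (n.free_cpu_m - pod.cpu_m, n.free_mem_mi - pod.mem_mi))
    return best.name


nodes = [
    Node("node-a", free_cpu_m=500, free_mem_mi=1024),
    Node("node-b", free_cpu_m=2000, free_mem_mi=4096),
    Node("node-c", free_cpu_m=4000, free_mem_mi=8192, ready=False),
]
print(schedule(PodRequest(cpu_m=1000, mem_mi=512), nodes))  # node-b
print(schedule(PodRequest(cpu_m=8000, mem_mi=512), nodes))  # None
```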

kubelet: The kubelet is the primary node agent, running on each worker node. It receives Pod specifications from the control plane, ensures that the containers in those Pods are running as intended, monitors their health, and reports their status back to the master components.

Kube Proxy: Kube Proxy runs on each node and maintains network rules that enable communication to and from Pods. It implements Services by load-balancing traffic sent to a Service across the Pods behind it. Pod IP addresses are assigned by the CNI plugin, not by kube-proxy.
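A Service gives a set of Pods one stable virtual IP, and kube-proxy spreads connections to that IP across the Pods' endpoints. The Python sketch below models this with a hypothetical `ServiceProxy` class that uses round-robin selection, similar in spirit to IPVS mode's default scheduler. In iptables mode, kube-proxy picks backends at random instead. In either mode, the real work happens in kernel rules rather than in a Python object.

```python
from __future__ import annotations

import itertools


class ServiceProxy:
    """Hypothetical model of a Service's virtual IP in front of several Pod endpoints."""

    def __init__(self, cluster_ip: str, port: int, endpoints: list[str]) -> None:
        self.cluster_ip = cluster_ip
        self.port = port
        # Round-robin over the Pod "IP:port" endpoints backing the Service.
        self._backends = itertools.cycle(endpoints)

    def route(self) -> str:
        # Choose the backend for the next connection sent to cluster_ip:port.
        return next(self._backends)


svc = ServiceProxy("10.96.0.20", 80, ["10.244.1.5:8080", "10.244.2.7:8080"])
print([svc.route() for _ in range(3)])
# ['10.244.1.5:8080', '10.244.2.7:8080', '10.244.1.5:8080']
```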

kubectl : kubectl is the command-line interface for interacting with the Kubernetes API.

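**Container Runtime:** The container runtime, such as containerd or CRI-O, is responsible for running containers inside Pods. The kubelet communicates with it through the Container Runtime Interface (CRI) to start and stop containers. Docker Engine was commonly used in the past, but Kubernetes removed its built-in Docker support (dockershim) in version 1.24.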

In Kubernetes, the objects we create are usually described in manifest files written in YAML.

For a more detailed explanation, follow the link Kubernetes_architecture.

Cluster Networking:

Kubernetes relies on networking solutions to enable communication between Pods and external access. Key components include:

Container Network Interface (CNI) Plugins: These plugins, such as Flannel, Calico, and Weave, handle Pod-to-Pod communication.

Ingress Controllers: Manage external access to services by routing traffic based on rules.

Network Policies: Define and enforce rules for network traffic within the cluster, enhancing security.
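Network Policies select the Pods they apply to with label selectors. This Python sketch shows how a `matchLabels` selector is evaluated: every key/value pair must be present on the Pod, so an empty selector matches all Pods in the namespace. The Pod names and labels are made up for illustration. `matchExpressions`, namespace selectors, and the ingress/egress rules themselves are not modelled.

```python
from __future__ import annotations


def matches(match_labels: dict[str, str], pod_labels: dict[str, str]) -> bool:
    # Every key/value pair in the selector must appear on the Pod,
    # so an empty selector ({}) matches every Pod.
    return all(pod_labels.get(key) == value for key, value in match_labels.items())


pods = {
    "web-1": {"app": "web", "tier": "frontend"},
    "api-1": {"app": "api", "tier": "backend"},
    "db-1": {"app": "db", "tier": "backend"},
}

# podSelector of a hypothetical policy that applies to backend Pods only
selected = sorted(name for name, labels in pods.items() if matches({"tier": "backend"}, labels))
print(selected)  # ['api-1', 'db-1']
```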

What is a Control Plane?

The Control Plane manages the worker nodes and the Pods in the cluster. In production environments, the control plane usually runs across multiple computers and a cluster usually runs multiple nodes, providing fault-tolerance and high availability. Components of the Control Plane include API server, etcd, scheduler, and controller manager.

What is the difference between kubectl and kubelet?

kubectl is the command-line interface (CLI) tool for working with a Kubernetes cluster. It communicates with the API server to perform various operations on the cluster, such as deploying applications, scaling resources, and inspecting logs.

The kubelet is the agent on each Kubernetes node that creates, updates, and destroys containers. It works through the container runtime so that the node's containers match the Pod specs assigned to it.

What is the role of the API server?

The API server is the central component of Kubernetes that lets users and applications interact with and manage resources. All administrative operations, including creating, updating, and deleting resources like Pods, Services, Deployments, and Namespaces, are initiated by sending API requests to the API server. The API server is also responsible for authentication and authorization: every API client must be authenticated before it can interact with the API server. The API server validates incoming REST requests and then updates the corresponding objects in etcd.
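The order in which the API server handles a request can be sketched in Python as follows. The token table, the permission set, and the `handle` function are hypothetical stand-ins for real authentication, authorization, and admission configuration. The status codes match what the API server returns for unauthenticated (401), forbidden (403), invalid (422), and created (201) requests.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical stand-ins for real authentication and authorization configuration.
TOKENS = {"token-alice": "alice"}
PERMISSIONS = {("alice", "create", "pods")}


@dataclass
class Request:
    token: str
    verb: str
    resource: str
    obj: dict = field(default_factory=dict)


def handle(req: Request, store: dict[str, dict]) -> tuple[int, str]:
    # 1. Authentication: who is sending the request?
    user = TOKENS.get(req.token)
    if user is None:
        return 401, "Unauthorized"
    # 2. Authorization: may this user perform this verb on this resource?
    if (user, req.verb, req.resource) not in PERMISSIONS:
        return 403, "Forbidden"
    # 3. Admission/validation (greatly simplified): reject malformed objects.
    meta = req.obj.get("metadata", {})
    if not meta.get("name"):
        return 422, "Invalid: metadata.name is required"
    # 4. Persistence: write the object to the (here, in-memory) etcd store.
    key = f"/registry/{req.resource}/{meta.get('namespace', 'default')}/{meta['name']}"
    store[key] = req.obj
    return 201, "Created"


etcd: dict[str, dict] = {}
pod = {"metadata": {"name": "web"}}
print(handle(Request("wrong-token", "create", "pods", pod), etcd))  # (401, 'Unauthorized')
print(handle(Request("token-alice", "delete", "pods", pod), etcd))  # (403, 'Forbidden')
print(handle(Request("token-alice", "create", "pods", {}), etcd))   # (422, 'Invalid: metadata.name is required')
print(handle(Request("token-alice", "create", "pods", pod), etcd))  # (201, 'Created')
print(list(etcd))                                                    # ['/registry/pods/default/web']
```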

Setting Up Your Kubernetes Environment

Installing Kubernetes Locally (Minikube)

Minikube is a tool that lets you run a single-node Kubernetes cluster locally. It's an excellent way to get started with Kubernetes without needing a cloud provider. Here's how to set it up:

Install Minikube: Follow the installation guide for Ubuntu.

For a walkthrough, follow the link first-Kubernetes-cluster-launch-with-nginx-running-in-minikube.

Kubeadm Installation (Used in Production)

Install Kubeadm: Follow the installation guide for Ubuntu.

Setting Up a Cloud-Based Kubernetes Cluster

If you prefer a cloud-based Kubernetes cluster, consider using Google Kubernetes Engine (GKE), Azure Kubernetes Service (AKS), or Amazon Elastic Kubernetes Service (EKS). Each cloud provider offers a managed Kubernetes solution.

GKE: Follow Google's documentation to create a GKE cluster.

AKS: Microsoft provides a guide for setting up an AKS cluster.

EKS: To create an EKS cluster, follow Amazon's EKS getting started guide.

With your Kubernetes cluster ready, you're now prepared to dive into creating and managing Kubernetes objects.

Kubernetes Objects

Kubernetes uses various objects to define and manage the desired state of your applications. Some of the essential objects include Pods, ReplicaSets, Deployments, Services, ConfigMaps, Secrets, Persistent Volumes, and Namespaces.

Pods: The smallest deployable units in Kubernetes, containing one or more containers.

Services: Expose Pods to the network and provide load balancing.

ReplicaSets and Deployments: Manage the replication and scaling of Pods.

ConfigMaps and Secrets: Store configuration data and secrets separately from Pods.

Persistent Volumes: Manage storage for stateful applications.

These objects are defined in YAML or JSON manifests and submitted to the API server, which stores and manages them.
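As a small illustration, here is a Python sketch that builds a minimal Pod manifest as a dictionary and prints it as JSON. The structure is the same one you would normally write in YAML. The Pod name, the label, and the `nginx:1.25` image tag are example values. If you save the output to a file such as `pod.json`, you could apply it with `kubectl apply -f pod.json`.

```python
import json


def pod_manifest(name: str, image: str, port: int) -> dict:
    """Build a minimal Pod manifest with the same structure you would write in YAML."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {"name": name, "image": image, "ports": [{"containerPort": port}]}
            ]
        },
    }


manifest = pod_manifest("nginx", "nginx:1.25", 80)
print(json.dumps(manifest, indent=2))
```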

Kubernetes Control Flow

Understanding how Kubernetes manages the control flow of objects is crucial. Here's a simplified overview:

Creating a Pod: When you submit a Pod manifest, the API server stores it in etcd. The Scheduler assigns the Pod to a suitable node, and the kubelet on that node ensures it is running.

Scaling Deployments: Scaling a Deployment changes its desired replica count, which the API server stores in etcd. The Controller Manager detects the change and ensures the correct number of Pods is running.

Updating Configurations: When you update a ConfigMap or Secret, Pods that mount it as a volume eventually see the new data. Values consumed as environment variables are only refreshed when the Pod is restarted.
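The Pod-creation flow can be simulated in a few lines of Python. Everything here is hypothetical: a dict stands in for etcd, and `api_create`, `scheduler_pass`, and `kubelet_pass` are toy versions of the API server, scheduler, and kubelet. The fields they touch, `spec.nodeName` and `status.phase`, are the real Pod fields involved in this flow.

```python
from __future__ import annotations

# Hypothetical in-memory "etcd": object key -> Pod object.
store: dict[str, dict] = {}


def api_create(pod: dict) -> None:
    # API server: persist the new Pod; it starts out Pending with no node assigned.
    pod.setdefault("status", {})["phase"] = "Pending"
    store[f"/registry/pods/default/{pod['metadata']['name']}"] = pod


def scheduler_pass(nodes: list[str]) -> None:
    # Scheduler: bind each unscheduled Pod to the node running the fewest Pods (toy policy).
    for pod in store.values():
        if not pod["spec"].get("nodeName"):
            load = {n: sum(p["spec"].get("nodeName") == n for p in store.values()) for n in nodes}
            pod["spec"]["nodeName"] = min(nodes, key=load.__getitem__)


def kubelet_pass(node: str) -> None:
    # Kubelet on `node`: start Pods bound to it (a real kubelet calls the container runtime).
    for pod in store.values():
        if pod["spec"].get("nodeName") == node and pod["status"]["phase"] == "Pending":
            pod["status"]["phase"] = "Running"


for name in ("web-1", "web-2"):
    api_create({"metadata": {"name": name}, "spec": {"containers": [{"name": "web", "image": "nginx"}]}})
scheduler_pass(["node-a", "node-b"])
kubelet_pass("node-a")
for key, pod in store.items():
    print(key, pod["spec"]["nodeName"], pod["status"]["phase"])
# /registry/pods/default/web-1 node-a Running
# /registry/pods/default/web-2 node-b Pending
```

`web-2` stays Pending until the kubelet on `node-b` runs its own pass. Each component only reacts to the stored state, never to the others directly.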

High Availability and Failover

Kubernetes is designed with high availability in mind:

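Master Node Redundancy: Running multiple master nodes keeps the control plane highly available. Components such as the scheduler and controller manager use leader election, so only one instance of each is active at a time.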

Node Failures and Recovery: If a node fails, Pods managed by controllers such as Deployments are recreated on healthy nodes, maintaining application availability.

Security in Kubernetes

Security is a top priority in Kubernetes:

Authentication and Authorization: Kubernetes supports various authentication mechanisms and authorizes actions based on RBAC policies.

Role-Based Access Control (RBAC): RBAC uses Roles, ClusterRoles, RoleBindings, and ClusterRoleBindings to manage user and service account permissions.
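RBAC rules list API groups, resources, and verbs. Permissions are purely additive: a request is allowed if any rule bound to the user permits it, and there are no deny rules. This Python sketch checks requests against the rules of a hypothetical `pod-reader` Role. Bindings, `resourceNames`, and subresources are not modelled.

```python
from __future__ import annotations


def rule_allows(rule: dict, api_group: str, resource: str, verb: str) -> bool:
    # A rule matches when the API group, resource, and verb each match ("*" is a wildcard).
    def hit(values: list[str], value: str) -> bool:
        return "*" in values or value in values

    return (hit(rule["apiGroups"], api_group)
            and hit(rule["resources"], resource)
            and hit(rule["verbs"], verb))


def is_allowed(rules: list[dict], api_group: str, resource: str, verb: str) -> bool:
    # RBAC is additive: allowed if any rule permits the request; there are no deny rules.
    return any(rule_allows(r, api_group, resource, verb) for r in rules)


# Rules of a hypothetical "pod-reader" Role; "" means the core API group.
pod_reader = [{"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}]

print(is_allowed(pod_reader, "", "pods", "list"))            # True
print(is_allowed(pod_reader, "", "pods", "delete"))          # False
print(is_allowed(pod_reader, "apps", "deployments", "get"))  # False
```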

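Pod Security Policies: PodSecurityPolicy let you define rules for the security posture of Pods, such as disallowing privileged containers. PodSecurityPolicy was removed in Kubernetes 1.25; Pod Security Admission, which enforces the Pod Security Standards, now fills this role.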

Kubernetes Add-Ons

Kubernetes has a rich ecosystem of add-ons that extend its functionality:

Helm: A package manager for Kubernetes that simplifies deploying and managing applications.

Prometheus and Grafana: Tools for monitoring and visualizing cluster and application metrics.

Logging with Fluentd and Elasticsearch: Centralized logging solutions for collecting and analyzing container logs.

Kubernetes Ecosystem

Operators: Custom controllers that extend Kubernetes to manage complex applications, such as databases.

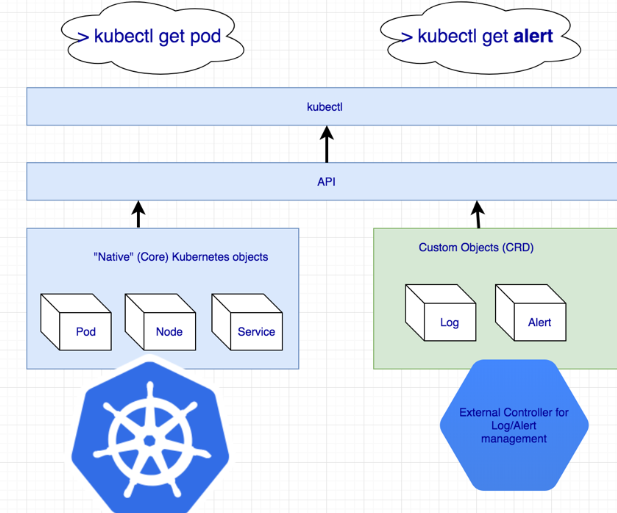

Custom Resource Definitions (CRDs): Define custom resources for specialized use cases, which custom controllers (such as Operators) can then manage. CRDs allow users to create new types of resources without adding another API server, and you do not need to understand API Aggregation to use them. Regardless of how they are installed, the new resources are referred to as Custom Resources to distinguish them from built-in Kubernetes resources (like Pods).

I hope you liked this blog.

Happy learning!
