Uninstall tracking via BigQuery and Airflow

Ashwini Kumar

Ashwini Kumar

At InCred, our mobile app is an integral part of our lending process. It helps customers complete their loan application, gives them information about their loan, and payment history, allows them to make payments, and stays in touch with InCred. That's why it is crucial for our business that our customers keep the app installed on their devices. However, sometimes customers may remove the app inadvertently or otherwise. In that situation, they may miss payment reminders and other vital communication related to their loan potentially causing them financial harm. So we wanted to reach out to customers in case of an uninstall quickly. In this article, we will talk about how we are using BigQuery to track user uninstalls in conjunction with Airflow to fire remedial action notifications to our users.

Hunt for an API

We would like to build a REST API on top of BigQuery which can tell the app status for a given user on the given month range. At InCred, language is not the barrier where a microservice can be written in Java/GoLang/NodeJs. For this case, we went with NodeJs and used BigQuery NodeJs client along the way. The API would have the following request body:

{

"userIds": [

<list of user identifiers>

],

"startMonth": 1,

"startYear": 2020,

"endMonth": 5,

"endYear": 2020

}

For every user, this API should return the status of our app with values ranging from Uninstalled/Installed/Never Installed.

Our Android app fires many events to Firebase Analytics. These events help in analyzing user behaviour. To gain deeper insights, we have BigQuery integrated into Firebase analytics. To track uninstalls, we started looking out whether we can explicitly fire any event when the app is getting uninstalled by bundling it with user information. Unfortunately, this was not available and then we came across Firebase auto-logged events, where one of the events app_remove was specifically meant for that purpose. To define the uniqueness of a user, we send [user_id](https://firebase.google.com/docs/analytics/userid)when the users authenticate via phone. Right, so just querying with app_remove event name will not solve the problem. That wasn’t meant to be!!

What if the user had reinstalled the app again?

If we just went with listening to theapp_remove event, the user having reinstalled the app, will be shown as Uninstalled. Not the desired result. We also wanted to check if we could find anything unique about the user before logging into our app and then we saw user_pseudo_id. Firebase automatically generates this unique ID within BigQuery for each user. So, we created a map of user_id and user_pseudo_id and then analyzed the uninstalls. This failed too as it started giving false-positive results.

Keep-It-Simple-Silly(KISS)

When the problem becomes complex, it is always good to break it down into sub-problems. Then, solve the sub-problems and connect them all together. For a user in the given month range, we broke this problem into 3 parts:

We would get the maximum

event_timestampfor theapp_removeevent. This would also club the fact that the user has installed/uninstalled the app multiple times. Since BigQuery shards the table based on date, we used wildcard(*) to cover all the tables for a particular month.select max(event_timestamp) as app_remove_timestamp, user_id as user_id from " + <table_name.yyyymm*> + " where event_name = 'app_remove' and user_id is not nullWe would get the maximum engagement timestamp of a user. This would get the last timestamp when the user engaged with our app.

select max(event_timestamp) as max_engage_timestamp, user_id as user_id from " + <table_name.yyyymm*> + " where user_id is not nullBoth the results would also be run on the same day since the BigQuery intraday table name is a little different. We would combine the last results to give us the bucket for Installed/Uninstalled/Never Installed users. Let’s see how:

If the

app_remove_timestampis greater thanmax_engage_timestamp, then the user has Uninstalled the app.If the

app_remove_timestampis null or less thanmax_engage_timestamp, then the user has the app InstalledIf both the timestamps are null, then the user has Never Installed the app in that range.

Note: All this works for a given month range. We know for sure that when the user has taken a loan from us and then gives an estimated month range on this API to give the desired result.

Why not include a date too to make this API complete?

With month ranges, we were not able to track the uninstalls every week or in the given time frame, say, we wanted to track from 5th Jan 2020 to 3rd Mar 2020 when we ran a campaign or disbursed loans via some offer. To cater to that, we changed the request body a little to include optional startDay and endDay and then tweaked the earlier solution. With the help of TABLE_SUFFIX, we were able to scan the table for the given date range.

For the range of 5th Jan 2020 to 3rd Mar 2020, we used TABLE_SUFFIX to get the data set from 5th Jan to 30th Jan, then ran a Wildcard(*) for Feb month, and lastly ran a range query with TABLE_SUFFIX to query from 1st Mar to 3rd Mar.

With this, we successfully developed an API over BigQuery that can track user uninstalls on the given date range.

Periodic notifications via Airflow

Once we developed an API, we wanted to utilize it the most by pushing automatic reminders to our users to have the app installed. At InCred, we use Apache Airflow to program, schedule, and monitor our workflows. Airflow provides a very neat dashboard to monitor your jobs, logging, retry policy, and much more via DAG runs.

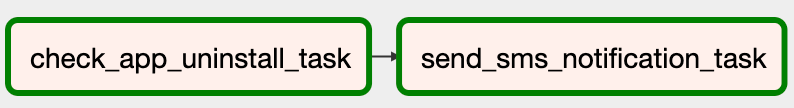

Let’s break down the problem. We would get the users with active loans(SQL query to our database), run those users on our Uninstall tracking API, and then send notifications via SMS to all those who have uninstalled our Android app.

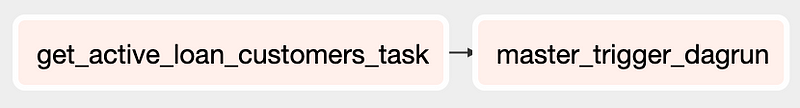

Since the users with active loans would be huge, running every logic on a single DAG would take a lot of time to complete. Also, if there are a huge number of users then our SQL query underneath BigQuery would significantly increase thus hitting the Maximum unresolved standard SQL query length of 1 MB. So, we broke this problem even further and created multiple child DAGs.

- We set our master DAG to get the users having active loans.

- We configured the batch size on Airflow to create a child DAG for every batch.

These child DAGs would run in parallel to get install status and send notifications.

Child DAGs would have their retry policy along with master DAG.

Once we brainstormed on the solution, with Airflow, creating this setup was a piece of cake. We did just that and executed our workflow to work like a charm. We then went ahead and scheduled it bi-weekly for periodic reminders to our users.

Subscribe to my newsletter

Read articles from Ashwini Kumar directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Ashwini Kumar

Ashwini Kumar

Hi 👋, I’m Ashwini Kumar A Google Developer Expert - Firebase and a Mentor at Scaler. Currently, I hold the position of Director of Software Engineering, overseeing the development of mobile and web applications at PayU Credit. I’m a proud Computer Science alumnus of NIT Trichy, with a track record of architecting and developing applications that have made a significant impact on the Indian tech landscape. My experience spans diverse domains, including Gaming, FinTech, Entertainment, Healthcare, e-commerce, and Agro and Construction Tech. As technology continues to evolve, I am committed to the pursuit of engineering excellence every day 🎯