Day 21 : Docker Important interview Questions

Siri Chandana

Table of contents

- What is the difference between an Image, Container and Engine?

- What is the difference between the Docker command COPY vs ADD?

- What is the difference between the Docker command CMD vs RUN?

- How will you reduce the size of the Docker image?

- Why and when to use Docker?

- Explain the Docker components and how they interact with each other.

- Explain the terminology: Docker Compose, Dockerfile, Docker Image, Docker Container?

- In what real scenarios have you used Docker?

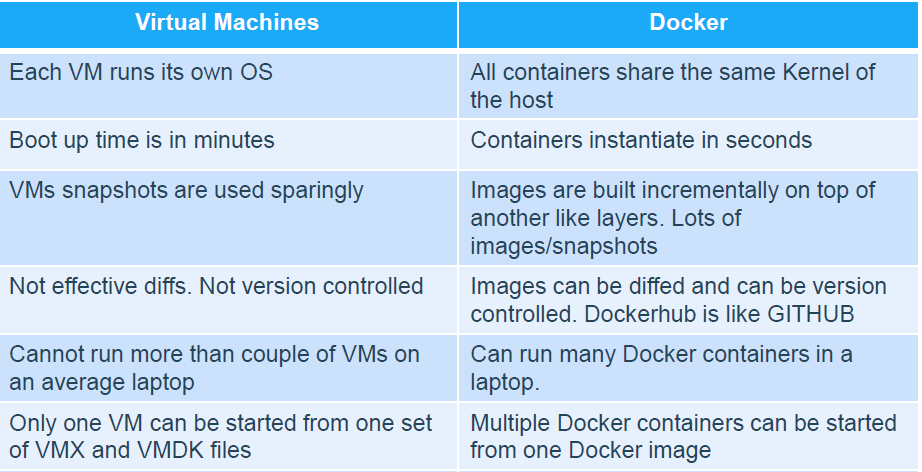

- Docker vs Hypervisor?

- What are the advantages and disadvantages of using docker?

- What is a Docker namespace?

- What is a Docker registry?

- What is an ENTRYPOINT?

- How to implement CI/CD in Docker?

- Will data on the container be lost when the Docker container exits?

- What is a Docker swarm?

- What are the docker commands for the following?

- What are the common Docker practices to reduce the size of Docker Images?

What is the difference between an Image, Container and Engine?

Docker Images:

A Docker image is a lightweight, standalone, and executable software package that includes everything needed to run a piece of software. It consists of layered file systems, libraries, dependencies, and the application code. Docker images are built from a set of instructions called a Dockerfile, which specifies how to construct the image. Images serve as templates for creating Docker containers. They are stored in a registry and can be shared, downloaded, and reused by other users.

Docker Containers:

A Docker container is a running instance of a Docker image. It is an isolated and lightweight execution environment that encapsulates the application and its dependencies. Containers run on a host machine using the Docker engine. Each container is isolated from other containers and the host system, providing process-level isolation, resource management, and security. Containers can be started, stopped, and managed independently of each other. Multiple containers can run simultaneously on a single host.

Docker Engine:

The Docker Engine is the core component of Docker. It is a client-server application made up of the Docker daemon (dockerd), a REST API, and the Docker CLI (Command-Line Interface). The daemon runs as a background service on the host machine and is responsible for building, running, and managing Docker containers. It relies on the host operating system's kernel to provide containerization capabilities, such as process isolation using Linux namespaces and resource management using control groups (cgroups).

What is the difference between the Docker command COPY vs ADD?

In Docker, both the COPY and ADD instructions are used to copy files and directories from the build context on the host into a Docker image. While both support similar functions, COPY only takes local files or directories, whereas ADD can do more:

- Use a URL instead of a local file or directory

- Extract a local tar archive from the source directly into the destination

| COPY command | ADD command |
| --- | --- |
| COPY is a Dockerfile instruction that copies files from a local source location to a destination in the Docker image. | ADD is also used to copy files/directories into a Docker image. |
| Syntax: COPY <src> <dest> | Syntax: ADD <src> <dest> |
| It has only one function: copying local files and directories. | It can also copy files from a URL and extract local tar archives. |
| It duplicates files/directories at the specified location in their existing format. | It can download an external file and copy it to the wanted destination. |
| It does not support copying files directly from URLs. | It supports copying files directly from URLs, which can be useful for downloading external resources during the image build process. |
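The three cases side by side, as a minimal sketch assuming a hypothetical build context that contains requirements.txt, a src/ directory, and a vendor.tar.gz archive; the URL is a placeholder. One detail the table glosses over: ADD auto-extracts local tar archives only, while a file fetched from a URL is copied as-is.

```dockerfile
FROM alpine:3.19

# COPY: plain copy of local files/directories from the build context
COPY requirements.txt /app/
COPY src/ /app/src/

# ADD with a URL: downloaded at build time and saved as-is
# (remote archives are NOT extracted). Placeholder URL.
ADD https://example.com/config.json /app/config.json

# ADD with a local tar archive: unpacked into /opt/vendor/
ADD vendor.tar.gz /opt/vendor/
```

Docker's own best-practices guidance recommends COPY unless you specifically need one of ADD's extra behaviors, since COPY's effect is easier to predict.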

What is the difference between the Docker command CMD vs RUN?

RUN is used to execute commands during the build process of a Docker image. These commands are run in a new layer on top of the current image and their result is saved in the new image layer. The commands specified with RUN are typically used to install software packages, update system configurations, create directories, and perform other tasks that are necessary to configure the image.

RUN apt-get update && apt-get install -y python3

CMD, on the other hand, is used to specify the default command to run when a Docker container is started from the image. This command is only executed when the container is started and it can be overridden by passing a different command to the docker run command line. CMD is typically used to start a service or application in the container.

CMD ["python3", "app.py"]
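Putting both instructions in one file shows when each one runs. This is a minimal sketch, assuming an Ubuntu base and a hypothetical app.py in the build context:

```dockerfile
FROM ubuntu:22.04

# Build time: runs once during `docker build`; the result is saved as a layer
RUN apt-get update && apt-get install -y python3 && rm -rf /var/lib/apt/lists/*

WORKDIR /app
# app.py is a hypothetical script in the build context
COPY app.py .

# Run time: the default command executed when a container starts
CMD ["python3", "app.py"]
```

If the image is tagged myapp (a hypothetical name), docker run myapp starts python3 app.py, while docker run myapp python3 --version replaces the CMD for that one container. The RUN step is not repeated at either point.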

How will you reduce the size of the Docker image?

Using distroless/minimal base images

Your first focus should be on choosing the right base image with a minimal OS footprint. Alpine base images are one example: they can be as small as 5.59MB. Besides being small, they ship fewer packages, which reduces the attack surface.

alpine latest c059bfaa849c 5.59MB

Multistage builds

The multistage build pattern evolved from the builder pattern, in which separate Dockerfiles are used for building and for packaging the application code. The builder pattern helps reduce the image size, but it adds some overhead to build pipelines. A multistage build keeps both steps in a single Dockerfile: the first stage builds the application using an image that contains the full toolchain.

After that, only the files required to run the application are copied into a final stage based on a lighter image that contains only the libraries needed at runtime.
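A minimal multistage sketch, assuming a hypothetical Go application (with its go.mod) in the build context. Go is used only because it compiles to a single static binary, which makes the split between the two stages easy to see:

```dockerfile
# Stage 1: build environment with the full Go toolchain
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
# CGO disabled so the binary is statically linked and runs on Alpine
RUN CGO_ENABLED=0 go build -o /out/app .

# Stage 2: small runtime image; only the compiled binary is copied over
FROM alpine:3.19
COPY --from=build /out/app /usr/local/bin/app
ENTRYPOINT ["/usr/local/bin/app"]
```

The final image contains Alpine plus one binary; the Go toolchain and source code stay behind in the discarded build stage.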

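Minimizing the number of layers

The RUN, COPY, and ADD instructions each add a layer to the image. Chaining related commands into a single RUN instruction with && keeps the layer count down, and deleting temporary files in that same instruction keeps them out of the image entirely.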

Understanding caching

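Often, the same image has to be rebuilt again and again with slight code modifications. Docker helps here by caching each layer of a build and reusing it on the next build as long as that instruction and its inputs are unchanged. Once a layer changes, every layer after it is rebuilt, so ordering instructions from least to most frequently changing gets the most out of the cache.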
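A cache-friendly ordering, sketched for a hypothetical Python app with a requirements.txt and an app.py; the exact files are assumptions, the ordering is the point:

```dockerfile
FROM python:3.12-slim
WORKDIR /app

# Copy only the dependency list first: this layer and the install below
# stay cached until requirements.txt itself changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Source code changes often, so copy it last; editing the app only
# invalidates the layers from this point on
COPY . .
CMD ["python", "app.py"]
```

Swapping the two COPY steps would make every code edit re-run pip install, because the dependency layer would then sit after a layer that changes on each build.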

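Using Dockerignore

A .dockerignore file lists files and directories (such as .git, local build output, or logs) that should be excluded from the build context, so they are never sent to the builder or copied into the image.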

Keeping application data elsewhere

Storing application data in the image will unnecessarily increase the size of the images. It’s highly recommended to use the volume feature of the container runtimes to keep the image separate from the data.

These are image optimization tools:

Docker Slim: It helps you optimize your Docker images for security and size.

Docker Squash: This utility helps you to reduce the image size by squashing image layers.

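To reduce the image size, users can either use the --squash flag of docker build or use a multistage Dockerfile. To squash the newly built layers of an image, run "docker build --squash -t <imagename> .". Note that --squash is an experimental feature that requires the daemon's experimental mode and is not supported by BuildKit, so multistage builds are usually the more practical option.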

Why and when to use Docker?

Docker is widely used for various reasons and can be beneficial in multiple scenarios. Here are some common reasons and situations where Docker is particularly useful:

Application Isolation and Portability: Docker allows you to encapsulate an application and its dependencies into a container, providing process-level isolation. This isolation ensures that the application runs consistently across different environments, regardless of the underlying host system. Docker containers are highly portable and can be deployed on any platform that supports Docker, making it easier to move applications between development, testing, staging, and production environments.

Simplified Deployment and Configuration: Docker simplifies the deployment process by providing a consistent and reproducible environment. With Docker, you can package an application along with its dependencies into a container image, ensuring that it runs consistently across different infrastructure setups. This eliminates the "works on my machine" problem and streamlines the deployment process, making it easier to scale and manage applications.

Scalability and Resource Efficiency: Docker allows you to scale applications horizontally by running multiple containers on a single host or distributed across multiple hosts. Containers are lightweight and share the host's operating system kernel, reducing resource overhead compared to traditional virtualization. Docker's ability to quickly start, stop, and replicate containers makes it suitable for dynamic scaling and load balancing scenarios.

Microservices Architecture: Docker aligns well with the microservices architectural pattern, where applications are broken down into smaller, decoupled services. Each service can be containerized using Docker, allowing independent development, deployment, and scaling of individual services. Container orchestration tools, such as Docker Swarm or Kubernetes, provide features for managing and scaling microservices-based applications.

Development and Testing Environments: Docker simplifies the setup of development and testing environments by providing a consistent and reproducible environment across different machines. Developers can define the required dependencies and configurations in a Dockerfile, ensuring that everyone on the team has the same development environment. Docker also facilitates the creation of disposable testing environments, enabling the isolation and reproducibility of tests.

Continuous Integration and Delivery (CI/CD): Docker integrates well with CI/CD pipelines, allowing developers to automate the build, test, and deployment processes. By using Docker images, applications can be packaged and tested in a consistent environment. Docker's containerization also enables faster deployment and rollback, as well as simplifies the management of application versions and dependencies.

Collaboration and Software Distribution: Docker makes it easy to share and distribute software applications. Docker images can be pushed to public or private registries, allowing others to download and run the same application in their own Docker environment. This simplifies collaboration among teams, facilitates the sharing of development environments, and reduces compatibility issues.

Explain the Docker components and how they interact with each other.

Docker has several components that work together to provide a platform for packaging, deploying, and running applications in containers. These components include:

Docker Engine: The Docker Engine is the client-server application that builds, runs, and manages containers. It consists of the Docker daemon, a REST API, and the Docker CLI client, and it also manages container networking and storage.

Docker Daemon: The Docker daemon (dockerd) is the long-running background service at the heart of the Docker Engine. It receives requests from the Docker CLI through the Docker API and carries out the corresponding actions, such as creating, starting, stopping, and deleting containers.

Docker CLI: The Docker Command Line Interface (CLI) is a command-line tool that allows users to interact with the Docker Daemon to create, start, stop, and delete containers, as well as manage images, networks, and volumes.

Docker Registries: A Docker Registry is a place where images are stored and can be accessed by the Docker Daemon. Docker Hub is the default public registry, but you can also use private registries like those provided by Google or AWS.

Docker Images: A Docker Image is a lightweight, stand-alone, executable package that includes everything needed to run a piece of software, including the code, a runtime, libraries, environment variables, and config files.

Docker Containers: A Docker Container is a running instance of an image. It is a lightweight, standalone, and executable software package that includes everything needed to run the software in an isolated environment.

Users interact with the Docker Client, which communicates with the Docker Daemon. The Docker Daemon, in turn, manages images, containers, networks, volumes, and other resources on the host.

Interaction:

The Docker Client communicates with the Docker Daemon using the Docker API.

Docker Images are built using the Docker Client, and they can be stored in Docker Registries.

Docker Containers are created from Docker Images using the Docker Client and are run by the Docker Daemon.

Containers can communicate with each other using Docker Networks, and they can store and retrieve data using Docker Volumes.

Docker Compose sends requests to the Docker Daemon through the same Docker API to manage multi-container applications defined in a Compose file.

Explain the terminology: Docker Compose, Dockerfile, Docker Image, Docker Container?

Docker Compose:

Docker Compose is a tool provided by Docker that allows you to define and run multi-container applications. It uses a YAML file called docker-compose.yml to specify the services, networks, and volumes required for your application. With Docker Compose, you can define the configuration for multiple Docker containers, their relationships, and the environment variables required for each container. It simplifies the process of managing complex container setups, as you can start, stop, and manage multiple containers as a single unit.

Dockerfile:

A Dockerfile is a text file that contains a set of instructions for building a Docker image. It serves as a blueprint for creating a Docker image with all the required dependencies, configurations, and steps to set up the application environment. The Dockerfile specifies the base image to use, copies files into the image, runs commands to install dependencies, sets environment variables, exposes ports, and defines the default command to run when a container is created from the image. When you run docker build, the Dockerfile and the build context are sent to the builder, which executes the instructions in sequence to produce the image.
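Each of those responsibilities maps to one instruction. A minimal sketch, assuming a hypothetical Node.js app with package.json, package-lock.json, and a server.js that listens on port 3000:

```dockerfile
# Base image to build on
FROM node:20-alpine

# Working directory inside the image
WORKDIR /usr/src/app

# Copy the dependency manifests and install production dependencies
COPY package*.json ./
RUN npm ci --omit=dev

# Copy the rest of the application source
COPY . .

# Environment variable visible to later build steps and at run time
ENV NODE_ENV=production

# Document the port the app listens on (metadata only)
EXPOSE 3000

# Default command when a container is created from the image
CMD ["node", "server.js"]
```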

Docker Image:

A Docker image is a lightweight, standalone, and executable software package that includes everything needed to run a piece of software. It is built from a Dockerfile using the docker build command. A Docker image consists of multiple read-only layers that represent the changes made by the steps in the Dockerfile: filesystem-changing instructions such as RUN, COPY, and ADD each produce a layer, while instructions such as ENV or CMD only record metadata. Images are immutable, meaning they cannot be modified once created, but you can create new images based on existing ones. Docker images are stored in a registry and can be shared, downloaded, and reused by others.

Docker Container:

A Docker container is a running instance of a Docker image. It is an isolated and lightweight execution environment that encapsulates the application and its dependencies. Containers run on a host machine using the Docker engine. Each container is isolated from other containers and the host system, providing process-level isolation, resource management, and security. Containers can be started, stopped, and managed independently of each other. Multiple containers can run simultaneously on a single host. Containers are ephemeral, meaning they can be easily started, stopped, and replaced without affecting the host or other containers.

In what real scenarios have you used Docker?

a) Application Deployment:

Docker is widely used to package applications and their dependencies into containers, ensuring that they run consistently across different environments, from a developer's laptop to production servers.

b) Microservice Architecture:

In a microservices-based application, various components of the application are containerized using Docker. Each microservice can be developed, tested, and deployed independently in its own container.

c) Continuous Integration/Continuous Deployment (CI/CD):

Docker is a key technology in CI/CD pipelines. Developers create Docker images of their applications, which are then tested and deployed automatically through CI/CD tools like Jenkins, Travis CI, or GitLab CI/CD.

d) Testing and QA:

Docker containers can be used to create isolated testing environments for different stages of testing, such as unit testing, integration testing, and user acceptance testing.

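Docker vs Hypervisor?

A hypervisor runs virtual machines, each with its own guest operating system and kernel on top of virtualized hardware. Docker containers instead share the host's kernel and rely on Linux namespaces and cgroups for isolation, so they use fewer resources and start much faster, while virtual machines provide stronger isolation between workloads.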

What are the advantages and disadvantages of using docker?

Advantages:

Docker allows for the quick and consistent deployment of applications.

Environment Consistency: Docker ensures that applications run consistently in a variety of environments.

Containers share the host OS kernel, resulting in efficient resource utilisation.

Scalability: Docker facilitates application scaling via container orchestration platforms.

Containers provide isolation between applications, which improves security.

Disadvantages:

Docker networking can be complicated, especially in multi-container and multi-host setups.

Security Issues: Inadequate configuration can lead to security flaws.

Docker containers are typically used for command-line applications and have limited GUI support.

Docker has a learning curve, which is especially important for users who are new to containerization.

What is a Docker namespace?

A namespace is a Linux kernel feature and a core concept behind containers. Namespaces add a layer of isolation: each container gets its own view of system resources such as process IDs, mounts, and network interfaces, which keeps it portable and separated from the underlying host system. Some of the namespace types Docker uses are PID, Mount, IPC, User, and Network.

Types of Namespaces

Within the Linux kernel, there are different types of namespaces. Each namespace has its own unique properties:

User namespace: has its own set of user IDs and group IDs for assignment to processes. In particular, this means that a process can have root privilege within its user namespace without having it in other user namespaces.

Process ID (PID) namespace: assigns a set of PIDs to processes that is independent of the set of PIDs in other namespaces. The first process created in a new namespace has PID 1, and child processes are assigned subsequent PIDs.

Network namespace: has an independent network stack, with its own private routing table, set of IP addresses, socket listing, connection tracking table, firewall, and other network-related resources.

What is a Docker registry?

A Docker registry is a storage and distribution system for named Docker images. The same image might have multiple different versions, identified by their tags. It is organized into Docker repositories, where a repository holds all the versions of a specific image. The registry allows Docker users to pull images locally, as well as push new images to the registry (given adequate access permissions when applicable).

By default, the Docker Engine interacts with Docker Hub, Docker's public registry instance. However, you can also run the open-source Docker registry (the Distribution project) on-premises, and other public registries are available online.
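The registry, repository, and tag all appear in an image reference. A minimal sketch showing the fully qualified form of the short name alpine:3.19, which is what the Docker Engine resolves it to by default:

```dockerfile
# Fully qualified form of the short reference "alpine:3.19":
#   registry   = docker.io       (Docker Hub, the default registry)
#   repository = library/alpine  (official images live under "library")
#   tag        = 3.19            (one version within the repository)
FROM docker.io/library/alpine:3.19
```

Pulling from another registry only changes the first part of the reference, for example a hypothetical on-premises host such as registry.example.com/team/app:1.0.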

What is an ENTRYPOINT?

ENTRYPOINT is one of the many instructions you can write in a Dockerfile. It configures the executable that always runs when a container starts from the image.

For example, you can specify a script to run as soon as the container is started. Unlike CMD, the ENTRYPOINT is not replaced by command-line arguments passed to docker run: with the exec form, those arguments are appended to the entrypoint instead. It can only be overridden explicitly, with the --entrypoint flag of docker run.

Docker ENTRYPOINT instructions can be written in both shell and exec forms, as in the following examples:

• Shell form: ENTRYPOINT node app.js

• Exec form: ENTRYPOINT ["node", "app.js"]

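FROM centos:7
# Note: CentOS 7 reached end of life in June 2024 and its default mirrors are offline,
# so the RUN step may fail unless the repos are pointed at an archive mirror
LABEL maintainer="Devopscube"
RUN yum -y update && \
    yum -y install httpd-tools && \
    yum clean all
# LABEL replaces the deprecated MAINTAINER instruction; ab always runs against this URL
ENTRYPOINT ["ab", "http://google.com/"]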
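A common refinement is to pair ENTRYPOINT with CMD: ENTRYPOINT fixes the executable, and CMD supplies default arguments that docker run arguments replace. A minimal sketch, assuming an Alpine base (swapped in for centos:7) where the apache2-utils package provides ab; example.com and the image name ab-bench are placeholders.

```dockerfile
FROM alpine:3.19

# apache2-utils provides the ab benchmarking tool on Alpine
RUN apk add --no-cache apache2-utils

# ENTRYPOINT fixes the executable; CMD holds default, replaceable arguments
ENTRYPOINT ["ab"]
CMD ["-n", "10", "http://example.com/"]
```

After docker build -t ab-bench ., running docker run ab-bench executes ab -n 10 http://example.com/, while docker run ab-bench -n 100 -c 5 http://example.com/ replaces only the CMD arguments. Replacing ab itself needs the explicit flag, for example docker run --rm -it --entrypoint sh ab-bench.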

How to implement CI/CD in Docker?

Implementing CI/CD (Continuous Integration/Continuous Deployment) for Docker involves automating the build, test, and deployment processes of your applications within a Dockerized environment. Here's a general outline of how you can set up CI/CD with Docker:

Version Control System (VCS): Start by using a VCS such as Git to manage your application's source code. Host your code repository on platforms like GitHub, GitLab, or Bitbucket.

CI/CD Pipeline Configuration: Set up a CI/CD pipeline using a dedicated CI/CD tool of your choice, such as Jenkins, GitLab CI/CD, or Travis CI. Configure the pipeline to trigger the build process whenever changes are pushed to the repository.

Docker Image Build: Create a Dockerfile that defines the steps to build your application's Docker image. Specify the base image, copy necessary files, install dependencies, and configure the image. Commit the Dockerfile to your code repository.

Build Stage: In the CI/CD pipeline, configure a build stage that executes the docker build command using the Dockerfile. This step builds the Docker image for your application.

Testing Stage: Configure a testing stage in the pipeline to run your application's tests within a Docker container. Use a testing framework of your choice, such as pytest or Selenium, to execute tests against the running container.

Docker Image Tagging: Tag the built Docker image with a version or a unique identifier. You can use tags like commit hash, build number, or version number. This helps track and identify different versions of your application's Docker images.

Docker Image Registry: Push the tagged Docker image to a Docker image registry, such as Docker Hub, Amazon ECR, or Google Container Registry. The registry serves as a central repository for storing and distributing Docker images.

Deployment Stage: Configure a deployment stage in the CI/CD pipeline to deploy the Docker image to your target environment. This can involve deploying to a staging environment for further testing or directly deploying to production.

Orchestration and Scaling: If you're using container orchestration platforms like Kubernetes, configure the pipeline to deploy the Docker image to the cluster. This enables features like scaling, rolling updates, and service discovery.

Monitoring and Logging: Integrate monitoring and logging tools to track the health, performance, and logs of your Docker containers and applications. This helps identify and address issues in real-time.

Rollback and Rollforward: Implement rollback and rollforward mechanisms in your CI/CD pipeline to handle deployment failures and ensure smooth transitions between different versions of your application.
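The build and testing stages can share one Dockerfile through build targets. A minimal sketch, assuming a hypothetical Python app with a requirements.txt, an app.py, and pytest tests in the build context:

```dockerfile
# Shared base: dependencies installed once
FROM python:3.12-slim AS base
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .

# Test stage: a failing test makes this RUN step, and so the build, fail
FROM base AS test
RUN pip install --no-cache-dir pytest && pytest

# Runtime stage: the image that gets tagged and pushed to the registry
FROM base AS runtime
CMD ["python", "app.py"]
```

The testing stage of the pipeline could run docker build --target test ., and the build stage docker build -t <registry>/<image>:<commit-hash> . for the final image. With BuildKit, building the runtime target skips the test stage because nothing depends on it, which is why the test target is built as its own step.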

Will data on the container be lost when the Docker container exits?

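Not when the container merely exits. A stopped container keeps its writable layer, so its data is still there if you start it again. The data is lost when the container is removed (for example with docker rm, or automatically if it was started with --rm), because containers are designed to be ephemeral and disposable. To keep data beyond the container's lifetime, use Docker volumes or bind mounts, which store it outside the container so it is retained even after the container is stopped or removed.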
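A Dockerfile can declare where persistent data lives with the VOLUME instruction. A minimal sketch, assuming a hypothetical /data directory used by the application:

```dockerfile
FROM alpine:3.19

# Mark /data as a mount point. Unless a volume or bind mount is supplied
# at run time, Docker creates an anonymous volume here (note: anonymous
# volumes are deleted together with containers started with --rm)
VOLUME /data
WORKDIR /data

# Append a timestamp on every start, so persisted data grows across runs
CMD ["sh", "-c", "date >> /data/log.txt && cat /data/log.txt"]
```

For data you must keep, mount a named volume explicitly, e.g. docker run --rm -v appdata:/data <image>. The named volume appdata survives the container's removal, so each new container prints one more line than the last.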

What is a Docker swarm?

Docker Swarm is Docker's built-in container orchestration tool. A swarm is a group of machines running Docker that have been configured to join together in a cluster. The activities of the cluster are controlled by one or more swarm managers, and the machines that have joined the cluster are referred to as nodes.

What are the docker commands for the following?

view running containers

command to run the container under a specific name

command to export a docker container

command to import an already existing docker image

commands to delete a container

command to remove all stopped containers, unused networks, build caches, and dangling images?

#view running containers
docker ps
#command to run the container under a specific name
docker run --name=<container_name> <image_name>
#command to export a docker container's filesystem as a tar archive
docker export -o <file_name> <container_name>
#command to import an exported tar archive as a docker image
docker import <file_name> <image_name>:<tag>
#(for an image archive created with docker save, use: docker load -i <file_name>)
#commands to delete a container
docker rm <container_id>
#command to remove all stopped containers, unused networks, build caches, and dangling images
docker system prune

What are the common Docker practices to reduce the size of Docker Images?

Reducing the size of Docker images is important for optimizing storage, network transfer, and deployment times. Here are some common practices to help minimize the size of Docker images:

Use Minimal Base Images: Start with a minimal base image, such as Alpine Linux, instead of a full-fledged operating system. These lightweight base images contain only the essential components needed to run your application. Smaller base images reduce the overall image size.

Optimize Dockerfile Instructions: Structure your Dockerfile to leverage Docker's layer caching mechanism. Place instructions that change rarely (such as package installations) near the top and frequently changing ones (such as copying application source code) toward the end, so that a change invalidates as few cached layers as possible. Combine multiple related commands into a single RUN instruction using && (with \ for line continuation) to reduce the number of intermediate layers.

Remove Unnecessary Dependencies: Regularly review your application's dependencies and remove any unnecessary or unused packages. Only include the libraries and dependencies required for your application to run correctly.

Use Multi-Stage Builds: Utilize multi-stage builds to separate the build environment from the runtime environment. This allows you to compile and build your application within a larger build image and then copy only the necessary artifacts into a smaller runtime image. This helps reduce the final image size.

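Minimize Exposed Ports: Only declare the ports your application actually uses with the EXPOSE instruction. EXPOSE is metadata: it documents which ports the container listens on and determines which ports are published when the container is run with docker run -P, so a minimal list avoids exposing unnecessary ports. It does not affect the image size.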

Clean Up Temporary and Cache Files: Remove any temporary files, caches, or artifacts created during the build process within your Dockerfile. This ensures that these files are not included in the final image, reducing its size.

Use .dockerignore: Create a .dockerignore file in your project directory to exclude unnecessary files and directories from being copied into the Docker image. This helps avoid bloating the image with files that are not required for runtime.

Compress and Optimize Assets: If your application includes static assets, compress and optimize them before adding them to the Docker image. This can significantly reduce the size of the assets and the resulting image.

Use Docker Image Layer Pruning: After building and pushing your Docker images, use tools like docker image prune to remove any dangling or unused image layers. This helps free up disk space and reduces the overall size of your Docker image collection.

Consider Alternative Base Images: Explore alternative base images tailored for specific use cases, such as language-specific images (e.g., official Node.js or Python images) or specialized images catered to specific frameworks or applications. These pre-optimized images may offer a smaller footprint compared to generic base images.

By following these practices, you can significantly reduce the size of your Docker images without sacrificing functionality, making them more efficient to distribute, store, and deploy.
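Several of these practices meet in a single instruction. A minimal sketch, assuming a Debian slim base and curl as a stand-in for whatever packages your app needs:

```dockerfile
FROM debian:bookworm-slim

# Install and clean up in ONE RUN instruction: the apt package index is
# deleted in the same layer, so it never ends up in any image layer.
# --no-install-recommends skips optional packages.
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl ca-certificates \
    && rm -rf /var/lib/apt/lists/*
```

Running the rm in a separate, later RUN would not shrink the image, because the earlier layer would still contain the files.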

Thank you for 📖reading my blog, 👍Like it and share it 🔄 with your friends if you find it knowledgeable.

Happy learning together😊😊!!
