Deploying and Resizing EBS Volumes on AWS: A Step-by-Step Guide for Beginners

SIDDHANI VAMSI SAI KUMAR

In the fast-paced world of cloud computing, AWS has emerged as a dominant platform, offering a wide array of services to meet the evolving needs of businesses. One of its core services is Amazon Elastic Block Store (EBS), which provides block-storage volumes that you can attach to Amazon EC2 instances as additional disks.

In this blog post, I'll walk you through launching a Linux EC2 instance, creating a 20 GiB EBS volume and attaching it to the instance, and then resizing that volume to 30 GiB.

Task 1: Launch a Linux EC2 Instance

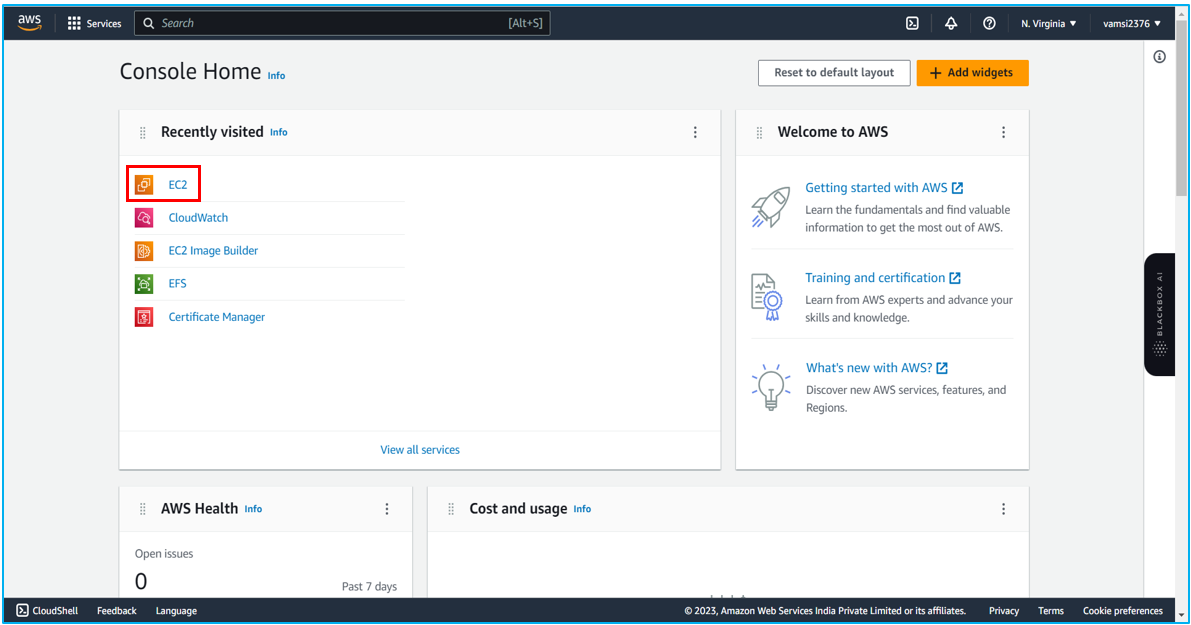

Log in to the AWS Management Console and open the EC2 service.

There are no running instances yet, so we'll launch one. Click the Launch instance button to start the EC2 instance creation process.

Enter a name for the instance.

Select the Linux AMI that best suits your needs, for example "Amazon Linux 2" or "Ubuntu Server". I'm choosing Amazon Linux from the suggested options.

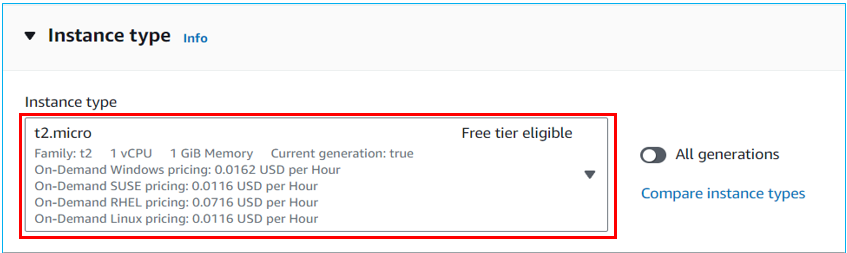

Select the instance type that matches your CPU, memory, and other resource requirements. You can choose a Free Tier-eligible option or scale up for more power. I'm keeping the default instance type, t2.micro.

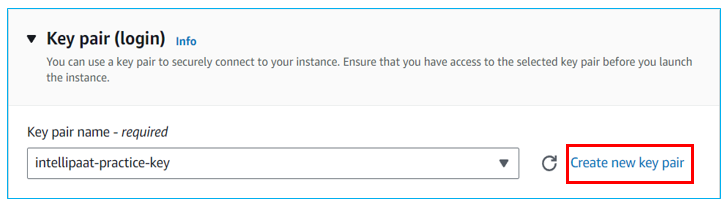

Select an existing key pair from the list. If you don't have a key pair in the current Region, create a new one.

Click Create new key pair and download the .pem file. Later, we'll use PuTTYgen to convert it into a .ppk file so that PuTTY can use it to access the EC2 instance.
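If you prefer the command line, a key pair can also be created with the AWS CLI. The sketch below is a hypothetical helper (the function name `create_key_pair` and the key name are my placeholders) that assumes the AWS CLI is installed and configured for the same Region as the instance:

```shell
# Hypothetical helper: create an EC2 key pair and save its private key as <name>.pem.
# Assumes the AWS CLI is installed and configured for the instance's Region.
create_key_pair() {
  # $1 = key pair name (placeholder, e.g. ebs-demo-key)
  aws ec2 create-key-pair \
    --key-name "$1" \
    --query 'KeyMaterial' \
    --output text > "$1.pem" &&
  chmod 400 "$1.pem"  # OpenSSH refuses private keys that other users can read
}
```

For example, `create_key_pair ebs-demo-key` writes `ebs-demo-key.pem` to the current directory.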

In the network settings, select the checkboxes to allow SSH traffic and HTTP traffic.

Configure the amount of storage you want for your instance. The default 8 GiB gp2 root volume is enough for a basic web server.

Review the instance summary and click Launch instance. It takes a moment for the instance to start.
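The same launch can be sketched with `aws ec2 run-instances`. This is a hypothetical helper, not the method used in this walkthrough: the AMI ID, key pair name, and security group ID are placeholders you supply, and the security group is assumed to already allow inbound SSH (port 22) and HTTP (port 80). The root volume size comes from the AMI's defaults.

```shell
# Hypothetical helper: launch one t2.micro instance and print its instance ID.
launch_instance() {
  # $1 = AMI ID, $2 = key pair name, $3 = security group ID (all placeholders)
  aws ec2 run-instances \
    --image-id "$1" \
    --instance-type t2.micro \
    --key-name "$2" \
    --security-group-ids "$3" \
    --count 1 \
    --query 'Instances[0].InstanceId' \
    --output text
}
```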

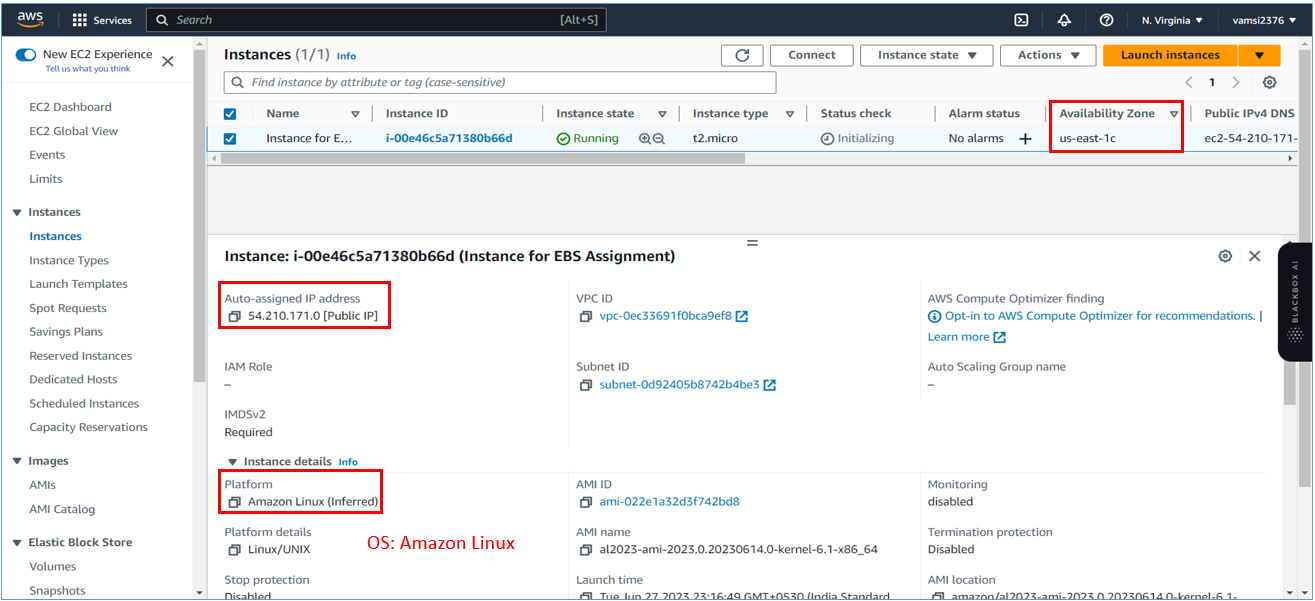

The newly created instance is running in an Availability Zone of the US East (N. Virginia) Region, us-east-1. Copy its public IP address; we'll paste it into PuTTY to access the instance.
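From the command line, you could wait for the instance to reach the running state and then read its public IP. A minimal sketch with a hypothetical helper, assuming you already have the instance ID (for example, from `run-instances`):

```shell
# Hypothetical helper: wait until the instance is running, then print its public IPv4 address.
get_public_ip() {
  # $1 = instance ID (placeholder, e.g. i-0123456789abcdef0)
  aws ec2 wait instance-running --instance-ids "$1" &&
  aws ec2 describe-instances \
    --instance-ids "$1" \
    --query 'Reservations[0].Instances[0].PublicIpAddress' \
    --output text
}
```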

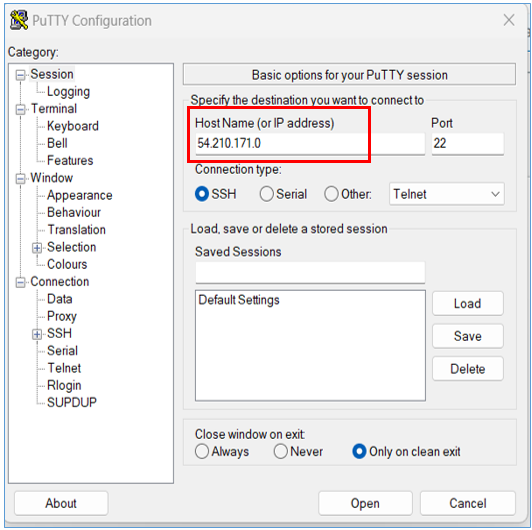

Open PuTTY and paste the copied public IP into the Host Name (or IP address) field.

Then go to Connection -> SSH -> Auth -> Credentials.

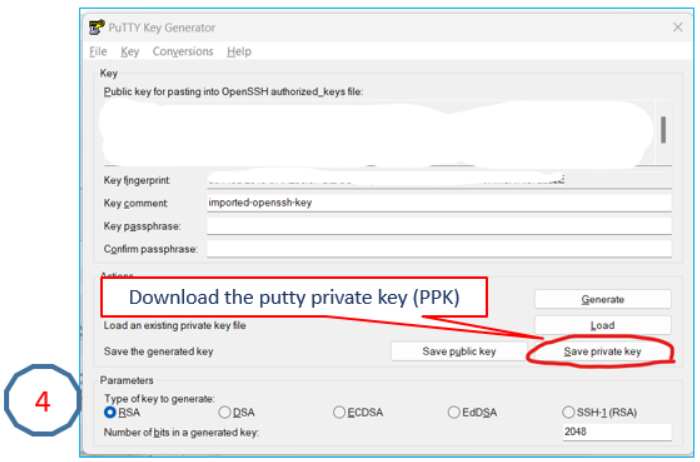

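To authenticate, PuTTY needs a PuTTY private key (.ppk) file, so we'll open PuTTYgen and generate a .ppk file from the .pem file.

Generating .ppk from .pem: in PuTTYgen, click Load, select the downloaded .pem file (switch the file filter to All Files so it is listed), and then click Save private key.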
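On Linux or macOS, the command-line `puttygen` can do the same conversion (on Debian and Ubuntu it ships in the `putty-tools` package). A minimal sketch with a hypothetical helper name, writing the `.ppk` next to the `.pem`:

```shell
# Hypothetical helper: convert an OpenSSH .pem private key to PuTTY's .ppk format.
pem_to_ppk() {
  # $1 = path to the .pem file, e.g. ebs-demo-key.pem
  # "-O private" selects PuTTY's own private key format; the output name swaps .pem for .ppk.
  puttygen "$1" -O private -o "${1%.pem}.ppk"
}
```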

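Back in PuTTY, browse to the generated .ppk file in the Private key file for authentication field, and then click Open.

On the first connection, PuTTY shows a security alert about the server's host key. Click Accept to trust the instance and connect.

At the login as: prompt, enter ec2-user, the default user name on Amazon Linux.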
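If you connect from a machine with OpenSSH (Linux, macOS, or recent versions of Windows), the `.pem` file can be used directly and no `.ppk` conversion is needed. A minimal sketch, where the helper name, key path, and IP address are placeholders:

```shell
# Hypothetical helper: open an SSH session as ec2-user using the .pem key.
connect_ssh() {
  # $1 = path to the .pem file, $2 = public IP of the instance (placeholders)
  ssh -i "$1" "ec2-user@$2"
}
```

For example: `connect_ssh ebs-demo-key.pem 203.0.113.10`.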

The instance is ready for use.

Task 2: Create an EBS Volume and Attach It to the Instance

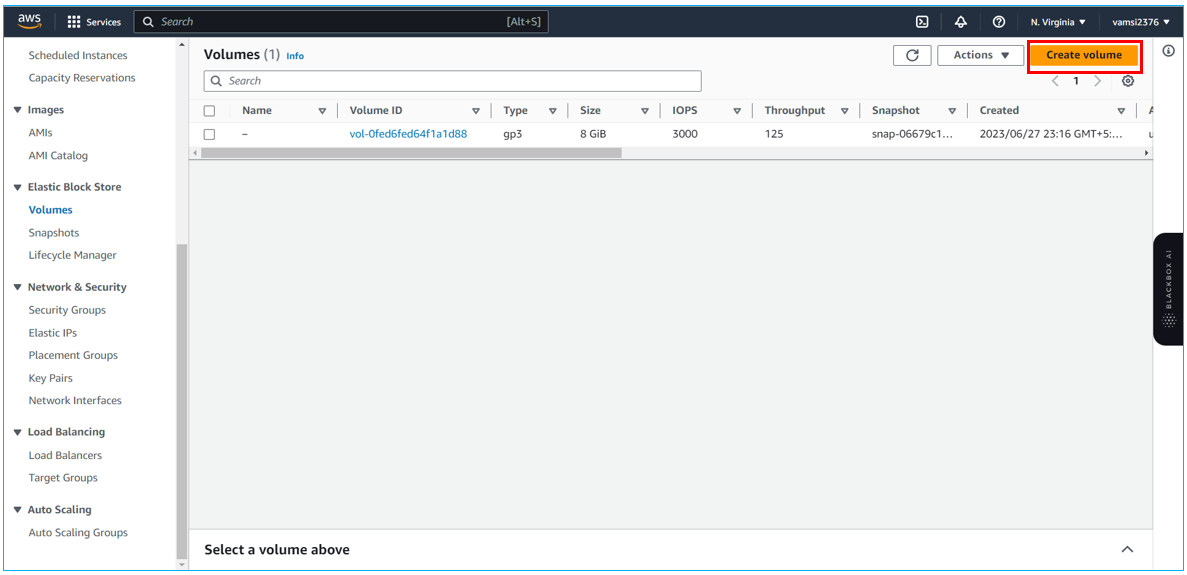

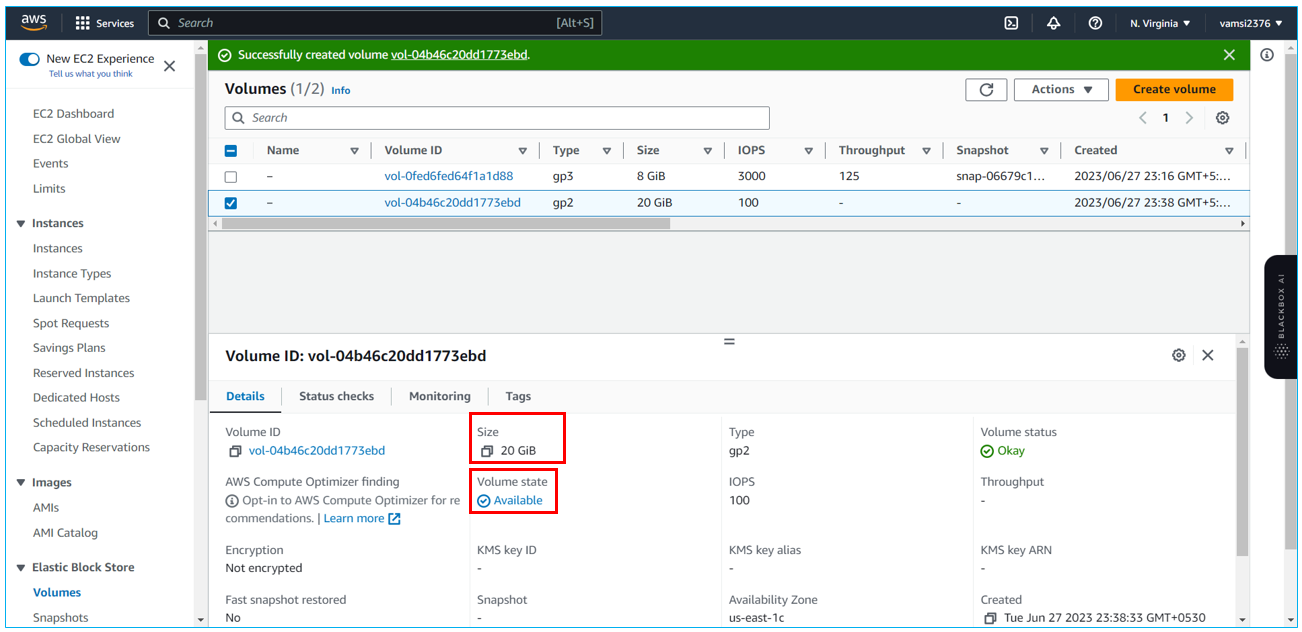

Now we'll create a 20 GiB EBS volume and attach it to the EC2 instance. In the same EC2 console, scroll down the left navigation pane to Elastic Block Store and click Volumes.

The list currently shows only the root volume of the instance we launched in Task 1. Click Create volume.

Enter 20 GiB for the size and select the same Availability Zone that the instance is running in. An EBS volume can only be attached to an instance in the same Availability Zone.

Keep the remaining options as they are and click Create volume.

The volume is created and its state is Available, so it is ready to use.
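The volume can also be created with the AWS CLI. In this sketch (hypothetical helper names, placeholder IDs), the first function looks up the instance's Availability Zone so the volume lands in the same zone, and the second creates a 20 GiB gp2 volume there and prints its ID:

```shell
# Hypothetical helper: print the Availability Zone an instance runs in.
instance_az() {
  # $1 = instance ID (placeholder)
  aws ec2 describe-instances \
    --instance-ids "$1" \
    --query 'Reservations[0].Instances[0].Placement.AvailabilityZone' \
    --output text
}

# Hypothetical helper: create a 20 GiB gp2 volume in the given Availability Zone.
create_data_volume() {
  # $1 = Availability Zone, e.g. us-east-1a
  aws ec2 create-volume \
    --availability-zone "$1" \
    --size 20 \
    --volume-type gp2 \
    --query 'VolumeId' \
    --output text
}
```

A possible usage: `VOLUME_ID=$(create_data_volume "$(instance_az i-0123456789abcdef0)")`, followed by `aws ec2 wait volume-available --volume-ids "$VOLUME_ID"` to block until the volume is Available.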

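Now we'll attach this volume to our instance. Select the volume and choose Actions -> Attach volume.

Select the instance to attach the new volume to and click Attach volume. Note the device name the console proposes (typically /dev/sdf): on Xen-based instance types such as t2.micro, the kernel exposes /dev/sdf as /dev/xvdf, which is the name we'll see inside the instance.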
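The attach step can be sketched with the AWS CLI as well. The helper name and IDs are placeholders, and the device name `/dev/sdf` is an assumption; use whatever name the console proposes if it differs.

```shell
# Hypothetical helper: attach a volume as /dev/sdf and wait until it is in use.
attach_data_volume() {
  # $1 = volume ID, $2 = instance ID (placeholders)
  aws ec2 attach-volume \
    --volume-id "$1" \
    --instance-id "$2" \
    --device /dev/sdf &&
  aws ec2 wait volume-in-use --volume-ids "$1"
}
```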

The volume is now attached and its state changes to In-use. Switch back to the PuTTY session on the instance.

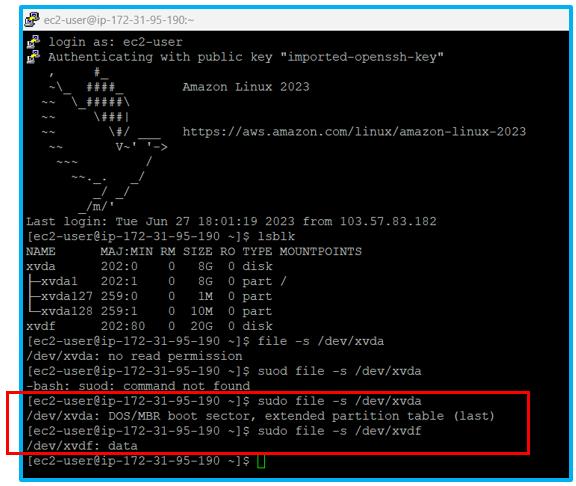

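Run lsblk to list the block devices. The new 20 GiB device, xvdf, is listed, but it has no mount point.

The lsblk command

It lists information about all available block devices, including their sizes and mount points.

Next, run the file command with the -s option (sudo file -s /dev/xvda /dev/xvdf) to see what each device contains. For xvda, it reports the boot and partition information of the root volume, while for xvdf it reports just data, which means the new volume has no file system yet. So, we'll create a file system on xvdf.

The file command

It classifies files by examining their contents. With -s, it also reads block special files such as /dev/xvdf instead of only reporting them as block devices.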
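Both checks can be run together on the instance. A minimal sketch with a hypothetical helper, assuming the new volume appears as `/dev/xvdf` (as on Xen-based t2 instances):

```shell
# Hypothetical helper: inspect a newly attached volume before formatting it.
inspect_new_volume() {
  # $1 = device path of the new volume, e.g. /dev/xvdf
  lsblk              # the new device is listed with an empty MOUNTPOINT column
  sudo file -s "$1"  # prints "<device>: data" while the volume has no file system
}
```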

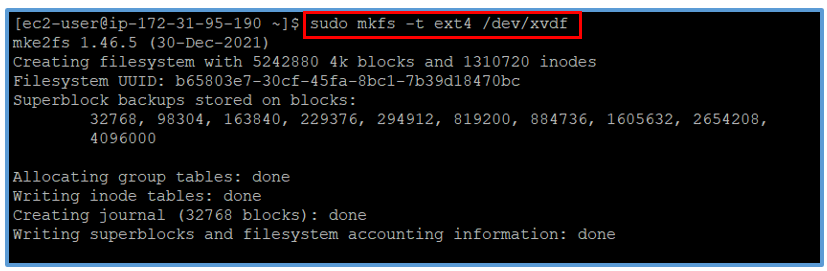

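Use mkfs to create a file system on xvdf. mkfs overwrites whatever is on the device, so run it only on the new, empty volume.

The mkfs command

It builds a Linux file system on a device, usually a hard disk partition.

Next, we'll create a new directory named ebs (mkdir ebs) and mount the new file system on it.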

Now, there is a mount point for xvdf.

The mount command

It serves to attach the file system found on a device to the big file tree.
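Putting the format and mount steps together, a minimal sketch might look like the helper below. The function name is hypothetical, and ext4 is an assumed file system type, chosen because resize2fs (used later) works on ext2, ext3, and ext4.

```shell
# Hypothetical helper: create an ext4 file system on a device and mount it.
format_and_mount() {
  # $1 = device (e.g. /dev/xvdf), $2 = mount point (e.g. ~/ebs)
  # mkfs -t ext4 formats the whole device, destroying any existing data on it.
  sudo mkfs -t ext4 "$1" &&
  mkdir -p "$2" &&
  sudo mount "$1" "$2"
}
```

For example: `format_and_mount /dev/xvdf ~/ebs`, then `lsblk` to confirm the mount point.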

Change into the newly created ebs directory and create a new text file, 1.txt.

1.txt is created. The new EBS volume is now attached to our EC2 instance, formatted, and mounted.
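A minimal sketch of this step (hypothetical helper, placeholder mount point). It uses sudo on the assumption that the root directory of the freshly created file system is owned by root, which is what happens when mkfs is run through sudo:

```shell
# Hypothetical helper: create 1.txt on the mounted volume and list the directory.
write_test_file() {
  # $1 = mount point of the EBS volume, e.g. ~/ebs
  # sudo is used because the new file system's root directory belongs to root.
  sudo touch "$1/1.txt" &&
  ls -l "$1"
}
```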

Task 3: Resize the Attached Volume and Make Sure the Change Is Reflected in the Instance

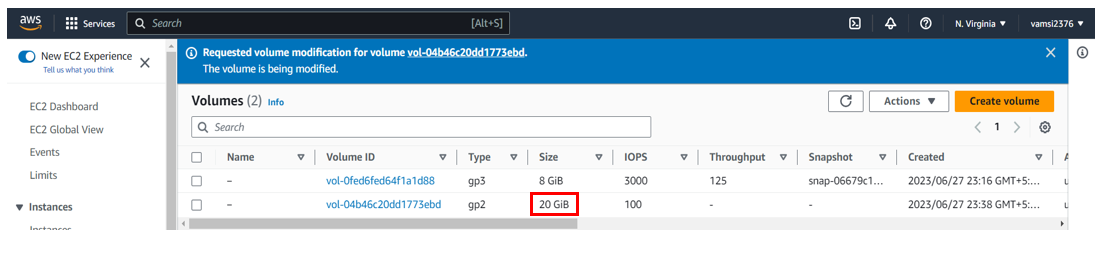

Go to AWS Console -> EC2 -> Elastic Block Store -> Volumes, select the volume, and choose Actions -> Modify volume. Change the size from 20 GiB to 30 GiB and click Modify.

When asked to confirm the change, click Modify again.

The modification is in progress (its state moves from modifying to optimizing), and the size still shows 20 GiB.

Once the modification is applied, the size changes to 30 GiB.
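The resize can also be requested from the AWS CLI. In this sketch (hypothetical helper, placeholder volume ID), `modify-volume` starts the change and `describe-volumes-modifications` reports its progress. The file system can be extended once the state reaches `optimizing` or `completed`.

```shell
# Hypothetical helper: grow a volume and print the modification state.
grow_volume() {
  # $1 = volume ID (placeholder), $2 = new size in GiB, e.g. 30
  aws ec2 modify-volume --volume-id "$1" --size "$2" &&
  aws ec2 describe-volumes-modifications \
    --volume-ids "$1" \
    --query 'VolumesModifications[0].ModificationState' \
    --output text  # modifying, optimizing, completed, or failed
}
```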

Now let's check the device information. lsblk already shows xvdf as 30 GiB, but df still shows 20 GiB, because the volume has grown while the file system on it has not.

The df command

It displays the amount of disk space available on the file system containing each file name argument.

To make the file system use the extra space, we'll extend it with the resize2fs command.
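On the instance, the extension is a single resize2fs call followed by a df check. A minimal sketch with a hypothetical helper, assuming an ext4 file system created directly on `/dev/xvdf` (no partition) and mounted at `~/ebs`. An XFS file system would need `xfs_growfs` instead, and a partitioned disk would need its partition grown first.

```shell
# Hypothetical helper: grow a mounted ext4 file system to fill its device, then show the new size.
extend_filesystem() {
  # $1 = device (e.g. /dev/xvdf), $2 = mount point (e.g. ~/ebs)
  # With no size argument, resize2fs grows the file system to fill the device;
  # for a mounted ext4 file system this happens online.
  sudo resize2fs "$1" &&
  df -h "$2"
}
```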

Now df shows 30 GiB, and 1.txt, created in Task 2, is still there, so the resize kept the existing data intact.

The resize2fs program

It will resize ext2, ext3 or ext4 file systems. It can be used to enlarge or shrink an unmounted file system located on a device. If the file system is mounted, it can be used to expand the size of the mounted file system, assuming the kernel and the file system support online resizing.

And that's it! We've successfully launched a Linux EC2 instance, attached an EBS volume, and resized it to meet our requirements.

Conclusion:

AWS provides a powerful and flexible platform for cloud computing, and knowing how to work with EC2 instances and EBS volumes is a core skill for any AWS user. In this blog post, I've walked you through launching an EC2 instance, creating and attaching an EBS volume, and resizing it when more space is needed. With these steps, you can add and grow storage for your instances as your requirements change.

Written by

SIDDHANI VAMSI SAI KUMAR

I've spent over 9 years working in software development for the Indian Defense industry. I'm skilled in C++, Qt, Socket Programming, Multi-Threading, BASH Scripting, and CUDA, which have all been crucial for projects in defense. Right now, I'm learning about cloud computing and DevOps, especially focusing on AWS. I'm passionate about making software development and deployment smoother and more reliable using cloud technology. Looking ahead, I'm excited about roles in cloud computing and DevOps. I want to use my software skills and knowledge of AWS and DevOps to lead exciting projects and make a difference in the tech world.