A Guide to Human Pose Estimation for AI

tagx

Human pose estimation and tracking is a computer vision task that includes detecting, associating, and tracking semantic key points. Examples of semantic key points are “right shoulders,” “left knees,” or the “left brake lights of vehicles.”

Tracking semantic keypoints in live video footage requires substantial computational resources, which has limited the accuracy of pose estimation. With recent advances, new applications with real-time requirements have become possible, such as self-driving cars and last-mile delivery robots.

Today, the most powerful image processing models are based on convolutional neural networks (CNNs). As a result, state-of-the-art methods typically design CNN architectures tailored specifically to human pose inference.

Importance of Pose Estimation

In traditional object detection, people are perceived only as a bounding box (a rectangle). By performing pose detection and pose tracking, computers can develop an understanding of human body language. However, conventional pose tracking methods are neither fast enough nor robust enough to occlusions to be viable.

High-performing real-time pose detection and tracking will drive some of the biggest trends in computer vision. For example, tracking the human pose in real-time will enable computers to develop a finer-grained and more natural understanding of human behavior.

This will have a big impact on various fields, for example, autonomous driving. Today, the majority of self-driving car accidents are caused by “robotic” driving, where the self-driving vehicle makes an allowed but unexpected stop and a human driver crashes into it. With real-time human pose detection and tracking, computers can understand and predict pedestrian behavior much better, allowing more natural driving.

What is Human Pose Estimation?

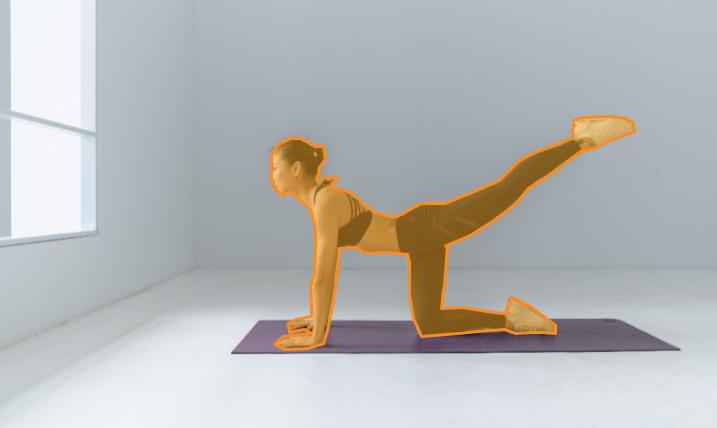

The goal of human pose estimation is to predict the positions of human body parts and joints in images or videos. Because pose motions are frequently driven by specific human actions, knowing a human’s body pose is critical for action recognition.

2D Pose Estimation – 2D pose estimation is based on the detection and analysis of X, Y coordinates of human body joints from an RGB image.

3D Pose Estimation – 3D pose estimation is based on the detection and analysis of X, Y, Z coordinates of human body joints from an RGB image.
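In practice, a 2D pose for K joints is a (K, 2) array of pixel coordinates, while a 3D pose is a (K, 3) array. The minimal sketch below, assuming an ideal pinhole camera with no lens distortion and joints already expressed in camera coordinates (the focal length and principal point are hypothetical values), shows how a 3D pose maps to a 2D one and why depth is lost in the process.

```python
import numpy as np


def project_to_2d(joints_3d: np.ndarray, focal: float, cx: float, cy: float) -> np.ndarray:
    """Project (K, 3) camera-space joints (X, Y, Z) to (K, 2) pixel coordinates.

    Assumes an ideal pinhole camera: u = f * X / Z + cx, v = f * Y / Z + cy.
    """
    X, Y, Z = joints_3d[:, 0], joints_3d[:, 1], joints_3d[:, 2]
    if np.any(Z <= 0):
        raise ValueError("all joints must lie in front of the camera (Z > 0)")
    u = focal * X / Z + cx
    v = focal * Y / Z + cy
    return np.stack([u, v], axis=1)


# Two hypothetical joints, 2.0 and 2.5 units in front of the camera.
pose_3d = np.array([[0.0, 0.0, 2.0], [0.5, -0.2, 2.5]])
pose_2d = project_to_2d(pose_3d, focal=1000.0, cx=320.0, cy=240.0)
```

Any point along the same viewing ray (for example, doubling X, Y, and Z together) lands on the same pixel, which is why 3D pose estimation from a single RGB image has to infer the missing Z coordinate rather than measure it.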

Human body modeling

In human pose estimation, the locations of human body parts are used to build a representation of the human body (such as a skeleton pose) from visual input data. Human body modeling is therefore an essential component of human pose estimation: it represents the features and key points extracted from visual input data. A model-based approach is typically used to describe and infer human body poses, as well as to render 2D or 3D poses.

Most methods employ an N-joint rigid kinematic model, in which the human body is represented as an entity with joints and limbs that captures information about the body's kinematic structure and shape.
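A rigid kinematic model can be sketched as a tree of joints in which each limb has a fixed length and only its orientation changes. The sketch below assumes a hypothetical five-joint chain (the joint names and parent indices are illustrative, not a standard keypoint layout): it splits a pose into limb lengths and unit-vector orientations, then rebuilds the pose from them.

```python
import numpy as np

# Hypothetical 5-joint chain for illustration only (not a standard layout).
# PARENTS[i] is the parent of joint i; -1 marks the root.
# Parents must appear before their children in this ordering.
JOINT_NAMES = ["pelvis", "spine", "neck", "right_shoulder", "right_elbow"]
PARENTS = [-1, 0, 1, 2, 3]


def limbs_from_joints(joints, parents):
    """Decompose (K, 3) joint positions into per-limb lengths and unit directions."""
    lengths, directions = {}, {}
    for child, parent in enumerate(parents):
        if parent < 0:
            continue  # the root has no incoming limb
        vec = joints[child] - joints[parent]
        lengths[(parent, child)] = float(np.linalg.norm(vec))
        directions[(parent, child)] = vec / lengths[(parent, child)]
    return lengths, directions


def forward_kinematics(root, parents, lengths, directions):
    """Rebuild joint positions from the root position, limb lengths and orientations."""
    joints = np.zeros((len(parents), 3))
    for child, parent in enumerate(parents):
        if parent < 0:
            joints[child] = root
        else:
            joints[child] = joints[parent] + lengths[(parent, child)] * directions[(parent, child)]
    return joints
```

Changing only the direction stored for one limb moves every joint below it in the chain while all limb lengths stay the same, which is the "rigid" part of the model. Note that nothing here describes texture or body shape.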

There are three types of models for human body modeling:

The kinematic model, also known as the skeleton-based model, is used for both 2D and 3D pose estimation. This flexible and intuitive model represents the human body structure as a set of joint positions and limb orientations, which is why skeleton-based pose estimation models are used to capture the relationships between different body parts. However, kinematic models are limited in their ability to represent texture or shape information.

A planar model, also known as a contour-based model, is used for 2D pose estimation. Planar models are used to depict the appearance and shape of the human body. Typically, body parts are represented by a series of rectangles that approximate the contours of the human body. The Active Shape Model (ASM) is a popular example of how principal component analysis can be used to capture the full human body graph and silhouette deformations.

A volumetric model is used for 3D pose estimation. Several popular 3D human body models are used for deep learning-based 3D human pose estimation and for recovering 3D human meshes. For example, GHUM and GHUML(ite) are fully trainable end-to-end deep learning pipelines, trained on a high-resolution dataset of full-body scans of over 60,000 human configurations, that model statistical and articulated 3D human body shapes and poses.
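The principal component analysis mentioned for the Active Shape Model can be sketched as a point distribution model: learn a mean shape and a few modes of variation from training contours, then describe any shape as x = mean + P b. This is only the statistical shape part of ASM (the iterative image search that fits it to a picture is not shown), and it assumes the training shapes are already aligned, for example with Procrustes analysis. Function names are hypothetical.

```python
import numpy as np


def fit_shape_model(shapes: np.ndarray, n_modes: int):
    """Learn a PCA shape model.

    shapes: (M, 2K) array of M pre-aligned training shapes, each made of
    K (x, y) landmarks flattened into one row.
    """
    mean = shapes.mean(axis=0)
    centered = shapes - mean
    # Principal components via SVD of the centered data matrix.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    modes = vt[:n_modes].T                            # (2K, n_modes), orthonormal columns
    variances = (s[:n_modes] ** 2) / (len(shapes) - 1)  # variance along each mode
    return mean, modes, variances


def generate_shape(mean, modes, b):
    """Synthesize a shape from mode weights b: x = mean + P b."""
    return mean + modes @ b


def project_shape(shape, mean, modes):
    """Best mode weights for an observed shape: b = P^T (x - mean)."""
    return modes.T @ (shape - mean)
```

In ASM-style fitting, the weights b are usually kept within a few standard deviations of each mode (the square roots of `variances`) so that generated contours remain plausible silhouettes.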

The main difficulties

Human pose estimation is a difficult task because the appearance of the body and its joints changes dynamically due to different types of clothing, arbitrary occlusions, occlusions caused by the viewing angle, and background context. Pose estimation must also be robust to challenging real-world variations such as lighting and weather.

As a result, it is difficult for image processing models to identify fine-grained joint coordinates. It is especially difficult to track small and barely visible joints.
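One common way CNN-based estimators localize joints is to predict one heatmap per joint and take its peak. The sketch below assumes that heatmap output format (the model itself is not shown). It uses a quarter-pixel shift toward the higher neighbor as a simple sub-pixel refinement heuristic, and marks low-confidence joints as missing instead of guessing a position for barely visible joints. The `min_confidence` threshold is a hypothetical value.

```python
import numpy as np


def decode_heatmaps(heatmaps: np.ndarray, min_confidence: float = 0.3):
    """Turn (K, H, W) per-joint heatmaps into (K, 2) x/y coordinates and (K,) scores.

    Joints whose peak score falls below min_confidence get NaN coordinates.
    """
    K, H, W = heatmaps.shape
    coords = np.full((K, 2), np.nan)
    scores = np.zeros(K)
    for k in range(K):
        hm = heatmaps[k]
        y, x = np.unravel_index(np.argmax(hm), hm.shape)  # integer peak location
        scores[k] = hm[y, x]
        if scores[k] < min_confidence:
            continue  # treat as not visible rather than report a noisy location
        fx, fy = float(x), float(y)
        # Sub-pixel heuristic: move a quarter pixel toward the higher neighbor.
        if 0 < x < W - 1:
            fx += 0.25 * np.sign(hm[y, x + 1] - hm[y, x - 1])
        if 0 < y < H - 1:
            fy += 0.25 * np.sign(hm[y + 1, x] - hm[y - 1, x])
        coords[k] = (fx, fy)
    return coords, scores
```

Heatmaps are typically predicted at a lower resolution than the input image, so a small error on the heatmap grid becomes a larger error in image pixels. This is one reason fine-grained joint coordinates are hard to get right.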

Head pose estimation

A common computer vision problem is estimating a person’s head pose. Head pose estimation has a variety of applications, including assisting in gaze estimation, modeling attention, fitting 3D models to video, and performing face alignment.

Traditionally, the head pose is computed by using key points from the target face and solving the 2D to 3D correspondence problem with a mean human head model.
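The classical approach solves a full perspective 2D-to-3D correspondence (Perspective-n-Point) problem, for example with OpenCV's `cv2.solvePnP`. To keep this sketch dependency-free, it swaps in a simpler weak-perspective (scaled orthographic) camera. A 2x3 camera matrix is fitted by least squares from 2D face keypoints to a mean 3D head model, and the head rotation is read from its rows. The 3D landmark coordinates below are rough illustrative values (an assumption, not a calibrated head model), and the Euler-angle convention is one choice among several.

```python
import numpy as np

# Rough illustrative 3D landmarks of a generic "mean" head (arbitrary units,
# y up). These values are an assumption, not a calibrated head model.
MEAN_HEAD_3D = np.array([
    [0.0, 0.0, 0.0],          # nose tip
    [0.0, -330.0, -65.0],     # chin
    [-225.0, 170.0, -135.0],  # outer eye corner (one side)
    [225.0, 170.0, -135.0],   # outer eye corner (other side)
    [-150.0, -150.0, -125.0], # mouth corner (one side)
    [150.0, -150.0, -125.0],  # mouth corner (other side)
])


def estimate_head_rotation(points_2d, model_3d=MEAN_HEAD_3D):
    """Weak-perspective head rotation from (N, 2) keypoints matching model_3d rows.

    points_2d must use the same axis orientation as the model (flip image y first).
    """
    X = model_3d - model_3d.mean(axis=0)    # centering removes translation
    x = points_2d - points_2d.mean(axis=0)
    # Least-squares 2x3 camera M with x ≈ X @ M.T, where M ≈ scale * R[:2].
    M = np.linalg.lstsq(X, x, rcond=None)[0].T
    r1 = M[0] / np.linalg.norm(M[0])
    r2 = M[1] / np.linalg.norm(M[1])
    R = np.stack([r1, r2, np.cross(r1, r2)])
    # Snap to the nearest proper rotation (noisy rows are not exactly orthogonal).
    U, _, Vt = np.linalg.svd(R)
    R = U @ Vt
    if np.linalg.det(R) < 0:
        U[:, -1] *= -1
        R = U @ Vt
    return R


def rotation_to_euler(R):
    """(pitch, yaw, roll) in radians for R = Rz(roll) @ Ry(yaw) @ Rx(pitch)."""
    pitch = np.arctan2(R[2, 1], R[2, 2])
    yaw = np.arctan2(-R[2, 0], np.hypot(R[0, 0], R[1, 0]))
    roll = np.arctan2(R[1, 0], R[0, 0])
    return pitch, yaw, roll
```

Weak perspective is a reasonable approximation when the head is far from the camera relative to its depth. For close-up faces, a full perspective solver with known camera intrinsics is the more accurate choice.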

Recovering the 3D pose of the head is a byproduct of keypoint-based facial expression analysis, which relies on extracting 2D facial keypoints with deep learning methods. These methods are robust to occlusions and extreme pose changes.

Animal pose estimation

The majority of cutting-edge methods concentrate on human body pose detection and tracking. However, some models were created to be used with animals and automobiles (object pose estimation).

Animal pose estimation is complicated by a lack of labeled data (images must be manually annotated) and a high number of self-occlusions. As a result, animal datasets are typically small and include only a few animal species.

Estimating the pose of multiple animals is also a difficult computer vision problem: frequent interactions cause occlusions and make it hard to assign detected key points to the correct individual. The problem is compounded because very similar-looking animals often interact more closely than humans normally would.

Transfer learning techniques have been developed to address these issues by re-applying methods designed for humans to animals. One example is multi-animal pose estimation and tracking with DeepLabCut, a popular open-source pose estimation toolbox for animals and humans.

Video person pose tracking

Multi-frame human pose estimation in complex situations is difficult and requires a lot of computing power. While human joint detectors perform well on static images, they frequently fall short when applied to video sequences for real-time pose tracking.

The most difficult challenges are handling motion blur, video defocus, and pose occlusions, and capturing the temporal dependencies between video frames.

When modeling spatial contexts with traditional recurrent neural networks (RNN), empirical difficulties arise, particularly when dealing with pose occlusions. DCPose, a cutting-edge multi-frame human pose estimation framework, takes advantage of abundant temporal cues between video frames to facilitate keypoint detection.
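At its simplest, tracking poses across video frames means linking each pose detected in the current frame to a track from the previous frame. The sketch below is not DCPose. It is a basic greedy baseline that matches poses by mean keypoint distance, skips joints missing (NaN) in either pose, and leaves any detection farther than a hypothetical `max_distance` (in pixels) unmatched so that it can start a new track.

```python
import numpy as np


def mean_keypoint_distance(pose_a, pose_b):
    """Average Euclidean distance over joints visible (non-NaN) in both (K, 2) poses."""
    valid = ~(np.isnan(pose_a).any(axis=1) | np.isnan(pose_b).any(axis=1))
    if not valid.any():
        return np.inf  # nothing to compare
    return float(np.linalg.norm(pose_a[valid] - pose_b[valid], axis=1).mean())


def associate_poses(prev_poses, curr_poses, max_distance=50.0):
    """Greedily match current detections to previous tracks.

    Returns {current_index: previous_index}; unmatched current poses are omitted.
    """
    pairs = sorted(
        (mean_keypoint_distance(p, c), i, j)
        for i, p in enumerate(prev_poses)
        for j, c in enumerate(curr_poses)
    )
    matches, used_prev, used_curr = {}, set(), set()
    for dist, i, j in pairs:  # closest pairs first
        if dist > max_distance:
            break
        if i not in used_prev and j not in used_curr:
            matches[j] = i
            used_prev.add(i)
            used_curr.add(j)
    return matches
```

Greedy matching is easy to follow but not globally optimal. The Hungarian algorithm solves the same assignment problem exactly. Neither approach models motion blur or temporal context, which is the gap that multi-frame methods such as DCPose aim to fill.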

Most Popular Pose Estimation Applications

1: Human Activity Estimation
2: Robot Training
3: Motion Capture for Consoles
4: Motion Capture and Augmented Reality
5: Athlete pose detection

Human Activity Estimation

Tracking and measuring human activity and movement is a fairly obvious application of pose estimation. The DensePose, PoseNet, and OpenPose architectures are frequently used for activity, gesture, and gait recognition.
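Before keypoint sequences are fed to an activity, gesture, or gait classifier, they are often normalized so that the features do not depend on where the person stands or how large they appear. The sketch below is one such preprocessing step, under stated assumptions: the root joint and the two reference joints used for scale (for example, hip and neck) are hypothetical choices that depend on the keypoint layout of your model.

```python
import numpy as np


def normalize_pose_sequence(seq, root_idx, ref_a, ref_b):
    """Make a (T, K, 2) keypoint sequence translation- and scale-invariant.

    Each frame is centered on the root joint and divided by that frame's
    distance between reference joints ref_a and ref_b.
    """
    seq = np.asarray(seq, dtype=float)
    centered = seq - seq[:, root_idx:root_idx + 1, :]               # root joint at the origin
    scale = np.linalg.norm(seq[:, ref_a] - seq[:, ref_b], axis=-1)  # (T,) reference length
    return centered / scale[:, None, None]
```

A classifier can then consume `normalized.reshape(len(normalized), -1)`, which gives one flat feature vector per frame.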

Robot Training

Rather than manually programming robots to follow trajectories, robots can be programmed to follow the trajectories of a human pose skeleton performing an action. By simply demonstrating certain actions, a human instructor can effectively teach the robot those actions. The robot can then calculate how to move its joints to accomplish the same task.

Motion Capture for Consoles

Pose estimation has an interesting application in tracking the motion of human subjects for interactive gaming. Microsoft's Kinect, for example, used 3D pose estimation (based on IR sensor data) to track the motion of human players and used it to render the actions of virtual characters.

Motion Capture and Augmented Reality

Computer-generated imagery (CGI) is another interesting application of human pose estimation. If a person's pose can be estimated, graphics, styles, fancy enhancements, equipment, and artwork can be superimposed on them. By tracking how the pose changes, the rendered graphics can “naturally fit” the person as they move.

Animoji is a good visual example of what is possible. Even though Animoji only tracks the structure of a face, the concept can be extended to the key points of a person's whole body. The same ideas can be used to create augmented reality (AR) elements that mimic a person's movements.
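A simple way to make a graphic follow a person is to anchor it to two tracked keypoints, such as the shoulders, and recompute a similarity transform (rotation, uniform scale, and translation) every frame. This minimal sketch treats 2D points as complex numbers, where z' = a z + b maps the graphic's two anchor points exactly onto the detected keypoints. The anchor choice and function names are hypothetical.

```python
import numpy as np


def similarity_from_two_points(src_a, src_b, dst_a, dst_b):
    """2x3 matrix mapping src_a -> dst_a and src_b -> dst_b.

    Uses rotation + uniform scale + translation only (no shear).
    """
    p1, p2 = complex(*src_a), complex(*src_b)
    q1, q2 = complex(*dst_a), complex(*dst_b)
    a = (q2 - q1) / (p2 - p1)  # scale * (cos(theta) + i * sin(theta))
    b = q1 - a * p1            # translation
    return np.array([[a.real, -a.imag, b.real],
                     [a.imag,  a.real, b.imag]])


def apply_transform(M, points):
    """Apply a 2x3 transform to (N, 2) points."""
    points = np.asarray(points, dtype=float)
    return points @ M[:, :2].T + M[:, 2]
```

A 2x3 matrix of this form could also be passed to an image-warping routine such as OpenCV's `cv2.warpAffine` to draw the overlay itself. Using only two anchors keeps the graphic undistorted, but it cannot follow out-of-plane rotations of the body.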

Athlete pose detection

Pose detection can assist players in fine-tuning their technique and achieving better results. Apart from that, pose detection can be used to analyze and learn about the opponent’s strengths and weaknesses, which is extremely useful for professional athletes and their trainers.
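Much technique analysis comes down to joint angles computed from detected keypoints, for example the knee angle from the hip, knee, and ankle positions in each frame. The sketch below computes the angle at a middle joint for 2D or 3D keypoints. The example joints are illustrative, and any "good technique" target range would have to come from a coach or from domain data.

```python
import numpy as np


def joint_angle(a, b, c):
    """Angle in degrees at joint b between segments b->a and b->c (e.g. hip-knee-ankle)."""
    v1 = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    v2 = np.asarray(c, dtype=float) - np.asarray(b, dtype=float)
    cos_theta = np.dot(v1, v2) / (np.linalg.norm(v1) * np.linalg.norm(v2))
    # Clip guards against tiny floating-point overshoot outside [-1, 1].
    return float(np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0))))


# Illustrative keypoints: a fully extended leg gives roughly 180 degrees.
knee_angle = joint_angle((0.0, 0.0), (0.0, 1.0), (0.0, 2.0))
```

Computing this angle for every frame produces a curve over the whole movement, which can then be compared between attempts or between athletes.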

Conclusion

Great progress has been made in human pose estimation, allowing it to better serve the wide variety of applications it enables. Research in related fields such as pose tracking can further improve its practical use across many domains. 3D pose estimation remains one of the most fascinating and difficult tasks in computer vision. In sports, the technology already offers many options for meeting growing demand: it helps athletes improve their technique, avoid injury, and increase their endurance. In the future, it has the potential to bring much more to the table.

TagX specializes in data collection and classification, including labeling, image tagging, and annotation, to make such data recognizable to the machines and computer vision systems used to train artificial intelligence models. Whether you have a one-time project or need data on an ongoing basis, our experienced project managers ensure that the whole process runs smoothly.
