Activation Functions in Neural NetworksProblem

Juan Carlos Olamendy

Juan Carlos Olamendy

Problem

Ever wondered why your neural network isn't capturing complex patterns? It might be missing the magic of Activation Functions. Without them, you're just playing with a linear model.

Without activation functions:

- Your model can't map non-linear relationships

- No gradients to guide weight updates

- No focus on essential features

Imagine training a model for days only to realize it's not versatile enough!

What is an Activation Function?

An activation function is a mathematical function applied to the output of each neuron in the network.

It determines whether a neuron should be activated or not, based on the weighted sum of its input.

Essentially, it introduces non-linearity into the model, allowing the network to learn from the error and make adjustments, which is essential for learning complex patterns.

Why are Activation Functions Important?

Non-linearity: Without activation functions, a neural network would simply be a linear regression model, which limits its utility. Non-linearity ensures that the network can map non-linear relationships in the data, making it versatile and powerful.

Gradient: Activation functions and their derivatives (used in backpropagation) provide the necessary gradients that guide the weight updates during training. A good activation function will have a gradient that aids in propagating the error back through the network.

Thresholding: Activation functions introduce a threshold, ensuring that neurons are only activated when necessary. This thresholding capability allows neural networks to focus on the most important features.

Common Activation Functions

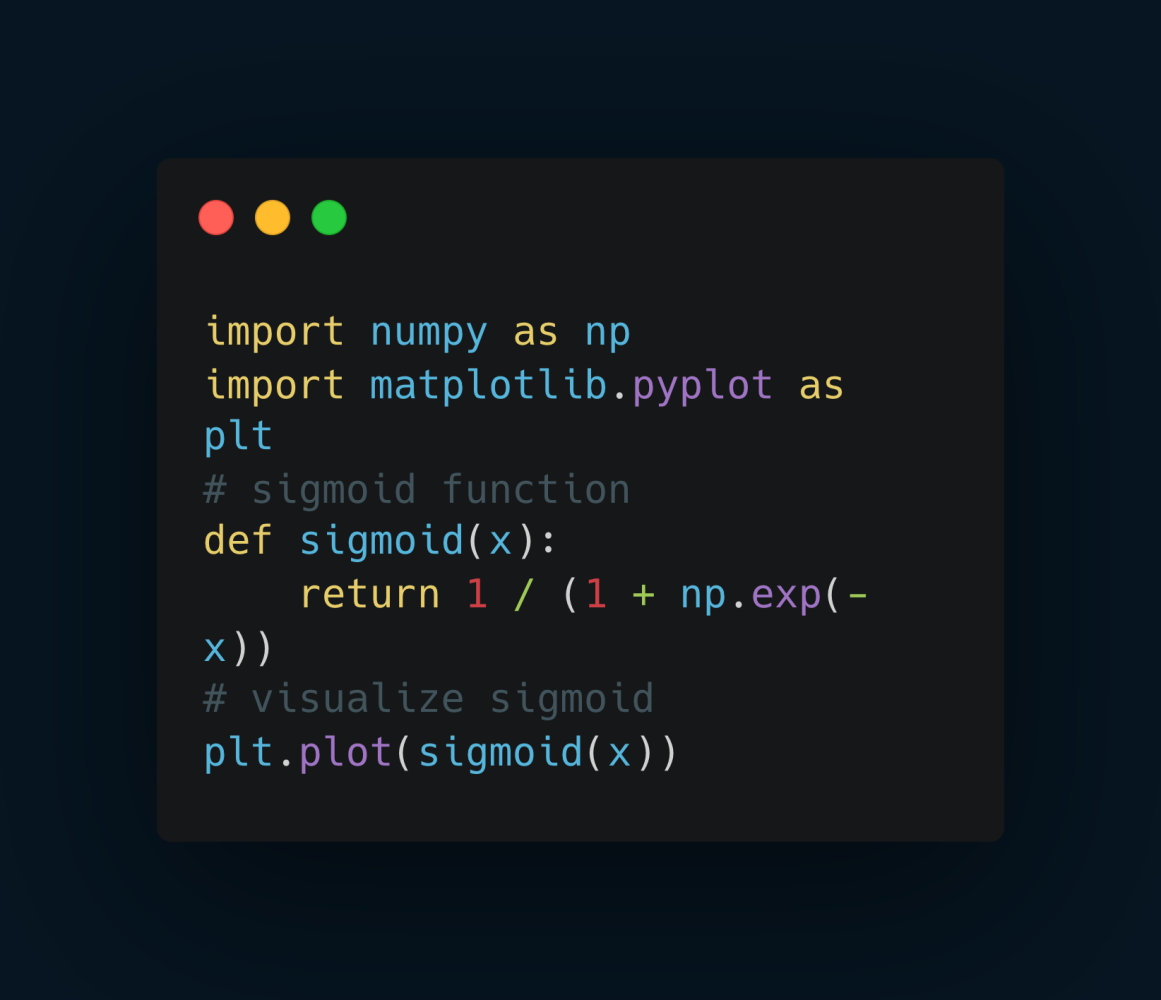

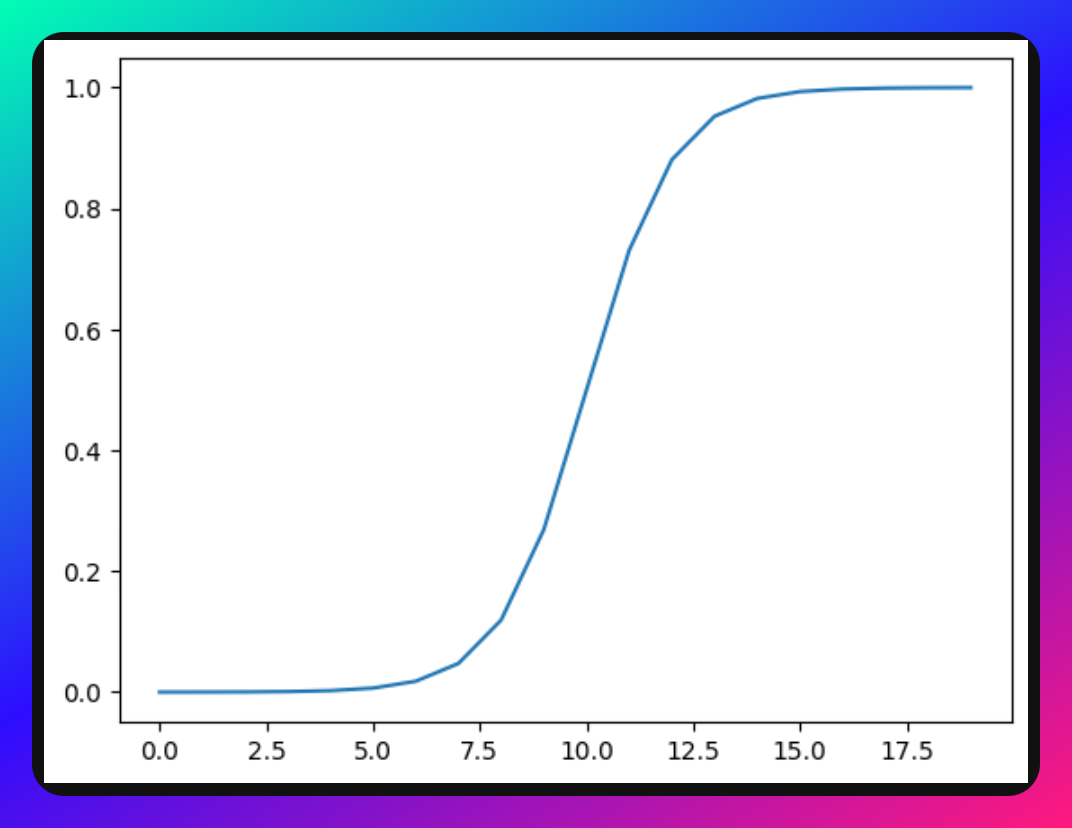

Sigmoid Function: This is an S-shaped curve that maps any input value to an output between 0 and 1. It's beneficial for binary classification problems. However, it suffers from the vanishing gradient problem, which can slow down training.

Sigmoid implementation using Numpy.

Sigmoid implementation using Pytorch.

Sigmoid plot.

Tanh (Hyperbolic Tangent) Function: Similar to the sigmoid but maps values between -1 and 1. It is zero-centered, making it preferable over the sigmoid in many cases.

ReLU (Rectified Linear Unit): One of the most popular activation functions, ReLU outputs the input if it's positive; otherwise, it outputs zero. It's computationally efficient and helps mitigate the vanishing gradient problem. However, it can suffer from the "dying ReLU" problem where neurons can sometimes get stuck during training and stop updating.

Relu implementation using Numpy.

Relu implementation using Pytorch.

Relu plot.

Leaky ReLU: A variant of ReLU, it has a small slope for negative values, preventing neurons from "dying."

Softmax: Used primarily in the output layer of classification problems, it converts the output scores into probabilities.

Choosing the Right Activation Function

The choice of activation function depends on the specific application and the nature of the data:

- For binary classification, the sigmoid function is often used in the output layer.

- For multi-class classification, the softmax function is preferred in the output layer.

- ReLU and its variants are commonly used in hidden layers due to their computational efficiency and performance.

Conclusion

Activation functions are the unsung heroes of neural networks, driving their capacity to learn and adapt.

They introduce the necessary non-linearity that allows neural networks to approximate almost any function.

As research in deep learning progresses, we may see the emergence of new activation functions tailored to specific challenges, further enhancing the capabilities of neural networks.

In summary, activation functions are our heroes for making neural networks the powerhouses they are today.

If you like this, share with others ♻️

Subscribe to my newsletter

Read articles from Juan Carlos Olamendy directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Juan Carlos Olamendy

Juan Carlos Olamendy

🤖 Talk about AI/ML · AI-preneur 🛠️ Build AI tools 🚀 Share my journey 𓀙 🔗 http://pixela.io