Best of all: Automatic log output scheme for AWS IoT solutions

honma


Abstract

The number of solutions using AWS IoT is growing every day!

- The number of IoT devices connecting to AWS is also increasing.

As the number of devices grows, server-side developers spend more and more time investigating logs whenever a failure occurs between a device and AWS IoT.

DevOps engineers need a simple, well-designed log output scheme, and they need to automate it so that they can focus on development.

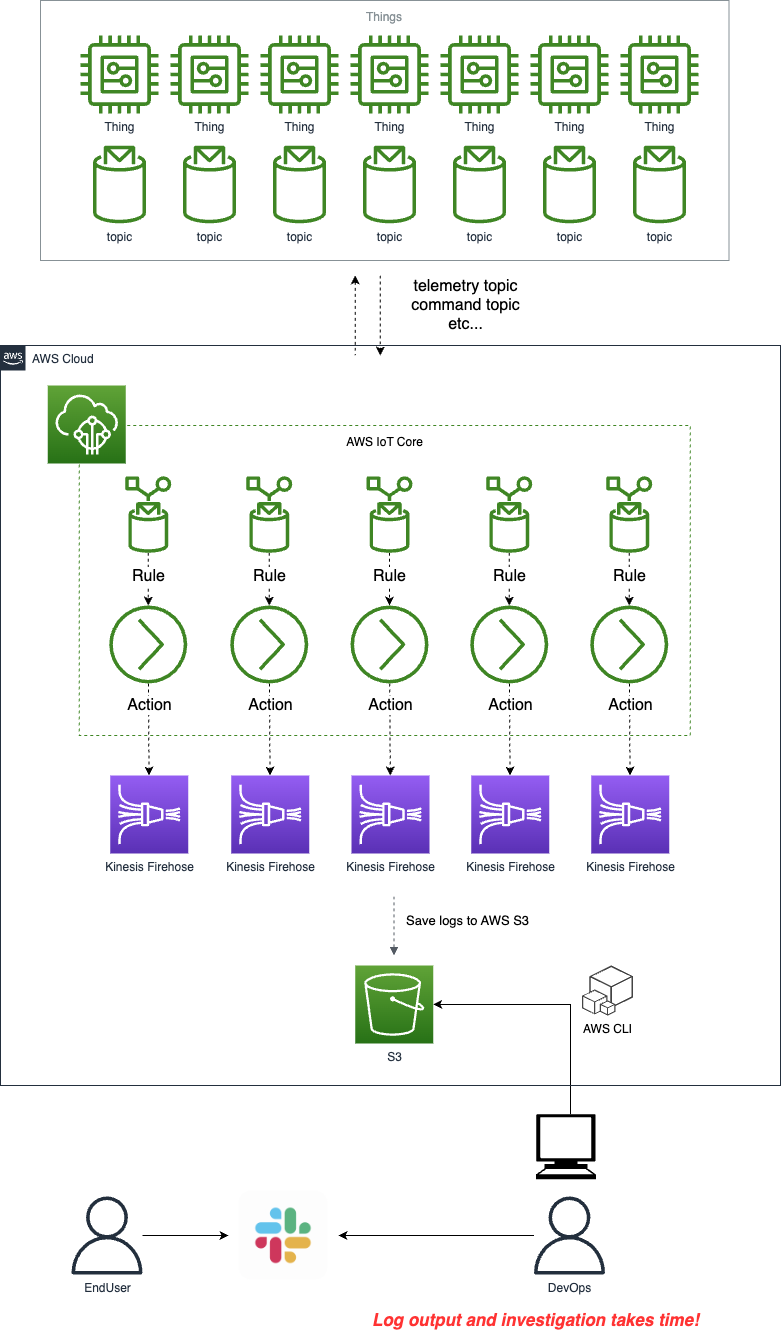

As Is

Logs are retrieved from S3 manually with the AWS CLI.

Development work is interrupted by log requests on Slack.

Not everyone can retrieve logs whenever they need them, which prolongs investigations.

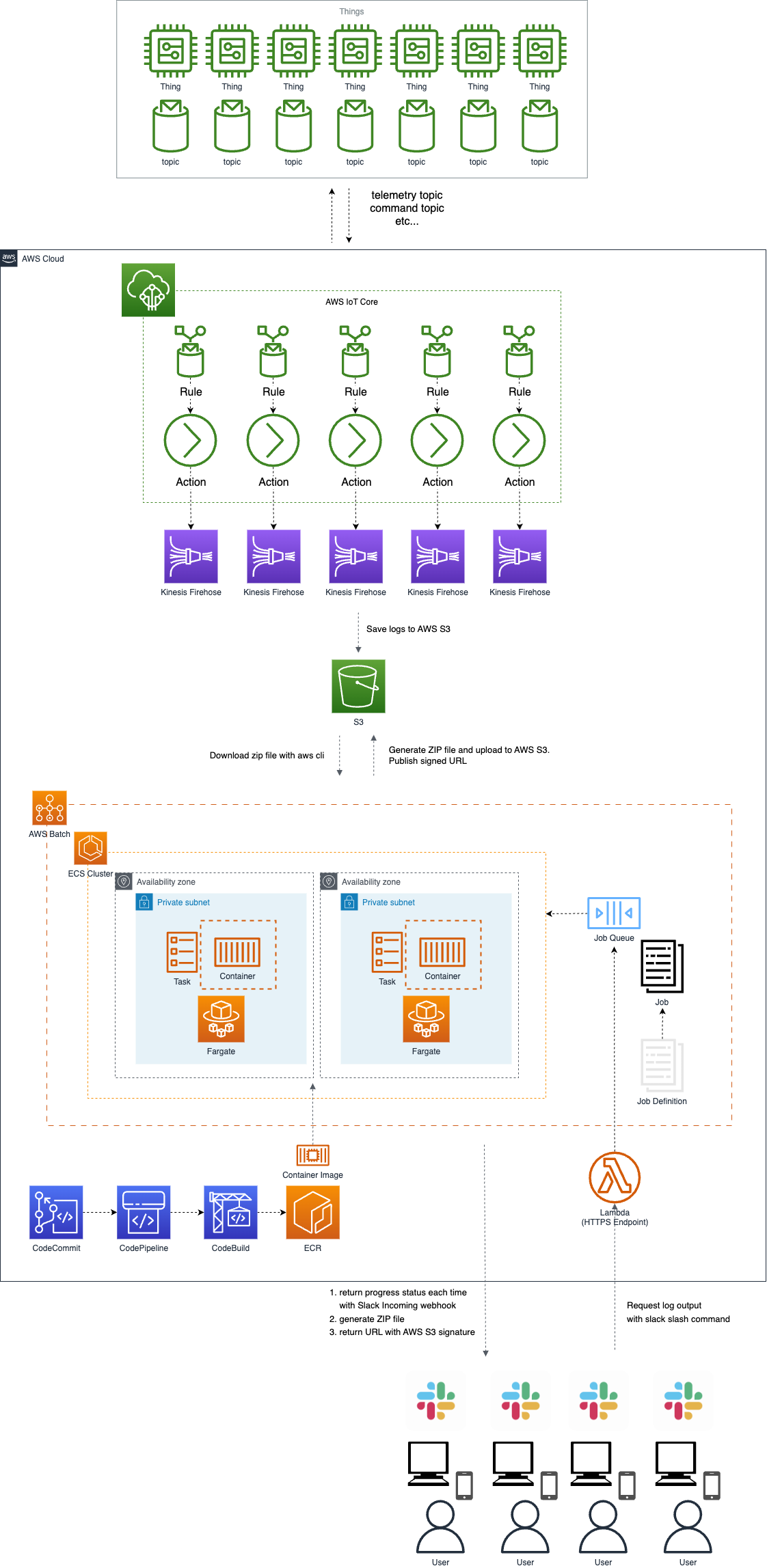

To Be

Automate log output with a serverless architecture to keep costs as low as possible.

Reduce communication on Slack to the bare minimum.

Enable anyone to retrieve logs from anywhere, as long as they have Slack on their PC or phone.

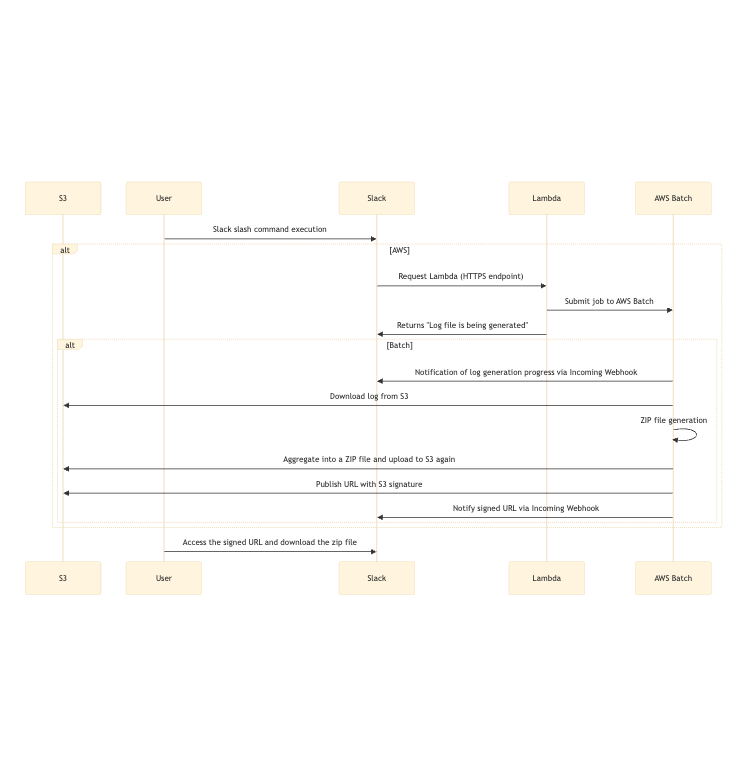

Sequence

I use AWS Batch to consolidate a large number of log files into a single zip file.

Please note that the Slack slash command requires a response within 3 seconds.
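Because of this 3-second limit, the Lambda function should only decode the request, hand the heavy work to AWS Batch, and reply immediately. The following is a minimal sketch assuming a Lambda function URL event: Slack sends the slash command as an application/x-www-form-urlencoded POST, and the function URL event may deliver that body base64-encoded. The helper names `parse_slack_form` and `ack_response` and the reply text are illustrative assumptions, not part of the original design.

```python
import base64
import json
from urllib.parse import parse_qs


def parse_slack_form(event: dict) -> dict:
    """Decode the form-encoded body Slack sends to the function URL."""
    body = event.get("body") or ""
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")
    # parse_qs returns a list per key; Slack sends each field once, so keep the first value.
    return {key: values[0] for key, values in parse_qs(body).items()}


def ack_response(text: str) -> dict:
    """Function URL response; Slack posts the JSON body as the first reply."""
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"response_type": "in_channel", "text": text}),
    }


def lambda_handler(event, context):
    form = parse_slack_form(event)
    # Placeholder: a real handler would submit the AWS Batch job here (submit_job
    # returns as soon as the job is queued) and pass form["response_url"] to the job.
    return ack_response(f"Request: {form.get('text', '')}\nGenerating log file...")
```

Setting `response_type` to `in_channel` makes the acknowledgement visible to everyone in the channel; `ephemeral` would show it only to the user who ran the command.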

AWS Architecture

Command Specifications

Guidelines

/iot-log [Identifier] [Category] [Date(UTC)]

Identifier:

ThingName

Certificate ID

etc...

Category:

Restrict the accepted category values to fit the constraints of the slash command and AWS Batch

telemetry

command

lifecycle

etc...

Date:

2023/01/01

Note that the date is in UTC, matching the date-based directory structure of the logs in S3.
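A minimal parser for the command text might look like the sketch below. It assumes that the categories listed above are the only allowed values and that dates use the YYYY/MM/DD format. `LogRequest` and `parse_command_text` are hypothetical names, and so is the optional fourth token: the display example below passes a trailing `dev`, which this sketch treats as an environment name purely as an assumption.

```python
from dataclasses import dataclass
from datetime import date, datetime
from typing import List, Optional

# Assumed whitelist; extend it to match the categories you actually store in S3.
ALLOWED_CATEGORIES = {"telemetry", "command", "lifecycle"}


@dataclass
class LogRequest:
    identifier: str
    categories: List[str]
    log_date: date  # interpreted as a UTC date
    env: Optional[str] = None  # hypothetical optional 4th token, e.g. "dev"


def parse_command_text(text: str) -> LogRequest:
    tokens = text.split()
    if len(tokens) not in (3, 4):
        raise ValueError("usage: /iot-log [Identifier] [Category] [Date(UTC)]")
    identifier, category_arg, date_arg = tokens[:3]
    categories = [c for c in category_arg.split(",") if c]
    unknown = [c for c in categories if c not in ALLOWED_CATEGORIES]
    if not categories or unknown:
        raise ValueError(f"unknown category: {', '.join(unknown) or category_arg}")
    # strptime raises ValueError for malformed dates such as 2023/13/01.
    log_date = datetime.strptime(date_arg, "%Y/%m/%d").date()
    env = tokens[3] if len(tokens) == 4 else None
    return LogRequest(identifier, categories, log_date, env)
```

Rejecting unknown categories in the Lambda function keeps invalid requests from ever reaching AWS Batch, and the error message can be returned to Slack within the 3-second window.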

Examples of Slash Commands

```
# To retrieve telemetry for THING00001 on 2023/10/01
/iot-log THING00001 telemetry 2023/10/01

# To retrieve telemetry and command logs for THING00001 on 2023/10/01
/iot-log THING00001 telemetry,command 2023/10/01

# To retrieve the connection/disconnection events of all clients (devices, apps, etc.) on AWS IoT Core on 2023/10/01
/iot-log client_id lifecycle 2023/10/01
```

Display Image on Slack

A presigned URL is issued for the zipped object on S3. Set an appropriate expiration time for it.
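A sketch of issuing that URL, assuming `s3_client` is a boto3 S3 client (for example `boto3.client("s3")`). `generate_presigned_url` and its `ExpiresIn` argument are part of boto3; the helper name `presign_download_url` and the default of 3600 seconds (matching the 60-minute validity in the example below) are assumptions. SigV4 presigned URLs can be valid for at most 7 days, and a URL signed with temporary credentials, such as those of a Batch job role, stops working when those credentials expire.

```python
# Upper limit for SigV4 presigned URLs: 7 days, in seconds.
MAX_EXPIRES_IN = 7 * 24 * 60 * 60


def presign_download_url(s3_client, bucket: str, key: str, expires_in: int = 3600) -> str:
    """Return a time-limited GET URL for the zipped log object.

    s3_client is expected to be a boto3 S3 client, e.g. boto3.client("s3").
    """
    if not 0 < expires_in <= MAX_EXPIRES_IN:
        raise ValueError(f"expires_in must be between 1 and {MAX_EXPIRES_IN} seconds")
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )
```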

The example below shows how the exchange appears in Slack when generating a zip file of the telemetry and command logs for ThingName THING00001 on 2023/10/01.

syuhei-honma 12:21 PM

/iot-log THING00001 telemetry,command 2023/10/01 dev

-----------------------------------------------

IoT Log Output App 12:21 PM

Target Identifier: THING00001

Log Category: telemetry,command

Specified Log Date: 2023/10/01

Generating log file...

-----------------------------------------------

IoT Log Output App 12:22 PM

Target Identifier: THING00001

Log Category: telemetry,command

Specified Log Date: 2023/10/01

Output Progress: xxx%

-----------------------------------------------

IoT Log Output App 12:22 PM

Output Progress: 100%

Target Identifier: THING00001

Log Category: telemetry,command

Specified Log Date: 2023/10/01

Log file generation complete!!

Please access the link below to download the log file.

The download link is valid for up to 60 minutes.

https://xxxxx.s3.ap-northeast-1.amazonaws.com/slack/iot-logs/YYYYMMDDhhmmss/20231001_THING00001.zip?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=xxxxx&X-Amz-Date=xxxxx&X-Amz-Expires=xxxx&X-Amz-SignedHeaders=host&X-Amz-Signature=xxxxxx

-----------------------------------------------

Specification for Log Output Generation Progress

Decide during implementation whether to express the progress status as a percentage or as a string.

Assumed Progress (Tentative):

| Status | As a percentage | As a string |
| --- | --- | --- |
| starting | 10% | is starting |
| progress | 10–99% | - |
| completed | 100% | is done |
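If percentages are chosen, one possible mapping is sketched below. It assumes the job counts finished work units (for example, log objects processed out of the total to download); `progress_percent` is a hypothetical helper. It reports 10% at the start, stays within 10 to 99% while work remains, and reports 100% only on completion.

```python
def progress_percent(done: int, total: int) -> int:
    """Map finished work units onto the tentative scale (10% start, 10-99%, 100% done)."""
    if total <= 0:
        raise ValueError("total must be positive")
    if done >= total:
        return 100  # completed
    # Spread the remaining work over 10..99 so 100% is only reported on completion.
    return min(99, 10 + (89 * done) // total)
```

For example, with 4 log objects, the job would report 10%, 32%, 54%, 76%, and finally 100%.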

Directory Structure of the zip file

The directory structure of the downloaded zip file after extraction is shown below.

It varies with the parameters of the slash command.

```
# This is just a reference example
.
├── telemetry_20231001.log
└── command_20231001.log
```
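One way to produce this layout inside the Batch job is sketched below. It assumes the raw log contents for each category have already been downloaded from S3 as bytes and that `log_date` is passed as YYYYMMDD; `build_log_zip` is a hypothetical name. The archive is built in memory, so for large log volumes, writing to a temporary file instead keeps memory use within the job's 2 GB allocation more reliably.

```python
import io
import zipfile
from typing import Dict, Iterable


def build_log_zip(log_date: str, logs_by_category: Dict[str, Iterable[bytes]]) -> bytes:
    """Merge each category's log chunks into <category>_<YYYYMMDD>.log inside one zip."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for category, chunks in logs_by_category.items():
            # Skip empty chunks and make sure each one ends with a newline,
            # so the last line of one file does not merge with the next file.
            merged = b"".join(
                chunk if chunk.endswith(b"\n") else chunk + b"\n"
                for chunk in chunks
                if chunk
            )
            archive.writestr(f"{category}_{log_date}.log", merged)
    return buffer.getvalue()
```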

Construction Flow

Creating Slack App:

Create a new app on the Your Apps page of the Slack API site.

Create a slash command and set its Request URL. This URL will later be the endpoint of your AWS Lambda function.

Creating AWS Lambda Function:

Create a Lambda function to receive requests from Slack.

Within this function, write the code to initiate AWS Batch jobs.

Building & Implementing AWS Batch:

Construct AWS Batch job definitions and job queues.

Within the job's Docker container, download the log files from S3, merge them into one log file per category, compress them into a single zip file, and upload it to S3.

Notify Slack of the progress status at each stage of processing.

Generate signed URLs for the Zip files uploaded to S3.
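The progress and completion notifications can be sketched with the standard library. The sketch assumes the Lambda function forwards Slack's `response_url` to the job, for example as an additional job parameter (which the job definition below does not yet declare). `format_status` and `post_to_response_url` are hypothetical helpers that mirror the message layout in the Slack example above. Slack's documentation allows a `response_url` to be used up to five times within 30 minutes. That window is shorter than the 1-hour job timeout, so keep progress updates sparse, or post with `chat.postMessage` and a bot token instead.

```python
import json
import urllib.request
from typing import Optional


def format_status(identifier: str, categories: str, log_date: str,
                  progress: Optional[int] = None, download_url: Optional[str] = None) -> str:
    """Build the message text: request summary, optional progress, optional link."""
    lines = [
        f"Target Identifier: {identifier}",
        f"Log Category: {categories}",
        f"Specified Log Date: {log_date}",
    ]
    if progress is not None:
        lines.append(f"Output Progress: {progress}%")
    if download_url:
        lines += [
            "Log file generation complete!",
            "Please access the link below to download the log file.",
            download_url,
        ]
    return "\n".join(lines)


def post_to_response_url(response_url: str, text: str, timeout: float = 10.0) -> int:
    """POST a message to the slash command's response_url and return the HTTP status."""
    payload = json.dumps({"response_type": "in_channel", "text": text}).encode("utf-8")
    request = urllib.request.Request(
        response_url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```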

Launching Batch Job from Lambda:

- Within the Lambda function, call the API to initiate the Batch job.

Notification of Log File to Slack:

- Once the Batch job is completed, notify Slack of the signed URL of the log file in S3.

Security:

Verify Slack's signature to ensure that the request is genuinely from Slack.

Configure S3 bucket policies and IAM roles with least privilege to prevent unauthorized access.
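Slack signs each request with your app's signing secret. It computes an HMAC-SHA256 over `v0:<timestamp>:<raw request body>` and sends the result as `v0=<hex digest>` in the `X-Slack-Signature` header, with the timestamp in `X-Slack-Request-Timestamp`. A minimal verification sketch follows. The function name and the 5-minute replay window are assumptions, although Slack recommends rejecting stale timestamps. In a function URL event, header names are typically lowercase, and the body must be the raw string (base64-decoded if necessary), not a re-encoded form.

```python
import hashlib
import hmac
import time
from typing import Optional


def verify_slack_signature(signing_secret: str, timestamp: str, body: str,
                           signature: str, now: Optional[float] = None,
                           max_age: int = 60 * 5) -> bool:
    """Check X-Slack-Signature against HMAC-SHA256 of "v0:<timestamp>:<raw body>"."""
    current = time.time() if now is None else now
    try:
        if abs(current - int(timestamp)) > max_age:
            return False  # stale request: possible replay
    except ValueError:
        return False  # timestamp header is not an integer
    base = f"v0:{timestamp}:{body}".encode("utf-8")
    expected = "v0=" + hmac.new(signing_secret.encode("utf-8"), base, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking how many characters matched via timing.
    return hmac.compare_digest(expected.encode("utf-8"), signature.encode("utf-8"))
```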

Testing:

Run the slash command in Slack and check that the AWS Batch job starts, a zip file is generated, and Slack is notified.

Access the signed URL to ensure that the Zip file can be downloaded.

AWS S3 Specifications

The bucket settings below are examples; adapt them to your own requirements.

Logs of the messages exchanged between devices and AWS IoT are stored in the dev-iot-logs bucket.

The generated zip files are also written to the dev-iot-logs bucket.

| Bucket Name | Input Directory Name | MQTT Topic | Remarks |
| --- | --- | --- | --- |
| dev-iot-logs | lifecycle | $aws/events/presence/connected/+, $aws/events/presence/disconnected/+ | Connection/disconnection events in AWS IoT Core |
| dev-iot-logs | telemetry | iot/telemetry/# | Telemetry topics from devices |
| dev-iot-logs | commands | iot/commands/# | Command topics to devices |

```
# Reference: Input Directory Structure
dev-iot-logs
├── lifecycle
├── telemetry
└── commands
```

```
# Reference: Output Directory Structure
dev-iot-logs
└── slack
    └── iot-logs
        └── Request Time (e.g., YYYYMMDDhhmmss)
            └── 20231001_THING00001.zip
```
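A helper for the output key might look like the sketch below. It assumes the request-time folder is formatted as YYYYMMDDhhmmss in UTC. The `INPUT_PREFIXES` mapping is also an assumption: it bridges the `command` category used in the slash command and the `commands` input directory in the table above. The deeper path below each input directory depends on how your logs are delivered, so it is not shown here.

```python
from datetime import date, datetime, timezone

# Assumed mapping from slash-command category to input directory in dev-iot-logs.
INPUT_PREFIXES = {"lifecycle": "lifecycle/", "telemetry": "telemetry/", "command": "commands/"}


def output_key(request_time: datetime, log_date: date, identifier: str) -> str:
    """Build slack/iot-logs/<request time YYYYMMDDhhmmss>/<YYYYMMDD>_<identifier>.zip."""
    # Normalize the (timezone-aware) request time to UTC so folder names are consistent.
    utc = request_time.astimezone(timezone.utc)
    return f"slack/iot-logs/{utc:%Y%m%d%H%M%S}/{log_date:%Y%m%d}_{identifier}.zip"
```

Because the key starts with `slack/`, the zip files fall under the lifecycle rule below and are cleaned up automatically.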

S3 Lifecycle Rule Setting

The lifecycle rule settings are as follows.

They are examples; adapt them to your own requirements.

| Key | Value |
| --- | --- |
| Lifecycle Rule Name | Slack2IoTLogs |
| Rule Scope Selection | Restrict the scope of this rule using one or more filters |
| Filter Type: Prefix | slack |
| Lifecycle Rule Actions | Expire the current version of objects, Permanently delete the non-current versions of objects |
| Current Version Expiration: Days from object creation | 1 day |
| Non-current Version Permanent Deletion: Days since object became non-current version | 1 day |
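The same rule can be expressed as a boto3 lifecycle configuration. This is a sketch assuming `s3_client` is `boto3.client("s3")`, and the helper names are hypothetical. Note that `put_bucket_lifecycle_configuration` replaces the bucket's entire existing lifecycle configuration, so merge in any other rules first. A prefix of `slack/` rather than `slack` would also avoid matching unrelated keys such as a hypothetical `slack-archive/`.

```python
def slack_lifecycle_config(prefix: str = "slack", days: int = 1) -> dict:
    """LifecycleConfiguration equivalent to the Slack2IoTLogs rule."""
    return {
        "Rules": [
            {
                "ID": "Slack2IoTLogs",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                # Expire the current version `days` after object creation.
                "Expiration": {"Days": days},
                # Permanently delete non-current versions `days` after they become non-current.
                "NoncurrentVersionExpiration": {"NoncurrentDays": days},
            }
        ]
    }


def apply_lifecycle(s3_client, bucket: str = "dev-iot-logs") -> None:
    """Overwrite the bucket's lifecycle configuration with the Slack2IoTLogs rule."""
    s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=slack_lifecycle_config()
    )
```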

AWS Lambda Specifications

Only one AWS Lambda function is prepared, and it is triggered by the Slack slash command.

To keep the implementation minimal, use a Lambda function URL (a built-in HTTPS endpoint) instead of API Gateway + Lambda.

Use infrastructure-as-code tools such as AWS CDK or AWS SAM to build the infrastructure.

Implement in Python.

When a single Lambda function submits jobs to AWS Batch in multiple environments (for example, separate AWS accounts), allow its IAM role to AssumeRole into a role in each target environment.
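A sketch of the AssumeRole pattern follows. It assumes a hypothetical `ENV_ROLE_ARNS` table that maps an environment name (such as the `dev` token in the Slack example) to a role in the account that owns that environment's Batch queue; the role name `SubmitIotLogJobRole` is also hypothetical. The Lambda role needs `sts:AssumeRole` on those ARNs, and each target role's trust policy must allow the Lambda role. Pass `boto3.client("sts")` and `boto3.client` as the two client arguments.

```python
from typing import Callable, Dict

# Hypothetical mapping from environment name to a role that may submit Batch jobs there.
ENV_ROLE_ARNS: Dict[str, str] = {
    "dev": "arn:aws:iam::<dev_account_id>:role/SubmitIotLogJobRole",
}


def batch_client_for_env(sts_client, client_factory: Callable, env: str):
    """Assume the environment's role and return a Batch client using its temporary credentials.

    sts_client: e.g. boto3.client("sts"); client_factory: e.g. boto3.client.
    """
    response = sts_client.assume_role(
        RoleArn=ENV_ROLE_ARNS[env], RoleSessionName=f"iot-log-{env}"
    )
    creds = response["Credentials"]
    return client_factory(
        "batch",
        aws_access_key_id=creds["AccessKeyId"],
        aws_secret_access_key=creds["SecretAccessKey"],
        aws_session_token=creds["SessionToken"],
    )
```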

Example Source Code

To trigger AWS Batch jobs from AWS Lambda, you can follow the steps below using the AWS SDK for Python (boto3):

Setting up IAM Role:

- It's crucial to assign an appropriate IAM role to the AWS Lambda function to allow communication with AWS Batch. This role should include the `batch:SubmitJob` permission along with any other necessary permissions.

Creating AWS Lambda Function:

- Create a new Lambda function using either the Lambda console or AWS CLI.

Installing Dependencies:

- Install the dependencies, including the `boto3` library, as needed. (The Lambda Python runtime already includes boto3, but you can bundle a specific version with your function.)

Implementing the Code:

- The Python code snippet below illustrates an example of an AWS Lambda function that triggers an AWS Batch job:

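```python
import boto3

# Create the AWS Batch client once so warm invocations can reuse it
batch_client = boto3.client('batch')


def lambda_handler(event, context):
    # Specify job queue and job definition
    job_queue = 'your-job-queue-name'
    job_definition = 'your-job-definition-name'

    # Specify job name
    job_name = 'example-job-name'

    # Submit the job. Each entry in `parameters` replaces the matching
    # Ref::<name> placeholder in the job definition's command.
    # Example values; in practice, take them from the slash command text.
    response = batch_client.submit_job(
        jobName=job_name,
        jobQueue=job_queue,
        jobDefinition=job_definition,
        parameters={
            'Identifier': 'THING00001',
            'Category': 'telemetry,command',
            'Date': '2023/10/01',
        },
    )

    # Log the Job ID
    print(f'Job ID: {response["jobId"]}')

    return {
        'statusCode': 200,
        'body': f'Job {response["jobId"]} submitted successfully.'
    }
```

In this code snippet, boto3 creates an AWS Batch client, and the submit_job method submits a new job. The job name, job queue, and job definition are required, and the parameters map supplies the values for the Ref::Identifier, Ref::Category, and Ref::Date placeholders in the job definition's command. Note that submit_job has no priority argument: a scheduling priority can only be overridden with schedulingPriorityOverride (0 to 9999), and it takes effect only for job queues that use a fair-share scheduling policy, which the job queue in this design does not specify.

When used as the handler of an AWS Lambda function, this code submits a new AWS Batch job each time the function is triggered, logs the job ID, and returns it in the response.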

AWS Batch Specifications

The settings below are examples; adapt them to your own requirements.

The architecture is serverless, with compute provided by AWS Fargate.

ECR

Create a private repository.

ECR repository name: slack2log (example)

Image scan frequency: scan on push

Job Queue

| Key | Value |
| --- | --- |
| Job Queue Name | slack |
| Priority | 1 |
| Scheduling Policy ARN (optional) | Not specified |
| Orchestration Type | Fargate |
| Job Queue State | Enabled |
| Compute Environment | default (FARGATE_SPOT) |

Job Definition

| Key | Value |
| --- | --- |
| Job Type | Single-node |
| Name | example-job-name |
| Execution Timeout | 3600 seconds (1 hour) |
| Scheduling Priority | Disabled |
| Platform Type | Fargate |
| Fargate Platform Version | Default value |
| Assign Public IP | Enabled |
| Image | `<your_account_id>.dkr.ecr.ap-northeast-1.amazonaws.com/slack:latest` |
| Command (JSON) | `["python3","main.py","generate-ziplog","--identifier","Ref::Identifier","--category","Ref::Category","--date","Ref::Date"]` |
| vCPU | 1.0 |
| Memory | 2 GB (2048 MB) |
| Job Role | `arn:aws:iam::<your_account_id>:role/JobRole` |
| Execution Role | `arn:aws:iam::<your_account_id>:role/TaskExecutionRole` |
| Log Configuration | awslogs: /batch/slack |
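The job definition's command passes `Ref::Identifier`, `Ref::Category`, and `Ref::Date`; AWS Batch replaces these placeholders with the values in the `parameters` map given to SubmitJob. The entry point in `main.py` could therefore parse them with argparse along the lines below. Only the subcommand and option names come from the job definition; `build_parser`, `main`, and the rest of the structure are assumptions.

```python
import argparse
from typing import List, Optional


def build_parser() -> argparse.ArgumentParser:
    # Mirrors the job definition command:
    # python3 main.py generate-ziplog --identifier ... --category ... --date ...
    parser = argparse.ArgumentParser(prog="main.py")
    subcommands = parser.add_subparsers(dest="command", required=True)
    gen = subcommands.add_parser("generate-ziplog", help="build the zipped log file for one request")
    gen.add_argument("--identifier", required=True, help="ThingName, certificate ID, etc.")
    gen.add_argument("--category", required=True, help="comma-separated, e.g. telemetry,command")
    gen.add_argument("--date", required=True, help="UTC date as YYYY/MM/DD")
    return parser


def main(argv: Optional[List[str]] = None) -> argparse.Namespace:
    args = build_parser().parse_args(argv)
    args.categories = args.category.split(",")
    # A real job would download, merge, zip, upload, presign, and notify Slack here.
    return args
```

In `main.py`, call `main()` from an `if __name__ == "__main__":` guard so the container command runs it.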

Add any other settings as needed.

Creating the CloudWatch Log Group

| Key | Value |
| --- | --- |
| Log Group Name | /batch/slack |
| Retention Period Setting | 30 days |

During Operation (Prepare during implementation)

Document usage in the Slack channel's Canvas.

List frequently used slash commands there (such as those for telemetry and command logs).

Conclusion

Troubleshooting in IoT solutions requires real-time investigation and root cause analysis.

Let's put this proposal to use and achieve faster DevOps.
