Cost Function in Linear Regression

SUBITSHA M

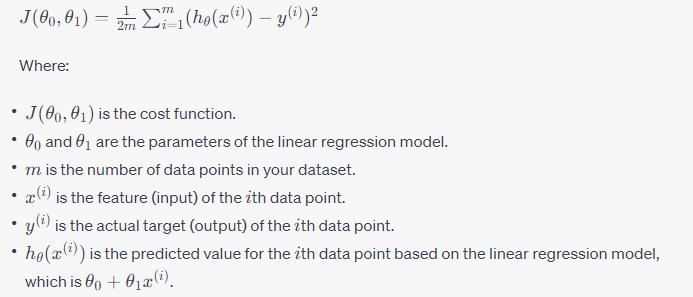

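In linear regression, the cost function, also called the loss function, measures the error between the predicted values and the actual target values. The most common choice is the mean squared error (MSE). The goal of linear regression is to find the line (or hyperplane, when there are multiple features) that minimizes this cost function. The formula for the mean squared error cost function in simple linear regression (with a single feature) is:

$$
J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2
$$

where $m$ is the number of training examples, $h_\theta(x^{(i)}) = \theta_0 + \theta_1 x^{(i)}$ is the predicted value for the $i$-th example, and $y^{(i)}$ is its actual target value.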
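As a rough illustration, the mean squared error cost with the 1/(2m) convention can be computed in a few lines of plain Python. This is only a sketch: the function name `compute_cost` and the tiny data set are made up for this example, and the model is assumed to be the single-feature line θ0 + θ1·x.

```python
def compute_cost(xs, ys, theta0, theta1):
    """Return the MSE cost: 1/(2m) * sum((theta0 + theta1*x - y)^2)."""
    m = len(xs)
    if m == 0 or m != len(ys):
        raise ValueError("xs and ys must be non-empty and the same length")

    total = 0.0
    for x, y in zip(xs, ys):
        prediction = theta0 + theta1 * x   # model output for this example
        total += (prediction - y) ** 2     # squared error for this example
    return total / (2 * m)


# Hypothetical data that lies exactly on the line y = 2x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

perfect_fit_cost = compute_cost(xs, ys, 0.0, 2.0)  # parameters match the data
worse_fit_cost = compute_cost(xs, ys, 0.0, 1.0)    # slope is too small
print(perfect_fit_cost, worse_fit_cost)
```

The cost is zero when the line passes through every point and grows as the parameters move away from that fit.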

The cost function sums the squared differences between the predicted values and the actual target values over all m training examples. Dividing by m averages the error, and the extra factor of 1/2 is included for mathematical convenience: it cancels the 2 produced by differentiating the squared term, which simplifies the derivative used in gradient descent, a common optimization algorithm for finding the best values of θ0 and θ1.
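To see why the factor of 1/2 helps, here is a short worked derivation. It assumes the single-feature model $h_\theta(x) = \theta_0 + \theta_1 x$ and the cost $J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$, where $m$ is the number of training examples. Applying the chain rule, the 2 from the squared term cancels the 1/2:

$$
\frac{\partial J}{\partial \theta_0}
= \frac{1}{2m} \sum_{i=1}^{m} 2 \left( h_\theta(x^{(i)}) - y^{(i)} \right) \cdot 1
= \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)
$$

$$
\frac{\partial J}{\partial \theta_1}
= \frac{1}{2m} \sum_{i=1}^{m} 2 \left( h_\theta(x^{(i)}) - y^{(i)} \right) \cdot x^{(i)}
= \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x^{(i)}
$$

Without the 1/2, each gradient would carry an extra factor of 2. That would not change where the minimum is, only the scale of the gradient.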

The values of θ0 and θ1 that minimize this cost function give the best-fitting linear model for the given data. They are typically found with an optimization technique such as gradient descent.
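A minimal sketch of batch gradient descent for this problem might look like the following. The function name `gradient_descent`, the learning rate, the iteration count, and the sample data are all assumptions chosen for illustration, not tuned or recommended values. Each step moves θ0 and θ1 against the gradient of the 1/(2m) mean squared error cost.

```python
def gradient_descent(xs, ys, learning_rate=0.05, iterations=5000):
    """Fit y ~ theta0 + theta1 * x by batch gradient descent on the MSE cost."""
    m = len(xs)
    theta0, theta1 = 0.0, 0.0  # start from an arbitrary initial guess

    for _ in range(iterations):
        # Prediction error for every training example at the current parameters.
        errors = [theta0 + theta1 * x - y for x, y in zip(xs, ys)]

        # Partial derivatives of the 1/(2m) cost with respect to each parameter.
        grad0 = sum(errors) / m
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m

        # Update both parameters together, stepping against the gradient.
        theta0 -= learning_rate * grad0
        theta1 -= learning_rate * grad1

    return theta0, theta1


# Hypothetical data generated from the line y = 1 + 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

theta0, theta1 = gradient_descent(xs, ys)
print(theta0, theta1)  # should approach 1 and 2 for this data
```

If the learning rate is too large the updates can diverge, and if it is too small convergence is slow, so in practice this value usually needs some experimentation.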

