Using HashiCorp Vault to Automate Certificate Lifecycle Management for F5 BIG-IP Next Central Manager

Sebastian Maniak

One challenge enterprises face today is managing a large number of certificates and keeping them valid for critical applications deployed across multi-cloud environments. Integrating HashiCorp Vault with the BIG-IP Next platform improves security by replacing long-lived certificates with short-lived, dynamically issued SSL certificates.

What is BIG-IP Next

BIG-IP Next, comprising two essential components—BIG-IP Next instances and the BIG-IP Next Central Manager—delivers an all-encompassing application security and traffic management solution for applications across on-premises and multi-cloud architectures. Deployed near your apps, BIG-IP Next instances process and secure user traffic, while the BIG-IP Next Central Manager offers a unified point of control.

BIG-IP Next Instance

The BIG-IP Next Instance is a high-performance, scalable data plane designed to deliver and secure application traffic in various environments, including on-premises, colocation facilities, cloud, and at the edge. This is achieved through seamless integration with the BIG-IP Next Central Manager, which provides a unified point of control for comprehensive application security and traffic management across multi-cloud architectures.

BIG-IP Next Central Manager

The BIG-IP Next Central Manager serves as a centralized console that efficiently manages, automates, and monitors multiple BIG-IP Next instances, regardless of whether your applications are deployed on-premises, in colocation facilities, on the cloud, or at the edge. This seamless integration ensures comprehensive application security and traffic management across multi-cloud architectures through a unified point of control.

What is HashiCorp Vault

HashiCorp Vault is a popular open-source tool for managing secrets and protecting sensitive data in modern infrastructure and applications. It provides a secure and centralized way to manage, store, and control access to various types of secrets, such as API keys, passwords, certificates, and more. Vault is designed to address the challenges of secret management, data protection, and access control in cloud-native and dynamic environments.

Vault PKI Secrets Engine

“The PKI secrets engine generates dynamic X.509 certificates. With this secrets engine, services can get certificates without going through the usual manual process of generating a private key and CSR, submitting to a CA, and waiting for a verification and signing process to complete. Vault’s built-in authentication and authorization mechanisms provide the verification functionality.” (from the Vault PKI secrets engine documentation)

In summary, HashiCorp Vault is a powerful tool for secret management and data protection, and its PKI engine is just one of the many capabilities it offers to help organizations enhance security and manage cryptographic assets like certificates.

Vault Agent

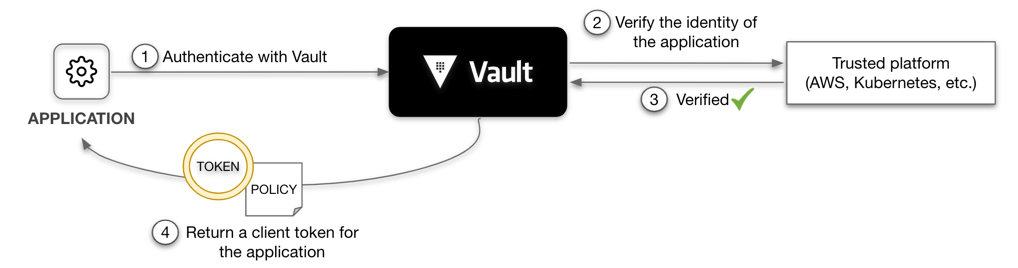

Vault Agent is a client daemon that helps manage the certificate life cycle. Its Auto-Auth feature automatically authenticates to Vault and manages the token renewal process for locally retrieved dynamic secrets.

Benefits of using Vault automation for BIG-IP

No application downtime: configuration is updated dynamically without affecting traffic

A multi-cloud and on-prem independent solution for your applications, wherever they run

Improved security posture with short-lived dynamic certificates

Increased collaboration by breaking down silos

Configuration

You can create instances in the cloud for Vault and BIG-IP using Terraform.

Set up Vault in your lab.

1. Start Vault in a new terminal.

vault server -dev -dev-root-token-id root

2. Export an environment variable for the vault CLI to address the Vault server.

export VAULT_ADDR=http://127.0.0.1:8200

3. Export an environment variable for the vault CLI to authenticate with the Vault server.

export VAULT_TOKEN=root
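Before moving on, it can help to confirm that the dev server is actually answering. The following is a minimal sketch, assuming the VAULT_ADDR exported above; the helper name wait_for_vault, the retry count, and the delay are my own illustrative choices and not part of Vault.

```shell
# Sketch: poll Vault's health endpoint until the server responds.
# wait_for_vault, the attempt count, and the delay are illustrative assumptions.
wait_for_vault() {
  local addr="${VAULT_ADDR:-http://127.0.0.1:8200}"
  local attempts="${1:-10}"
  local delay="${2:-1}"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    # /v1/sys/health answers HTTP 200 on an initialized, unsealed, active node;
    # --fail makes curl exit non-zero on any HTTP error status.
    if curl --silent --fail --output /dev/null "$addr/v1/sys/health"; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Usage: wait_for_vault 10 1 && echo "Vault is ready"
```

A dev server is initialized and unsealed as soon as it starts, so this should succeed on the first or second attempt.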

Configure Vault

# Configure PKI Engine
vault secrets enable pki
vault secrets tune -max-lease-ttl=87600h pki

# Generate root CA
vault write -field=certificate pki/root/generate/internal \
    common_name="example.com" \
    ttl=87600h > CA_cert.crt

# Configure the CA and CRL URL
vault write pki/config/urls \
    issuing_certificates="$VAULT_ADDR/v1/pki/ca" \
    crl_distribution_points="$VAULT_ADDR/v1/pki/crl"

# Generate intermediate CA
vault secrets enable -path=pki_int pki
vault secrets tune -max-lease-ttl=43800h pki_int

# Execute the following command to generate an intermediate and save the CSR as pki_intermediate.csr
vault write -format=json pki_int/intermediate/generate/internal \
    common_name="example.com Intermediate Authority" \
    | jq -r '.data.csr' > pki_intermediate.csr

# Sign the intermediate certificate with the root CA private key, and save the generated certificate as intermediate.cert.pem.
vault write -format=json pki/root/sign-intermediate csr=@pki_intermediate.csr \
    format=pem_bundle ttl="43800h" \
    | jq -r '.data.certificate' > intermediate.cert.pem

# Once the CSR is signed and the root CA returns a certificate, it can be imported back into Vault.
vault write pki_int/intermediate/set-signed certificate=@intermediate.cert.pem
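Because each of these commands redirects output into a file, a failed step can silently leave an empty or malformed file behind. Here is a small sanity check, written as a sketch: the file names come from the commands above, while the helper names is_pem and check_pki_outputs are hypothetical. It only looks at the first line of each file and does not validate the certificates cryptographically.

```shell
# Sketch: confirm the PKI setup produced non-empty PEM files.
# is_pem and check_pki_outputs are hypothetical helper names.

# Succeeds if the file is non-empty and starts with the expected PEM header.
is_pem() {
  local file="$1" label="$2" first_line=""
  [ -s "$file" ] || return 1
  IFS= read -r first_line < "$file"
  [ "$first_line" = "-----BEGIN $label-----" ]
}

# Checks the three files written by the commands above (in the current directory).
check_pki_outputs() {
  local status=0
  is_pem CA_cert.crt "CERTIFICATE" || { echo "CA_cert.crt looks wrong" >&2; status=1; }
  is_pem pki_intermediate.csr "CERTIFICATE REQUEST" || { echo "pki_intermediate.csr looks wrong" >&2; status=1; }
  is_pem intermediate.cert.pem "CERTIFICATE" || { echo "intermediate.cert.pem looks wrong" >&2; status=1; }
  return "$status"
}

# Usage: check_pki_outputs && echo "PKI files look fine"
```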

Create a role

Create a role named example-dot-com which allows subdomains.

vault write pki_int/roles/example-dot-com \
    allowed_domains="example.com" \
    allow_subdomains=true \
    ttl="10m" \
    max_ttl="30m"
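To check that the role behaves as intended, you can request a short-lived certificate from it by hand. This is a sketch under a few assumptions: the hostname test.example.com and the helper name issue_test_cert are illustrative, and the requested TTL has to stay within the role's max_ttl.

```shell
# Sketch: issue a test certificate from the example-dot-com role.
# issue_test_cert and the default hostname are illustrative assumptions.
issue_test_cert() {
  local common_name="${1:-test.example.com}"
  local ttl="${2:-10m}"
  # -field=certificate prints only the PEM certificate from the response.
  vault write -field=certificate pki_int/issue/example-dot-com \
    common_name="$common_name" ttl="$ttl"
}

# Usage (needs VAULT_ADDR and VAULT_TOKEN from the setup steps):
#   issue_test_cert app.example.com 10m | openssl x509 -noout -subject -enddate
```

A request for a name outside example.com, or for a TTL longer than max_ttl, is a quick way to see the role's limits in action.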

Build a Policy and Configure Auth method

Next, we are going to build a policy and an auth method (AppRole) for our Vault Agent.

Here is the policy I used, saved as cert-policy.hcl:

# Permits token creation
path "auth/token/create" {
  capabilities = ["update"]
}

# Permits token renew
path "auth/token/renew" {
  capabilities = ["update"]
}

# Read-only permission on secret/
path "secret/data/*" {
  capabilities = ["read"]
}

# Enable secrets engine
path "sys/mounts/*" {
  capabilities = [ "create", "read", "update", "delete", "list" ]
}

# List enabled secrets engine
path "sys/mounts" {
  capabilities = [ "read", "list" ]
}

# Work with pki secrets engine
path "pki*" {
  capabilities = [ "create", "read", "update", "delete", "list", "sudo" ]
}

Let's enable AppRole and deploy the policy:

vault auth enable approle

vault policy write cert-policy cert-policy.hcl

vault write auth/approle/role/web-certs policies="cert-policy"

Build Vault Agent

Next, let’s set up Vault Agent on your machine. I built mine on a shared Ubuntu server. Vault Agent ships as part of the vault binary, so installing Vault is all that is needed.

Now that you have Vault installed on your machine, connect to Vault and grab the role ID and secret ID by executing the following commands:

vault read -format=json auth/approle/role/web-certs/role-id | jq -r '.data.role_id' > roleID

vault write -f -format=json auth/approle/role/web-certs/secret-id | jq -r '.data.secret_id' >
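Before wiring these credentials into Vault Agent, it can be worth confirming that they actually log in. The sketch below assumes the secret ID was saved to a file named secretID, which is the name the agent config in the next section reads; approle_login_check is a hypothetical helper name. Note that a successful run creates a real token in Vault.

```shell
# Sketch: verify the AppRole credentials on disk can authenticate.
# Assumes files roleID and secretID in the current directory;
# approle_login_check is a hypothetical helper name.
approle_login_check() {
  if [ ! -s roleID ] || [ ! -s secretID ]; then
    echo "roleID or secretID is missing or empty" >&2
    return 1
  fi
  # auth/approle/login is the login endpoint of the approle mount enabled earlier.
  vault write auth/approle/login \
    role_id="$(cat roleID)" \
    secret_id="$(cat secretID)" > /dev/null
}

# Usage: approle_login_check && echo "AppRole login works"
```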

Build Vault Agent Config File

The next step is to build your Vault Agent config, agent-config.hcl. Here is an example of mine.

You might need to change the Vault address from 192.168.86.69 to whatever yours is.

pid_file = "./pidfile"

vault {
  address = "http://192.168.86.69:8200"
}

auto_auth {
  method "approle" {
    mount_path = "auth/approle"
    config = {
      role_id_file_path = "roleID"
      secret_id_file_path = "secretID"
      remove_secret_id_file_after_reading = false
    }
  }

  sink "file" {
    config = {
      path = "approleToken"
    }
  }
}

template {
  source = "./certs.tmpl"
  destination = "./certs.json"
  command = "bash f5-magic.sh"
}
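Every path in this config is relative, so the agent has to be started from the directory that holds all of these files. A quick preflight check can catch a missing file before the agent does. This is only a sketch: the file names are taken from the config above, and agent_preflight is a hypothetical helper name.

```shell
# Sketch: check that the files referenced by agent-config.hcl exist.
# agent_preflight is a hypothetical helper; pass the directory you will
# start the agent from (defaults to the current directory).
agent_preflight() {
  local dir="${1:-.}"
  local missing=0
  local f
  for f in agent-config.hcl roleID secretID certs.tmpl f5-magic.sh; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: agent_preflight . && echo "ready to start Vault Agent"
```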

Vault Agent Command Script

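To make the appropriate changes in the F5 certificate manager, you need to execute a set of API calls against F5 BIG-IP Next Central Manager. Save the script below as f5-magic.sh, the file that the template stanza's command runs.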

This Bash script is designed for managing TLS certificates through a RESTful API. It begins by authenticating with the API using a POST request that includes a username and password, storing the obtained access token. It then retrieves information about existing certificates via a GET request and checks the certificate count. If there are no certificates, it creates a new one and stores its ID in a text file. If certificates already exist, it checks if a stored certificate ID matches any in the API's list. If there's a match, it updates a JSON file, replacing certain fields with the certificate ID, and sends an API request to update the certificate. Throughout the script, error handling is implemented, and the --insecure option is used with curl to allow connections to the API with untrusted SSL/TLS certificates. The access token is exported for further API requests. This script is useful for automating TLS certificate management tasks via the specified API.

#!/bin/bash

# Input JSON file
input_file="tlscertdeploy.json"

# Output JSON file
output_file="tlscertupdate.json"

# ID to add
id_value="your_id_here"

# Authenticate against Central Manager and keep the JSON response
response=$(curl -s -X POST -H "Content-Type: application/json" -d '{"username": "admin", "password": "W3lcome098!"}' --insecure https://172.16.10.67/api/login)

# Extract the token; "// empty" turns a missing or null token into an empty string
access_token=$(echo "$response" | jq -r '.access_token // empty')

if [ -n "$access_token" ]; then
    export ACCESS_TOKEN="$access_token"
    echo "Access Token: $ACCESS_TOKEN"

    # Perform a GET request to retrieve certificate information
    get_certificates_response=$(curl -s -X GET -H "Content-Type: application/json" -H "Authorization: Bearer $ACCESS_TOKEN" --insecure https://172.16.10.67/api/v1/spaces/default/certificates)

    # Extract the count value from the response using jq
    count=$(echo "$get_certificates_response" | jq -r '.count')
    echo "Certificate count: $count"

    if [ "$count" -eq 0 ]; then
        echo "No certificates found. Creating a new certificate..."

        # Create a new certificate
        create_cert_response=$(curl -s -X POST -H "Content-Type: application/json" -H "Authorization: Bearer $ACCESS_TOKEN" -d @tlscertdeploy.json --insecure https://172.16.10.67/api/v1/spaces/default/certificates/import)

        # Extract the certificate ID: the last segment of the 'path' field in the response
        cert_id=$(echo "$create_cert_response" | jq -r '.path | split("/") | last')
        echo "New Certificate ID: $cert_id"

        # Save the certificate ID to a text file without additional structure
        echo "$cert_id" > certificate_id.txt
        echo "Certificate ID saved to certificate_id.txt"
    else
        echo "Certificates already exist."

        # Export a list of all certificate IDs to a text file, one per line
        all_cert_ids=$(echo "$get_certificates_response" | jq -r '.["_embedded"]["certificates"][] | .id')
        echo "$all_cert_ids" > all_certificate_ids.txt
        echo "All Certificate IDs saved to all_certificate_ids.txt"

        # Read the stored ID and suppress errors if the file doesn't exist
        saved_cert_id=$(cat certificate_id.txt 2>/dev/null)

        # Require a non-empty ID and an exact whole-line (-x), fixed-string (-F) match;
        # a plain grep with an empty pattern would match every line
        if [ -n "$saved_cert_id" ] && grep -qxF -- "$saved_cert_id" all_certificate_ids.txt; then
            echo "Certificate ID in certificate_id.txt exists in all_certificate_ids.txt."
            echo "Matched Certificate ID: $saved_cert_id"

            # Check if the input file exists
            if [ -f "$input_file" ]; then
                # Use jq to build the update payload: drop name and common_name, add the ID
                jq --arg id "$saved_cert_id" 'del(.name, .common_name) + {id: $id}' "$input_file" > "$output_file"
                echo "Modified JSON written to $output_file"

                # Execute an API call to update the certificate using the matched certificate ID
                update_cert_response=$(curl -s -X POST -H "Content-Type: application/json" -H "Authorization: Bearer $ACCESS_TOKEN" -d @tlscertupdate.json --insecure "https://172.16.10.67/api/v1/spaces/default/certificates/import")
            else
                echo "Input JSON file not found: $input_file"
            fi
        else
            echo "Certificate ID in certificate_id.txt does not exist in all_certificate_ids.txt."
        fi
    fi
else
    echo "Failed to retrieve the access_token from the API response."
fi

The script does the following:

Shebang Line:

- #!/bin/bash indicates that the script should be interpreted using the Bash shell.

Input and Output File Definitions:

- input_file="tlscertdeploy.json" specifies the name of the input JSON file.
- output_file="tlscertupdate.json" specifies the name of the output JSON file.
- id_value="your_id_here" is a placeholder for a certificate ID; the rest of the script does not use it.

API Authentication:

- It uses the curl command to send a POST request to the API's /api/login endpoint to authenticate. It passes a JSON object with a username and password.
- The response from the API is stored in the response variable.
- The jq command is used to extract the access_token from the JSON response.

Checking Access Token:

- It checks if the access_token is non-empty. If it exists, it proceeds with API requests. If not, it indicates a failure to authenticate.

Retrieving Certificate Information:

- It sends a GET request to retrieve certificate information from the API's /api/v1/spaces/default/certificates endpoint.
- The response is stored in the get_certificates_response variable.
- The jq command is used to extract the count value from the JSON response, representing the number of certificates.

Certificate Handling:

- If count equals 0, no certificates exist, so the script creates a new one.
- It sends a POST request to the /api/v1/spaces/default/certificates/import endpoint, using the data from the tlscertdeploy.json file.
- The jq command extracts the ID of the newly created certificate from the last segment of the path field in the JSON response, and this ID is stored in the cert_id variable.
- The cert_id is saved to a text file called certificate_id.txt.

Certificate Updating:

- If certificates already exist (i.e., count is not 0), it checks whether the ID stored in certificate_id.txt appears in the list of certificate IDs obtained from the API.
- If there is a match, it uses jq to build the update payload from the file specified in input_file: it removes the name and common_name fields, adds the id from certificate_id.txt, and saves the modified JSON to output_file.
- It then sends a POST request to the same import endpoint to update the matched certificate, sending the data from the tlscertupdate.json file.
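The ID comparison is the step where a loose match can do the most damage, since a partial or empty ID could point the update at the wrong certificate. Here is a sketch of that comparison as a standalone function. The name cert_id_known is hypothetical; the file layout (one ID per line) follows all_certificate_ids.txt from the script.

```shell
# Sketch: exact comparison of a stored certificate ID against a list file.
# cert_id_known is a hypothetical helper name.
cert_id_known() {
  local saved_id="$1"
  local id_list_file="$2"
  # An empty pattern matches every line in grep, so reject it up front.
  [ -n "$saved_id" ] || return 1
  # -x: the whole line must match; -F: treat the ID as a fixed string, not a regex.
  grep -qxF -- "$saved_id" "$id_list_file"
}

# Usage:
#   if cert_id_known "$(cat certificate_id.txt 2>/dev/null)" all_certificate_ids.txt; then
#     echo "stored certificate still exists on Central Manager"
#   fi
```

Without -x, an ID that is merely a prefix of another ID would also count as a match.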

Error Handling:

- Throughout the script, it checks for the existence of files, whether the access token is valid, and whether the certificate ID in certificate_id.txt exists in the list of all certificate IDs.
- Appropriate error messages are displayed if conditions are not met.

Insecure Connections:

- The --insecure option is used with curl to allow connections to the API with self-signed or otherwise untrusted SSL/TLS certificates. This should be used with caution in a production environment.
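If you have the CA certificate that signed the Central Manager's own TLS certificate, curl can verify the connection instead of skipping verification. The wrapper below is a sketch under that assumption: CM_CA_CERT is a hypothetical environment variable holding the path to a PEM CA file, and cm_curl is a hypothetical helper name. It falls back to --insecure, matching the script's behavior, when no CA file is configured.

```shell
# Sketch: prefer certificate verification when a CA file is available.
# CM_CA_CERT and cm_curl are hypothetical names, not part of the F5 API or curl.
cm_curl() {
  if [ -n "${CM_CA_CERT:-}" ] && [ -r "$CM_CA_CERT" ]; then
    # --cacert tells curl which CA certificate to verify the server against.
    curl --cacert "$CM_CA_CERT" "$@"
  else
    # Lab fallback: same behavior as the original script.
    curl --insecure "$@"
  fi
}

# Usage:
#   export CM_CA_CERT=/path/to/central-manager-ca.pem
#   cm_curl -s -X GET -H "Authorization: Bearer $ACCESS_TOKEN" \
#     https://172.16.10.67/api/v1/spaces/default/certificates
```

Keep in mind that verification also checks the host name, so the Central Manager certificate would need to cover the address used in the URL.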

Exporting Access Token:

- It exports the ACCESS_TOKEN environment variable, making it available for subsequent curl requests.

This script is designed for managing TLS certificates through an API, including creating new certificates and updating existing ones based on a stored certificate ID.

Run Vault

The final step is to run Vault Agent:

vault agent -config=agent-config.hcl -log-level=debug

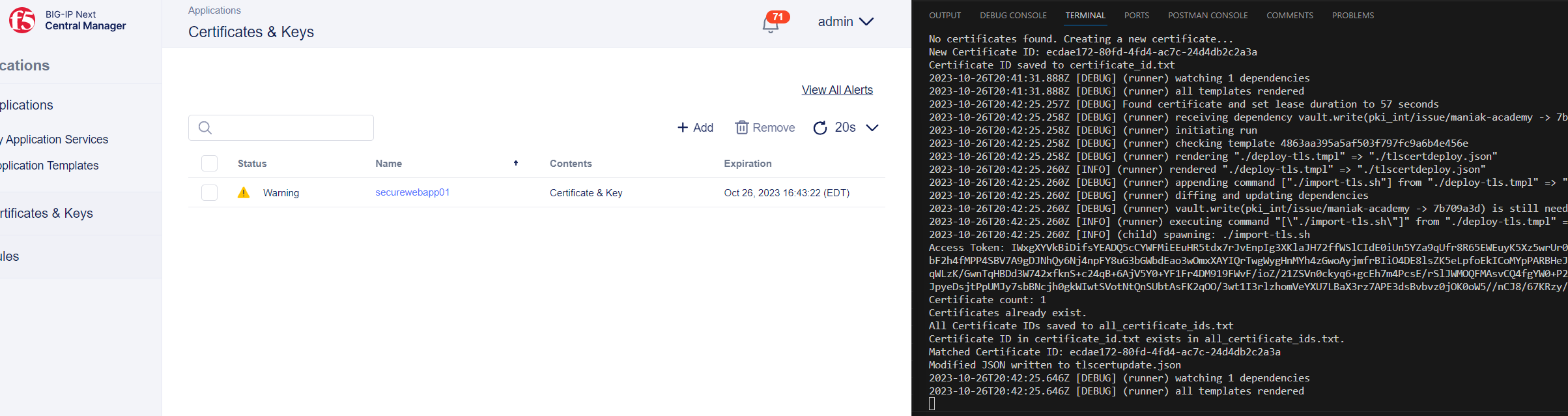

The screenshot above shows the final output of Vault Agent automating the certificate lifecycle.
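If you start the agent from a script rather than watching the debug log, you can wait for the files it writes instead. This is a minimal sketch: approleToken and certs.json are the sink and template destination from agent-config.hcl, while wait_for_file and the 30-second timeout are illustrative assumptions.

```shell
# Sketch: wait until a file exists and is non-empty, or give up after a timeout.
# wait_for_file and the default timeout are illustrative assumptions.
wait_for_file() {
  local path="$1"
  local timeout="${2:-30}"
  local waited=0
  while [ ! -s "$path" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}

# Usage:
#   vault agent -config=agent-config.hcl &
#   wait_for_file approleToken 30 && echo "auto-auth succeeded"
#   wait_for_file certs.json 30 && echo "template rendered"
```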
