Word embedding

pici

pici

Introduction

Word embeddings have become a foundational technology in Natural Language Processing (NLP), providing a way to represent words and documents in a numerical format. In this blog post, we'll explore the use of word embeddings in the context of the Cranfield dataset retrieval task. We'll employ various techniques and models to compare and contrast their performance.

Setting Up Dependencies

Before we dive into the exploration, we need to set up the necessary libraries and tools. We'll be using the ir_datasets library for managing datasets, nltk for text preprocessing, and gensim for training word embeddings. Additionally, we'll be measuring execution times using a custom decorator function.

!pip install ir_datasets --quiet

!pip install nltk --quiet

import ir_datasets

import nltk

nltk.download('stopwords')

nltk.download('punkt')

nltk.download('wordnet')

import gensim

from gensim.models import Word2Vec

from sklearn.metrics.pairwise import cosine_similarity

Data Preprocessing

The first step in our exploration is data preprocessing. We'll use NLTK's tools to tokenize text, remove stopwords, and lemmatize words. This step is crucial to ensure that we're working with clean and normalized text data.

from nltk.corpus import stopwords

from nltk.tokenize import word_tokenize

from nltk.stem import WordNetLemmatizer

import numpy as np

import math

from tqdm.notebook import tqdm

stop_words = set(stopwords.words('english'))

lemmatizer = WordNetLemmatizer()

def preprocess(doc):

tokens = word_tokenize(doc.lower())

filtered_tokens = [lemmatizer.lemmatize(token) for token in tokens if token not in stop_words]

return filtered_tokens

Vector Space Model (VSM) using TF-IDF

Our first model is the Vector Space Model (VSM) using TF-IDF. This model is a traditional approach in information retrieval and text classification. It calculates the TF-IDF scores for each term in the document and query, then measures the cosine similarity between them to retrieve relevant documents.

class VSM_tfidf:

def __init__(self):

self.name = "Vector space model using TF-IDF"

@measure_execution_time

def fit(self, docs_p):

# Implementation details...

return self

def search(self, query):

# Search for relevant documents...

return sims

Vector Space Model using Word2Vec

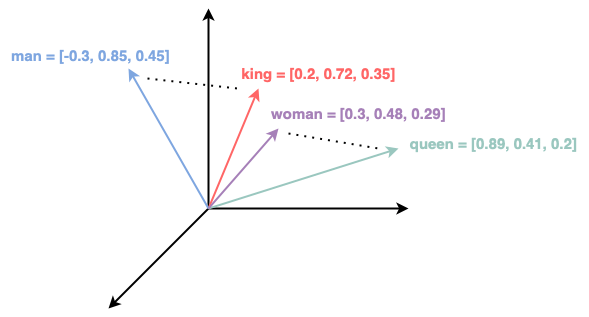

Next, we explore a Vector Space Model using Word2Vec. Word2Vec is a popular technique for learning word embeddings from text data. It represents words as dense vectors and allows us to calculate the cosine similarity between the query and documents.

class VSM_word2vec:

def __init__(self, num=100):

self.name = "Vector space model using Word2Vec"

self.w2v_model = None

self.num = num

# More methods...

Vector Space Model using FastText

Lastly, we employ a Vector Space Model using FastText, an extension of Word2Vec. FastText provides better representations for out-of-vocabulary terms by utilizing subword information. It allows us to calculate the cosine similarity between the query and documents similarly to the other models.

class VSM_FastText:

def __init__(self, num=100):

self.name = "Vector space model using FastText"

self.ft_model = None

self.num = num

# More methods...

Preparing the Data

Before we can start using these models, we need data. We'll be using the Cranfield dataset, a widely used dataset for information retrieval tasks. The data is preprocessed to create a collection of documents and queries for our models.

dataset = ir_datasets.load("cranfield")

corpus = [(doc.doc_id, preprocess(doc.text)) for doc in tqdm(dataset.docs_iter())]

queries = [preprocess(query.text) for query in dataset.queries_iter()]

queries = [(str(i + 1), x) for i, x in enumerate(queries)]

rels = {}

for qrel in dataset.qrels_iter():

rels[qrel.query_id] = []

for qrel in dataset.qrels_iter():

rels[qrel.query_id].append(qrel.doc_id)

Training the Models

With the data prepared, we can train our models using the respective vector space models and embeddings.

vsm_tfidf = VSM_tfidf()

vsm_tfidf.fit(corpus)

vsm_w2v = VSM_word2vec(num=200)

vsm_w2v.fit(corpus)

vsm_ft = VSM_FastText(num=200)

vsm_ft.fit(corpus)

Querying the Models

Now that our models are trained, it's time to query them using the provided queries. We calculate the cosine similarity between the queries and documents and retrieve relevant documents for each query.

res_vsm_tfidf = infer(vsm_tfidf)

res_vsm_w2v = infer(vsm_w2v)

res_vsm_ft = infer(vsm_ft)

Evaluation

Finally, we evaluate the performance of our models. We calculate the interpolated Mean Average Precision (MAP) for each model. This metric helps us assess the retrieval effectiveness of each model and compare their performance.

calculate_interpolated_map(res_vsm_tfidf, rels)

calculate_interpolated_map(res_vsm_w2v, rels)

calculate_interpolated_map(res_vsm_ft, rels)

| Model | vsm_tfidf | vsm_w2v | vsm_ft |

| IR result | 0.34 | 0.05 | 0.15 |

| Spam Detection result | 0.97 | 0.94 | 0.95 |

Results and Insights

In this study, we conducted a comparative analysis of vector embedding methods, including Word2Vec and FastText, and the traditional TF-IDF method on two significant tasks: Information Retrieval and Text Classification. Here are some key takeaways from this research:

TF-IDF remains a powerful method for Information Retrieval: In the IR task on the Cranfield dataset, the TF-IDF method demonstrated impressive performance with a higher Mean Average Precision score compared to the vector embedding methods. TF-IDF's stability and its ability to handle domain-specific texts allowed it to outperform embedding methods in this task.

The choice of method depends on the context: The decision to use either TF-IDF or vector embedding methods depends on the context and the task's objectives. TF-IDF continues to be a strong choice for IR and domain-specific text processing tasks, while vector embedding methods show potential in short-text document classification tasks or when distributed representations of individual words are needed.

Vector embedding methods, despite lower initial results, have the potential for improved performance when extended to larger datasets.

These insights highlight the importance of considering the specific requirements of a natural language processing task when choosing the most suitable method. While TF-IDF remains a robust and reliable choice, vector semantics and embeddings offer versatility and potential for improved performance, particularly in certain applications and with larger datasets. As the field of NLP continues to evolve, these methods will play a pivotal role in developing innovative language processing solutions.

Code: File notebook

Subscribe to my newsletter

Read articles from pici directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by