DevOps(Day-71): Let's prepare for some interview questions of Terraform 🔥

Biswaraj Sahoo

What is Terraform and how is it different from other IaC tools?

Terraform is an Infrastructure as Code (IaC) tool developed by HashiCorp. It allows users to define and provision infrastructure resources in a declarative manner. With Terraform, infrastructure is treated as code, enabling its creation, management, and versioning.

Here are a few ways in which Terraform differs from other IaC tools:

Terraform is cloud-agnostic and supports multiple cloud providers, including Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), and more.

Terraform uses a declarative approach, where you define the desired state of your infrastructure in configuration files. Terraform then determines the changes required to reach that desired state and applies them, ensuring that the actual infrastructure matches the defined configuration.

Terraform maintains a state file that keeps track of the resources provisioned by Terraform. This state file helps Terraform understand the current state of your infrastructure and allows it to plan and apply only the necessary changes. The state file can be stored remotely, allowing collaboration and shared state management.

How do you call a main.tf module?

In Terraform, a module is a directory of .tf files, and main.tf is conventionally its primary file. You call such a module by using the "module" block in another configuration file. To call the main.tf module, follow these steps:

Create a new Terraform configuration file, let's say "main.tf" or any other name of your choice.

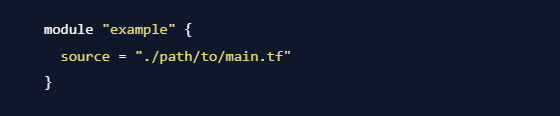

In the new configuration file, define a "module" block and specify a name for the module. For example:

Here, "example" is the name given to the module. The "source" parameter specifies the path to the directory containing the module's main.tf, not the path to the file itself. Adjust the value according to where your module directory actually lives.

Save the configuration file.

From the command line, navigate to the directory where the new configuration file is located.

Run the Terraform commands to initialize, plan, and apply the configuration: terraform init, terraform plan, and terraform apply.

By calling the main.tf module using the "module" block in another configuration file, Terraform will load and execute the main.tf module, incorporating its resources and configurations into the overall infrastructure provisioning process.
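The steps above can be sketched as follows. This is a minimal illustration, not the author's original snippet: the directory ./modules/example and the module name "example" are hypothetical placeholders.

```hcl
# Root configuration file (for instance main.tf in the working directory).
# The directory ./modules/example is a hypothetical path that is assumed
# to contain the module's own main.tf.
module "example" {
  # "source" points at the module directory, not at the main.tf file.
  source = "./modules/example"
}

# After saving, run these from the directory holding this file:
#   terraform init    (downloads providers and registers the module)
#   terraform plan    (previews the changes)
#   terraform apply   (creates the resources)
```

Because a local module is registered during initialization, terraform init needs to be run again whenever a module block is added or its source changes.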

What exactly is Sentinel? Can you provide a few examples of where Sentinel policies can be used?

Sentinel is a policy-as-code framework developed by HashiCorp. It is designed to enforce and automate governance and compliance policies in infrastructure provisioning workflows. Sentinel enables organizations to codify their policies and apply them across various stages of the infrastructure lifecycle.

Here are a few examples of how Sentinel can be used for policy enforcement:

Infrastructure Provisioning Policies: Sentinel can be used to define policies that enforce certain standards and best practices during infrastructure provisioning. For instance, you can define policies that restrict the use of specific instance types, enforce tagging conventions, or ensure compliance with security configurations.

Cost Optimization Policies: Sentinel can help you enforce policies to optimize infrastructure costs. For example, you can define policies that enforce the use of specific instance types or limit the creation of resources that exceed a certain cost threshold.

Resource Lifecycle Policies: Sentinel enables you to define policies that govern how resources enter the infrastructure. You can enforce policies that require an authorized user to override a failed check before a run is applied (soft-mandatory enforcement), or policies that reject resources missing required metadata such as an owner or expiry tag. Note that Sentinel only passes or fails a run; it does not itself terminate or archive existing resources.

Integration with Version Control Systems: Sentinel integrates with version control systems like Git, allowing policies to be versioned, reviewed, and managed alongside the infrastructure code. This ensures that policies are updated and applied consistently across different environments.

You have a Terraform configuration file that defines an infrastructure deployment. However, there are multiple instances of the same resource that need to be created. How would you modify the configuration file to achieve this?

To create multiple instances of the same resource in a Terraform configuration file, you can utilize the concept of resource "count" or resource "for_each" depending on your requirements.

Using "count": You can use the "count" meta-argument within a resource block to specify the number of instances to create.

Using "for_each": If you want more flexibility and control over the instances, you can use the "for_each" meta-argument. It allows you to create instances based on a map or set of strings.
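Both meta-arguments can be sketched as below. The variable ami_id, the resource names, and the instance type are assumptions chosen for illustration, and an AWS provider is assumed to be configured elsewhere.

```hcl
# Hypothetical input: the AMI to launch, supplied by the caller.
variable "ami_id" {
  type = string
}

# count: three near-identical instances, addressed by index
# (aws_instance.web[0], aws_instance.web[1], aws_instance.web[2]).
resource "aws_instance" "web" {
  count         = 3
  ami           = var.ami_id
  instance_type = "t3.micro"

  tags = {
    Name = "web-${count.index}"
  }
}

# for_each: one instance per key in the set, addressed by key
# (aws_instance.service["api"], aws_instance.service["worker"]).
resource "aws_instance" "service" {
  for_each      = toset(["api", "worker"])
  ami           = var.ami_id
  instance_type = "t3.micro"

  tags = {
    Name = each.key
  }
}
```

A practical difference: removing an element from the middle of a count-based list shifts the indexes of the instances after it, while for_each instances keep their keys, so only the removed key is destroyed.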

You want to know from which paths Terraform is loading providers referenced in your Terraform configuration (.tf files). You need to enable debug messages to find this out. Which of the following would achieve this?

A. Set the environment variable TF_LOG=TRACE

B. Set verbose logging for each provider in your Terraform configuration

C. Set the environment variable TF_VAR_log=TRACE

D. Set the environment variable TF_LOG_PATH

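A. Set the environment variable TF_LOG=TRACE is the answer. TF_LOG turns on Terraform's logging at the chosen level. TF_LOG_PATH only chooses the file the logs are written to and has no effect unless TF_LOG is also set, and TF_VAR_log would merely set an input variable named log.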

The command below destroys everything that has been created in the infrastructure. How would you preserve a particular resource while destroying the rest of the infrastructure?

terraform destroy

The terraform destroy -target command will specifically target and destroy the resource(s) mentioned, rather than saving or excluding them from destruction. The -target option allows you to focus on a specific resource or set of resources when running terraform destroy.

For example, if you execute the command terraform destroy -target=aws_instance.example, it will specifically destroy the AWS EC2 instance resource named example, along with any resources that depend on it.

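If you want to protect a particular resource, you can add a lifecycle block with prevent_destroy set to true. Be aware that this makes Terraform reject any plan that would destroy the resource, so a full terraform destroy fails with an error instead of skipping it. To keep a resource while destroying everything else, remove it from the state first with terraform state rm (the resource continues to exist but is no longer managed by Terraform) and then run terraform destroy, or destroy the other resources individually with -target.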
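A minimal sketch of a lifecycle block with prevent_destroy is shown here. The resource name "keep" and the bucket name are hypothetical, and an AWS provider is assumed to be configured.

```hcl
# Hypothetical bucket that must survive teardown of the rest of the stack.
resource "aws_s3_bucket" "keep" {
  bucket = "example-bucket-to-keep"

  lifecycle {
    # Any plan that would destroy this resource is rejected with an error,
    # including a plain "terraform destroy".
    prevent_destroy = true
  }
}

# To destroy everything else while leaving the bucket in place, one option is:
#   terraform state rm aws_s3_bucket.keep   (stop managing it, do not delete it)
#   terraform destroy                       (removes the remaining resources)
```

The guard only works while the block is present in the configuration; deleting the whole resource block removes the protection along with it.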

Which module is used to store .tfstate file in S3?

The .tfstate file is stored in Amazon S3 by the s3 backend. Strictly speaking, it is a backend rather than a module. To configure Terraform to store the state file in an S3 bucket, you need to add the backend configuration block inside the terraform block of your Terraform configuration (e.g., backend.tf).

By using the s3 backend, you can store the Terraform state file in an S3 bucket, providing versioning, security, and collaboration benefits for your infrastructure deployments.

How do you manage sensitive data in Terraform, such as API keys or passwords?

Managing sensitive data in Terraform, such as API keys or passwords, requires taking precautions to ensure their security and avoid exposing them in plaintext. Here are some recommended approaches:

Use Environment Variables: Store sensitive data as environment variables on the system running Terraform. An environment variable named TF_VAR_<name> populates the input variable <name>, which you then reference as var.<name> in your Terraform configuration files. This allows you to keep the sensitive information separate from the Terraform code and helps prevent accidental exposure.

Utilize Terraform Input Variables: Declare input variables in your Terraform configuration to accept sensitive data during runtime. These variables can be prompted for interactively or passed through command-line options. Ensure that you mark such input variables as sensitive so that their values are redacted in plan and apply output. Sensitive values are still written to the state file in plain text, so the state itself must be protected as well.

Implement Access Controls: Limit access to Terraform configurations and sensitive data to authorized users. Apply the principle of least privilege, granting only the necessary permissions required to execute Terraform commands and access sensitive resources.
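As a small sketch of the input-variable approach, the variable name db_password below is a hypothetical example. Its value could be supplied through an environment variable named TF_VAR_db_password rather than being written into any .tf file.

```hcl
# Hypothetical secret, supplied at runtime (for example via the
# TF_VAR_db_password environment variable) instead of being hard-coded.
variable "db_password" {
  description = "Password for the application database"
  type        = string
  sensitive   = true # value is redacted in plan and apply output
}

# An output derived from a sensitive value must itself be marked
# sensitive, otherwise Terraform reports an error.
output "db_password" {
  value     = var.db_password
  sensitive = true
}
```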

You are working on a Terraform project that needs to provision an S3 bucket, and a user with read and write access to the bucket. What resources would you use to accomplish this, and how would you configure them?

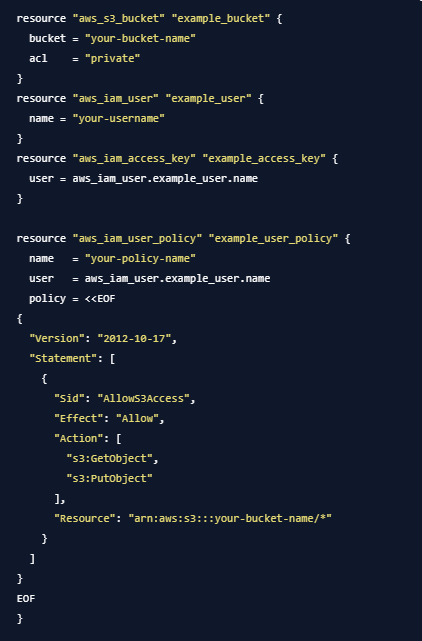

To provision an S3 bucket and a user with read and write access to that bucket using Terraform, you would utilize the following resources:

aws_s3_bucket: This resource is used to create the S3 bucket.

aws_iam_user: This resource is used to create an IAM user.

aws_iam_access_key and aws_iam_user_policy: These resources are used to create an access key for the IAM user and attach a policy granting read and write access to the S3 bucket.
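Put together, the resources listed above might be configured as in this sketch. The bucket name, user name, policy name, and the exact set of S3 actions are assumptions for illustration, and an AWS provider is assumed to be configured.

```hcl
# Hypothetical bucket; S3 bucket names must be globally unique.
resource "aws_s3_bucket" "data" {
  bucket = "example-app-data-bucket"
}

# Hypothetical IAM user that will read from and write to the bucket.
resource "aws_iam_user" "app" {
  name = "example-app-user"
}

# Access key for programmatic access. The secret ends up in the state file.
resource "aws_iam_access_key" "app" {
  user = aws_iam_user.app.name
}

# Inline policy granting list access on the bucket and
# read/write access on the objects inside it.
resource "aws_iam_user_policy" "app_rw" {
  name = "example-bucket-read-write"
  user = aws_iam_user.app.name

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect   = "Allow"
        Action   = ["s3:ListBucket"]
        Resource = aws_s3_bucket.data.arn
      },
      {
        Effect   = "Allow"
        Action   = ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"]
        Resource = "${aws_s3_bucket.data.arn}/*"
      }
    ]
  })
}
```

The policy is split into two statements because s3:ListBucket applies to the bucket ARN, while the object actions apply to the objects under it (the ARN followed by /*).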

Who maintains Terraform providers?

Terraform providers are maintained by the respective cloud providers, open-source communities, or organizations responsible for the infrastructure or services being targeted. HashiCorp, the company behind Terraform, provides and maintains a set of providers that are labeled "official" in the Terraform Registry. These official providers cover a wide range of cloud platforms, including AWS, Azure, Google Cloud, and more.

However, it's important to note that many cloud providers also maintain and release their own Terraform providers. These providers are typically developed and maintained by the cloud provider's engineering teams to ensure compatibility and support for their services in Terraform.

How can we export data from one module to another?

To export data from one module to another in Terraform:

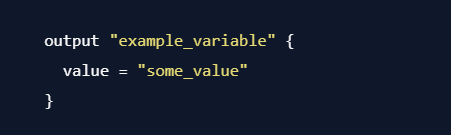

- Exporting Data: In the module containing the desired data, define an output variable in the outputs.tf file:

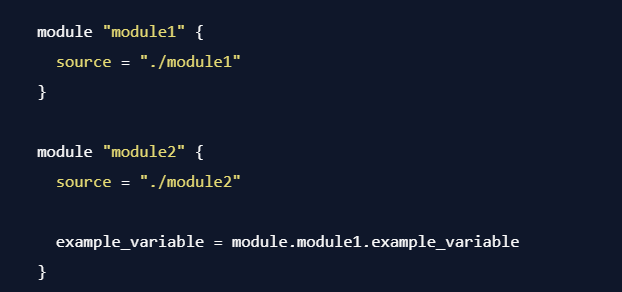

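- Importing Data: A module's outputs are visible to the configuration that calls it, where they are referenced as module.<module_name>.<output_name>. To hand the value to another module, pass that reference as an input variable of the second module.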

By using output variables and referencing them in the consuming module, you can easily export and import data between Terraform modules.
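The export and import steps can be sketched in one place as below. The module names network and app, their paths, and the vpc_id value are hypothetical, and the comments mark which file each fragment is assumed to live in.

```hcl
# --- modules/network/outputs.tf (hypothetical producer module) ---
# Assumes the module declares a resource "aws_vpc" "main" in its main.tf.
output "vpc_id" {
  description = "ID of the VPC created by this module"
  value       = aws_vpc.main.id
}

# --- modules/app/variables.tf (hypothetical consumer module) ---
variable "vpc_id" {
  description = "VPC in which the application is deployed"
  type        = string
}

# --- main.tf in the root configuration ---
module "network" {
  source = "./modules/network"
}

module "app" {
  source = "./modules/app"

  # The root module reads the producer's output and passes it
  # to the consumer as an input variable.
  vpc_id = module.network.vpc_id
}
```

Modules never read each other's outputs directly; the calling configuration wires them together, which also lets Terraform infer that app depends on network.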

Thanks for reading my article. Have a nice day.

WRITTEN BY Biswaraj Sahoo --AWS Community Builder | DevOps Engineer | Docker | Linux | Jenkins | AWS | Git | Terraform | Docker | kubernetes

Empowering communities via open source and education. Connect with me over linktree: linktr.ee/biswaraj333
