How to do cross-cloud backup replication

Kris F


Introduction

A good backup practice is to follow the 3-2-1 rule, which means keeping:

3 copies of the same data

2 of them on separate media

1 of them off-site

Translated to the cloud, this becomes:

3 copies of the same data

2 of them on separate clouds

1 of them offline
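The cloud version of the rule can be written down as a simple checklist. The sketch below is only an illustration: the copy descriptors (location, offline) and the check321 helper are hypothetical and not part of any AWS or GCP API.

```javascript
// Hypothetical description of where each copy of the data lives.
// location: where the copy is stored; offline: true if it is not reachable online.
function check321(copies) {
  // Count distinct clouds among the copies that are online.
  const onlineLocations = new Set(
    copies.filter(c => !c.offline).map(c => c.location)
  );
  return {
    threeCopies: copies.length >= 3,
    twoClouds: onlineLocations.size >= 2,
    oneOffline: copies.some(c => c.offline === true),
  };
}

const copies = [
  { location: 'aws', offline: false },  // primary S3 bucket
  { location: 'gcp', offline: false },  // replica in Cloud Storage (this guide)
  { location: 'tape', offline: true },  // e.g. an offline export
];

console.log(check321(copies));
// → { threeCopies: true, twoClouds: true, oneOffline: true }
```

This guide covers the twoClouds part of the checklist.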

In this guide we'll deal with the "separate cloud" approach.

Options you may have

There are various options out there, and they generally fall into three categories:

Self-built scripts

Native cloud products (AWS, GCP, Azure, etc.)

Off-the-shelf third-party products

While any of the above could solve your problem, this guide focuses on the second option (native cloud products), specifically AWS => GCP.

Initial setup

You'll need the following:

An S3 bucket on AWS with something to back up

A GCP Cloud Storage bucket to copy the files into

The tools we'll use:

AWS S3

AWS IAM

GCP Storage Transfer Service

GCP Cloud Storage

GCP Pub/Sub

GCP Cloud Functions

(GCP Eventarc)

How will it work?

An item is uploaded to AWS S3

GCP Storage Transfer Service runs on a schedule (daily, weekly, etc.) and copies the files over

On success or failure, Storage Transfer Service publishes a message to a GCP Pub/Sub topic

Each Pub/Sub message fires an Eventarc trigger, which invokes a GCP Cloud Function

The Cloud Function takes the event, extracts the status, and sends it to Slack
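The message that reaches the function carries the job status in its Pub/Sub attributes (eventType, transferJobName, transferOperationName), while the message body is the base64-encoded transfer operation, as JSON when the notification payload format is JSON. The sketch below decodes both; summarizeTransferEvent and the sample event are hypothetical, with made-up values, so compare them against a real event logged by your function.

```javascript
// Hypothetical helper: summarize a Pub/Sub CloudEvent published by Storage Transfer Service.
function summarizeTransferEvent(cloudEvent) {
  const message = cloudEvent?.data?.message ?? {};
  const attributes = message.attributes ?? {};

  // The message body is base64-encoded; with payload format JSON it holds the
  // transfer operation. Fall back to null if it is missing or not valid JSON.
  let operation = null;
  if (message.data) {
    try {
      operation = JSON.parse(Buffer.from(message.data, 'base64').toString('utf8'));
    } catch {
      operation = null;
    }
  }

  return {
    status: attributes.eventType, // e.g. TRANSFER_OPERATION_SUCCESS or TRANSFER_OPERATION_FAILED
    jobName: attributes.transferJobName,
    operationName: attributes.transferOperationName,
    operation,
  };
}

// Hypothetical sample event, shaped like what Eventarc delivers for a Pub/Sub message.
const sampleEvent = {
  data: {
    message: {
      attributes: {
        eventType: 'TRANSFER_OPERATION_FAILED',
        transferJobName: 'transferJobs/example-job',
        transferOperationName: 'transferOperations/example-op',
      },
      data: Buffer.from(JSON.stringify({ status: 'FAILED' })).toString('base64'),
    },
  },
};

console.log(summarizeTransferEvent(sampleEvent).status);
// → TRANSFER_OPERATION_FAILED
```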

Step-by-step tutorial on how to do the above

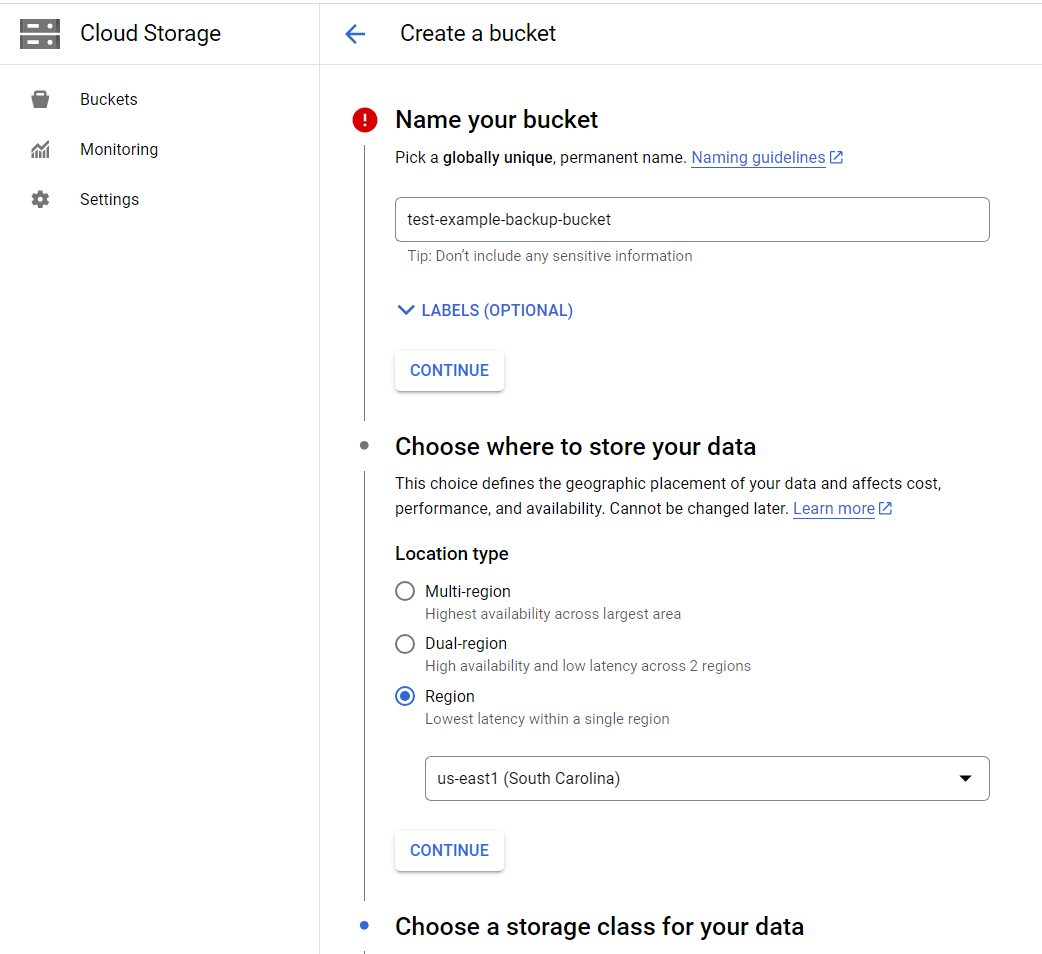

Create a GCP bucket

This is a fairly simple task: pick a name, storage class, region, etc. for your bucket.

Make sure the bucket isn't public and that its name is globally unique.
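If you prefer code to the console, the same step can be sketched with the @google-cloud/storage client: createBucket with a location and storage class, then setMetadata to enforce public access prevention. The function name, bucket name, and option values are hypothetical; the storage client is passed in so the logic can be exercised with a stub.

```javascript
// Sketch: create a private Cloud Storage bucket for the S3 replica.
// `storage` is expected to be a Storage instance from @google-cloud/storage.
async function createPrivateBucket(storage, name, { location, storageClass }) {
  // Bucket names are global, so this call fails if the name is already taken.
  const [bucket] = await storage.createBucket(name, { location, storageClass });

  // Enforce public access prevention so the bucket and its objects can't be made public.
  await bucket.setMetadata({
    iamConfiguration: { publicAccessPrevention: 'enforced' },
  });
  return bucket;
}

// Usage (requires `npm install @google-cloud/storage` and GCP credentials):
// const { Storage } = require('@google-cloud/storage');
// createPrivateBucket(new Storage(), 'my-s3-replica', {
//   location: 'EU',
//   storageClass: 'NEARLINE',
// }).then(bucket => console.log('Created', bucket.name));
```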

Create an IAM user and access key in AWS

GCP's Storage Transfer Service will use this user to read your S3 bucket.

Here's an example policy that grants read-only access to the bucket:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:GetObjectAcl",
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::your-db-backups",
        "arn:aws:s3:::your-db-backups/*"
      ]
    }
  ]
}
```

Then create an access key for this user and make a (temporary) note of the access key ID and secret access key.
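The same user, policy, and access key can be sketched with the AWS SDK for JavaScript v3 (@aws-sdk/client-iam). The user name and the inline policy name gcp-transfer-read-only are hypothetical; the policy builder reproduces the JSON above for any bucket name.

```javascript
// Build the read-only policy shown above for a given bucket.
function readOnlyBucketPolicy(bucketName) {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        Action: ['s3:GetObject', 's3:GetObjectAcl', 's3:ListBucket'],
        Resource: [`arn:aws:s3:::${bucketName}`, `arn:aws:s3:::${bucketName}/*`],
      },
    ],
  };
}

// Sketch: create the IAM user, attach the policy inline, and create an access key.
// Requires `npm install @aws-sdk/client-iam` and AWS credentials with IAM permissions.
async function createTransferUser(userName, bucketName) {
  const {
    IAMClient, CreateUserCommand, PutUserPolicyCommand, CreateAccessKeyCommand,
  } = require('@aws-sdk/client-iam');
  // IAM is a global service; us-east-1 is used here only to satisfy the SDK's region setting.
  const iam = new IAMClient({ region: 'us-east-1' });

  await iam.send(new CreateUserCommand({ UserName: userName }));
  await iam.send(new PutUserPolicyCommand({
    UserName: userName,
    PolicyName: 'gcp-transfer-read-only', // hypothetical policy name
    PolicyDocument: JSON.stringify(readOnlyBucketPolicy(bucketName)),
  }));
  const { AccessKey } = await iam.send(new CreateAccessKeyCommand({ UserName: userName }));

  // The secret can't be retrieved again later, so hand it straight to the transfer job setup.
  return { accessKeyId: AccessKey.AccessKeyId, secretAccessKey: AccessKey.SecretAccessKey };
}
```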

That's all we need on the AWS side for this tutorial. On to GCP!

Create a Storage Transfer Service job

Choose the source (Amazon S3) and the destination (Google Cloud Storage)

Set up the source details with the access key you generated in the previous step

Choose the destination GCP bucket (you may need to grant the service account permissions here)

Choose how often to run the job (or just run it once)

Choose any further options (like deletion, overwrite, etc.) and make sure "Get transfer operation status updates via Cloud Pub/Sub notifications" is checked

Create a new Pub/Sub topic here and select it. Done! At this point you can already test the job.
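The console steps above roughly correspond to a single transfer job resource. Below is a sketch using the @google-cloud/storage-transfer client, assuming a daily schedule and notifications for success, failure, and abort; every project, bucket, and topic name is a placeholder, and the Storage Transfer Service agent still needs access to the bucket and topic, just as in the console flow.

```javascript
// Build a TransferJob: S3 source, Cloud Storage sink, daily schedule, Pub/Sub notifications.
function buildTransferJob({ projectId, s3Bucket, gcsBucket, accessKeyId, secretAccessKey, topic, startDate }) {
  return {
    projectId,
    description: `Replicate s3://${s3Bucket} to gs://${gcsBucket}`,
    status: 'ENABLED',
    transferSpec: {
      awsS3DataSource: {
        bucketName: s3Bucket,
        awsAccessKey: { accessKeyId, secretAccessKey },
      },
      gcsDataSink: { bucketName: gcsBucket },
    },
    schedule: {
      scheduleStartDate: startDate,       // { year, month, day }
      repeatInterval: { seconds: 86400 }, // every 24 hours; use 604800 for weekly
    },
    notificationConfig: {
      pubsubTopic: `projects/${projectId}/topics/${topic}`,
      eventTypes: ['TRANSFER_OPERATION_SUCCESS', 'TRANSFER_OPERATION_FAILED', 'TRANSFER_OPERATION_ABORTED'],
      payloadFormat: 'JSON',
    },
  };
}

// Requires `npm install @google-cloud/storage-transfer` and GCP credentials.
async function createTransferJob(config) {
  const { StorageTransferServiceClient } = require('@google-cloud/storage-transfer');
  const client = new StorageTransferServiceClient();
  const [job] = await client.createTransferJob({ transferJob: buildTransferJob(config) });
  return job.name; // e.g. "transferJobs/..."
}
```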

Create a Cloud Function

Go to the Pub/Sub topic you created while setting up the Storage Transfer Service job

Click “Trigger Cloud Function”, which will prompt you to create a new function

Fill in anything for now. This creates a basic function along with an Eventarc trigger and hooks them together

Go to your function and write the code that calls Slack (add axios to the function's package.json dependencies and set the entry point to notifySlack). Here's the code I used:

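```javascript
const axios = require('axios');
const functions = require('@google-cloud/functions-framework');

// Slack workflow webhook URL, filled in during the Slack webhook step
const url = `to be filled in`;

functions.cloudEvent('notifySlack', async cloudEvent => {
  let payload;

  try {
    console.log('CloudEvent:', JSON.stringify(cloudEvent, null, 2));

    // Storage Transfer Service puts the job status in the Pub/Sub message attributes
    const attributes = cloudEvent?.data?.message?.attributes;
    payload = {
      status: attributes?.eventType,
      description: `JOB: ${attributes?.transferJobName}`
    };
  } catch (error) {
    payload = {
      status: 'GCP db backup status',
      description: 'An exception has happened in GCP Cloud Functions, backups have failed.'
    };
  }

  try {
    // The payload keys must match the variables defined on the Slack webhook trigger
    const response = await axios.post(url, payload);
    console.log('Slack responded with HTTP', response.status);
  } catch (error) {
    console.error('Failed to notify Slack:', error.message);
    throw error; // report this invocation as failed
  }
});
```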

You can test it via Cloud Shell and check the function's logs for the console output.
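One way to trigger the function without running a real transfer is to publish a message to the topic yourself. A sketch using the @google-cloud/pubsub client, assuming you only need the two attributes the function reads; the topic name, job name, and message body are placeholders, and the function will post this fake status to Slack once the webhook URL is set.

```javascript
// Build a fake Storage-Transfer-style message with the attributes the function reads.
function buildTestMessage(jobName, eventType = 'TRANSFER_OPERATION_FAILED') {
  return {
    data: Buffer.from(JSON.stringify({ note: 'manual test message' })),
    attributes: { eventType, transferJobName: jobName },
  };
}

// Requires `npm install @google-cloud/pubsub`; Cloud Shell already has credentials.
async function publishTestMessage(topicName, jobName) {
  const { PubSub } = require('@google-cloud/pubsub');
  const pubsub = new PubSub();
  // publishMessage resolves to the server-assigned message ID
  return pubsub.topic(topicName).publishMessage(buildTestMessage(jobName));
}

// Usage (placeholder names):
// publishTestMessage('backup-status', 'transferJobs/example-job')
//   .then(id => console.log('Published message', id));
```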

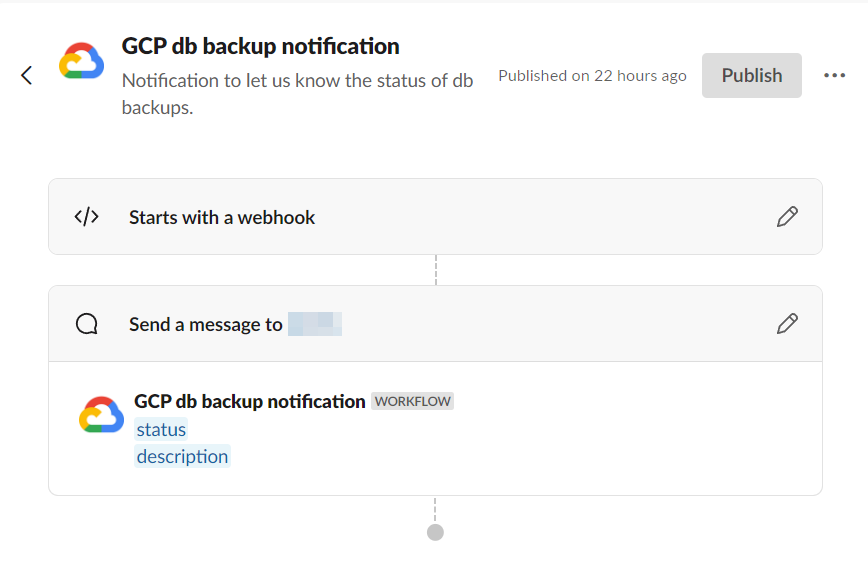

Create your Slack webhook

You can of course use any other notification method or call another endpoint, but here we'll use Slack's built-in webhooks.

Find "Workflow Builder" in Slack Automations and create a new workflow

As the trigger, choose a webhook, add the variables status and description (matching the keys the function sends), and take note of the webhook URL

As the action, send a message to your Slack channel (it can include the status and description variables)

Go back to your Cloud Function and paste the webhook URL into the url constant
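Before relying on the function, you can check the workflow on its own by posting the same JSON shape to the webhook. A sketch using the fetch built into Node 18+, assuming the status and description variables described above; postToSlack and the SLACK_WEBHOOK_URL environment variable are hypothetical, and fetch is injectable so the logic can be tested without network access.

```javascript
// Send { status, description } to a Slack workflow webhook.
async function postToSlack(webhookUrl, payload, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(webhookUrl, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(payload),
  });
  if (!response.ok) {
    throw new Error(`Slack webhook returned HTTP ${response.status}`);
  }
  return response.status;
}

// Usage (Node 18+):
// postToSlack(process.env.SLACK_WEBHOOK_URL, {
//   status: 'TEST',
//   description: 'JOB: manual webhook test',
// }).then(status => console.log('Slack accepted the request with HTTP', status));
```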

Done!

At this point you should be able to test your implementation.

A low-impact way to test it is to deactivate the IAM user's access key in AWS.

This makes the Storage Transfer job fail, which sends a failure message to Slack. Remember to reactivate the key afterwards.
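Toggling the key can also be scripted with UpdateAccessKeyCommand from the AWS SDK for JavaScript v3. A sketch, assuming the user created earlier; the user name and key ID in the usage comment are placeholders.

```javascript
// Build the UpdateAccessKey parameters for switching a key on or off.
function accessKeyStatusParams(userName, accessKeyId, active) {
  return { UserName: userName, AccessKeyId: accessKeyId, Status: active ? 'Active' : 'Inactive' };
}

// Requires `npm install @aws-sdk/client-iam` and AWS credentials with IAM permissions.
async function setAccessKeyActive(userName, accessKeyId, active) {
  const { IAMClient, UpdateAccessKeyCommand } = require('@aws-sdk/client-iam');
  // IAM is a global service; us-east-1 only satisfies the SDK's region setting.
  const iam = new IAMClient({ region: 'us-east-1' });
  await iam.send(new UpdateAccessKeyCommand(accessKeyStatusParams(userName, accessKeyId, active)));
}

// Usage (placeholders): deactivate, run the transfer job, then reactivate.
// await setAccessKeyActive('gcp-transfer', 'YOUR_ACCESS_KEY_ID', false);
// await setAccessKeyActive('gcp-transfer', 'YOUR_ACCESS_KEY_ID', true);
```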


Written by

Kris F


I am a dedicated, double-certified AWS engineer with 10 years of experience, deeply immersed in cloud technologies. My expertise lies in a broad array of AWS services, including EC2, ECS, RDS, and CloudFormation. I am adept at safeguarding cloud environments, facilitating migrations to Docker and ECS, and improving CI/CD workflows with Git and Bitbucket. While my primary focus is on AWS and cloud system administration, I also have a background in software engineering, particularly in JavaScript (Node.js, React) and SQL. In my free time, I like to use no-code/low-code tools to create AI-driven automations in my self-hosted home lab.