Backup Exoscale SKS workloads using Velero

Fabrice Carrel

In this technical guide, we'll explore the practical steps to fortify your Scalable Kubernetes Service (SKS) workloads on Exoscale using Velero, a robust backup and restore tool.

The guide includes a hands-on demo, illustrating how Velero simplifies the backup and recovery process for stateful applications like Nextcloud.

Whether you're a seasoned DevOps professional or diving into Kubernetes for the first time, this guide is tailored to enhance your understanding of backup strategies, ensuring the resilience of your SKS workloads.

Step 1: Deploy SKS via Terraform

Follow the guide for K8s Cluster Installation and Configuration on Exoscale SKS.

Step 2: Deploy Longhorn for Persistent Storage

Integrating Longhorn into the SKS cluster enhances data management, resilience, and backup capabilities, providing a reliable storage solution for stateful applications running in Kubernetes.

# Deploy longhorn on your SKS Cluster

kubectl apply -f https://raw.githubusercontent.com/longhorn/longhorn/master/deploy/longhorn.yaml

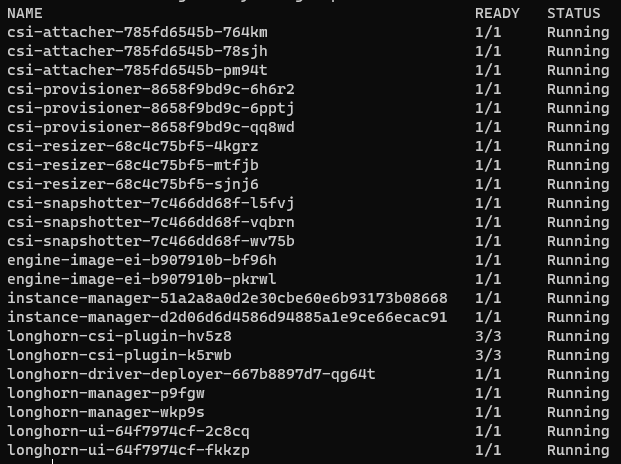

Check that all the pods are in the Running state

kubectl -n longhorn-system get pods
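Rather than re-running `get pods` until everything settles, you can block until the main workloads have rolled out. This is only a minimal sketch: `wait_for_longhorn` is a hypothetical helper, and the `longhorn-manager` DaemonSet name is an assumption that may differ between Longhorn versions.

```shell
# Hypothetical helper: block until the main Longhorn workloads have rolled out.
# Workload names are assumptions; list the real ones with
#   kubectl -n longhorn-system get deploy,ds
wait_for_longhorn() {
  timeout="${1:-300s}"
  kubectl -n longhorn-system rollout status daemonset/longhorn-manager --timeout="$timeout" &&
  kubectl -n longhorn-system rollout status deployment/longhorn-ui --timeout="$timeout"
}
```

Call it as `wait_for_longhorn` (default 300s) or `wait_for_longhorn 120s`.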

# Use port-forward to connect to the UI

kubectl port-forward deployment/longhorn-ui 7000:8000 -n longhorn-system

Access the Longhorn UI at http://127.0.0.1:7000/#/.

Step 3: Deploy Stateful Application (e.g., Nextcloud)

For the demo we're going to use Nextcloud, which is simple to deploy and makes it easy to work with persistent data. And what's more, I love this software!

helm repo add nextcloud https://nextcloud.github.io/helm/

helm repo update

kubectl create ns nextcloud && helm -n nextcloud install nextcloud nextcloud/nextcloud --set service.type=LoadBalancer,persistence.enabled=true,nextcloud.password=whatanamaizingpassword
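Passing the password with `--set` leaves it in your shell history. As an alternative, here is a sketch that puts the same three settings in a values file. The file name `nextcloud-values.yaml` and the `change-me` placeholder are my own choices, not something the chart requires.

```shell
# Write the same three settings as the --set flags above into a values file.
# The file name is arbitrary; replace the placeholder password with your own.
cat > nextcloud-values.yaml <<'EOF'
service:
  type: LoadBalancer
persistence:
  enabled: true
nextcloud:
  password: change-me
EOF
chmod 600 nextcloud-values.yaml

# Then install with the file instead of --set:
#   kubectl create ns nextcloud
#   helm -n nextcloud install nextcloud nextcloud/nextcloud -f nextcloud-values.yaml
```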

Step 4: Install Velero CLI

To perform backup and restore operations directly from your console, you'll need to install and use the Velero CLI.

wget https://github.com/vmware-tanzu/velero/releases/download/v1.12.1/velero-v1.12.1-linux-amd64.tar.gz

tar -xvf velero-v1.12.1-linux-amd64.tar.gz

sudo cp velero-v1.12.1-linux-amd64/velero /usr/local/bin/

rm -rf velero-v1.12.1*

velero version
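If you want to pin or bump the CLI version in one place, the download URL can be built from variables. A small sketch, where `velero_tarball_url` is a hypothetical helper and the OS/architecture defaults simply match the commands above:

```shell
# Hypothetical helper: build the release tarball URL for a version, OS and architecture.
velero_tarball_url() {
  version="$1"
  os="${2:-linux}"
  arch="${3:-amd64}"
  echo "https://github.com/vmware-tanzu/velero/releases/download/${version}/velero-${version}-${os}-${arch}.tar.gz"
}

# Prints the same URL as the wget command above.
velero_tarball_url v1.12.1
```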

Step 5: Install Velero using Helm Chart

Now we deploy Velero on the cluster using the official Helm chart.

The latest Velero documentation at this time is https://velero.io/docs/v1.12/

You can find more information about the Helm chart and all the available values on https://artifacthub.io/packages/helm/vmware-tanzu/velero

The settings below allow us to push the data to SOS (Simple Object Storage), the Exoscale S3-compatible object storage service. The SOS bucket is named veleroexo and hosted in the ch-gva-2 region.

You will need to create an IAM API key with a role that allows access to the Object Storage service.

Velero must be initialized with two plugins: velero-plugin-for-aws to talk to S3-compatible storage, and velero-plugin-for-csi to snapshot volumes through CSI.

# Add Velero Helm Chart repository

helm repo add velero https://vmware-tanzu.github.io/helm-charts/

# Create Velero credentials file from Exoscale IAM API Keys at /home/username/.aws/velero_exo_creds

[default]

aws_access_key_id=********************************

aws_secret_access_key=**********************************
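Since this file holds a secret, it is worth creating it with restrictive permissions. The sketch below writes the same three lines from environment variables. `write_velero_creds`, `EXO_API_KEY` and `EXO_API_SECRET` are names I made up for the example.

```shell
# Hypothetical helper: write an AWS-style credentials file readable only by its owner.
# EXO_API_KEY and EXO_API_SECRET are assumed environment variable names.
write_velero_creds() {
  creds_file="$1"
  : "${EXO_API_KEY:?set EXO_API_KEY first}"
  : "${EXO_API_SECRET:?set EXO_API_SECRET first}"
  mkdir -p "$(dirname "$creds_file")"
  # The subshell keeps the tighter umask from leaking into the calling shell.
  ( umask 077
    printf '[default]\naws_access_key_id=%s\naws_secret_access_key=%s\n' \
      "$EXO_API_KEY" "$EXO_API_SECRET" > "$creds_file" )
  # Also tighten permissions if the file already existed.
  chmod 600 "$creds_file"
}
```

For example: `write_velero_creds /home/username/.aws/velero_exo_creds`.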

# Install Velero with specific configurations

helm install velero velero/velero \

--namespace velero \

--create-namespace \

--set-file credentials.secretContents.cloud=/home/username/.aws/velero_exo_creds \

--set configuration.backupStorageLocation[0].name=exoscale \

--set configuration.backupStorageLocation[0].provider=aws \

--set configuration.backupStorageLocation[0].bucket=veleroexo \

--set configuration.backupStorageLocation[0].config.region=ch-gva-2 \

--set configuration.backupStorageLocation[0].config.publicUrl=https://sos-ch-gva-2.exo.io \

--set configuration.backupStorageLocation[0].config.s3ForcePathStyle=true \

--set configuration.backupStorageLocation[0].config.s3Url=https://sos-ch-gva-2.exo.io \

--set configuration.volumeSnapshotLocation[0].name=exoscale \

--set configuration.volumeSnapshotLocation[0].provider=aws \

--set configuration.volumeSnapshotLocation[0].config.region=ch-gva-2 \

--set image.pullPolicy=IfNotPresent \

--set initContainers[0].name=velero-plugin-for-aws \

--set initContainers[0].image=velero/velero-plugin-for-aws:v1.7.1 \

--set initContainers[0].volumeMounts[0].mountPath=/target \

--set initContainers[0].volumeMounts[0].name=plugins \

--set configuration.features=EnableCSI \

--set initContainers[1].name=velero-plugin-for-csi \

--set initContainers[1].image=velero/velero-plugin-for-csi:v0.6.1 \

--set initContainers[1].volumeMounts[0].mountPath=/target \

--set initContainers[1].volumeMounts[0].name=plugins \

--set deployNodeAgent=true
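A long list of `--set` flags is hard to review and to keep in version control. The sketch below writes the same settings to a values file. It is a mechanical translation of the flags, with one assumption: I use the region name `ch-gva-2` for the snapshot location too. The file name is arbitrary.

```shell
# Same settings as the --set flags above, as a Helm values file.
cat > velero-values.yaml <<'EOF'
configuration:
  features: EnableCSI
  backupStorageLocation:
    - name: exoscale
      provider: aws
      bucket: veleroexo
      config:
        region: ch-gva-2
        publicUrl: https://sos-ch-gva-2.exo.io
        s3ForcePathStyle: true
        s3Url: https://sos-ch-gva-2.exo.io
  volumeSnapshotLocation:
    - name: exoscale
      provider: aws
      config:
        region: ch-gva-2  # assumption: the region name, as for the backup location
image:
  pullPolicy: IfNotPresent
initContainers:
  - name: velero-plugin-for-aws
    image: velero/velero-plugin-for-aws:v1.7.1
    volumeMounts:
      - mountPath: /target
        name: plugins
  - name: velero-plugin-for-csi
    image: velero/velero-plugin-for-csi:v0.6.1
    volumeMounts:
      - mountPath: /target
        name: plugins
deployNodeAgent: true
EOF

# The credentials still come from the file, so the install becomes:
#   helm install velero velero/velero --namespace velero --create-namespace \
#     --set-file credentials.secretContents.cloud=/home/username/.aws/velero_exo_creds \
#     -f velero-values.yaml
```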

# Label the longhorn volumesnapshotclass as the csi-volumesnapshot-class

kubectl label volumesnapshotclasses.snapshot.storage.k8s.io longhorn velero.io/csi-volumesnapshot-class=true

# Set exoscale as the default storage location

velero backup-location set exoscale --default

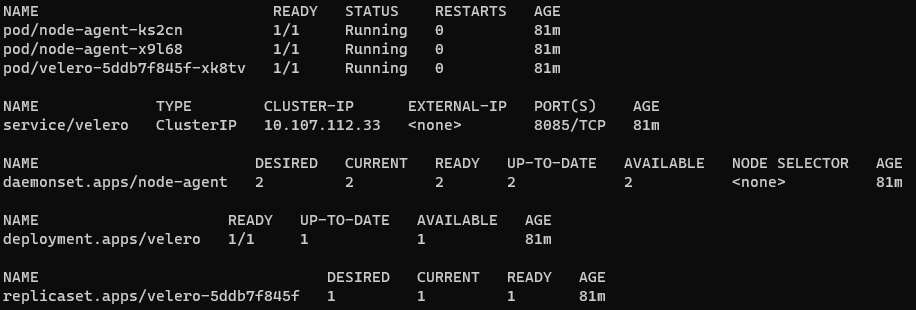

Check that everything is up and running

kubectl -n velero get all

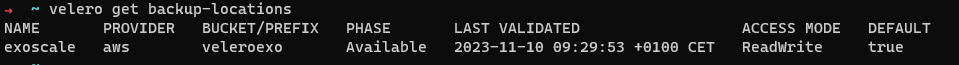

velero get backup-locations
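For scripting, the phase of the storage location can also be read straight from the Velero resource. A minimal sketch, where `bsl_phase` is a hypothetical helper and the location is expected to report `Available` once Velero can reach the bucket:

```shell
# Hypothetical helper: print the phase of a BackupStorageLocation (e.g. Available).
bsl_phase() {
  kubectl -n velero get backupstoragelocation "${1:-exoscale}" \
    -o jsonpath='{.status.phase}'
}
```

For example: `[ "$(bsl_phase)" = "Available" ] && echo "storage location is ready"`.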

Step 6: Functional Demo

Let's add some data in Nextcloud before the backup and restore operations. Log in to the Nextcloud UI with the admin account and the password provided during the installation.

# Enable port-forward to access the UI

kubectl port-forward deployment/nextcloud 8099:80 -n nextcloud

Access the Nextcloud UI at http://127.0.0.1:8099/apps/files/?dir=/Documents and add some files.

Now we create a backup of all the elements of the nextcloud namespace.

# Create a backup of the Nextcloud namespace

velero backup create nc-backup-10112023-4 --include-namespaces nextcloud

The backup operation will be executed in the background. You can describe the backup operation or follow the backup logs. Velero will give you the command to get the information.

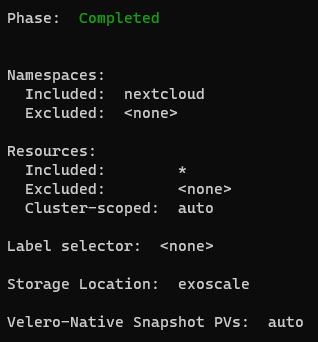
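If you would rather have the command block until the backup is done, and not pick names by hand, something like the following could work. Both helpers are hypothetical, and the timestamp format is just one choice that keeps the name lowercase and DNS-friendly.

```shell
# Hypothetical helper: build a unique backup name from a prefix and the current time.
backup_name() {
  echo "${1:-nc-backup}-$(date +%Y%m%d-%H%M%S)"
}

# Hypothetical helper: back up one namespace and block until Velero has finished.
backup_namespace() {
  ns="$1"
  name="$(backup_name "${2:-nc-backup}")"
  velero backup create "$name" --include-namespaces "$ns" --wait
}
```

For example: `backup_namespace nextcloud`.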

If you describe the backup operation you can see the status of the operation, or which namespace and resources are included.

# Describe backup details

velero backup describe nc-backup-10112023-4 --details

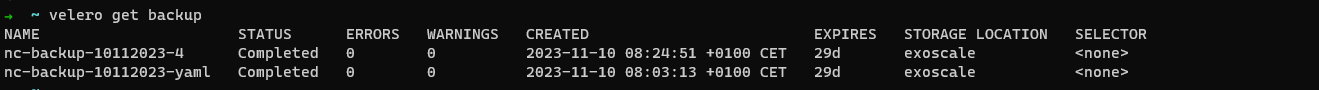

Get the status of the backup using Velero CLI. You can also see the backup expiration date and the storage location.

# Get the list of the current backups

velero get backup
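Before deleting anything, it is prudent to confirm that the backup really finished. A sketch of such a guard, reading the phase from the Backup resource in the velero namespace. Both function names are hypothetical.

```shell
# Hypothetical helper: print the phase of a Velero backup (e.g. Completed).
backup_phase() {
  kubectl -n velero get backups.velero.io "$1" -o jsonpath='{.status.phase}'
}

# Hypothetical helper: fail unless the backup finished successfully.
require_completed_backup() {
  phase="$(backup_phase "$1")"
  if [ "$phase" != "Completed" ]; then
    echo "backup $1 is in phase '${phase:-unknown}', not Completed" >&2
    return 1
  fi
}
```

For example: `require_completed_backup nc-backup-10112023-4 && kubectl delete ns nextcloud`.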

Delete the entire Nextcloud namespace

# Delete the Nextcloud namespace for simulation

kubectl delete ns nextcloud

Validate that the nextcloud PV has been deleted, so that we can be sure the restore process is fully functional.

# The nextcloud/nextcloud-nextcloud PV should no longer be listed

kubectl get pv
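On a busy cluster the PV list can be long, so filtering by the claim's namespace helps. A minimal sketch with a hypothetical helper, `pvs_for_namespace`, which prints one `namespace/claim` line per matching PV and returns non-zero when none is left:

```shell
# Hypothetical helper: list PVs still bound to claims in a given namespace.
# Output is one "namespace/claim" per line; exit status is non-zero if there are none.
pvs_for_namespace() {
  kubectl get pv \
    -o jsonpath='{range .items[*]}{.spec.claimRef.namespace}{"/"}{.spec.claimRef.name}{"\n"}{end}' |
    grep "^$1/"
}
```

For example: `pvs_for_namespace nextcloud || echo "no nextcloud PV left"`.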

Now we start the restore process using the Velero CLI

# Restore from the backup

velero restore create --from-backup nc-backup-10112023-4

The restore process runs in the background. Again, Velero gives you the command to get information about the status of the operation.

# Describe restore details

velero restore describe nc-backup-10112023-4-20231110074848 --details

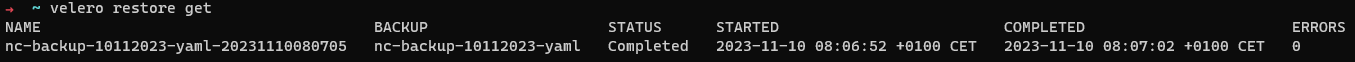

You can also get the status of the restore operation.

velero restore get
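As with backups, the restore can be made to block until it finishes by adding `--wait`. A tiny sketch, with `restore_and_wait` as a hypothetical wrapper:

```shell
# Hypothetical helper: restore from a backup and block until Velero has finished.
restore_and_wait() {
  velero restore create --from-backup "$1" --wait
}
```

For example: `restore_and_wait nc-backup-10112023-4`.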

Validate that Velero has recreated all the resources in the nextcloud namespace.

# Check the status of PVCs, PVs, and Pods in the Nextcloud namespace

kubectl -n nextcloud get pvc

kubectl get pv

kubectl -n nextcloud get pods -w

Access the Nextcloud UI at http://127.0.0.1:8099/apps/files/?dir=/Documents and validate that your files are present.

# Enable port-forward to access the UI

kubectl port-forward deployment/nextcloud 8099:80 -n nextcloud

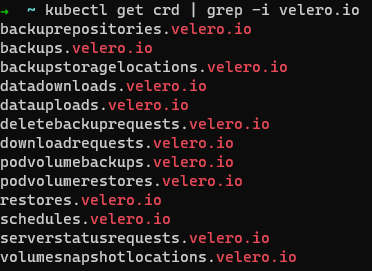

All these operations can be carried out using the CRDs provided by Velero.
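To give an idea of what that looks like, here is a sketch that writes a Backup and a Restore manifest for the nextcloud namespace. The resource names `nc-backup-crd` and `nc-restore-crd` and the file names are invented for the example. Velero picks the objects up once they are applied in the velero namespace.

```shell
# Backup of the nextcloud namespace to the exoscale storage location.
cat > nc-backup.yaml <<'EOF'
apiVersion: velero.io/v1
kind: Backup
metadata:
  name: nc-backup-crd
  namespace: velero
spec:
  includedNamespaces:
    - nextcloud
  storageLocation: exoscale
EOF

# Restore that refers to the backup above by name.
cat > nc-restore.yaml <<'EOF'
apiVersion: velero.io/v1
kind: Restore
metadata:
  name: nc-restore-crd
  namespace: velero
spec:
  backupName: nc-backup-crd
EOF

# Apply the backup first, then the restore once the backup has completed:
#   kubectl apply -f nc-backup.yaml
#   kubectl apply -f nc-restore.yaml
```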

Now armed with these tools and insights, you're ready to navigate the dynamic seas of Kubernetes Backups with confidence. Happy deploying! 🚀🔒
