Cross-Validation Chronicles: Elevating Model Evaluation with Python's Best Techniques

Saurabh Naik


Introduction:

In the realm of machine learning, model performance evaluation is a critical aspect of the development process. One powerful tool in the data scientist's toolkit is cross-validation. This technique helps assess a model's performance, providing a robust estimate of how it will generalize to unseen data. In this comprehensive guide, we'll delve into the world of cross-validation, exploring various methods and implementing them in Python.

Why Cross-Validation?

Before we dive into the methods, let's understand why cross-validation is crucial:

Limited Data: When the dataset is small, cross-validation lets every observation be used for both training and evaluation, rather than permanently setting part of the data aside for testing.

Model Assessment: Averaging scores over several train/test splits gives a more stable estimate of a model's performance than a single train-test split. Cross-validation does not prevent overfitting or underfitting by itself, but it makes both easier to detect.

Parameter Tuning: When tuning hyperparameters (such as a model's regularization strength), comparing candidate settings by their cross-validated scores makes the choice less dependent on one particular split of the data.
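To make the parameter-tuning point concrete, here is a minimal sketch using scikit-learn's GridSearchCV. It assumes a synthetic dataset from make_classification as a stand-in for your own X, y, and the grid of C values is an arbitrary illustration, not a recommendation.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, StratifiedKFold

# Synthetic stand-in for your own X, y (assumption for illustration)
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Each candidate value of C is scored by 5-fold cross-validation,
# so the choice does not hinge on one lucky (or unlucky) split.
param_grid = {"C": [0.01, 0.1, 1.0, 10.0]}
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
search = GridSearchCV(
    LogisticRegression(max_iter=1000), param_grid, cv=cv, scoring="accuracy"
)
search.fit(X, y)

print(f"Best C: {search.best_params_['C']}")
print(f"Mean CV accuracy of best C: {search.best_score_:.3f}")
```

Because best_score_ is the same score that was used to pick C, it tends to be somewhat optimistic; a separate held-out test set or nested cross-validation gives a cleaner estimate of final performance.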

Basic Cross-Validation Techniques

1. Holdout Validation (Train-Test Split):

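The simplest approach splits the dataset into two parts: a training set and a testing set. The model is trained on the training set and evaluated on the testing set. Strictly speaking, this is a single validation split rather than cross-validation, and the resulting score can vary noticeably depending on which observations land in the test set.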

from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from sklearn.linear_model import LogisticRegression

# Assuming X, y are your features and labels
# Hold out 20% of the data for testing; random_state makes the split reproducible
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

model = LogisticRegression()
model.fit(X_train, y_train)  # train only on the training portion
predictions = model.predict(X_test)  # predict on data the model has not seen
accuracy = accuracy_score(y_test, predictions)
print(f'Accuracy: {accuracy}')
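The sensitivity of a single holdout score to the particular split can be seen directly. This sketch assumes a small synthetic dataset (make_classification with some label noise) in place of your own data and simply repeats the same holdout evaluation with ten different random seeds.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Small synthetic dataset with some label noise (assumption for illustration)
X, y = make_classification(n_samples=200, n_features=10, flip_y=0.1, random_state=0)

holdout_scores = []
for seed in range(10):
    # Same model and same data; only the random split changes
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=seed, stratify=y
    )
    model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
    holdout_scores.append(accuracy_score(y_test, model.predict(X_test)))

print(f"Min accuracy: {min(holdout_scores):.3f}")
print(f"Max accuracy: {max(holdout_scores):.3f}")
```

The gap between the minimum and maximum is the spread you would be exposed to if you relied on a single split, which is the motivation for the k-fold methods that follow.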

2. K-Fold Cross-Validation:

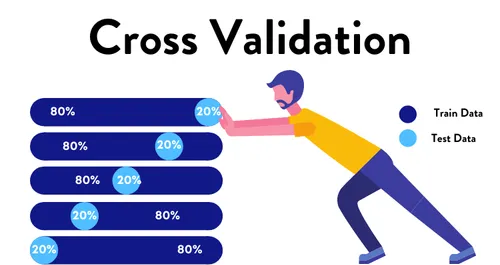

K-fold cross-validation involves dividing the dataset into 'k' folds, using 'k-1' folds for training and the remaining fold for testing. This process is repeated 'k' times, with each fold used exactly once as a test set.

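from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score

# Assuming X, y are your features and labels
kfold = KFold(n_splits=5, shuffle=True, random_state=42)  # shuffle, then split into 5 folds
model = LogisticRegression()
results = cross_val_score(model, X, y, cv=kfold)  # one accuracy score per fold
print(f'Accuracy for each fold: {results}')
print(f'Mean Accuracy: {results.mean()}')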
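To show what cross_val_score does under the hood, here is a sketch of the same 5-fold procedure written as an explicit loop over KFold.split. It assumes a synthetic dataset and also checks the defining property of k-fold: every sample appears in exactly one test fold.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold

# Synthetic stand-in for your own X, y (assumption for illustration)
X, y = make_classification(n_samples=100, n_features=5, random_state=0)
kfold = KFold(n_splits=5, shuffle=True, random_state=42)

fold_scores = []
test_counts = np.zeros(len(X), dtype=int)  # how often each sample is in a test fold
for train_idx, test_idx in kfold.split(X):
    model = LogisticRegression(max_iter=1000).fit(X[train_idx], y[train_idx])
    # For classifiers, model.score returns accuracy, cross_val_score's default metric
    fold_scores.append(model.score(X[test_idx], y[test_idx]))
    test_counts[test_idx] += 1

print(f"Accuracy for each fold: {np.round(fold_scores, 3)}")
print(f"Mean accuracy: {np.mean(fold_scores):.3f}")
print(f"Every sample tested exactly once: {bool(np.all(test_counts == 1))}")
```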

3. Stratified K-Fold Cross-Validation:

Stratified K-Fold is particularly useful for imbalanced classification datasets. It keeps the class proportions in each fold approximately equal to those of the full dataset.

from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Assuming X, y are your features and class labels
stratkfold = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)  # folds preserve class ratios
model = LogisticRegression()
results = cross_val_score(model, X, y, cv=stratkfold)
print(f'Accuracy for each fold: {results}')
print(f'Mean Accuracy: {results.mean()}')
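The effect of stratification is easiest to see by looking at the class mix of each test fold. This sketch assumes hypothetical labels with 90 negatives and 10 positives and compares plain KFold with StratifiedKFold; the features are placeholders because they play no role in how the folds are formed.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold

# Hypothetical imbalanced labels: 90 negatives, 10 positives
y = np.array([0] * 90 + [1] * 10)
X = np.zeros((len(y), 1))  # placeholder features; irrelevant to the split itself


def positive_share_per_fold(cv):
    """Fraction of positive labels in each test fold."""
    return [y[test_idx].mean() for _, test_idx in cv.split(X, y)]


plain = positive_share_per_fold(KFold(n_splits=5, shuffle=True, random_state=0))
strat = positive_share_per_fold(StratifiedKFold(n_splits=5, shuffle=True, random_state=0))

print("KFold positive share:          ", np.round(plain, 2))
print("StratifiedKFold positive share:", np.round(strat, 2))
```

With stratification every test fold keeps the overall 10% positive rate, while plain KFold lets it drift from fold to fold. Note that when cross_val_score is given an integer cv and a classifier, scikit-learn already uses StratifiedKFold (without shuffling) by default.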

4. Leave-One-Out Cross-Validation (LOOCV):

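LOOCV uses a single observation as the test set and all remaining observations as the training set, repeating this once for every observation. A dataset with n samples therefore requires n model fits, and because each test set holds one sample, each per-iteration accuracy is either 0 or 1; only the mean is informative.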

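from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import LeaveOneOut, cross_val_score

# Assuming X, y are your features and labels
loo = LeaveOneOut()  # n splits for n samples: one model fit per observation
model = LogisticRegression()
results = cross_val_score(model, X, y, cv=loo)  # each score is 0 or 1
print(f'Accuracy for each iteration: {results}')
print(f'Mean Accuracy: {results.mean()}')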
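The cost and the all-or-nothing per-split scores of LOOCV can be checked on a small example. This sketch assumes a synthetic dataset of 40 samples standing in for your own data.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import LeaveOneOut, cross_val_score

# Small synthetic dataset (assumption for illustration)
X, y = make_classification(n_samples=40, n_features=4, random_state=0)
loo = LeaveOneOut()

# One model fit per observation: 40 fits here, n fits in general
print(f"Number of splits: {loo.get_n_splits(X)}")

results = cross_val_score(LogisticRegression(max_iter=1000), X, y, cv=loo)
# Each test set has one sample, so every per-split accuracy is 0.0 or 1.0
print(f"Distinct per-split scores: {np.unique(results)}")
print(f"Mean accuracy: {results.mean():.3f}")
```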

Advanced Cross-Validation Techniques

5. Repeated K-Fold Cross-Validation:

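Repeated K-Fold runs K-Fold cross-validation several times (n_repeats), reshuffling the data into different folds on each repetition. Averaging over all n_splits × n_repeats scores makes the estimate less dependent on any one particular partition of the data.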

from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RepeatedKFold, cross_val_score

# Assuming X, y are your features and labels
repeated_kfold = RepeatedKFold(n_splits=5, n_repeats=3, random_state=42)  # 5 x 3 = 15 fits
model = LogisticRegression()
results = cross_val_score(model, X, y, cv=repeated_kfold)
print(f'Accuracy for each iteration: {results}')
print(f'Mean Accuracy: {results.mean()}')
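A useful habit with repeated k-fold is to report the spread as well as the mean. This sketch assumes a synthetic dataset and relies on scikit-learn yielding the splits one repetition at a time, so the 15 scores can be reshaped into 3 rows of 5.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RepeatedKFold, cross_val_score

# Synthetic stand-in for your own X, y (assumption for illustration)
X, y = make_classification(n_samples=150, n_features=8, random_state=0)
repeated_kfold = RepeatedKFold(n_splits=5, n_repeats=3, random_state=42)

results = cross_val_score(LogisticRegression(max_iter=1000), X, y, cv=repeated_kfold)

# 5 folds x 3 repeats = 15 scores; each row below is one repetition
per_repeat = results.reshape(3, 5).mean(axis=1)
print(f"Number of scores: {len(results)}")
print(f"Mean accuracy per repetition: {np.round(per_repeat, 3)}")
print(f"Overall: {results.mean():.3f} +/- {results.std():.3f}")
```

For classification problems, scikit-learn also provides RepeatedStratifiedKFold, which combines repetition with stratified folds.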

6. Leave-P-Out Cross-Validation:

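Leave-P-Out generalizes LOOCV: every possible subset of p observations is used once as the test set, with the remaining observations used for training. The number of splits is C(n, p), which grows very quickly with n, and for p > 1 the test sets overlap, so this method is practical only for small datasets.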

from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import LeavePOut, cross_val_score

# Assuming X, y are your features and labels (keep n small: C(n, 2) splits)
leave_p_out = LeavePOut(p=2)
model = LogisticRegression()
results = cross_val_score(model, X, y, cv=leave_p_out)
print(f'Accuracy for each iteration: {results}')
print(f'Mean Accuracy: {results.mean()}')
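The combinatorial growth of Leave-P-Out is worth checking before running it. This sketch uses placeholder data (only the number of rows matters) and compares LeavePOut.get_n_splits with the binomial coefficient from Python's math.comb.

```python
from math import comb

import numpy as np
from sklearn.model_selection import LeavePOut

X = np.zeros((20, 1))  # 20 placeholder samples; only the count matters here
leave_p_out = LeavePOut(p=2)

# LeavePOut enumerates every possible test set of size p: C(n, p) splits
print(f"Splits for n=20, p=2: {leave_p_out.get_n_splits(X)}")  # C(20, 2) = 190
print(f"Splits for n=100, p=2: {comb(100, 2)}")  # 4950
print(f"Splits for n=100, p=3: {comb(100, 3)}")  # 161700
```

Even a modest dataset of 100 samples needs 161,700 model fits with p=3, which is why K-Fold or Repeated K-Fold is usually the more practical choice.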

7. Time Series Cross-Validation:

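For time series data, where the order of observations matters, shuffled folds would let the model train on the future and test on the past. TimeSeriesSplit instead uses an expanding training window, and each test fold comes after all of the data the model was trained on.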

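from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import TimeSeriesSplit, cross_val_score

# Assuming the rows of X, y are sorted in chronological order
time_series_cv = TimeSeriesSplit(n_splits=5)  # no shuffling: test folds always follow training data
model = LogisticRegression()
results = cross_val_score(model, X, y, cv=time_series_cv)
print(f'Accuracy for each fold: {results}')
print(f'Mean Accuracy: {results.mean()}')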
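Printing the indices produced by TimeSeriesSplit makes its expanding-window behaviour visible. This sketch assumes 12 placeholder observations already in time order and uses 3 splits to keep the output short.

```python
import numpy as np
from sklearn.model_selection import TimeSeriesSplit

X = np.arange(12).reshape(-1, 1)  # 12 time-ordered placeholder observations
time_series_cv = TimeSeriesSplit(n_splits=3)

splits = list(time_series_cv.split(X))
for fold, (train_idx, test_idx) in enumerate(splits, start=1):
    # Training indices always come strictly before the test indices
    assert train_idx.max() < test_idx.min()
    print(f"Fold {fold}: train={train_idx.tolist()} test={test_idx.tolist()}")
```

Here the training window grows from 3 to 6 to 9 observations, while each test fold holds the next 3, so the model is never evaluated on data that precedes its training data.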

Conclusion

Cross-validation is an indispensable tool in a data scientist's toolkit, offering a reliable method for estimating model performance. Depending on the dataset and its characteristics, choosing the appropriate cross-validation technique is crucial. By implementing these techniques in Python, you can fine-tune your models and make more informed decisions in your machine-learning projects.


Written by

Saurabh Naik


🚀 Passionate Data Enthusiast and Problem Solver 🤖

🎓 Education: Bachelor's in Engineering (Information Technology), Vidyalankar Institute of Technology, Mumbai (2021)

👨‍💻 Professional Experience: Over 2 years in startups and MNCs, honing skills in Data Science, Data Engineering, and problem-solving. Worked with cutting-edge technologies and libraries: Keras, PyTorch, scikit-learn, DVC, MLflow, OpenAI, Hugging Face, TensorFlow. Proficient in SQL and NoSQL databases: MySQL, Postgres, Cassandra.

📈 Skills Highlights: Data Science: Statistics, Machine Learning, Deep Learning, NLP, Generative AI, Data Analysis, MLOps. Tools & Technologies: Python (modular coding), Git & GitHub, Data Pipelining & Analysis, AWS (Lambda, SQS, SageMaker, CodePipeline, EC2, ECR, API Gateway), Apache Airflow; Flask, Django, and Streamlit web frameworks for Python. Soft Skills: Critical Thinking, Analytical Problem-solving, Communication, English Proficiency.

💡 Initiatives: Passionate about community engagement; sharing knowledge through accessible technical blogs and LinkedIn posts. Successfully completed Data Scientist internships at WebEmps, iNeuron Intelligence Pvt Ltd, and Ungray Pvt Ltd.

🌏 Next Chapter: Pursuing a career in Data Science, with a keen interest in broadening horizons through international opportunities. Currently relocating to Australia, eligible for relevant work visas & residence, working with a licensed immigration adviser and actively exploring new opportunities & interviews.

🔗 Let's Connect! Open to collaborations, discussions, and the exciting challenges that data-driven opportunities bring. Reach out for a conversation on Data Science, technology, or potential collaborations! Email: naiksaurabhd@gmail.com