The Power of Boosting Methods in Ensemble Learning

Juan Carlos Olamendy

Have you ever wondered how combining several simple models can lead to a robust and powerful predictor?

This is the essence of boosting in ensemble learning.

Let's explore boosting methods, focusing on AdaBoost and Gradient Boosting, two popular techniques in machine learning.

What is Boosting?

Boosting, a pivotal ensemble technique in machine learning, integrates multiple weak learners to forge a strong learner.

The core principle involves training predictors sequentially.

Each predictor aims to correct the errors of its predecessor, enhancing the overall model's accuracy.

It's a step-by-step enhancement, making the ensemble increasingly accurate.

AdaBoost, one of the best-known boosting methods, has some similarities with Gradient Descent. Instead of tweaking a single predictor's parameters to minimize a cost function, it adds new predictors to the ensemble.

Each addition is trained to fix the errors left by the predictors before it, so the ensemble improves gradually.

This technique has an important limitation:

The sequential nature means it can't be fully parallelized.

As a result, it doesn't scale well, especially when compared to bagging or pasting methods.

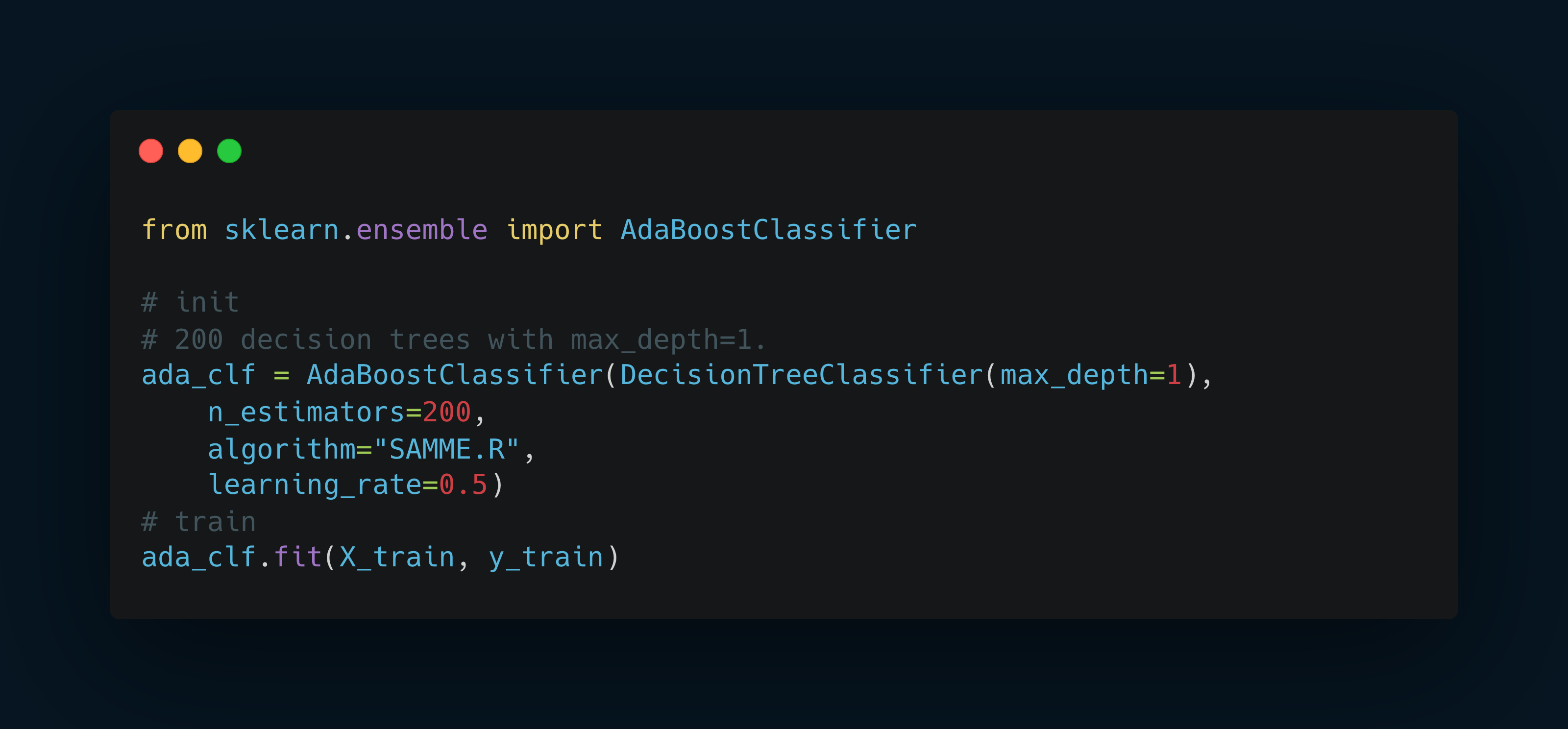

Implementing AdaBoost in Python

Here's a Python snippet to implement AdaBoost using the AdaBoostClassifier from Scikit-Learn:
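
As a minimal sketch, assuming a toy `make_moons` dataset and decision stumps (`max_depth=1`) as the weak learners, training might look like the block below. The dataset, `n_estimators=200`, and `learning_rate=0.5` are illustrative assumptions, not tuned recommendations.

```python
from sklearn.datasets import make_moons
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Toy two-class dataset (an assumption; the article does not name one).
X, y = make_moons(n_samples=500, noise=0.30, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

# Decision stumps are a common choice of weak learner.
# The base estimator is passed positionally because its keyword name
# differs between Scikit-Learn versions.
ada_clf = AdaBoostClassifier(
    DecisionTreeClassifier(max_depth=1),
    n_estimators=200,    # illustrative value
    learning_rate=0.5,   # illustrative value
    random_state=42,
)
ada_clf.fit(X_train, y_train)

# Mean accuracy on the held-out split.
test_accuracy = ada_clf.score(X_test, y_test)
print(f"Test accuracy: {test_accuracy:.3f}")
```

Lowering `n_estimators` or `learning_rate` here is one way to experiment with the overfitting trade-off.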

To prevent overfitting, you can reduce the number of estimators or apply stronger regularization to the base estimator.

Gradient Boosting: A Step Further

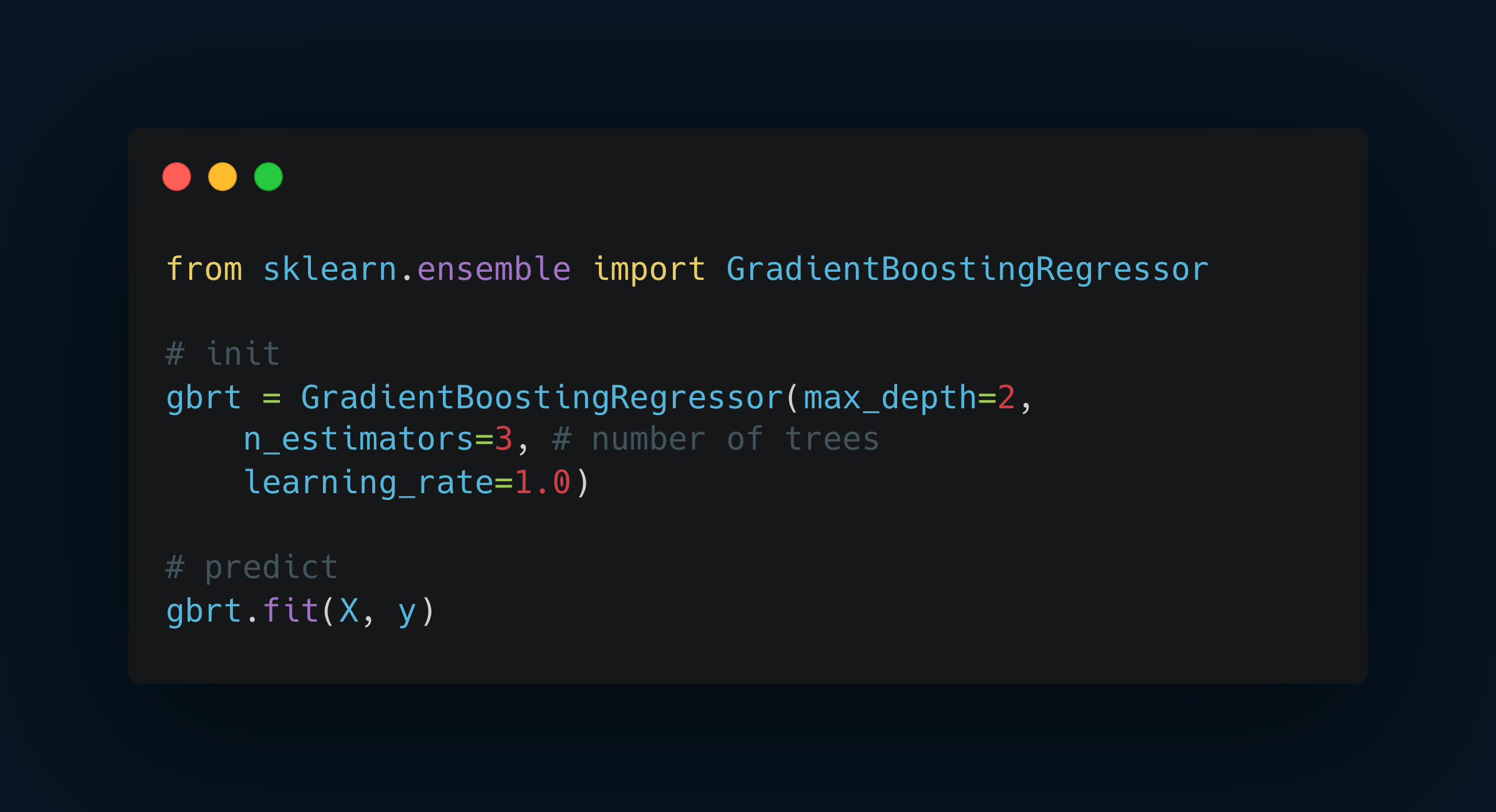

Gradient Boosting takes the concept of boosting a notch higher.

Like AdaBoost, it builds an ensemble by adding predictors sequentially.

Instead of adjusting instance weights like AdaBoost, it focuses on correcting the residual errors from previous predictors.

The Gradient Boosting Algorithm

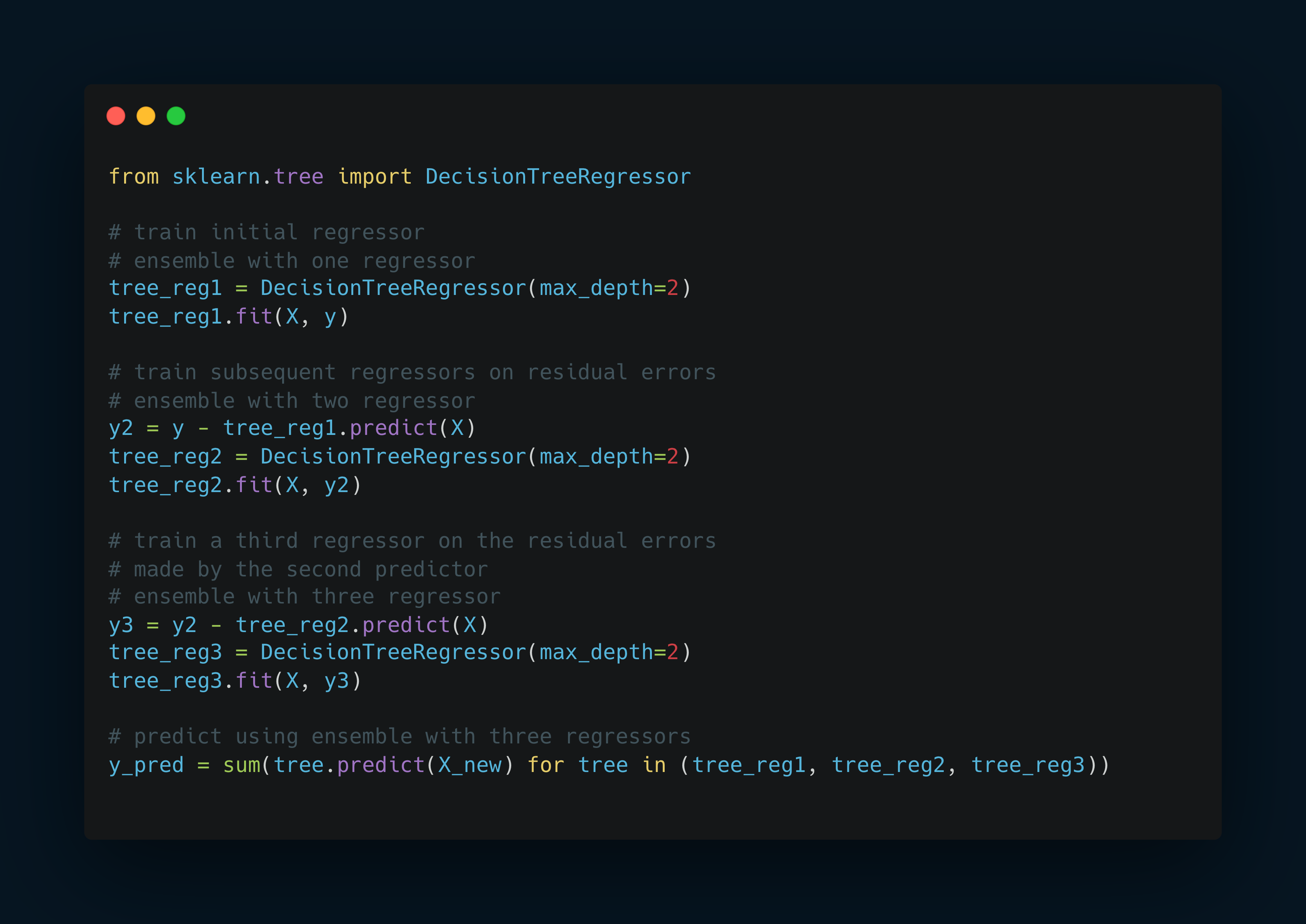

Here's how Gradient Boosting works in practice:

Start with a base predictor and fit it to the data.

Train subsequent predictors on the residual errors of their predecessors.

Combine these predictors to make the final predictions.

Python Implementation of Gradient Boosting

Gradient Boosting can be implemented using Decision Tree Regressors in Python as follows:
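
The three steps above can be sketched by hand with three small regression trees. The noisy quadratic dataset, the tree depth, and the number of trees are assumptions chosen only to keep the example short.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Noisy quadratic toy data (an assumption for illustration).
rng = np.random.default_rng(42)
X = rng.random((100, 1)) - 0.5
y = 3 * X[:, 0] ** 2 + 0.05 * rng.standard_normal(100)

# Step 1: fit a base predictor to the targets.
tree_reg1 = DecisionTreeRegressor(max_depth=2, random_state=42)
tree_reg1.fit(X, y)

# Step 2: fit each further tree to the residuals left so far.
residuals1 = y - tree_reg1.predict(X)
tree_reg2 = DecisionTreeRegressor(max_depth=2, random_state=42)
tree_reg2.fit(X, residuals1)

residuals2 = residuals1 - tree_reg2.predict(X)
tree_reg3 = DecisionTreeRegressor(max_depth=2, random_state=42)
tree_reg3.fit(X, residuals2)

# Step 3: the ensemble prediction is the sum of the trees' predictions.
def ensemble_predict(X_new):
    trees = (tree_reg1, tree_reg2, tree_reg3)
    return sum(tree.predict(X_new) for tree in trees)

# Compare training error of the first tree alone with the full ensemble.
mse_first_tree = np.mean((y - tree_reg1.predict(X)) ** 2)
mse_ensemble = np.mean((y - ensemble_predict(X)) ** 2)
print(f"MSE, first tree only: {mse_first_tree:.5f}")
print(f"MSE, three trees:     {mse_ensemble:.5f}")
```

Each added tree can only lower (or leave unchanged) the training error, which is why the ensemble fits the training data at least as well as the first tree alone.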

Gradient Boosting in Sklearn

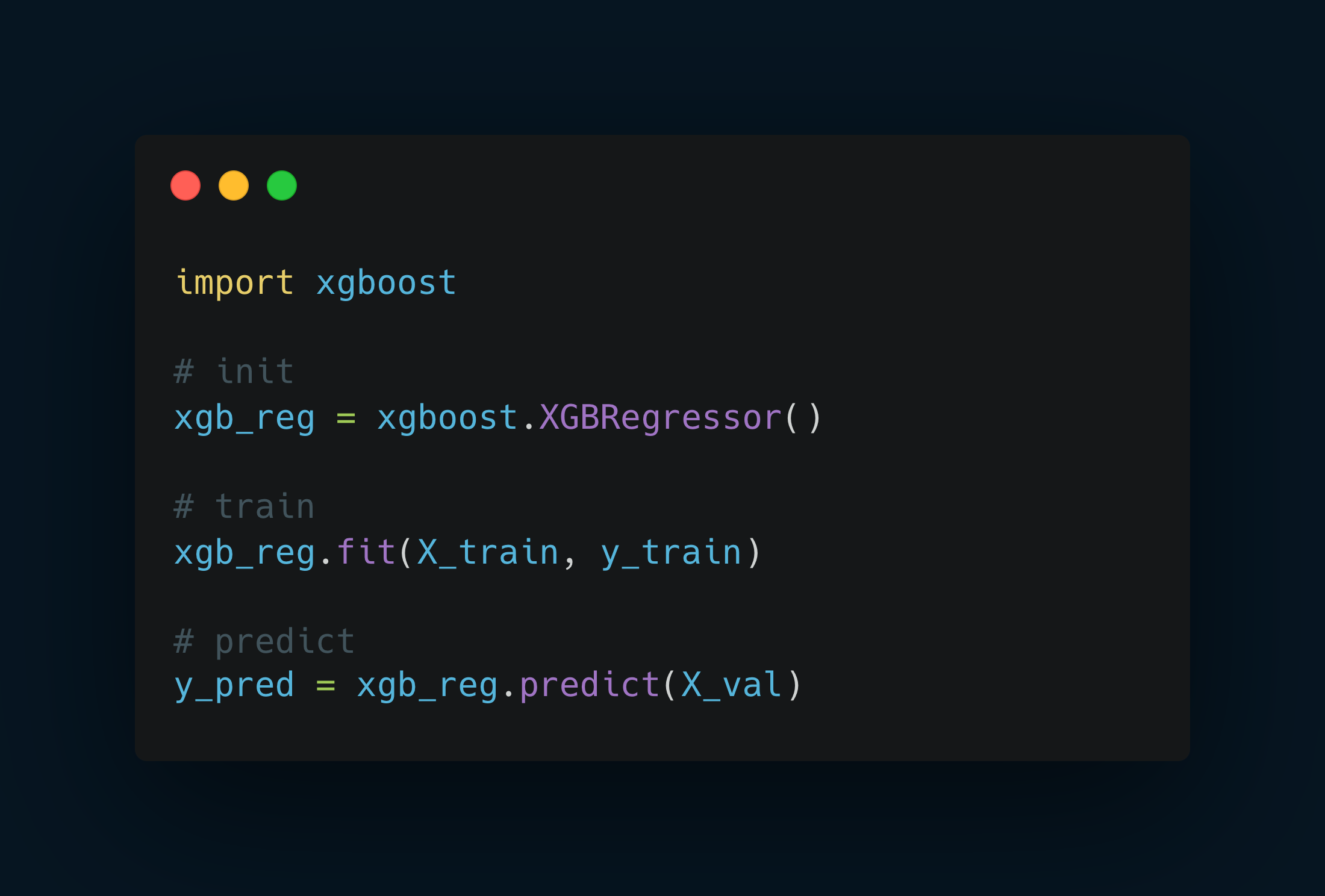
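
Scikit-Learn's `GradientBoostingRegressor` wraps the same idea in a single estimator. A minimal sketch, assuming the same kind of noisy quadratic toy data; the hyperparameter values are illustrative, not recommendations:

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor

# Noisy quadratic toy data (an assumption for illustration).
rng = np.random.default_rng(42)
X = rng.random((100, 1)) - 0.5
y = 3 * X[:, 0] ** 2 + 0.05 * rng.standard_normal(100)

# Three shallow trees; learning_rate scales each tree's contribution.
gbrt = GradientBoostingRegressor(
    max_depth=2,
    n_estimators=3,
    learning_rate=1.0,
    random_state=42,
)
gbrt.fit(X, y)

# Predict for a single new instance.
prediction = gbrt.predict(np.array([[0.4]]))
print(prediction)
```

A smaller `learning_rate` usually needs more trees to fit the training set, but it tends to generalize better; this shrinkage is a common regularization technique.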

Gradient Boosting in XGBoost library

XGBoost, short for Extreme Gradient Boosting, is a well-known library with a Python package that offers an optimized implementation of the Gradient Boosting algorithm.

Conclusion

AdaBoost and Gradient Boosting both turn a sequence of weak learners into a strong predictor, which makes them powerful tools for predictive modeling.

AdaBoost concentrates each new predictor on the hard-to-predict instances that its predecessors underfit, while Gradient Boosting fits each new predictor to the residual errors of the ensemble so far.

The introduction of libraries like XGBoost further enhances the accessibility and efficiency of these techniques, making advanced machine learning methods more attainable for data scientists and enthusiasts alike.

Whether you're a seasoned professional or a budding enthusiast, embracing these methods can lead to more accurate, efficient, and impactful data-driven solutions.
