Kubernetes on Desktop - 2: Load Balancing Evolution in Kubernetes on Docker Desktop

JERRY ISAAC

Introduction:

As mentioned in my previous blog, Kubernetes on Desktop, this is part 2 of the series on learning Kubernetes on a desktop. In this part we are going to learn how to balance load, i.e. network traffic, between the pods of an application so that no single pod is overloaded and the application stays available to all users at all times. This makes load balancing a vital part of maintaining and optimizing an application.

If you like my content subscribe here

Load balancing in Kubernetes:

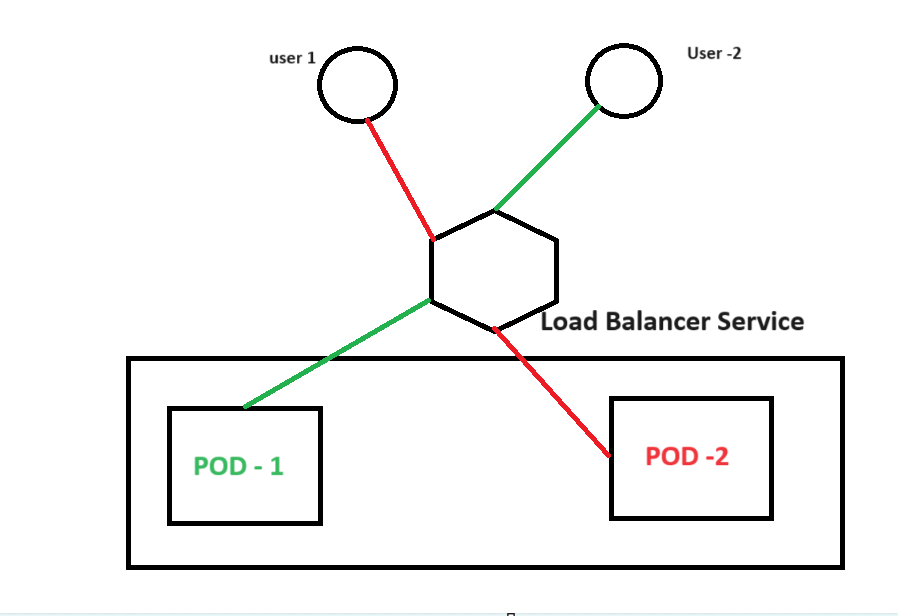

Before going into the steps, we need a clear idea of what we are doing, why we need it, and how it works. In Kubernetes, a LoadBalancer is a type of Service that exposes a set of pods at a single address and spreads incoming traffic across them. Even though this Service type is not the most commonly used way to expose applications in Kubernetes, understanding the concept helps us conquer the world of Kubernetes.

As the diagram above shows, the LoadBalancer Service spreads traffic across all the pods, so no pod is overloaded and the user experience stays smooth.

Step 1 :

Let's begin!! First of all, you need to set up Kubernetes on your desktop. If you haven't yet, check out Kubernetes on Desktop.

All the files needed for this are in my GitHub repo; clone it for your practice.

Here I have a Dockerfile that just packages my index.html. I used that file to build a Docker image called load:
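The real Dockerfile lives in the repo, so treat the following only as a rough sketch of what such a file could look like. It assumes the image is based on the stock nginx image and that index.html sits next to the Dockerfile; `write_sample_dockerfile` is a hypothetical helper name used just for this illustration.

```shell
#!/bin/sh
# write_sample_dockerfile DIR
# Writes an illustrative Dockerfile into DIR.
# Assumption: the image is built on nginx and only adds index.html.
# The author's actual Dockerfile may differ.
write_sample_dockerfile() {
  cat > "$1/Dockerfile" <<'EOF'
FROM nginx
COPY index.html /usr/share/nginx/html/index.html
EOF
}
```

Calling `write_sample_dockerfile .` in a folder that contains an index.html gives you something the build command can work with.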

    docker build -t load .

Now I have 2 deployment files, deployment.yaml and deployment1.yaml:

deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kubeloadbalancer
    spec:
      selector:
        matchLabels:
          app: kubeloadbalancer
      template:
        metadata:
          labels:
            app: kubeloadbalancer
        spec:
          containers:
            - name: kubeloadbalancer
              image: load
              imagePullPolicy: Never
              ports:
                - containerPort: 80

deployment1.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kubeloadbalancer2
    spec:
      selector:
        matchLabels:
          app: kubeloadbalancer
      template:
        metadata:
          labels:
            app: kubeloadbalancer
        spec:
          containers:
            - name: kubeloadbalancer2
              image: nginx
              ports:
                - containerPort: 80

If we compare these two files, deployment.yaml uses the Docker image that we built (with imagePullPolicy: Never, so Kubernetes uses the local image instead of trying to pull it), while deployment1.yaml uses the stock nginx image. We have purposely kept the app: kubeloadbalancer label the same in both files so that the load balancer Service selects the pods of both deployments.

Step 2

Now we need to create the load balancer Service that directs incoming traffic to the pods, so create a YAML file named loadbalancer_svc.yaml:

    apiVersion: v1
    kind: Service
    metadata:
      name: kubeloadbalancer
    spec:
      selector:
        app: kubeloadbalancer
      type: LoadBalancer
      ports:
        - port: 8083
          protocol: TCP
          targetPort: 80

What we are doing here is creating a LoadBalancer Service whose selector matches the app: kubeloadbalancer label that both deployment files put on their pods. The Service listens on port 8083 and forwards each connection to port 80 (the targetPort) on one of those pods, so traffic arriving at the Service is spread across the pods of both deployments. Note that with kube-proxy's default iptables mode a pod is picked at random for each new connection, so the split is roughly even rather than strictly round-robin.
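How evenly the connections are spread depends on how kube-proxy picks a backend. Assuming it runs in its default iptables mode, which chooses a pod at random for each new connection instead of rotating strictly, the split is only approximately even. The small awk simulation below is purely illustrative: it does not talk to the cluster, and `simulate_random_backends`, `pod-a` and `pod-b` are made-up names.

```shell
#!/bin/sh
# simulate_random_backends CONNECTIONS SEED
# Simulates CONNECTIONS new connections, each assigned to one of two
# pods with equal probability, and prints how many each pod received.
# Illustration only: this models random selection, not kube-proxy itself.
simulate_random_backends() {
  awk -v n="$1" -v seed="$2" 'BEGIN {
    srand(seed)
    a = 0; b = 0
    for (i = 0; i < n; i++) {
      if (rand() < 0.5) a++; else b++
    }
    printf "pod-a=%d pod-b=%d\n", a, b
  }'
}
```

Running `simulate_random_backends 1000 42` should print two counts that add up to 1000 and are close to, but usually not exactly, 500 each.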

    kubectl apply -f deployment.yaml
    kubectl apply -f deployment1.yaml
    kubectl apply -f loadbalancer_svc.yaml

These commands start the pods of the 2 deployments and the Service.

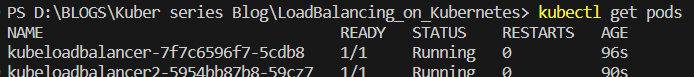

Ensure the pods are running

kubectl get pods

Ensure the service is running

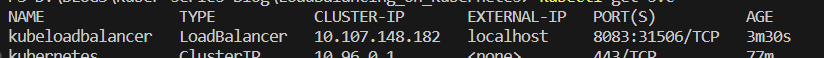

kubectl get svc

As you can see, the service is exposed on the port we declared in the YAML file.
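A running Service does not by itself prove that it found both deployments. One way to check, sketched here, is to count the pod IPs listed in the Service's Endpoints object. The helper names `count_ips` and `service_backend_count` are hypothetical; the kubectl call assumes the Service is named kubeloadbalancer as in the YAML above.

```shell
#!/bin/sh
# count_ips "IP1 IP2 ..."
# Prints how many whitespace-separated entries the list contains.
count_ips() {
  # Intentionally unquoted so the shell splits the list on whitespace.
  set -- $1
  echo "$#"
}

# service_backend_count
# Asks the cluster for the pod IPs behind the kubeloadbalancer Service
# and prints how many there are. Requires kubectl and a running cluster.
service_backend_count() {
  ips=$(kubectl get endpoints kubeloadbalancer \
        -o jsonpath='{.subsets[*].addresses[*].ip}')
  count_ips "$ips"
}
```

With one pod per deployment, `service_backend_count` should report 2 once both pods are ready. A result of 1 or 0 usually means a label does not match the selector or a pod is not running yet.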

Result:

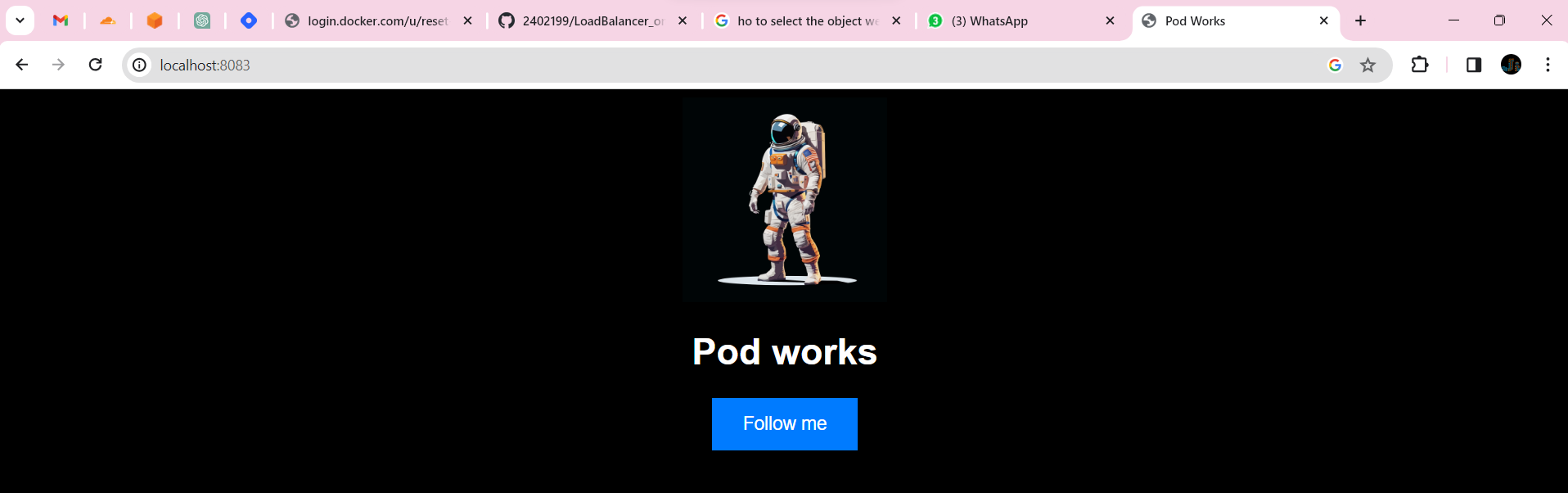

Now that everything is set up, opening http://localhost:8083 in a browser should show the output above.

It works! This is the content served by the pod from deployment.yaml. Let's check from another browser, which opens a fresh connection, to make sure that our load balancer is doing its work.

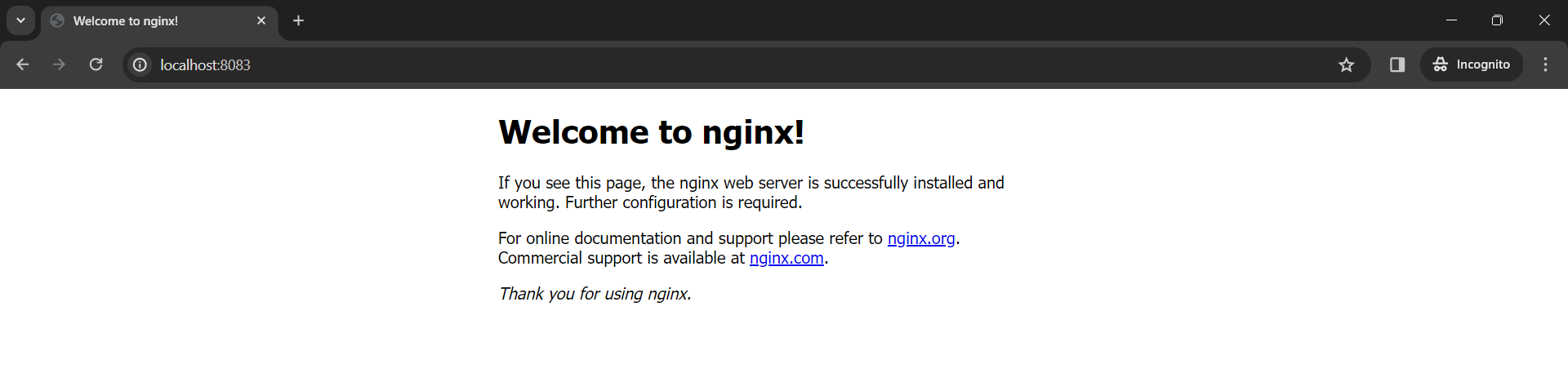

Voila!!! Here we go: we can see the default nginx page served by the pod from deployment1.yaml. This proves that load balancing works in Kubernetes, and don't forget that we are doing all of this at zero expense on our desktop.
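Switching browsers works, but the same check can be repeated from a terminal. The sketch below sends several separate curl requests and tallies which page came back. It assumes that the stock nginx page contains the text "Welcome to nginx" and that the custom index.html does not; `classify_page` and `probe_service` are hypothetical helper names.

```shell
#!/bin/sh
# classify_page
# Reads an HTML page on stdin and prints which backend likely served it.
# Assumption: only the stock nginx page contains "Welcome to nginx".
classify_page() {
  if grep -q 'Welcome to nginx'; then
    echo nginx-default
  else
    echo custom-page
  fi
}

# probe_service [URL] [COUNT]
# Sends COUNT separate requests (each curl call opens a new connection)
# and prints how many responses came from each kind of page.
probe_service() {
  url=${1:-http://localhost:8083}
  count=${2:-20}
  i=0
  while [ "$i" -lt "$count" ]; do
    curl -s "$url" | classify_page
    i=$((i + 1))
  done | sort | uniq -c
}
```

Running `probe_service http://localhost:8083 20` should list both nginx-default and custom-page with counts that add up to 20, though the exact split will vary from run to run.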

Next up in this series, we are going to learn how we can auto scale in Kubernetes on a desktop

Happy Coding !!!

If you like my content, subscribe here: https://blog.jerrycloud.in/newsletter

Written by

JERRY ISAAC

I am a DevOps Engineer with 3+ years of experience. I love sharing my tech knowledge on DevOps and I love writing, which is how I started blogging.