Getting started with LangChain and Building your very first News Article Summarizer

Shantanu Kumar

Shantanu Kumar

LangChain is a powerful framework that simplifies the process of building advanced language model applications.

LangChain is a fantastic library that makes it super easy for developers to create robust applications using large language models (LLMs) and other computational resources.

In this guide, I'll walk you through how LangChain works and show you some cool things you can do, like building a news article summarizer, I'll get you started quickly with a simple guide. Let's dive in!

What is LangChain?

What is LangChain? LangChain is a powerful framework designed to help developers build full applications using language patterns. It provides a set of tools, components and interfaces that simplify the creation of applications supported by large-scale language models (LLM) and conversational models. LangChain makes it easy to manage interactions with language models, chain multiple components, and integrate additional resources such as APIs and databases.

Now LangChain has numerous core concepts. Let's go through them one by one:

- Building Blocks and Sequences

In LangChain, components are like building blocks that can be combined to make strong applications. A chain is a series of these components (or other chains) put together to do a specific job. For instance, a chain might include a prompt template, a language model, and an output parser, all working together to handle what the user inputs, create a response, and process the output.

- Creating Prompts and Values

A Prompt Template creates a PromptValue, which is what eventually goes to the language model. Prompt Templates help change user input and other dynamic information into a format that the language model can understand. PromptValues are like classes with methods that can be turned into the exact input types each model type needs, such as text or chat messages.

- Choosing Examples Dynamically

Example Selectors are handy when you want to include examples in a prompt in a dynamic way. They take what the user says and provide a list of examples to use in the prompt, making it more powerful and specific to the situation.

- Organizing Model Responses

Output Parsers structure language model responses into a more usable format. They have two main jobs: giving instructions on how to format the output and turning the language model's response into a structured format. This makes it easier to work with the output data in your application.

- Managing Documents

Indexes organize documents to make it easier for language models to use them. Retrievers fetch relevant documents and combine them with language models. LangChain has tools for working with different types of indexes and retrievers, like vector databases and text splitters.

- Recording Chat History

LangChain mainly works with language models through a chat interface. The ChatMessageHistory class remembers all past chat interactions, which can then be given back to the model, summarized, or combined in other ways. This keeps the context and helps the model understand the conversation better.

- Decision-Making Entities and Tool Collections

Agents make decisions in LangChain. They can choose which tool to use based on what the user says. Toolkits are groups of tools that, when used together, can do a specific job. The Agent Executor is in charge of running agents with the right tools.

Understanding and using these main ideas lets you use LangChain to make advanced language model applications that can adapt, work efficiently, and handle complicated tasks.

LangChain Agent:

A LangChain Agent is a decision-making entity within the framework. It possesses a toolbox of tools and can determine which tool to employ based on user input. Agents are instrumental in constructing sophisticated applications that necessitate adaptive and context-specific responses, especially in scenarios where the interaction sequence depends on user input and other variables.

Using LangChain:

To utilize LangChain, a developer initiates by importing essential components and tools like Large Language Models (LLMs), chat models, agents, chains, and memory features. These components are combined to develop applications capable of comprehending, processing, and responding to user inputs.

LangChain offers a range of components tailored for specific purposes, such as personal assistants, document-based question answering, chatbots, tabular data querying, API interaction, extraction, evaluation, and summarization.

LangChain Model:

A LangChain model is an abstraction representing various model types used in the framework. There are three primary types:

Large Language Models (LLMs): These models take a text input and return a text output, forming the backbone of many language model applications.

Chat Models: Backed by a language model but with a structured API, these models process a list of Chat Messages as input and return a Chat Message, facilitating conversation history management and context maintenance.

Text Embedding Models: These models take text input and return a list of floats representing the text's embeddings. These embeddings are useful for tasks like document retrieval, clustering, and similarity comparisons.

Developers can select the appropriate LangChain model for their specific use case and leverage provided components for application development.

Key Features of LangChain:

LangChain is crafted to assist developers in six primary areas:

LLMs and Prompts: Simplifies prompt management, optimization, and provides a universal interface for all LLMs. Includes utilities for working effectively with LLMs.

Chains: Sequences of calls to LLMs or other utilities, with a standard interface, integration with various tools, and end-to-end chains for popular applications.

Data Augmented Generation: Allows chains to interact with external data sources for generation, aiding tasks like summarizing long texts or answering questions using specific data sources.

Agents: Enables LLMs to make decisions, take actions, check results, and continue until completion. Standard interface, diverse agent options, and end-to-end agent examples are provided.

Memory: Standard memory interface to maintain state between chain or agent calls, offering various memory implementations and examples of chains or agents utilizing memory.

Evaluation: Provides prompts and chains to help developers assess generative models using LLMs, addressing challenges in evaluating such models with traditional metrics.

Use Cases:

LangChain offers a range of applications, including:

Question-Answering Apps: LangChain is ideal for developing applications that answer questions based on specific documents. These apps can analyze document content and generate responses to user queries.

Chatbot Development: LangChain provides essential tools for building chatbots. These chatbots leverage large language models to comprehend user input and generate meaningful responses.

Interactive Agents: Developers can use LangChain to create agents that engage with users, make decisions based on user input, and carry out tasks to completion. These agents find applications in customer service, data collection, and other interactive scenarios.

Data Summarization: LangChain is suitable for developing applications that summarize lengthy documents. This feature proves particularly beneficial for processing extensive texts, articles, or reports.

Document Retrieval and Clustering: Leveraging text embedding models, LangChain facilitates document retrieval and clustering. This capability is valuable for applications requiring the grouping of similar documents or retrieving specific documents based on defined criteria.

API Interaction: LangChain seamlessly integrates with various APIs, empowering developers to build applications that interact with diverse services and platforms. This versatility proves valuable across scenarios, from integrating with social media platforms to accessing weather data.

Limitations of LangChain:

Like any tool, LangChain has its share of limitations that should be considered:

Reliance on External Resources: Similar to many tools, LangChain's effectiveness and performance depend significantly on external computational resources like APIs and databases. Changes in these resources can potentially impact LangChain's functionality, necessitating regular updates by developers.

Complexity: Despite offering a robust set of tools and features, LangChain's complexity may present challenges for beginners or developers not well-versed in the intricacies of language models. Mastering the full potential of the framework may require a significant investment of time and experience.

Processing Time and Efficiency: Depending on the application's complexity and the volume of data being processed, LangChain may encounter efficiency issues. Large-scale applications dealing with substantial data or intricate language models might face performance challenges due to computational intensity.

Dependency on Large Language Models: LangChain heavily relies on large language models. If these models are not well-trained or encounter scenarios beyond their training data, applications developed using LangChain may produce less accurate or relevant results.

Building a News Article Summarizer

Now that we've discussed LangChain, it's time to build our very own news article summarizer.

Introduction:

In today's fast-paced world, many of us prefer quick summaries instead of reading lengthy paragraphs. This shift has reduced our attention spans compared to the past. In this project, we will build a news article summarizer. This tool will scrape online articles, extract their titles and text, and generate concise summaries. To accomplish this, we will utilize LangChain and ChatGPT.

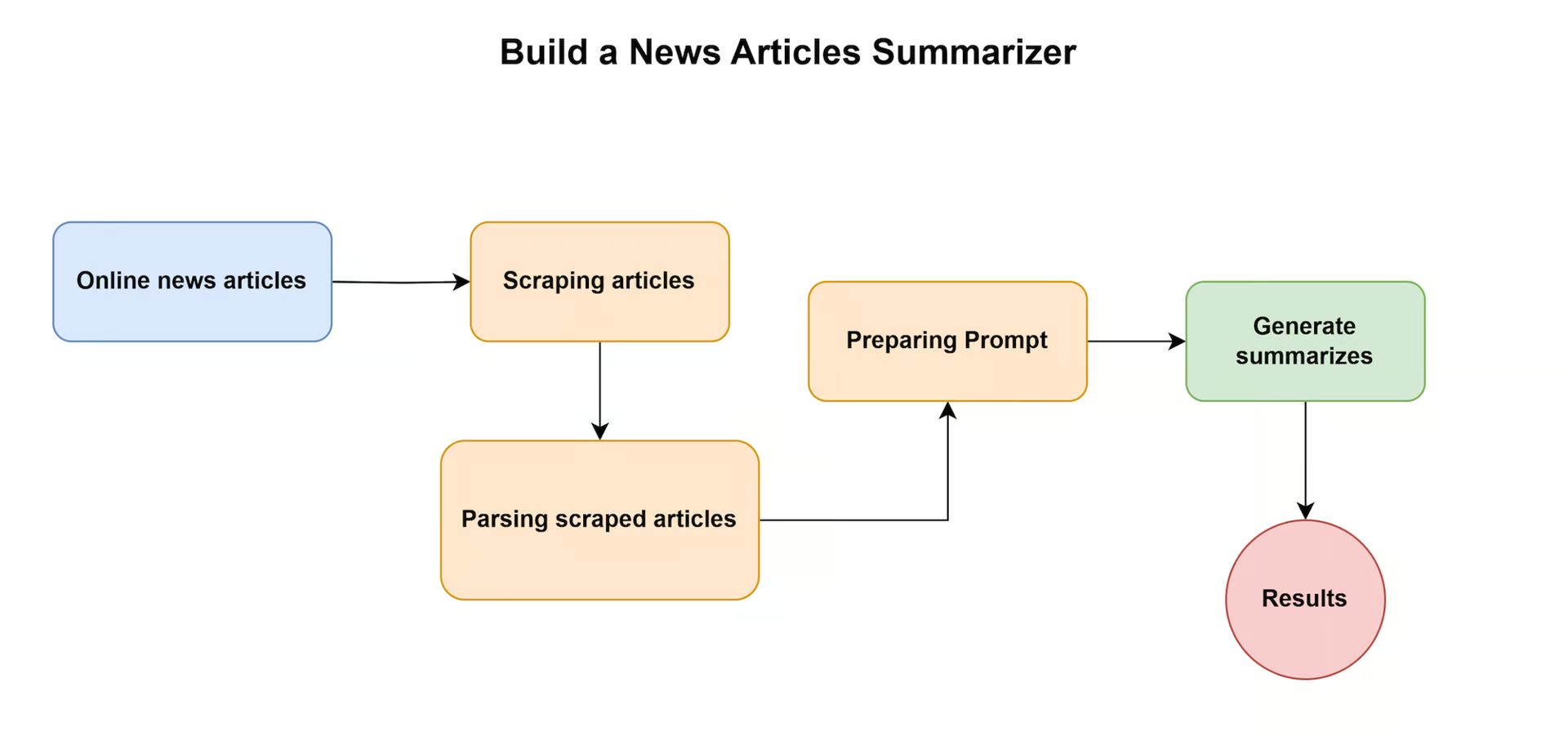

Before we begin, let's take a closer look at the project workflow to understand what we'll be doing.

Here are the steps in detail:

Install required libraries: To get started, ensure you have the necessary libraries installed:

requests,newspaper3k, andlangchain.Scrape articles: Use the

requestslibrary to scrape the content of the target news articles from their respective URLs.Extract titles and text: Employ the

newspaperlibrary to parse the scraped HTML and extract the titles and text of the articles.Preprocess the text: Clean and preprocess the extracted texts to make them suitable for input to ChatGPT.

Generate summaries: Utilize ChatGPT to summarize the extracted articles' text concisely.

Output the results: Present the summaries along with the original titles, allowing users to grasp the main points of each article quickly.

After familiarizing ourselves with the workflow, we now have a clear concept of how the process will unfold and what to expect at various stages.

This understanding will guide us in the development of a news article summarization tool, which will be instrumental in condensing lengthy articles into concise summaries.

Before we proceed, it's essential to acquire your OpenAI API key, which can be obtained through the OpenAI website. To do so, you must create an account and receive API access. Once logged in, go to the API keys section and make a copy of your API key.

After obtaining your API key you can save it in an environment variable to use it or you can directly save it in your code and use it. Remember if you are to post this code in public make sure to remove your API key from the code or else someone else might also use your API key and can access the key.

import os

os.environ["OPENAI_API_KEY"] = "<Your API Key>"

Also, install the required libraries that you will need during this project

langchain==0.0.208

deeplake==3.6.5

openai==0.27.8

tiktoken==0.4.0

The above can be installed by creating a requirements.txt file which can be installed by using the command

!pip install -r requirement.txt

Additionally, the install the newspaper3k package which is given below.

!pip install -q newspaper3k python-dotenv

Now, we’ll be taking a URL of an article to generate the summary. The following code fetches articles from a list of URLs using the requests library with a custom User-Agent header. It then extracts the title and text of each article using the newspaper library.

Now, Let’s understand the code to understand it more clearly.

- Firstly, We are using the

requestslibrary to make HTTP requests and thenewspaperlibrary to extract and parse the article content.

import requests

from newspaper import Article

NOTE: If we don't get the HTTP request from the website you won’t be able to fetch the data.

- Setting user-agent headers

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.82 Safari/537.36'

}

User-agent headers are added to the HTTP request headers. These headers inform the website that the request is coming from a web browser (Google Chrome in this case). This is done to mimic a web browser's behavior and avoid any restrictions that the website may impose on bots or automated requests.

- Defining the URL of the article to be summarized

article_url = "<https://www.artificialintelligence-news.com/2022/01/25/meta-claims-new-ai-supercomputer-will-set-records/>"

This is the URL that we want to summarize. (You can paste any other URL as per your choice)

- Creating a session object

session = requests.Session()

A Session object is created from the requests library. This allows you to stop certain parameters across multiple requests, such as headers and cookies.

- Making an HTTP request to fetch the article

try:

response = session.get(article_url, headers=headers, timeout=10)

Here, a GET request is sent to the specified article_url using the session.get() method. The headers are included in the request to mimic a web browser. The timeout parameter is set to 10 seconds, meaning that if the request takes longer than 10 seconds to complete, it will raise an exception.

- Checking the response status code

if response.status_code == 200:

This checks if the response from the website has a status code of 200, which indicates a successful HTTP request.

- Downloading and parsing the article

article = Article(article_url)

article.download()

article.parse()

An Article object is created from the newspaper library using the article_url. Then, the article content is downloaded and parsed from the website.

- Printing the title and text of the article

print(f"Title: {article.title}")

print(f"Text: {article.text}")

Now, if the article is successfully downloaded and parsed then it prints the title and the text.

- Handling exceptions

else:

print(f"Failed to fetch article at {article_url}")

except Exception as e:

print(f"Error occurred while fetching article at {article_url}: {e}")

Now, as mentioned if the status code is not 200 it means we didn't get the GET request successful and it will show the error as failed to fetch the article. The exception will occur if the timeout is greater than 10 seconds.

Now, when we write the code as a whole and print it,

import requests

from newspaper import Article

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.82 Safari/537.36'

}

article_url = "<https://www.artificialintelligence-news.com/2022/01/25/meta-claims-new-ai-supercomputer-will-set-records/>"

session = requests.Session()

try:

response = session.get(article_url, headers=headers, timeout=10)

if response.status_code == 200:

article = Article(article_url)

article.download()

article.parse()

print(f"Title: {article.title}")

print(f"Text: {article.text}")

else:

print(f"Failed to fetch article at {article_url}")

except Exception as e:

print(f"Error occurred while fetching article at {article_url}: {e}")

Output:

Title: Meta claims its new AI supercomputer will set records

Text: Ryan is a senior editor at TechForge Media with over a decade of experience covering the latest technology and interviewing leading industry figures. He can often be sighted at tech conferences with a strong coffee in one hand and a laptop in the other. If it's geeky, he’s probably into it. Find him on Twitter (@Gadget_Ry) or Mastodon (@gadgetry@techhub.social)

Meta (formerly Facebook) has unveiled an AI supercomputer that it claims will be the world’s fastest.

The supercomputer is called the AI Research SuperCluster (RSC) and is yet to be fully complete. However, Meta’s researchers have already begun using it for training large natural language processing (NLP) and computer vision models.

RSC is set to be fully built in mid-2022. Meta says that it will be the fastest in the world once complete and the aim is for it to be capable of training models with trillions of parameters.

“We hope RSC will help us build entirely new AI systems that can, for example, power real-time voice translations to large groups of people, each speaking a different language, so they can seamlessly collaborate on a research project or play an AR game together,” wrote Meta in a blog post.

“Ultimately, the work done with RSC will pave the way toward building technologies for the next major computing platform — the metaverse, where AI-driven applications and products will play an important role.”

For production, Meta expects RSC will be 20x faster than Meta’s current V100-based clusters. RSC is also estimated to be 9x faster at running the NVIDIA Collective Communication Library (NCCL) and 3x faster at training large-scale NLP workflows.

A model with tens of billions of parameters can finish training in three weeks compared with nine weeks prior to RSC.

Meta says that its previous AI research infrastructure only leveraged open source and other publicly-available datasets. RSC was designed with the security and privacy controls in mind to allow Meta to use real-world examples from its production systems in production training.

What this means in practice is that Meta can use RSC to advance research for vital tasks such as identifying harmful content on its platforms—using real data from them.

“We believe this is the first time performance, reliability, security, and privacy have been tackled at such a scale,” says Meta.

(Image Credit: Meta)

Want to learn more about AI and big data from industry leaders? Check out AI & Big Data Expo. The next events in the series will be held in Santa Clara on 11-12 May 2022, Amsterdam on 20-21 September 2022, and London on 1-2 December 2022.

Explore other upcoming enterprise technology events and webinars powered by TechForge here.

The next code imports essential classes and functions from the LangChain and sets up a ChatOpenAI instance with a temperature of 0 for controlled response generation. Additionally, it imports chat-related message schema classes, which enable the smooth handling of chat-based tasks. The following code will start by setting the prompt and filling it with the article’s content.

Temperature = 0 means that it will print a deterministic output.

from langchain.schema import (

HumanMessage

)

# we get the article data from the scraping part

article_title = article.title

article_text = article.text

# prepare template for prompt

template = """You are a very good assistant that summarizes online articles.

Here's the article you want to summarize.

==================

Title: {article_title}

{article_text}

==================

Write a summary of the previous article.

"""

prompt = template.format(article_title=article.title, article_text=article.text)

messages = [HumanMessage(content=prompt)]

It's essential to understand that templates play a crucial role as they instruct the chat model on its intended purpose. Think of templates as a way to guide ChatGPT on how to process and respond to the provided information effectively.

The HumanMessage is a structured way to describe what users say or type when chatting with a computer program. Imagine it as a standardized format to represent user messages.

The "ChatOpenAI" class is what we use to talk to the AI model. It's like the tool we use to have conversations with the computer program.

Now, let's talk about the "template." It's like a blueprint for a message. Instead of writing out the entire message, we use placeholders like {article_title} and {article_text} to stand in for the actual title and content of an article. Later, when we need to send the message, we fill in these placeholders with the real article title and text. This makes it easy to create different messages using the same template but with different information.

from langchain.chat_models import ChatOpenAI

# load the model

chat = ChatOpenAI(model_name="gpt-4", temperature=0)

Now, here we have used the “gpt-3.5-turbo” model as it’s a free version to use. Alternatively, if you have a subscription to the “gpt-4” model you can also you that too.

We loaded the model and set the temperature to 0. We’d use the chat() instance to generate a summary by passing a single HumanMessage object containing the formatted prompt. The AI model processes this prompt and returns a concise summary

# generate summary

summary = chat(messages)

print(summary.content)

Output:

Meta, formerly Facebook, has unveiled an AI supercomputer called the AI Research SuperCluster (RSC) that it claims will be the world's fastest once fully built in mid-2022. The aim is for it to be capable of training models with trillions of parameters and to be used for tasks such as identifying harmful content on its platforms. Meta expects RSC to be 20 times faster than its current V100-based clusters and 9 times faster at running the NVIDIA Collective Communication Library. The supercomputer was designed with security and privacy controls in mind to allow Meta to use real-world examples from its production systems in production training.

Now we can also tell our model to print the output in the form of a bullet list by just updating the prompt

# prepare template for prompt

template = """You are an advanced AI assistant that summarizes online articles into bulleted lists.

Here's the article you need to summarize.

==================

Title: {article_title}

{article_text}

==================

Now, provide a summarized version of the article in a bulleted list format.

"""

# format prompt

prompt = template.format(article_title=article.title, article_text=article.text)

# generate summary

summary = chat([HumanMessage(content=prompt)])

print(summary.content)

output:

- Meta (formerly Facebook) unveils AI Research SuperCluster (RSC), an AI supercomputer claimed to be the world's fastest.

- RSC is not yet complete, but researchers are already using it for training large NLP and computer vision models.

- The supercomputer is set to be fully built in mid-2022 and aims to train models with trillions of parameters.

- Meta hopes RSC will help build new AI systems for real-time voice translations and pave the way for metaverse technologies.

- RSC is expected to be 20x faster than Meta's current V100-based clusters in production.

- A model with tens of billions of parameters can finish training in three weeks with RSC, compared to nine weeks previously.

- RSC is designed with security and privacy controls to allow Meta to use real-world examples from its production systems in training.

- Meta believes this is the first time performance, reliability, security, and privacy have been tackled at such a scale.

Moreover, if you want to try more you can also modify your prompt to print in any language you want to(given it is recognized by the model you specify)

For example, if you want to print the output in Hindi you can do it by just modifying the prompt as:

template = """You are an advanced AI assistant that summarizes online articles into bulleted lists in Hindi.

Here's the article you need to summarize.

==================

Title: {article_title}

{article_text}

==================

Now, provide a summarized version of the article in a bulleted list format, in Hindi.

"""

prompt = template.format(article_title=article.title, article_text=article.text)

# generate summary

summary = chat([HumanMessage(content=prompt)])

print(summary.content)

Output:

- Meta (पहले फेसबुक) ने एक एआई सुपरकंप्यूटर लॉन्च किया है जिसे वह दावा करता है कि यह दुनिया का सबसे तेज होगा।

- इस सुपरकंप्यूटर का नाम एआई रिसर्च सुपरक्लस्टर (आरएससी) है और यह पूरी तरह से तैयार नहीं हुआ है।

- आरएससी का निर्माण मध्य-2022 में पूरा होगा।

- इसका उद्देश्य है कि यह त्रिलियन पैरामीटर वाले मॉडल्स को ट्रेन करने की क्षमता रखे।

- इसके उपयोग से रियल-टाइम आवाज अनुवाद और बड़े समूहों में लोगों के बीच सहज सहयोग के लिए नई एआई सिस्टम बनाने में मदद मिलेगी।

- आरएससी के उत्पादन के लिए, यह मेटा के मौजूदा वी100-आधारित क्लस्टर्स की तुलना में 20 गुना तेज होगा।

- आरएससी को नवीनतम एनविडिया कलेक्टिव कम्युनिकेशन लाइब्रेरी (एनसीसीएल) चलाने में 9 गुना और बड़े-स्केल एनएलपी वर्कफ़्लो को ट्रेन करने में 3 गुना तेज होगा।

- आरएससी के उपयोग से बिलियनों के पैरामीटर वाले मॉडल्स को 3 हफ्तों में ट्रेन करने में सक्षम होगा।

- मेटा कहता है कि इसका पिछला एआई अनुसंधान ढांचा केवल ओपन सोर्स और अन्य सार्वजनिक डेटासेट का उपयोग करता था।

- आरएससी को डिज़ाइन करते समय सुरक्षा और गोपनीयता नियंत्रणों का ध्यान रखा गया था ताकि मेटा इसे अपने उत्पादन प्रणालियों से वास्तविक उदाहरणों का उपयोग कर सके।

- इसका मतलब है कि मेटा अपने प्लेटफ़ॉर्म पर हानिकारक सामग्री की पहचान करने जैसे महत्वपूर्ण कार्यों के लिए एआई अनुसंधान को आगे बढ़ा सकता है।

- मेटा कहता है कि इस तरह के प्रदर्शन, विश्वसनीयता, सुरक्षा और गोपनीयता को इस प्रकार के स्केल पर पहली बार संघटित किया गया है।

Hooray, you’ve just created your very own News article summarizer.

Feel free to reach out and connect with me

Subscribe to my newsletter

Read articles from Shantanu Kumar directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Shantanu Kumar

Shantanu Kumar

I am a passionate and curious person who always strives to learn new things and improve myself. I started my journey of coding in my intermediate class (11th standard) and since then I have developed a strong foundation in SQL, Java, C, and Python. I have participated in multiple hackathons and received a consolation prize for one of my innovative solutions. I am interested in DevOps, ML, Deep Learning, and cloud computing and I keep myself updated with the latest technologies and trends in these fields. I am looking for opportunities to apply my skills and knowledge in real-world projects and collaborate with other like-minded professionals.