Fan-in and Fan-out Architecture Design Pattern on AWS

Raju Mandal

Fan-out and Fan-in refer to breaking a task into subtasks, executing multiple functions concurrently, and then aggregating the result.

They are two patterns that are used together. In the fan-out pattern, messages are delivered to workers, each of which receives a partitioned subtask of the original task. The fan-in pattern collects the results of all the individual workers, aggregates them, stores the outcome, and sends an event signaling that the work is done.

“Large jobs or tasks can easily exceed the execution time limit on Lambda functions. Using a divide-and-conquer strategy can help mitigate the issue.

The work is split between different lambda workers. Each worker will process the job asynchronously and save its subset of the result in a common repository.

The final result can be gathered and stitched together by another process or it can be queried from the repository itself.”
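To make the divide-and-conquer idea concrete, here is a minimal in-process sketch. It is only an illustration: threads stand in for Lambda workers, summing numbers stands in for the real work, and the function names (`fan_out`, `worker`, `fan_in`, `run`) are made up for this example.

```python
from concurrent.futures import ThreadPoolExecutor


def fan_out(items, chunk_size):
    """Split the original task into fixed-size subtasks."""
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]


def worker(chunk):
    """Process one subtask; here the 'work' is just summing numbers."""
    return sum(chunk)


def fan_in(partial_results):
    """Aggregate the partial results into the final answer."""
    return sum(partial_results)


def run(items, chunk_size=3, max_workers=4):
    chunks = fan_out(items, chunk_size)
    # Each chunk is handled concurrently, like independent workers would.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        partials = list(pool.map(worker, chunks))
    return fan_in(partials)


if __name__ == "__main__":
    print(run(list(range(10))))  # prints 45
```

On AWS the same three roles map to separate components: a function that splits and publishes, workers that process, and a function that aggregates.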

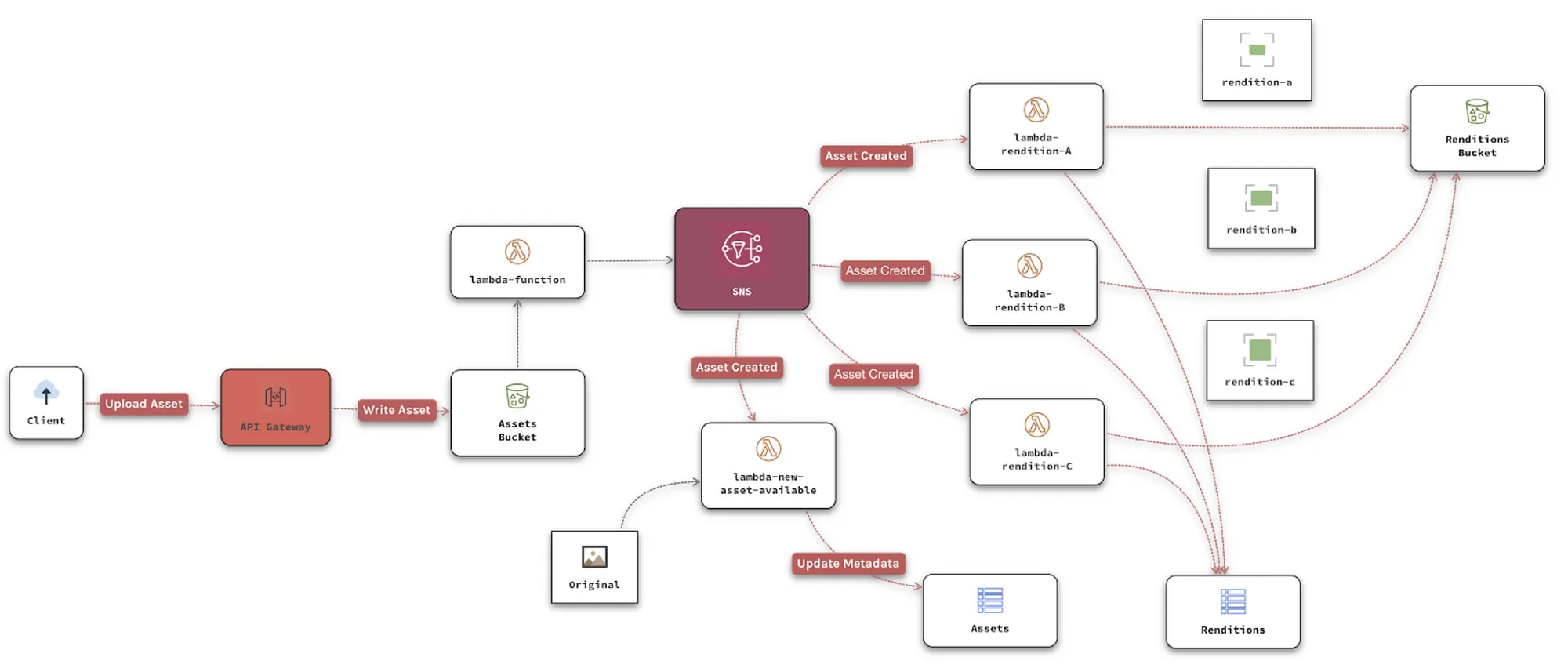

Resizing images is one of the most common serverless use cases, and it illustrates the fan-out half of the pattern well.

A client uploads a raw image to the Assets S3 Bucket. API Gateway has an integration to handle uploading directly to S3.

S3 triggers a Lambda function. Having that one function do all the work can run into Lambda's resource limits. Instead, it publishes an Asset Created event to SNS so that the processing Lambdas can get to work.
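A minimal sketch of that publishing function might look like the following. The topic ARN, the `AssetCreated` message shape, and the optional `sns_client` parameter (added so the handler can be exercised without AWS) are assumptions for illustration, not part of the original design.

```python
import json
from urllib.parse import unquote_plus

# Hypothetical topic ARN; replace with the real one.
TOPIC_ARN = "arn:aws:sns:us-east-1:123456789012:asset-created"


def build_asset_events(s3_event):
    """Turn an S3 notification into one Asset Created message per object."""
    events = []
    for record in s3_event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        events.append({"type": "AssetCreated", "bucket": bucket, "key": key})
    return events


def handler(event, context, sns_client=None):
    if sns_client is None:
        import boto3  # imported lazily so the sketch runs without AWS
        sns_client = boto3.client("sns")
    events = build_asset_events(event)
    for asset_event in events:
        # Every subscriber of the topic receives its own copy: the fan-out.
        sns_client.publish(TopicArn=TOPIC_ARN, Message=json.dumps(asset_event))
    return {"published": len(events)}
```

The function does no processing itself; it only announces that a new asset exists, which keeps it short-lived regardless of how many subscribers are added later.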

Three Lambda functions, shown on the right, handle resizing. Each creates a different image size and writes the result to the Renditions bucket.
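As a rough sketch of what each resizing worker has to decide, the snippet below reads the Asset Created message from the SNS envelope and works out the target key and size for one rendition. The rendition names, widths, key layout, and message shape are all assumptions; the actual pixel resizing (which would need an imaging library) is deliberately left out.

```python
import json
import posixpath

# Hypothetical rendition names and maximum widths in pixels.
RENDITION_WIDTHS = {"thumbnail": 150, "medium": 800, "large": 1600}


def scaled_size(width, height, target_width):
    """Scale down to target_width, keeping the aspect ratio; never upscale."""
    if width <= target_width:
        return width, height
    return target_width, max(1, round(height * target_width / width))


def rendition_key(source_key, rendition):
    """Assumed layout: 'raw/cat.jpg' -> 'renditions/cat/thumbnail.jpg'."""
    stem, ext = posixpath.splitext(posixpath.basename(source_key))
    return f"renditions/{stem}/{rendition}{ext}"


def plan_renditions(sns_event, rendition):
    """Describe the output one worker should produce for each message."""
    plans = []
    for record in sns_event.get("Records", []):
        # SNS delivers the published message as a JSON string.
        asset = json.loads(record["Sns"]["Message"])
        plans.append({
            "source": asset["key"],
            "target": rendition_key(asset["key"], rendition),
            "max_width": RENDITION_WIDTHS[rendition],
        })
    return plans
```

Each of the three workers would call `plan_renditions` with its own rendition name, so adding a fourth size means adding a subscriber rather than changing existing code.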

The Lambda at the bottom reads the metadata from the original source (location, author, date, camera, size, and so on) and adds the new asset to the Assets table of the DAM (digital asset management system) in DynamoDB, but does not yet mark it as ready for use. Smart auto-tagging, text extraction, and content moderation could be added to the processing Lambdas later with Rekognition.

For the fan-in part of the DAM, a Lambda function listens to the Renditions bucket. When the bucket changes, it checks whether all renditions are ready and, if they are, marks the asset as ready for use.
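The fan-in check can be sketched as follows. The rendition names, the `renditions/<asset_id>/<rendition>.jpg` key layout, the `Assets` table name, and the `asset_id` and `status` attributes are assumptions for illustration; the optional `s3_client` and `table` parameters exist only so the handler can be tried without AWS.

```python
RENDITIONS = ("thumbnail", "medium", "large")  # hypothetical rendition names


def asset_id_from_key(key):
    """Assumed key layout: 'renditions/<asset_id>/<rendition>.jpg'."""
    return key.split("/")[1]


def expected_keys(asset_id):
    return {f"renditions/{asset_id}/{name}.jpg" for name in RENDITIONS}


def all_renditions_ready(asset_id, existing_keys):
    """True once every expected rendition exists in the bucket."""
    return expected_keys(asset_id) <= set(existing_keys)


def handler(event, context, s3_client=None, table=None):
    if s3_client is None or table is None:
        import boto3  # imported lazily so the sketch runs without AWS
        if s3_client is None:
            s3_client = boto3.client("s3")
        if table is None:
            table = boto3.resource("dynamodb").Table("Assets")
    ready = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        asset_id = asset_id_from_key(record["s3"]["object"]["key"])
        listing = s3_client.list_objects_v2(
            Bucket=bucket, Prefix=f"renditions/{asset_id}/")
        keys = [obj["Key"] for obj in listing.get("Contents", [])]
        if all_renditions_ready(asset_id, keys):
            # 'status' is a DynamoDB reserved word, hence the #s alias.
            table.update_item(
                Key={"asset_id": asset_id},
                UpdateExpression="SET #s = :ready",
                ExpressionAttributeNames={"#s": "status"},
                ExpressionAttributeValues={":ready": "READY"},
            )
            ready.append(asset_id)
    return {"ready": ready}
```

Because the update only ever sets the same value, it is safe if two invocations observe the complete set at the same time and both mark the asset as ready.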

Given the event-driven nature of serverless and the resource limits of Lambda functions, we favor this type of choreography over orchestration.

Not all workloads can be split into pieces small enough for Lambda functions. Failure should be considered in both flows; otherwise, a task might stay unfinished forever. Leverage Lambda's retry strategies and dead-letter queues. Any task that can take over 15 minutes should use containers instead of Lambda functions, while sticking to the choreography approach.
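The retry-then-dead-letter idea can be illustrated in plain Python. This is an in-process analogy only: on AWS, retries and dead-letter queues are configured on the function or event source rather than written by hand, and the name `process_with_retries` and its parameters are invented for this sketch.

```python
def process_with_retries(task, worker, dead_letters, max_attempts=3):
    """Try a task a few times; park it in dead_letters if it never succeeds."""
    last_error = None
    for _ in range(max_attempts):
        try:
            return worker(task)
        except Exception as exc:  # a real worker would catch narrower errors
            last_error = exc
    # Nothing is silently lost: the failed task is kept for later inspection.
    dead_letters.append({
        "task": task,
        "error": repr(last_error),
        "attempts": max_attempts,
    })
    return None
```

The point of the dead-letter list is that a failed subtask stays visible, so the fan-in side can tell "still running" apart from "will never arrive".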

Written by

Raju Mandal

A digital entrepreneur, actively working as a data platform consultant. A seasoned data engineer/architect with experience in the Fintech and Telecom industries, a passion for data monetization, and a penchant for navigating the intricate realms of multi-cloud data solutions.