Deploy a Static Website Using Amazon S3 (detailed)

Godbless Lucky Osu

INTRODUCTION

One of the simplest architectures you can implement on AWS is a static website hosted entirely on Amazon S3 (Simple Storage Service). It is especially suitable for businesses that want to establish a web presence and showcase details such as their location, opening and closing hours, services and products, achievements, testimonials, and who they are. Simply put, in addition to its other use cases, Amazon S3 can host a static website once you configure the bucket for website hosting. It is important to note, though, that S3 does not support server-side scripting (that is, dynamic websites); AWS offers other services for hosting dynamic websites.

S3 is ideal because of the benefits it offers, which include scalability, durability (eleven 9s, or 99.999999999%), availability (four 9s, or 99.99%), fine-grained security, and performance.
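To make the "nines" a bit more concrete, here is a small back-of-the-envelope calculation. It is only an illustration of what the percentages imply, assuming they are treated as yearly design targets; the function names are my own and are not part of any AWS tool.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def downtime_minutes_per_year(availability: float) -> float:
    """Minutes per year a service may be unavailable at the given availability."""
    return (1 - availability) * MINUTES_PER_YEAR


def expected_objects_lost_per_year(object_count: int, durability: float) -> float:
    """Average number of objects expected to be lost in one year."""
    return object_count * (1 - durability)


if __name__ == "__main__":
    # Four 9s of availability (99.99%): roughly 52.6 minutes per year.
    print(round(downtime_minutes_per_year(0.9999), 1))
    # Eleven 9s of durability with 10 million stored objects: about 0.0001
    # objects per year, i.e. one object every 10,000 years on average.
    print(expected_objects_lost_per_year(10_000_000, 0.99999999999))
```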

In Amazon S3, data files are stored as objects (you can read more about object storage here: https://cloud.google.com/learn/what-is-object-storage). Objects are placed in a bucket that you define yourself. The name of your bucket must be globally unique across all AWS Regions. You can store as many objects as you need; however, a single object cannot be larger than 5 TB.

To get more details about S3, including its storage classes and storage management, see the Amazon S3 Developer Guide.

This post focuses on using Amazon Simple Storage Service (Amazon S3) to build a static website and on implementing architectural best practices to protect and manage your data.

Here’s what we’ll be doing:

- Deploy a static website on Amazon S3.

- Enhance data security on Amazon S3 through a protective measure.

- Optimize cost by implementing a comprehensive data lifecycle plan within Amazon S3.

Alright, let's dive in already!

DEPLOY A STATIC WEBSITE ON AMAZON S3

Note: Before starting, make sure you have all the website code extracted and ready for uploading. I got the demo code for this from https://www.free-css.com/free-css-templates/page290/brighton

To host the static website, we will create an S3 bucket, upload the code to it, and create a bucket policy that makes the website contents publicly readable. Because all objects uploaded to an S3 bucket are private by default, the policy also ensures that any new objects you upload later (for example, updated code) become publicly accessible automatically.

First Task: Creating an S3 bucket to host the static website contents.

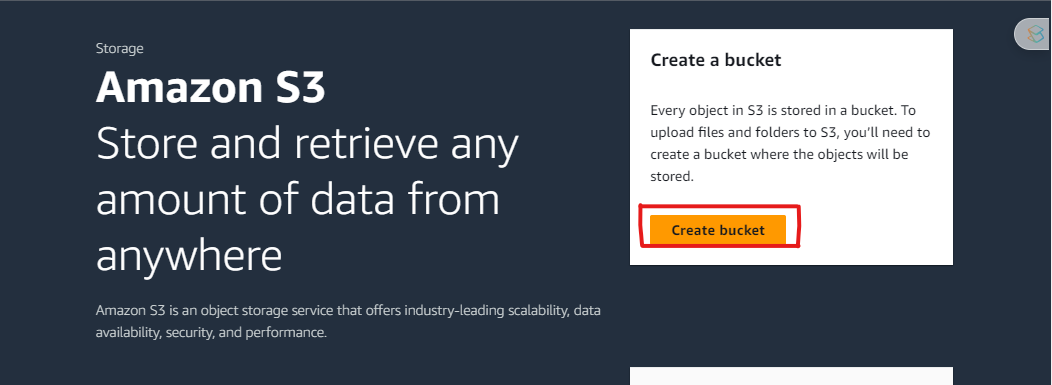

- Use the search feature in the AWS console to search for S3, then click on the Create bucket button.

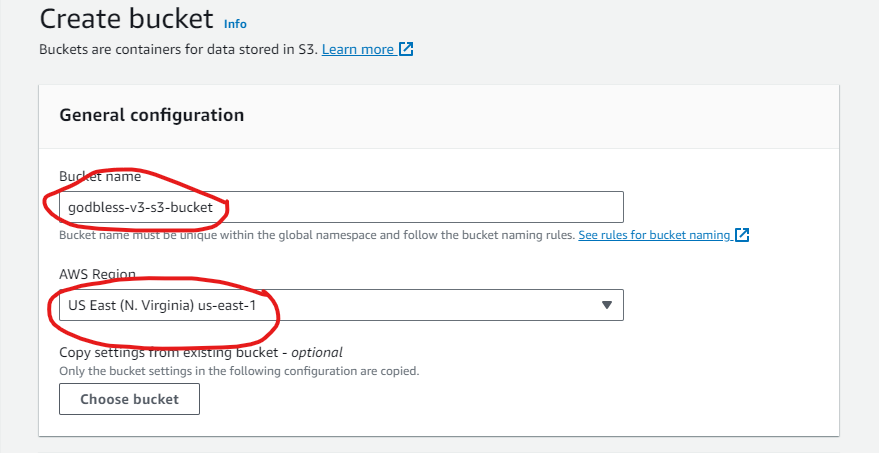

Input a unique bucket name (remember that S3 bucket names must be globally unique across all regions). In my own case, I used my name which is globally unique by the way (winks).

Select a Region in which to create your bucket (consider proximity to your customers when choosing one).
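If the console rejects your bucket name, it is often because of a naming rule rather than uniqueness. The sketch below checks a few of the main rules for general purpose bucket names as I understand them (length, allowed characters, no adjacent periods, not shaped like an IP address). It is not a complete validator, the function name is my own, and it cannot tell you whether a name is already taken; only AWS can do that when you create the bucket.

```python
import re
from typing import List

# Lowercase letters, digits, dots and hyphens; must start and end with a letter or digit.
_NAME_PATTERN = re.compile(r"^[a-z0-9][a-z0-9.-]*[a-z0-9]$")
# Four dot-separated groups of digits, e.g. 192.168.0.1
_IP_PATTERN = re.compile(r"^\d{1,3}(\.\d{1,3}){3}$")


def bucket_name_problems(name: str) -> List[str]:
    """Return a list of naming problems; an empty list means none were found."""
    problems = []
    if not 3 <= len(name) <= 63:
        problems.append("must be between 3 and 63 characters long")
    if not _NAME_PATTERN.match(name):
        problems.append(
            "must use only lowercase letters, digits, dots and hyphens, "
            "and start and end with a letter or digit"
        )
    if ".." in name:
        problems.append("must not contain two adjacent periods")
    if _IP_PATTERN.match(name):
        problems.append("must not be formatted like an IP address")
    return problems


if __name__ == "__main__":
    for candidate in ["godbless-v3-s3-bucket", "My_Bucket", "192.168.0.1"]:
        print(candidate, bucket_name_problems(candidate))
```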

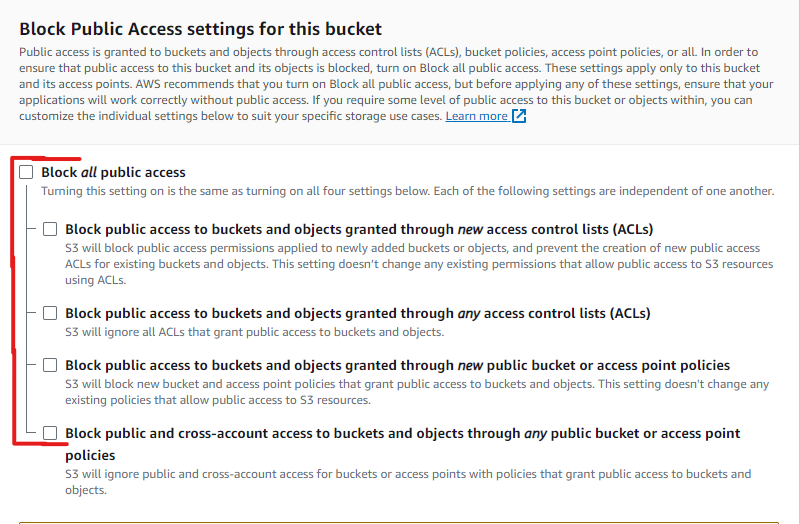

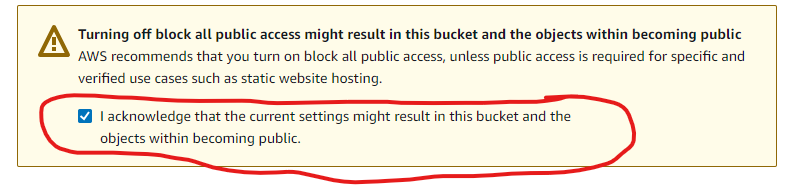

Disable Block all public access. If you want your website to be publicly accessible on the internet, you need to allow public access to the S3 bucket containing your website files. Disabling "Block all public access" does not make anything public by itself; it only allows a public-read bucket policy (which we create in the third task) to take effect.

Select the acknowledgement checkbox to confirm your agreement.

Now, scroll down and click on Create bucket. Congratulations! You just created your bucket successfully.

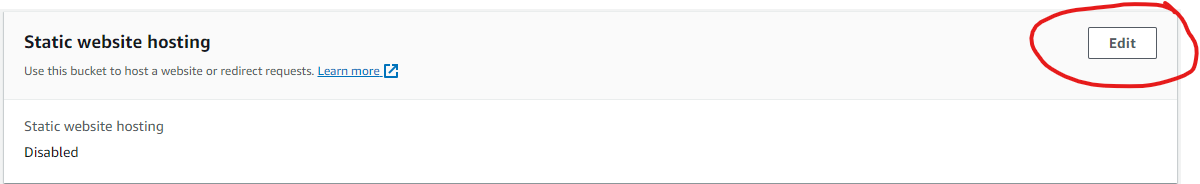

Now that your bucket has been created, you have to Enable static website hosting on your bucket. Enabling static website hosting provides you with an endpoint URL where customers or the public can access your static website.

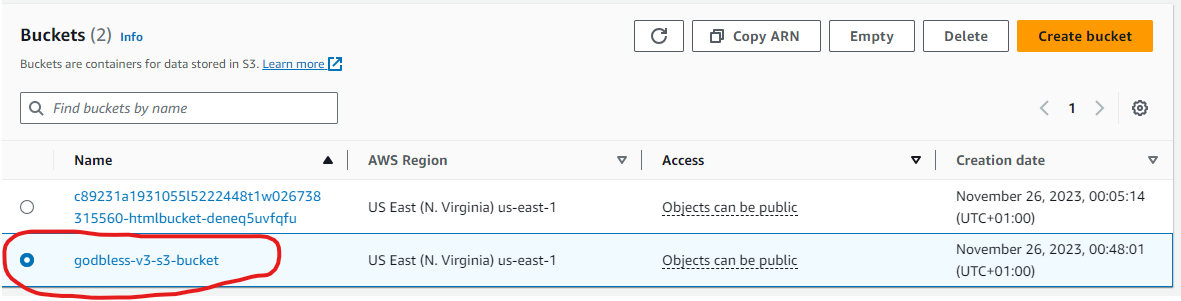

To enable website hosting on your bucket, scroll down in your S3 console. From the list of S3 buckets, select the bucket that you just created (the bucket you’re looking for will ideally carry the unique name you specified during creation).

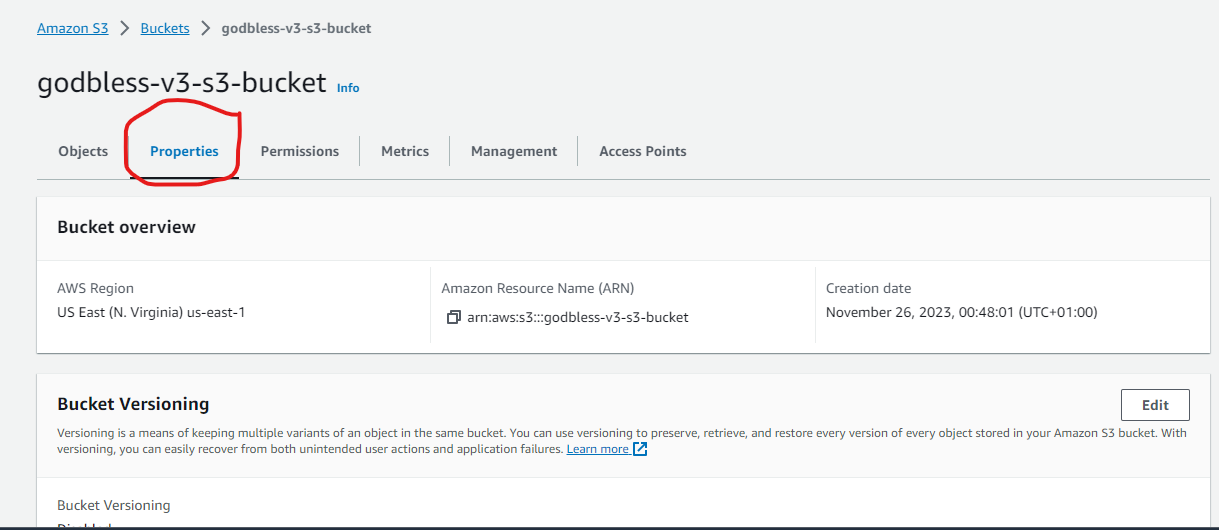

- Within the selected bucket, go to the "Properties" tab. This is where you can configure various settings for your S3 bucket.

- Under the properties tab, scroll down to the bottom, and you’ll find Static Website Hosting. Click on Edit in the top-right corner.

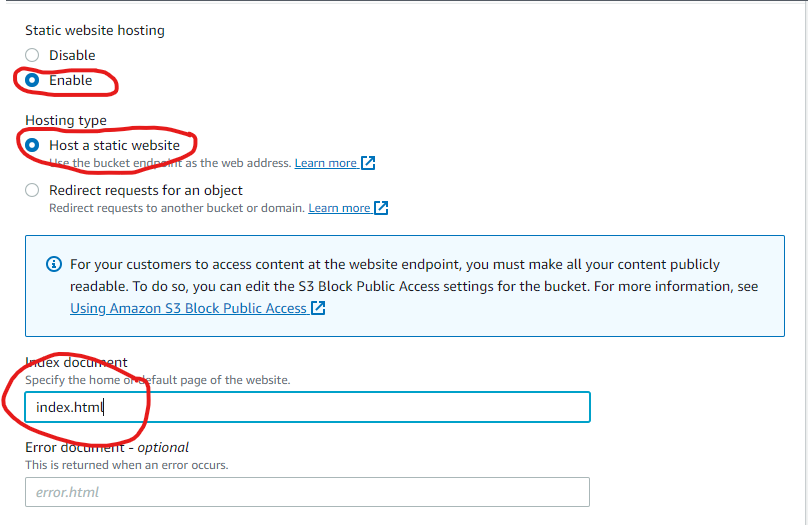

- Select enable, leave the hosting type as Host a static website, and specify the index document (usually index.html) and, optionally, the error document.

- Scroll down and click on Save changes. You now have an endpoint URL where customers can access your static website.
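For readers who prefer scripting to clicking, the settings chosen above boil down to a small configuration document. The sketch below builds it in the shape that, to my understanding, the S3 website configuration API expects (an index document suffix and an optional error document key). The function name and the error.html file name are assumptions for illustration.

```python
from typing import Optional


def build_website_configuration(index_document: str = "index.html",
                                error_document: Optional[str] = None) -> dict:
    """Build a static website hosting configuration for an S3 bucket."""
    if not index_document or "/" in index_document:
        # The index document is a suffix such as "index.html", not a path.
        raise ValueError("index document must be non-empty and contain no '/'")
    config = {"IndexDocument": {"Suffix": index_document}}
    if error_document is not None:
        # The error document is optional, as in the console form.
        config["ErrorDocument"] = {"Key": error_document}
    return config


if __name__ == "__main__":
    print(build_website_configuration("index.html", "error.html"))
```

If you have boto3 installed and credentials configured, a dictionary like this can be passed as the WebsiteConfiguration argument of the S3 client's put_bucket_website call. I did everything in the console for this post, so treat that as an untested alternative.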

Second Task: Uploading source code to your S3 bucket

You now need to upload the static website code to the S3 bucket.

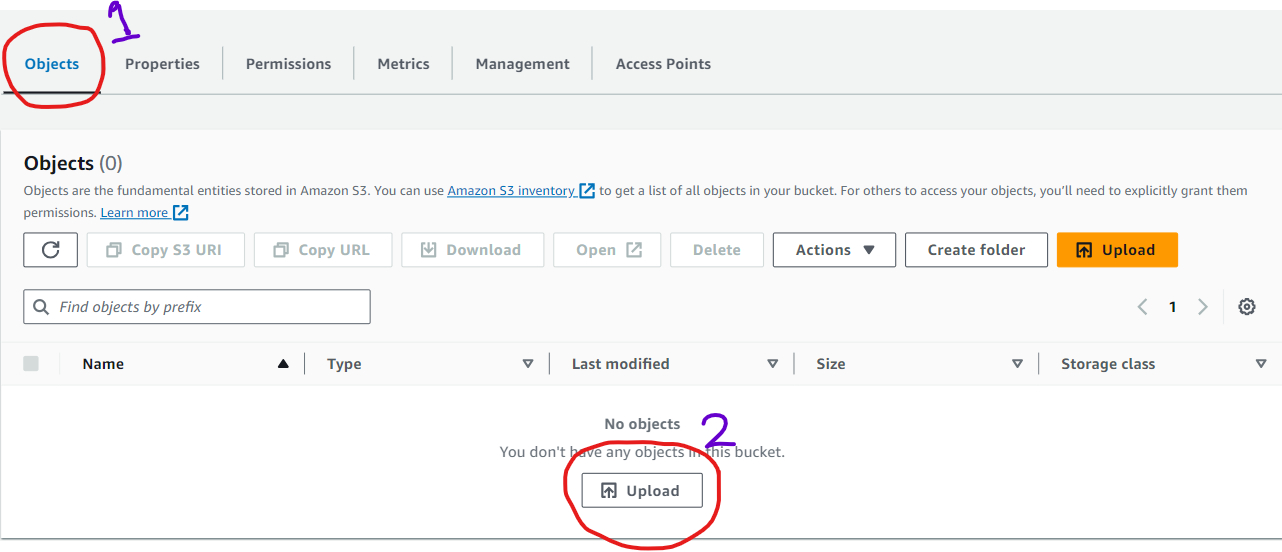

Navigate back to the “Objects” tab.

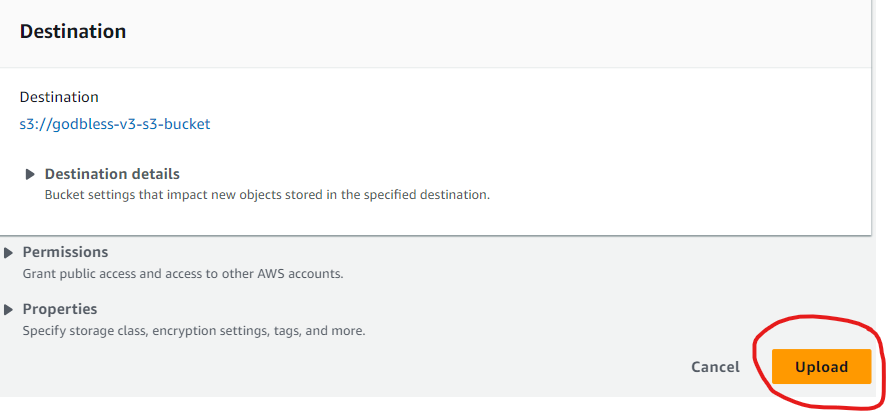

Click on "Upload" to open the file upload interface.

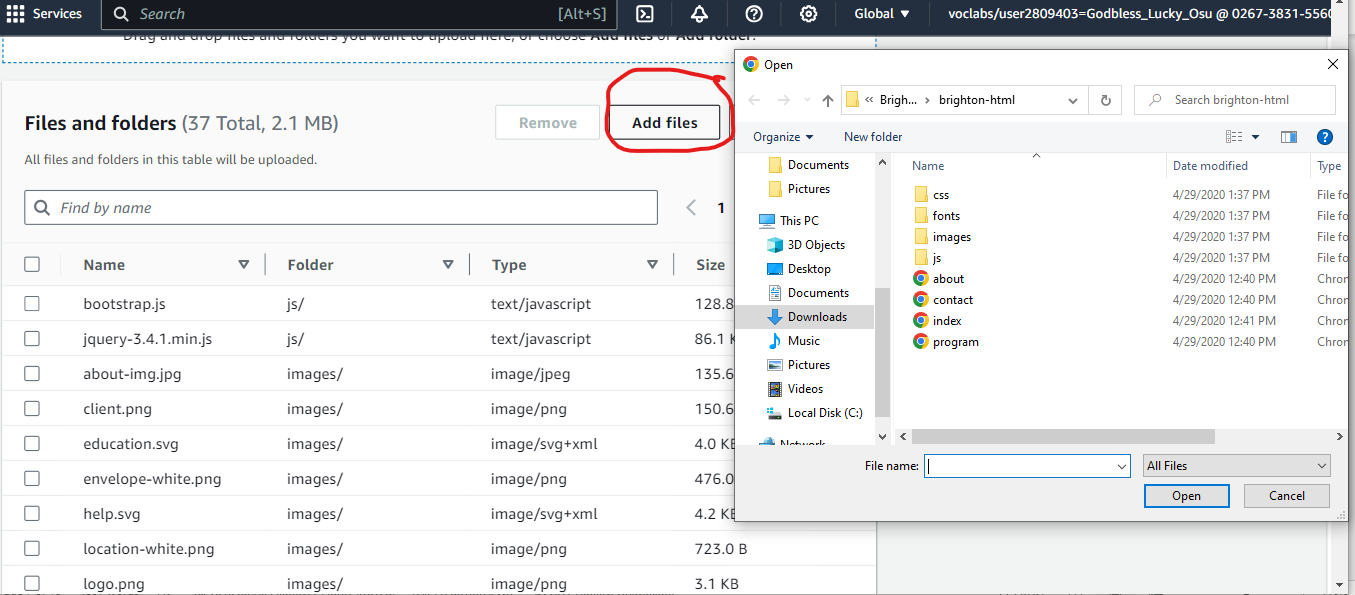

- Select Add files to bring up your directories. Navigate to the directory containing the source code files of your static website. This can include HTML, CSS, JavaScript, images, and others. You can either use the file selection dialog to choose the files, or you can drag and drop them into the upload area.

Note: When hosting a static website on Amazon S3, it's important to remember that S3 does not support server-side scripting. Make sure any JavaScript included in your files is meant to run on the client side only. Server-side scripts are not part of S3's functionality, so they will not be processed. For dynamic content, consider using a server-based hosting solution.

- Click the orange “Upload” button and wait a few seconds or minutes for your files to upload to S3.

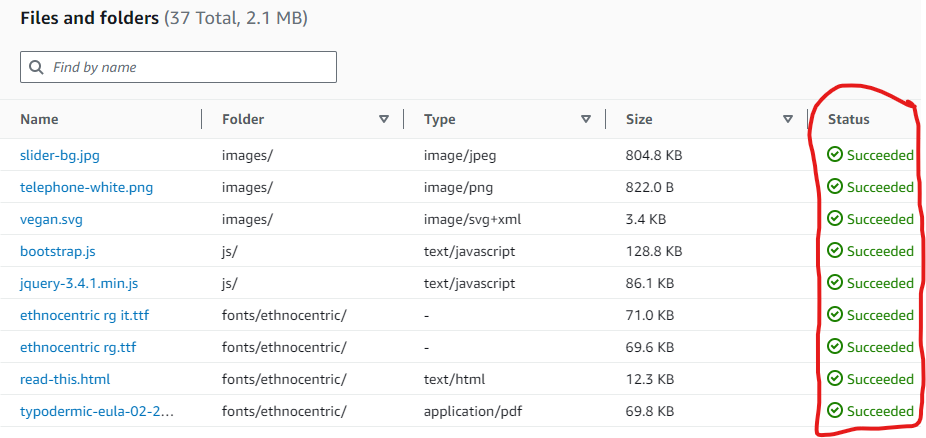

- You will see a "Succeeded" status for all your uploads, as in the screenshot.

Congratulations! You’ve successfully uploaded your source code to the S3 bucket.
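If you ever script the upload instead of using the console, two details matter: the object key should mirror the file's path relative to the site folder, and each object needs a sensible content type so browsers render it rather than download it. Here is a minimal sketch that only plans the upload (it does not talk to AWS); the function name and the fallback content type are my own choices.

```python
import mimetypes
from pathlib import Path
from typing import List, Tuple


def plan_uploads(site_dir: str) -> List[Tuple[str, str, str]]:
    """Return (local path, object key, content type) for each file under site_dir."""
    root = Path(site_dir)
    plan = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue  # folders are not objects; they only show up as key prefixes
        key = path.relative_to(root).as_posix()  # e.g. "css/style.css"
        content_type, _ = mimetypes.guess_type(path.name)
        plan.append((str(path), key, content_type or "application/octet-stream"))
    return plan


if __name__ == "__main__":
    # "." is a placeholder; point this at your extracted website folder.
    for local_path, key, content_type in plan_uploads("."):
        print(f"{local_path} -> {key} ({content_type})")
```

With boto3, each entry could then be handed to the S3 client's upload_file(local_path, bucket_name, key, ExtraArgs={"ContentType": content_type}). Again, this is an alternative to the console steps I used, not something I ran for this post.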

Note: By default, these objects uploaded to the S3 bucket are private, meaning they can only be accessed by you (the AWS account that owns the bucket). To make your static website publicly accessible, you have to configure a bucket policy that grants read access to everyone (public access). This will take us to the third task.

Third Task: Creating a bucket policy to grant public read access

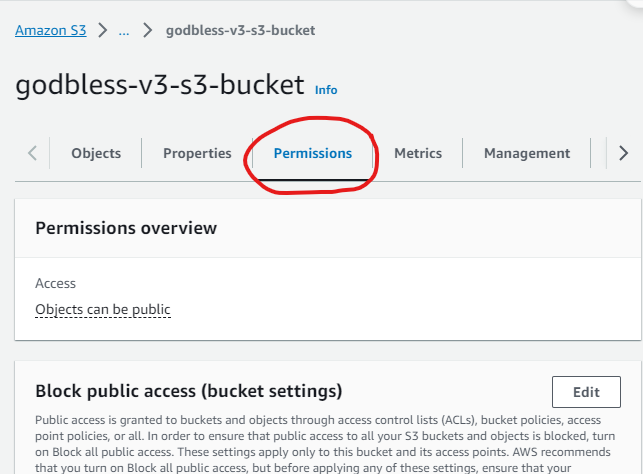

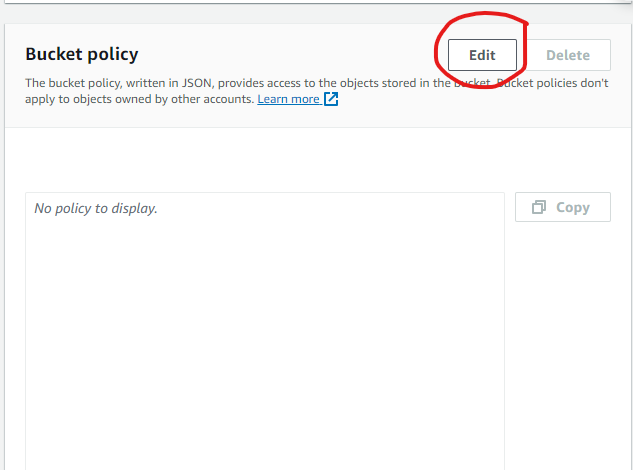

- Navigate to the "Permissions" tab and scroll downward to find Bucket policy.

- Click on the Edit button on the far right of the screen

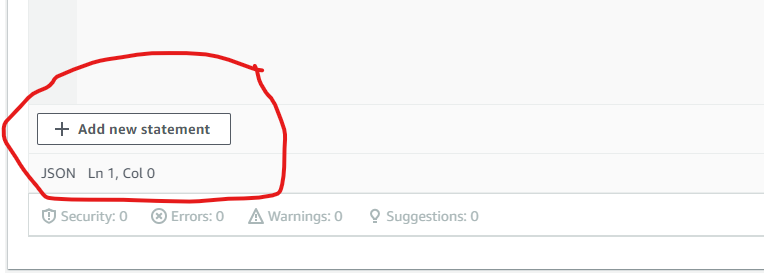

- Scroll down and click on "Add new statement". You can also find it toward the right of your screen.

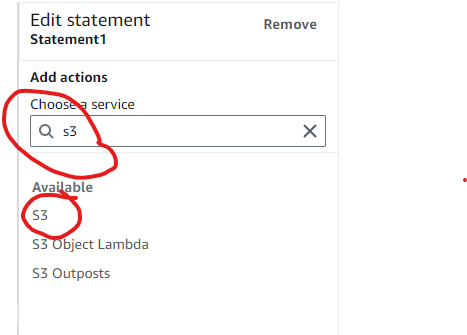

- Use the Filter services (search bar), type in S3 and click on the first option (S3).

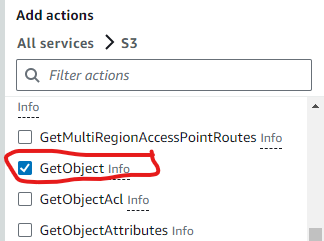

- Scroll down to locate GetObject and click on it.

Note: "s3:GetObject" is a permission that grants the right to retrieve objects (files) from the specified S3 bucket.

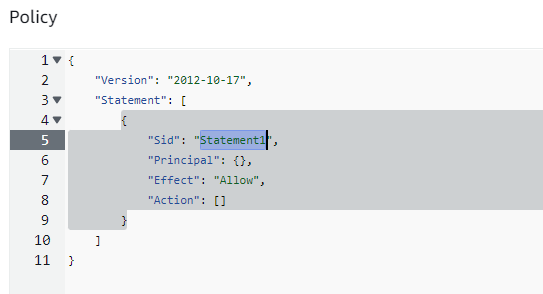

Add the wildcard "*" to the "Principal" in the policy.

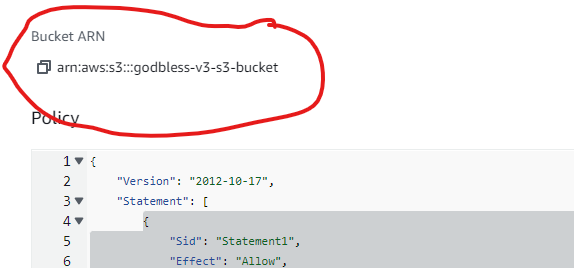

Copy your Bucket ARN from just above the policy editor and paste it into "Resource". Note that you must add /* right after the pasted ARN. The /* means that the policy applies to all objects (new and old) in your bucket.

- Scroll down and click on Save changes.

Your final bucket policy should look like this JSON:

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "Statement1",
                "Effect": "Allow",
                "Principal": "*",
                "Action": [
                    "s3:GetObject"
                ],
                "Resource": "arn:aws:s3:::godbless-v3-s3-bucket/*"
            }
        ]
    }

Note: This policy grants the "s3:GetObject" permission to everyone ("Principal": "*") for all objects in your bucket ("Resource": "arn:aws:s3:::godbless-v3-s3-bucket/*"). This means that anyone can read the objects in your S3 bucket.
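Since the only part of this policy that changes from bucket to bucket is the name inside the ARN, it is easy to generate. The sketch below rebuilds the same policy for any bucket name; the function name is my own, and the output is meant to be pasted into the policy editor.

```python
import json


def public_read_policy(bucket_name: str) -> str:
    """Return a bucket policy (as JSON text) granting public read on all objects."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "Statement1",
                "Effect": "Allow",
                "Principal": "*",              # everyone
                "Action": ["s3:GetObject"],    # read objects only
                # The trailing /* targets the objects in the bucket, not the bucket itself.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }
    return json.dumps(policy, indent=4)


if __name__ == "__main__":
    print(public_read_policy("godbless-v3-s3-bucket"))
```

If you script your setup with boto3, the returned string can be passed as the Policy argument of the S3 client's put_bucket_policy call.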

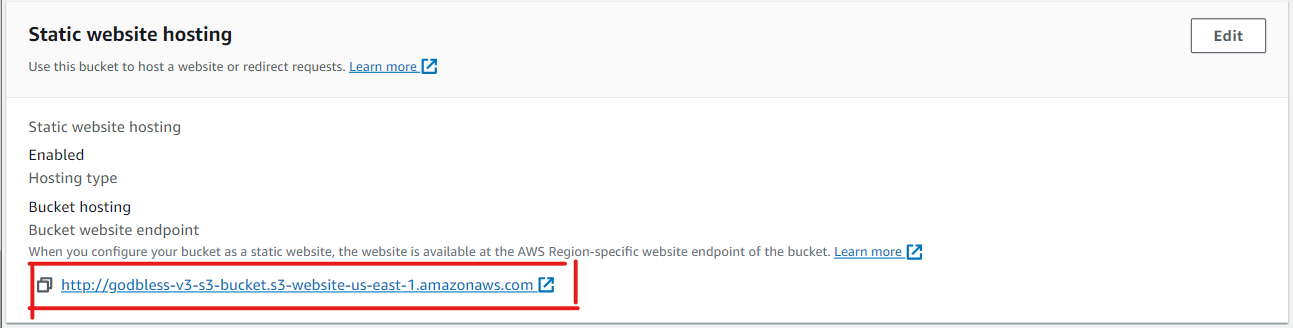

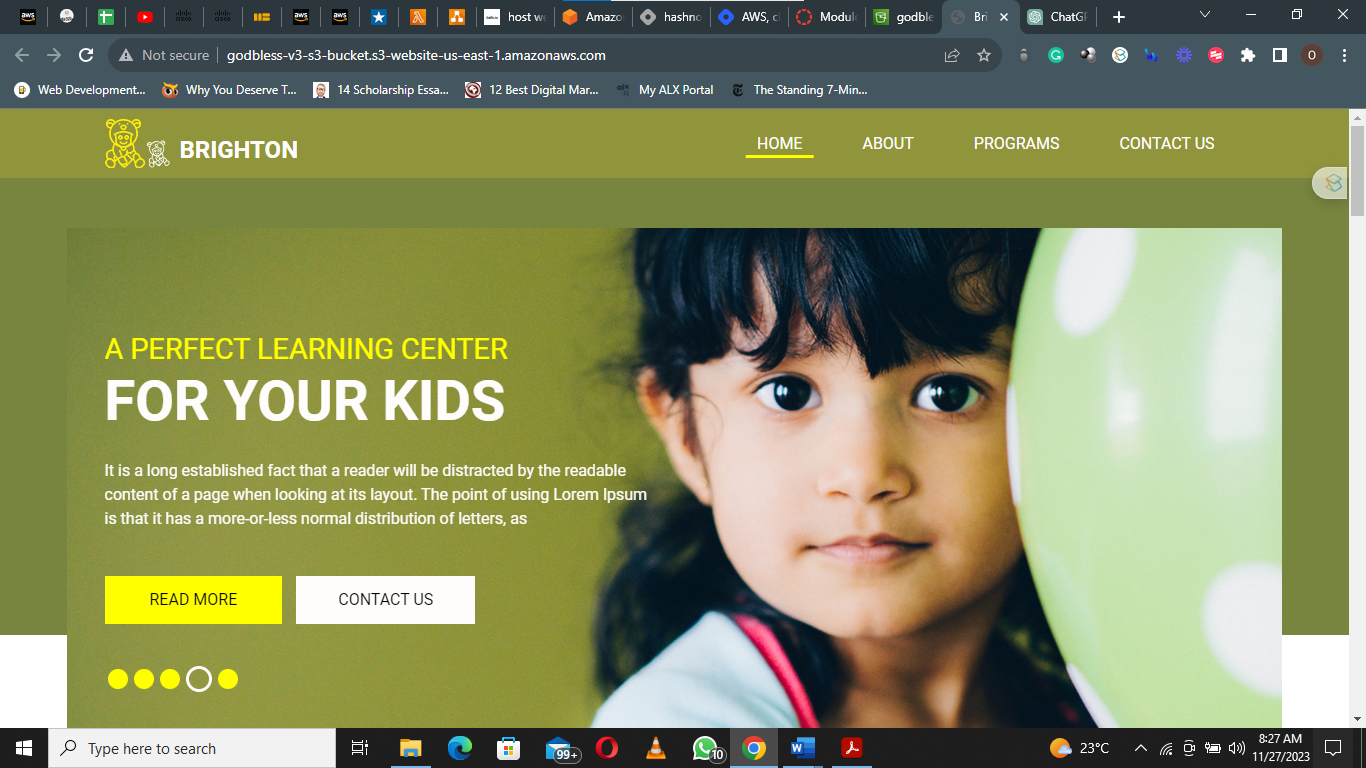

Alright, time to get the reward for our hard work so far. Remember the last step in the First Task, right? Now is the time to use it. Navigate to the Properties tab again and scroll down to Static website hosting, where you will find your website endpoint (URL).

Open it in a new browser tab, and if you did everything right, you should see your website. Congratulations! You just successfully hosted your website and made it publicly accessible.

ENHANCE DATA SECURITY ON AMAZON S3 THROUGH A PROTECTIVE MEASURE: VERSIONING

One Amazon S3 best practice is enabling Versioning. Your website will very likely need frequent updates as your business details change, and every update overwrites existing files.

If you are working with critical data, or in collaborative environments where multiple users contribute to the same data, versioning helps prevent accidental data loss by protecting website objects from accidental overwrites and deletions.

Versioning keeps multiple versions of an object in the same bucket, so you can preserve, retrieve, and restore every version. If you delete an object, Amazon S3 inserts a delete marker, which becomes the current version, instead of removing the data. You can restore previous versions at any time.

When you overwrite an object, a new version is created. Buckets can be unversioned (default), versioning-enabled, or versioning-suspended.

Note: Once versioning is enabled, it cannot be changed back to unversioned, but you can suspend versioning on a bucket if needed. Also, enabling versioning can increase storage costs because each version of an object consumes additional storage.

For further information about versioning, see https://docs.aws.amazon.com/AmazonS3/latest/userguide/Versioning.html
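To make the behaviour described above easier to picture, here is a toy in-memory model of a versioning-enabled bucket. It is not the S3 API: the class, its method names, and the integer version IDs are all invented for illustration (real version IDs are opaque strings). It only mimics the three ideas from this section: overwrites add versions, deletes add a delete marker, and removing the marker brings the previous version back.

```python
from itertools import count

DELETE_MARKER = object()  # stands in for an S3 delete marker


class VersionedBucket:
    """Toy model of a versioning-enabled bucket (illustration only)."""

    def __init__(self):
        self._versions = {}      # key -> list of (version_id, body), oldest first
        self._ids = count(1)

    def put(self, key, body):
        """Uploading never overwrites; it appends a new current version."""
        version_id = next(self._ids)
        self._versions.setdefault(key, []).append((version_id, body))
        return version_id

    def delete(self, key):
        """A plain delete only adds a delete marker as the current version."""
        return self.put(key, DELETE_MARKER)

    def get(self, key, version_id=None):
        versions = self._versions.get(key, [])
        if version_id is None:
            if not versions or versions[-1][1] is DELETE_MARKER:
                raise KeyError(key)  # looks "not found" to normal reads
            return versions[-1][1]
        for vid, body in versions:
            if vid == version_id and body is not DELETE_MARKER:
                return body
        raise KeyError((key, version_id))

    def delete_version(self, key, version_id):
        """Permanently remove one specific version or delete marker."""
        self._versions[key] = [v for v in self._versions[key] if v[0] != version_id]


if __name__ == "__main__":
    bucket = VersionedBucket()
    v1 = bucket.put("index.html", "<h1>Old</h1>")
    bucket.put("index.html", "<h1>New</h1>")
    marker = bucket.delete("index.html")         # current version is now a delete marker
    bucket.delete_version("index.html", marker)  # removing the marker restores the page
    print(bucket.get("index.html"), bucket.get("index.html", v1))
```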

Alright, now let's dive in already.

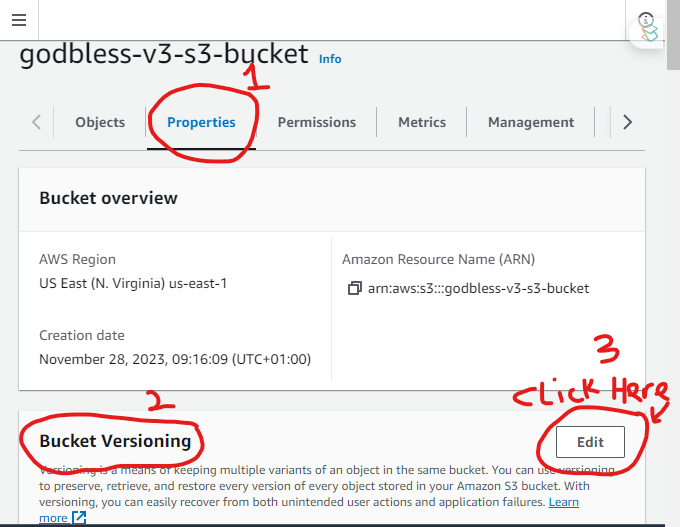

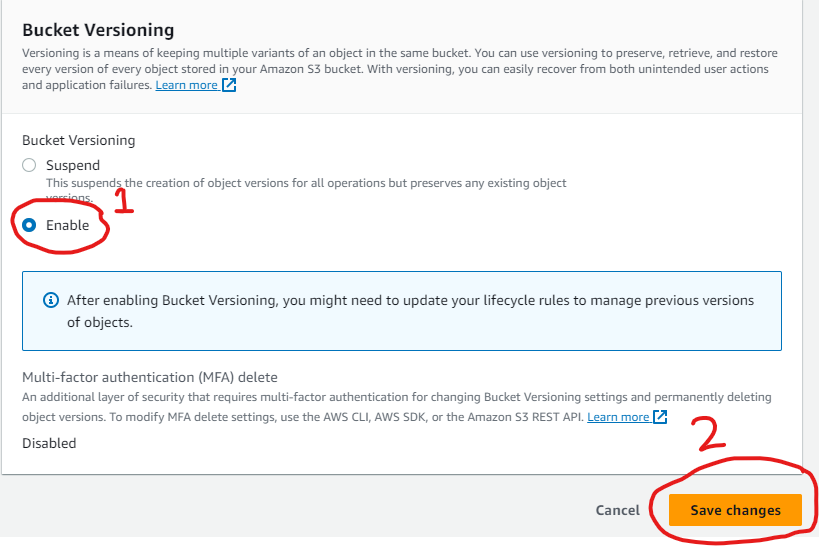

First Task: Enabling versioning

- In the S3 console, navigate to the "Properties" tab, locate Bucket versioning and click on "Edit".

- In the new window, choose Enable and click on Save changes.

Versioning has been enabled in your bucket.

Second Task: Confirming that versioning works as expected

Objects in S3 are immutable, meaning that once you upload an object, you cannot modify it in place. To change a file, you edit it on your local computer and upload the modified file to the S3 bucket again, which creates a new version of the object.

You could change all the HTML in your index.html file, or even your CSS or JavaScript code.

To confirm that versioning has been implemented in this case, we are only going to modify a single line of code in the index.html file. Let's get started!

- Make the changes you want to the appropriate file and save it.

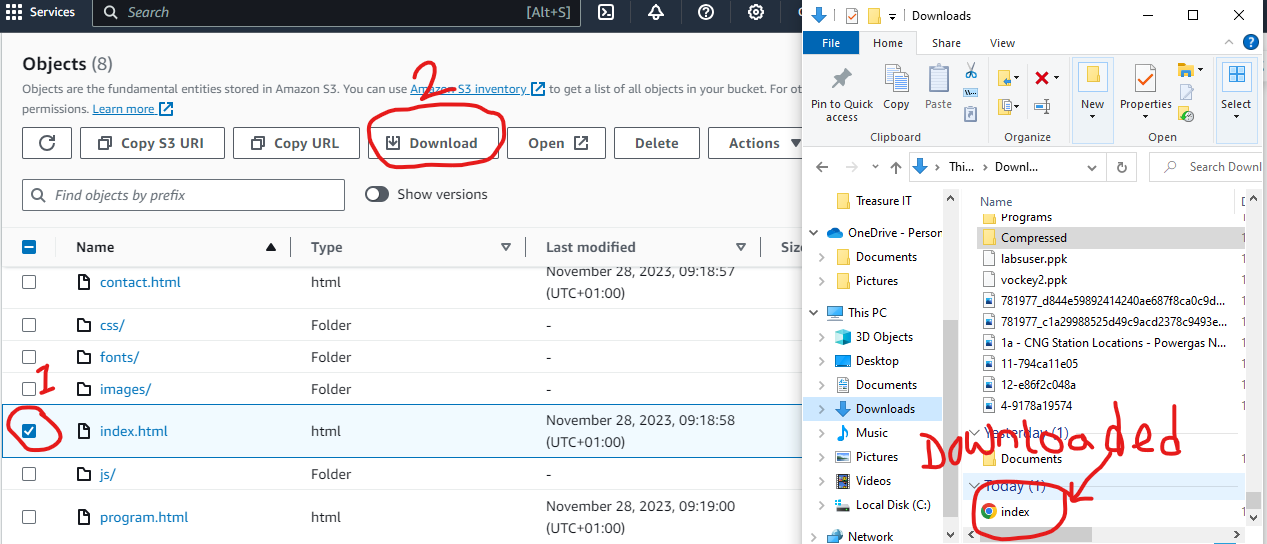

Note that if you can’t locate the file on your local computer anymore, you can simply download it from S3 and make the changes. To do this, navigate to the "Objects" tab, locate the object you want to change (in my case, the index.html file), and select its checkbox; the Download button should then become active. Click on it to download your file. I used the index.html file only as an example here; you can make changes to any file you want.

Upload the updated file to your S3 bucket. (I already showed you how to do this in the second task we did when deploying our website).

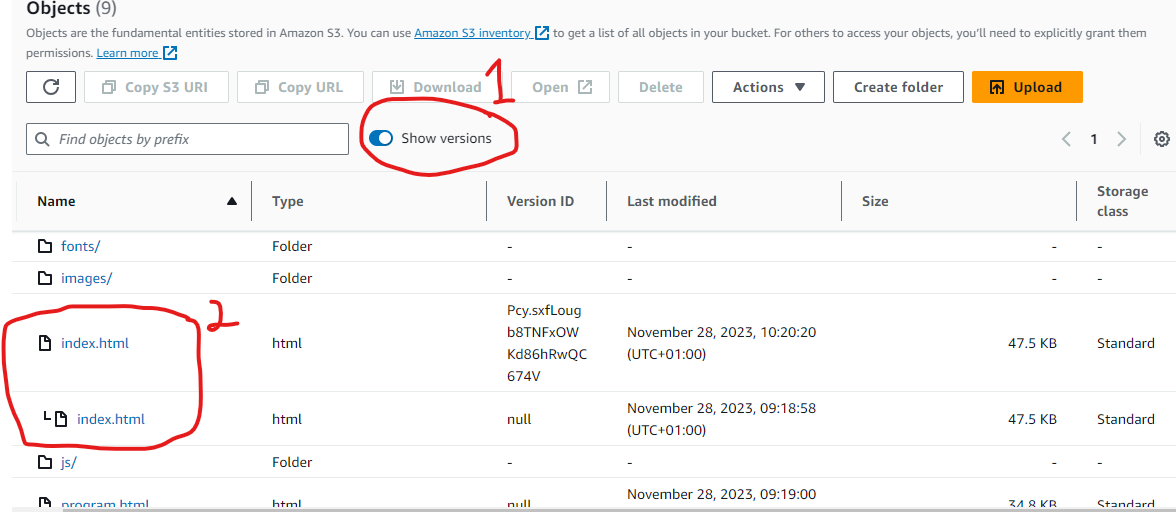

When your file has been successfully uploaded, go back to the Objects tab and click on "Show versions" as shown below. You should see the current version of your file as well as the previous versions.

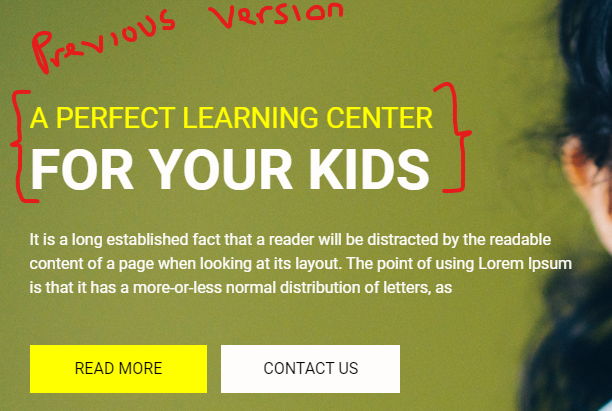

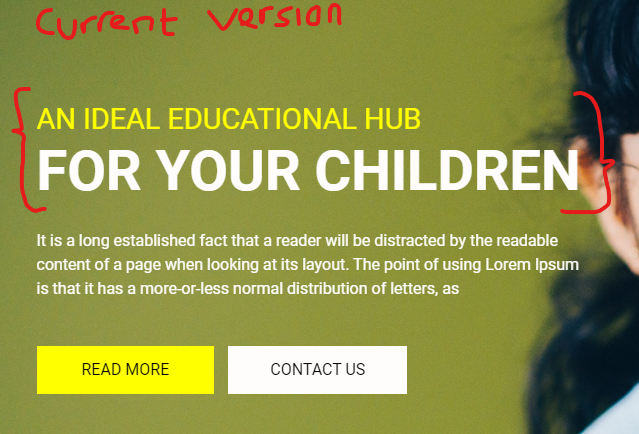

Now, when you open your website, you will notice the changes you made. See my screenshot below. I only modified a single line of code.

OPTIMIZE COSTS OF S3 OBJECT STORAGE BY IMPLEMENTING A COMPREHENSIVE DATA LIFECYCLE PLAN

When you enable versioning, the size of the S3 bucket will continue to grow as you upload new objects and versions. To save costs, you can choose to implement a strategy to retire some of those older versions.

A lifecycle policy automatically moves older versions of objects between storage classes or permanently deletes previous versions of objects, depending on the action you specify. This reduces your overall cost because you pay less for data as it becomes less important over time.

A lifecycle configuration is a set of rules that define actions that Amazon S3 applies to a group of objects.

In our case, we are going to configure a policy with two actions. The first will move older (noncurrent) versions of the objects in our bucket to S3 Standard-Infrequent Access (S3 Standard-IA) 30 days (1 month) after they become noncurrent. The second will permanently delete those noncurrent versions after 365 days (a year).

Let's get started…

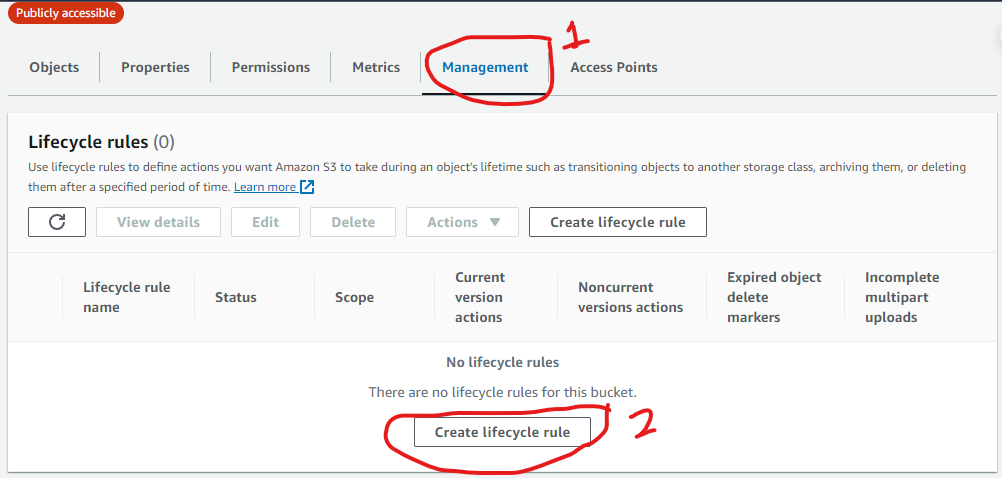

Navigate to the "Management" tab, locate Lifecycle rules and click on "Create lifecycle rule"

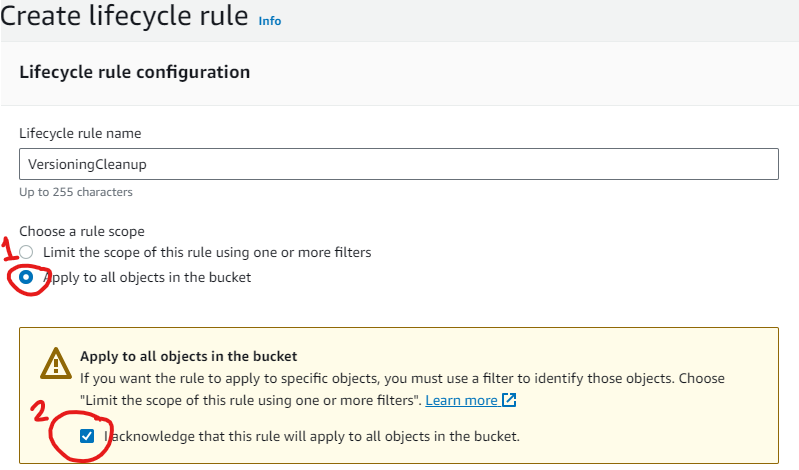

In the new window, enter your rule name.

Choose the scope of the lifecycle rule. Select "Apply to all objects in the bucket" and click on "I acknowledge that this rule will apply to all objects in the bucket".

Note: If you want to limit the rule to specific objects, select the first option and specify the object prefix, or the tag key and value. In our case, we want the rule to affect all objects in our bucket, which is why we went with the second option.

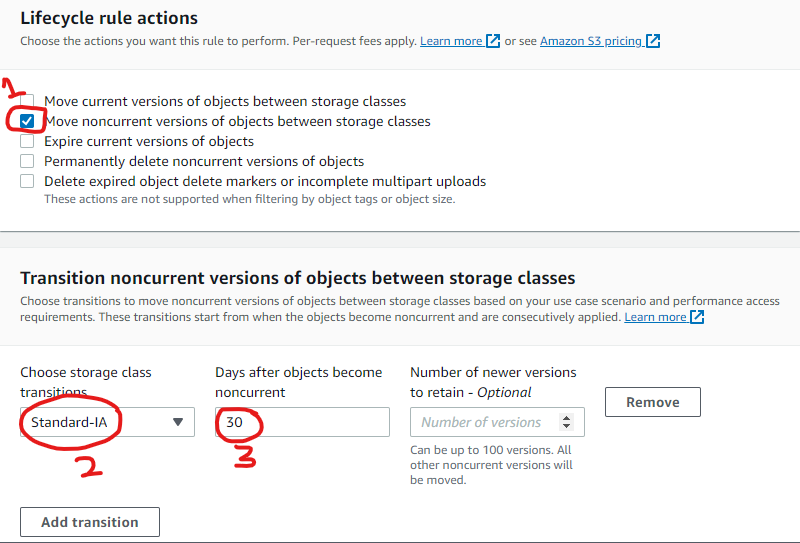

To set the first action, which will move older versions of the objects in your bucket to a different storage class:

Choose "Move noncurrent versions of objects between storage classes".

Choose your desired storage class. Here we are going with Standard-IA.

Input the number of days after which older versions of objects will move to the new storage class. (Here, I chose 30 days, after which noncurrent versions move to Standard-IA.)

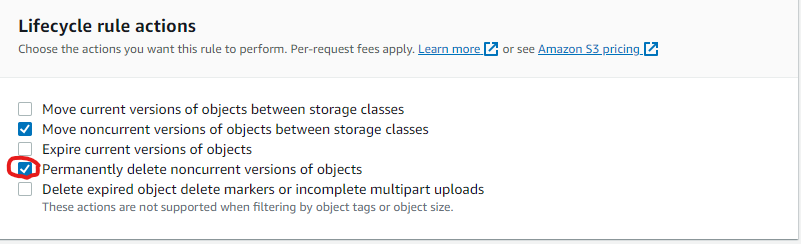

To set the second action, which will permanently delete the older versions after a particular period of time:

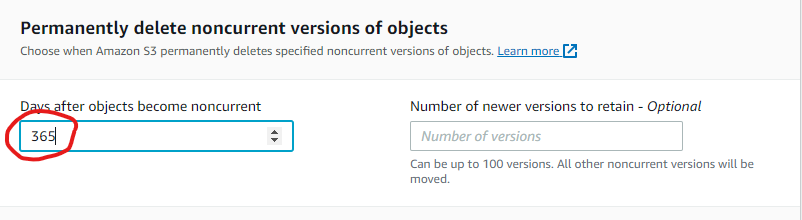

- Scroll back up and select "Permanently delete noncurrent versions of objects".

- Scroll down and you’ll see that a new section has appeared. Specify the number of days (in our case, we chose 365 days).

- Scroll down and click on Create rule.

Hurray! You have successfully configured a lifecycle for your objects to manage how they are stored throughout their lifecycle.

The policy we’ve just set will move previous versions of your bucket objects to S3 Standard-IA 30 days after they become noncurrent. It will also permanently delete those previous versions once they have been noncurrent for 365 days.

For more information on lifecycle policies, see the AWS documentation.
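Expressed as data, the rule we just clicked together looks roughly like the dictionary built below. The key names follow the S3 lifecycle configuration format as I understand it; the function names and the rule ID are my own. The second helper is a simplified model of what happens to an old version over time: it assumes the version was stored in S3 Standard and ignores the fact that S3 applies lifecycle actions with some delay.

```python
def noncurrent_version_rule(rule_id: str,
                            transition_days: int = 30,
                            expiration_days: int = 365) -> dict:
    """Build a lifecycle configuration for noncurrent (older) object versions."""
    if expiration_days <= transition_days:
        raise ValueError("versions must expire after they transition")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix: every object in the bucket
                "NoncurrentVersionTransitions": [
                    {"NoncurrentDays": transition_days, "StorageClass": "STANDARD_IA"}
                ],
                "NoncurrentVersionExpiration": {"NoncurrentDays": expiration_days},
            }
        ]
    }


def noncurrent_version_fate(days_noncurrent: int,
                            transition_days: int = 30,
                            expiration_days: int = 365) -> str:
    """Where an old version ends up after being noncurrent for a number of days."""
    if days_noncurrent >= expiration_days:
        return "deleted"
    if days_noncurrent >= transition_days:
        return "STANDARD_IA"
    return "STANDARD"  # assumption: the version was uploaded to S3 Standard


if __name__ == "__main__":
    print(noncurrent_version_rule("retire-old-versions"))
    for days in (10, 30, 200, 365):
        print(days, noncurrent_version_fate(days))
```

With boto3, a dictionary like this could be passed as the LifecycleConfiguration argument of the S3 client's put_bucket_lifecycle_configuration call, as an alternative to the console steps above.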

CONCLUSION

We successfully utilized Amazon S3 to host a static website, leveraging its simplicity and scalability. By following the outlined steps, we created an S3 bucket, uploaded the website source code, and configured static website hosting. This approach is particularly advantageous for businesses aiming to establish a web presence and showcase essential information.

Additionally, we implemented key architectural best practices to enhance data security (Versioning) and optimize costs (Lifecycle policy).
