Docker Volume

Vyankateshwar Taikar

📦 Introduction to Docker Volumes 📦

Docker volumes are an essential component within Docker, designed to effectively manage and safeguard your data across container instances.

These volumes offer a mechanism to store and exchange data independently from the container itself. They serve several key functions:

Performance Optimization: On Docker Desktop for Mac and Windows, volumes typically perform better than bind mounts, which matters most when dealing with large amounts of data. On a Linux host the two are comparable.

Driver Support: Docker provides various types of volume drivers, allowing you to choose the best storage solution for your needs, such as local, remote, or cloud-based storage.

Persist Data: Volumes ensure that data generated or used by containers is retained even if the container is stopped or removed.

Share Data Between Containers: Volumes enable multiple containers to access and share the same data, making it easier to build multi-container applications.

Backup and Migration: Volumes facilitate data backup, migration, and recovery, as the data is decoupled from the container lifecycle.

Separate Concerns: Volumes separate the concerns of data storage and container execution, making it easier to manage, back up, and restore data.

In essence, Docker volumes 📦 offer a means to orchestrate data persistence 📂, sharing, and segregation 🧩 across containers, which improves the adaptability, scalability, and administration 🛠️ of your Dockerized applications. 🐳
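As a minimal sketch of the backup point above, a volume can be archived by mounting it read-only into a throwaway container next to a host directory. The helper name `backup_volume`, the volume name in the usage line, and the archive location are assumptions made for illustration, not something the original post defines.

```shell
# backup_volume VOLUME DEST_DIR
# Archives the contents of a named volume into DEST_DIR/VOLUME.tar.
# (Hypothetical helper; DEST_DIR must be an absolute host path.)
backup_volume() {
  bv_volume="$1"
  bv_dest="$2"
  # The volume is mounted read-only at /data, the host directory at /backup.
  docker run --rm \
    -v "$bv_volume":/data:ro \
    -v "$bv_dest":/backup \
    busybox tar cf "/backup/$bv_volume.tar" -C /data .
}

# Usage: backup_volume my-shared-data "$PWD"
```

Because the archive lands on the host, it can be copied to another machine and unpacked into a fresh volume there, which is one way to approach migration.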

🐳 Basics of Docker Volume

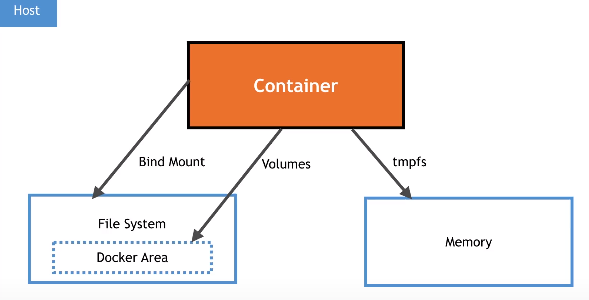

- Data management and persistence in Docker containers are carried out through the use of Docker volumes.

- They allow you to separate the storage of data from the lifecycle of containers, providing a way to store, share, and manage data in a more controlled and flexible manner.

- Containers in Docker are made to be lightweight and ephemeral, so they can be launched, stopped, and destroyed quickly. Any data written only to the container's own filesystem is lost when the container is removed.

- However, some applications require persistent data that should survive container restarts, updates, or removals.

- Docker volumes satisfy this requirement by allowing data to be managed independently of container instances.

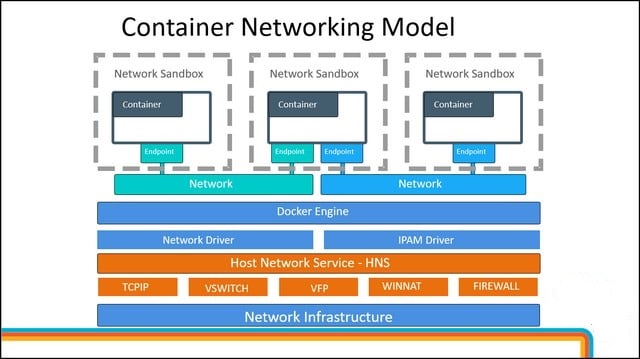
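The idea that volume data outlives a container can be tried out with a short sketch like the one below. The helper name `demo_persistence`, the file name `note.txt`, and the mount path `/data` are illustrative assumptions.

```shell
# demo_persistence VOLUME
# Writes a file through one container, lets that container be removed,
# then reads the file back through a brand-new container.
demo_persistence() {
  dp_volume="$1"
  docker volume create "$dp_volume"
  # --rm deletes the container as soon as its command finishes.
  docker run --rm -v "$dp_volume":/data busybox \
    sh -c 'echo "still here" > /data/note.txt'
  # A second, unrelated container mounts the same volume and reads the file.
  docker run --rm -v "$dp_volume":/data busybox cat /data/note.txt
}

# Usage: demo_persistence demo-volume
```

The first container no longer exists when the second one starts, so whatever the second container reads can only have come from the volume.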

🌐 Docker Network

Networking is a core feature of Docker. Networks provide connectivity and communication between containers, and between containers and the host system.

Docker networks offer an isolated environment where containers can communicate with one another.

To create and manage Docker networks, you can use the Docker CLI commands like docker network create, docker network ls and docker network connect. You can also define networks in Docker Compose YAML files.

To provide connectivity and communication between containers, both within a single host and across several hosts, Docker networks are essential.

They provide an essential tool for building and deploying complex applications composed of multiple interacting containers.
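The network commands mentioned above could be combined roughly as follows. This is only a sketch: the helper name `demo_network` and the container names `web` and `client` are assumptions chosen for the example.

```shell
# demo_network NETWORK
# Creates a user-defined bridge network and attaches two containers to it.
demo_network() {
  dn_network="$1"
  docker network create "$dn_network"
  # This container joins the network at start-up.
  docker run -d --name web --network "$dn_network" nginx:latest
  # This container starts on the default bridge network...
  docker run -d --name client busybox sleep 3600
  # ...and is attached to the user-defined network afterwards.
  docker network connect "$dn_network" client
  docker network ls
}

# Usage: demo_network app-net
```

On a user-defined network, containers can reach each other by container name, so `docker exec client ping -c 1 web` is a quick way to check connectivity.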

🎭 Learn from the Examples

1) Create a multi-container docker-compose file that brings containers UP and DOWN in a single shot (Example: create an application container and a database container)

This Docker Compose file defines a multi-container setup with an application container and a database container, so that a single command can bring both containers up or down.

Create a docker-compose.yml file with the following content:

    version: '3.8'

    services:
      app:
        image: nginx:latest
        ports:
          - "8080:80"
        depends_on:
          - db
      db:
        image: mysql
        environment:
          MYSQL_ROOT_PASSWORD: root_password
          MYSQL_DATABASE: app_database

If necessary, we can also change the environment variables, port mappings, and other parameters.

Open your terminal, find the directory where the docker-compose.yml file is located, and type the following commands to launch the containers:

    docker-compose up -d

With this command, you can launch the database and application containers in detached mode (-d) so they can operate simultaneously in the background.

Use the following command to bring the containers down:

    docker-compose down

This command stops and removes the containers, along with the networks that Compose created for them. Named volumes are kept by default; add the --volumes (-v) flag if you also want to remove them.
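The full up and down cycle can be sketched as a single helper that also checks on the services in between. The function name `compose_cycle` and the `COMPOSE` variable are assumptions added for this example; setting `COMPOSE="docker compose"` switches to the Compose v2 plugin syntax.

```shell
# compose_cycle
# Runs one full lifecycle for the services in ./docker-compose.yml.
# (Hypothetical helper; COMPOSE defaults to the docker-compose binary.)
compose_cycle() {
  cc_compose="${COMPOSE:-docker-compose}"
  $cc_compose up -d      # start app and db in the background
  $cc_compose ps         # list the services and their current state
  $cc_compose logs db    # show the output of the database service
  $cc_compose down       # stop and remove the containers and their network
}

# Usage: run compose_cycle from the directory that holds docker-compose.yml
```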

2) How to use Docker volumes and named volumes to share files and directories between multiple containers

Create a Docker Volume (Named Volume):

The docker volume create command can be used to create a named volume. The shared data that several containers can access will be stored on this volume.

    docker volume create my-shared-data

Create Containers With the Named Volume:

When you create the containers, mount the named volume inside each of them. Because both containers mount the same volume, they can share data through it.

    docker run -d --name container1 -v my-shared-data:/shared-data my-image1
    docker run -d --name container2 -v my-shared-data:/shared-data my-image2

In this example, my-image1 and my-image2 are the images of the containers you want to create. The -v flag specifies the volume to be mounted into the containers.

Access Shared Data:

Inside the containers, the /shared-data directory will contain the shared data from the named volume. Both container1 and container2 will have access to the same data.
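To confirm which containers really share a volume, something like the following sketch may help. The helper name `show_volume_users` is an assumption; the `docker volume inspect` and `docker ps --filter volume=...` calls are standard CLI features.

```shell
# show_volume_users VOLUME
# Prints where the volume is stored on the host, then the names of all
# containers (running or stopped) that mount it.
show_volume_users() {
  sv_volume="$1"
  docker volume inspect --format '{{ .Mountpoint }}' "$sv_volume"
  docker ps -a --filter "volume=$sv_volume" --format '{{ .Names }}'
}

# Usage: show_volume_users my-shared-data
```

If both `container1` and `container2` appear in the list, they are mounting the same volume.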

Here's how to use Docker Compose to carry out the same thing.

Create a Docker Compose YAML File:

Create a docker-compose.yml file that defines the named volume and the containers that use it.

    version: '3.8'

    services:
      container1:
        image: my-image1
        volumes:
          - my-shared-data:/shared-data
      container2:
        image: my-image2
        volumes:
          - my-shared-data:/shared-data

    volumes:
      my-shared-data:

Run Docker Compose:

Navigate to the directory containing the docker-compose.yml file and run:

    docker-compose up -d

This command will create and start the containers using the named volume.

Access Shared Data:

Inside the containers, you can access and modify the shared data in the /shared-data directory.

Using Docker volumes and named volumes as described above, you can easily share files and directories between multiple containers. This is particularly useful for scenarios where you need data consistency and synchronization across different parts of your application.
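A rough way to check the Compose setup is to write a file through one service and read it back through the other. This sketch assumes both services are still running and that their images (the placeholder `my-image1` and `my-image2`) include `sh` and `cat`; the helper name `compose_volume_check`, the file name `from-c1.txt`, and the `COMPOSE` variable are also assumptions.

```shell
# compose_volume_check
# Writes a file via container1, reads it via container2, then lists the volume.
compose_volume_check() {
  cv_compose="${COMPOSE:-docker-compose}"
  # -T disables pseudo-TTY allocation, which keeps the command script-friendly.
  $cv_compose exec -T container1 sh -c 'echo hello > /shared-data/from-c1.txt'
  $cv_compose exec -T container2 cat /shared-data/from-c1.txt
  # Compose prefixes the volume with the project name (<project>_my-shared-data),
  # so filter on part of the name instead of the exact name.
  docker volume ls --filter name=my-shared-data
}

# Usage: run compose_volume_check from the directory that holds docker-compose.yml
```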

Create two or more containers that read and write data to the same volume using the docker run --mount command.

Create a Docker Volume:

Let's start by creating a Docker volume that the containers will use to share data

    docker volume create my-shared-volume

Create Containers:

Now, create two containers that will read and write data to the shared volume using the docker run --mount command.

    # Container 1: write a message, then stay alive so docker exec can be used later
    docker run -d --name container1 --mount source=my-shared-volume,target=/shared-data busybox sh -c "echo 'Hello from Container 1' > /shared-data/message.txt && sleep 3600"

    # Container 2: read the message, then stay alive as well
    docker run -d --name container2 --mount source=my-shared-volume,target=/shared-data busybox sh -c "cat /shared-data/message.txt && sleep 3600"

In this example, we're using the busybox image, a lightweight base image. The first container writes a message to a file in the shared volume, and the second container reads and displays the message.

Access Shared Data:

You can check the output of the second container to verify that it successfully read the message from the shared volume.

    docker logs container2

The output should display: "Hello from Container 1".

This example demonstrates how to create two containers that interact with the same volume using the docker run --mount command. You can adapt this approach to more complex scenarios and use cases, where multiple containers need to share and manipulate data in a coordinated manner.
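When several containers share a volume, it is often safer to let only one of them write. A possible sketch using the `readonly` option of `--mount` is shown below; the helper name `run_reader` and the container name `reader1` are assumptions for illustration.

```shell
# run_reader NAME VOLUME
# Starts a container that can read the shared volume but cannot modify it.
run_reader() {
  rr_name="$1"
  rr_volume="$2"
  docker run -d --name "$rr_name" \
    --mount "type=volume,source=$rr_volume,target=/shared-data,readonly" \
    busybox sleep 3600
}

# Usage: run_reader reader1 my-shared-volume
```

Reading with `docker exec reader1 cat /shared-data/message.txt` should still work, while an attempt to create a file under `/shared-data` from that container should be rejected because the mount is read-only.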

Verify that the data is the same in all containers by using the docker exec command to run commands inside each container.

Verify Data Using docker exec:

Use the docker exec command to run a command inside each container and verify that the data is the same. Note that docker exec only works while the container is running:

    # Verify data in Container 1
    docker exec container1 cat /shared-data/message.txt

    # Verify data in Container 2
    docker exec container2 cat /shared-data/message.txt

Use the docker volume ls command to list all volumes and the docker volume rm command to remove the volume when you're done.

List All Volumes:

Use the docker volume ls command to list all volumes created on your system:

    docker volume ls

This displays each volume's driver and name.

Remove the Volume:

Once you're done testing and want to remove the volume you created, you can use the docker volume rm command followed by the volume name.

In our previous examples, the volume name is my-shared-volume:

    docker volume rm my-shared-volume

Note that you can only remove a volume if no container references it. This includes stopped containers, so remove the containers that mount the volume first (for example with docker rm). If you attempt to remove a volume that is still in use, you will receive an error message.

Remember that removing a volume is a permanent action and will result in the loss of any data stored in that volume. Make sure you've backed up any important data before removing a volume.

By using the docker volume ls and docker volume rm commands, you can easily manage and clean up volumes that are no longer needed in your Docker environment.
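The cleanup steps could be bundled as in the sketch below. The helper name `remove_volume` is an assumption; it force-removes the listed containers, so it is only suitable for test containers like the ones in this post.

```shell
# remove_volume VOLUME CONTAINER...
# Removes the given containers, then the volume they were using.
remove_volume() {
  rv_volume="$1"
  shift
  # Containers that mount the volume must be removed before the volume itself.
  docker rm -f "$@"
  docker volume rm "$rv_volume"
  # List any remaining volumes that no container references.
  docker volume ls --filter dangling=true
}

# Usage: remove_volume my-shared-volume container1 container2
```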

As we can see from the above,

Docker volumes are an essential component that lets containers handle data separation, sharing, and persistence. They make it possible for containers to store and retrieve data independently of the container's lifecycle, which improves application portability, scalability, and reliability.

Volumes facilitate data consistency, simple backup, and smooth data sharing amongst containers by separating data from the container filesystem. Docker volumes are a vital component of the Docker ecosystem because they enable developers to create flexible applications with effective data management and communication capabilities.

I hope you enjoy the blog post!

If you do, please show your support by giving it a like ❤, leaving a comment 💬, and spreading the word 📢 to your friends and colleagues 😊.
