External Reconnaissance Unveiled: A Deep Dive into Domain Analysis

Rizwan Syed

Introduction:

"Cybersecurity: Mastering External Reconnaissance" is a detailed guide for cybersecurity professionals, focusing on subdomain enumeration, HTTP probing, DNS resolving, port scanning, and technology stack analysis. The methodology chains several open-source tools to build a comprehensive picture of the target organization's digital footprint.

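The following tools, all used in the steps below, must be installed on the system: subfinder, anew, dmut, alterx, dnsx, httpx, naabu, jq, and webanalyze.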

0. Domain Discovery:

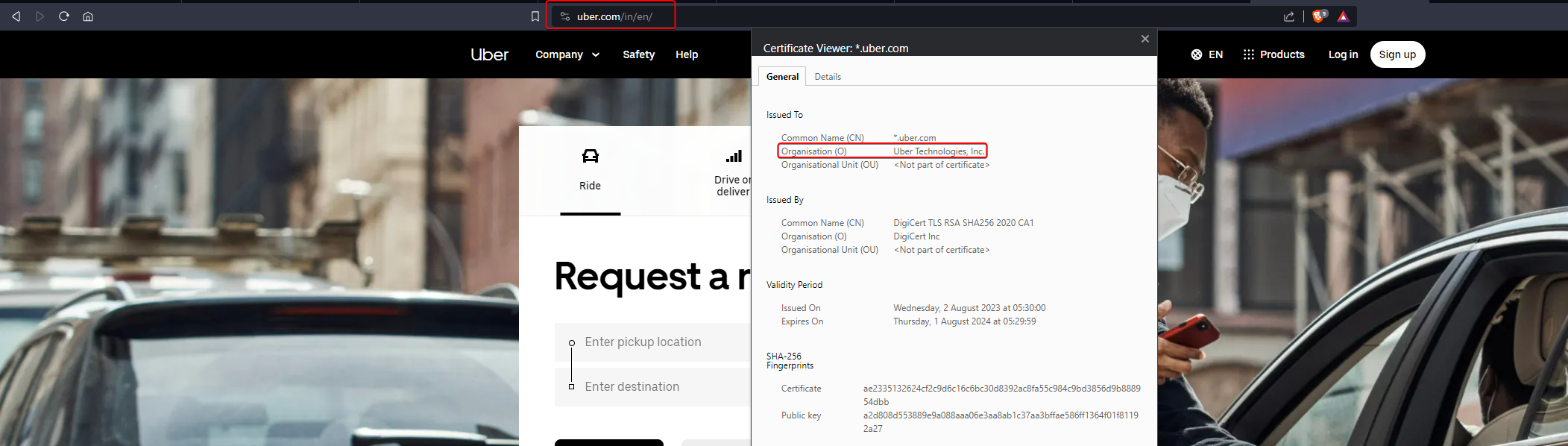

Once you've selected your target organization, visit their official website. Retrieve the organization's name from the SSL certificate in your browser or from the footer/contact page of the website. You can then use this organization name to discover additional apex (root) domains associated with your target organization.

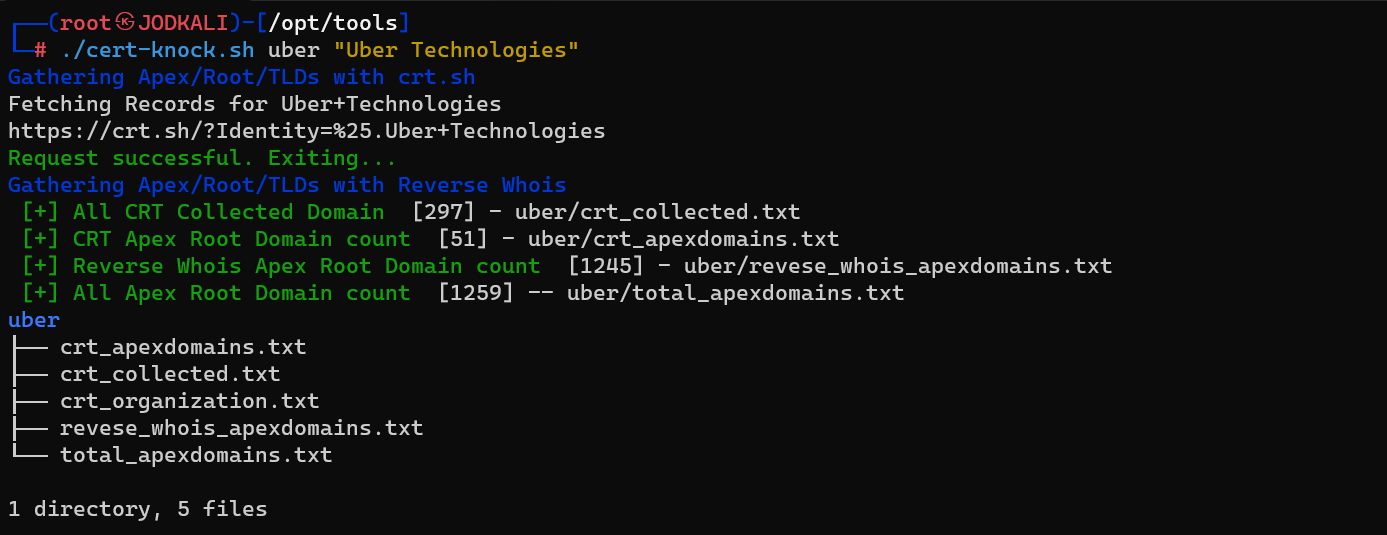

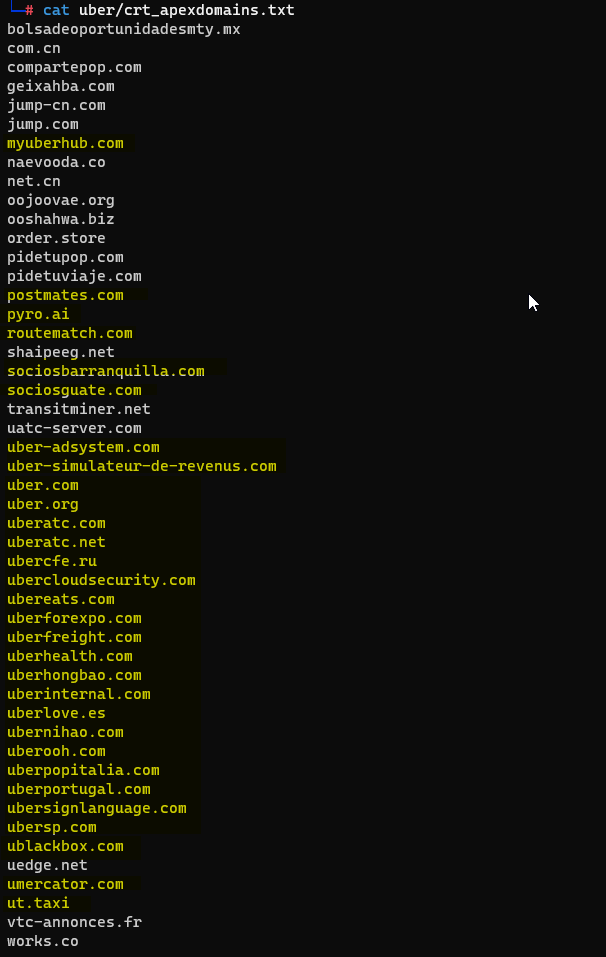

Unveiling the Apex/Root/TLDs with crt.sh and Reverse-Whois

Afterward, run the cert-knock.sh script with the organization's name as input. The script gathers apex domains from the crt.sh certificate transparency logs and conducts reverse WHOIS searches to find additional domains.

wget https://raw.githubusercontent.com/mr-rizwan-syed/chomtesh/main/core/cert-knock.sh -P MISC

chmod +x MISC/cert-knock.sh

./MISC/cert-knock.sh

./MISC/cert-knock.sh uber "Uber Technologies, Inc."

./MISC/cert-knock.sh uber "uber.com"

Please note that the results can include domains that are not owned by your target organization, so manual review is required to separate them.

Next, we can advance to the target domain for further processing.

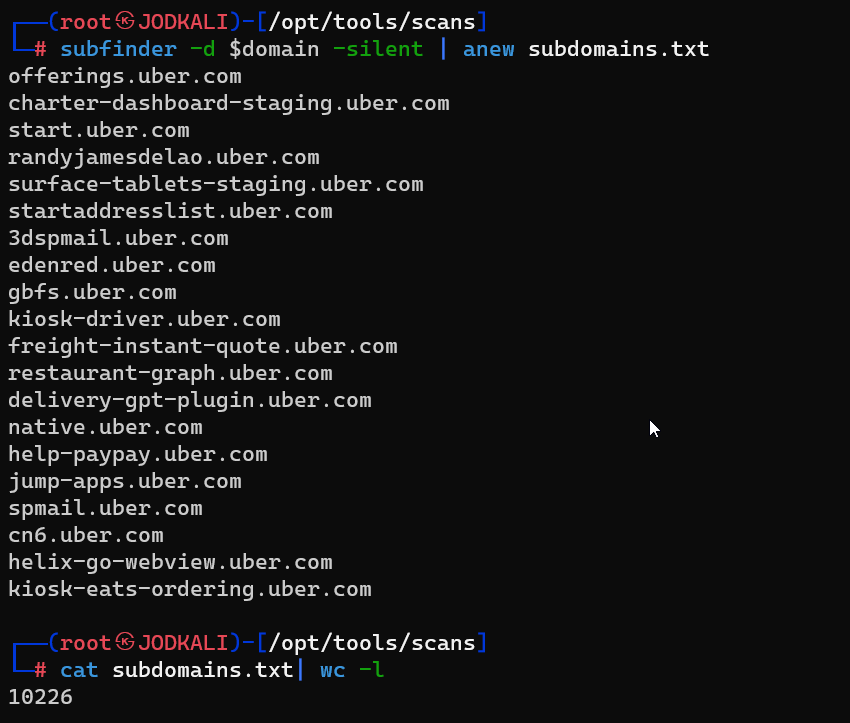

1. Subdomain Gathering - Passive

Easily gather subdomains with the help of the robust subfinder tool from ProjectDiscovery.

Note: For effective results, add your API keys to the subfinder configuration file.

domain=targetdomain.com

subfinder -d $domain -silent | anew subdomains.txt

subfinder -dL subdomains.txt -silent | anew subdomains.txt
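Both commands rely on anew to append only the lines that are not already present in subdomains.txt. A minimal Python sketch of that append-if-new behaviour is shown below, assuming one hostname per line. The function name `append_new_lines` is hypothetical, and this is an illustration rather than anew's actual implementation.

```python
from pathlib import Path
from typing import Iterable, List


def append_new_lines(path: Path, candidates: Iterable[str]) -> List[str]:
    """Append candidates that are not yet in `path`; return the lines that were added."""
    # Load what the file already contains so duplicates can be skipped.
    existing = set(path.read_text().splitlines()) if path.exists() else set()
    added = []
    with path.open("a") as handle:
        for raw in candidates:
            line = raw.strip()
            if line and line not in existing:
                handle.write(line + "\n")
                existing.add(line)
                added.append(line)
    return added
```

Calling it repeatedly with the output of different sources keeps a single deduplicated list while preserving the order in which hosts were first seen.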

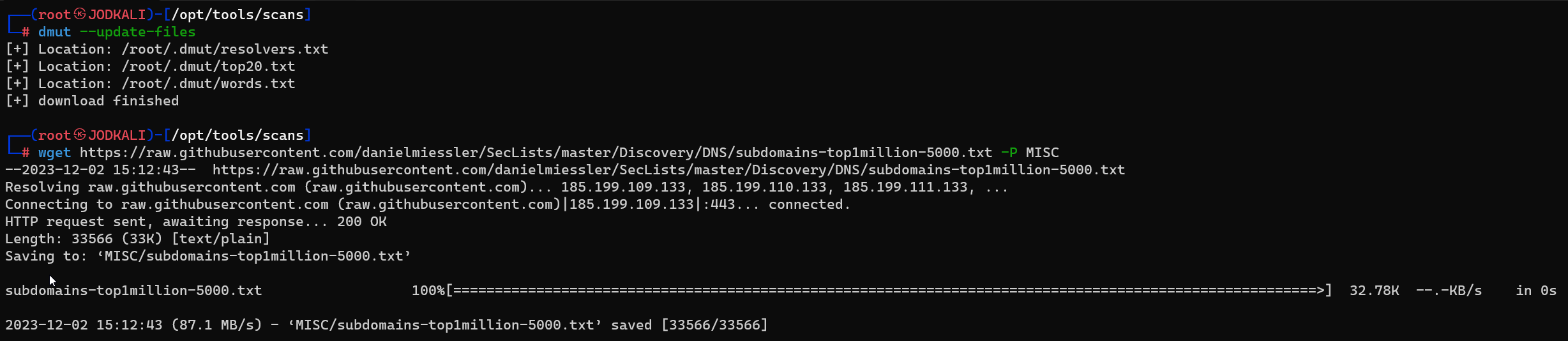

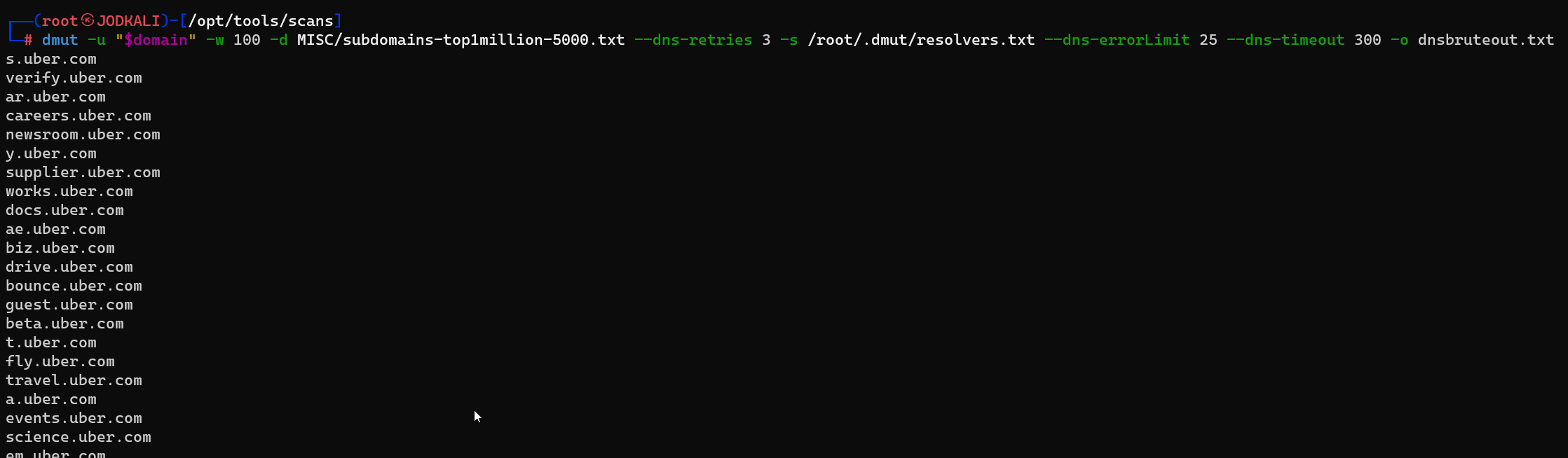

1.1 Subdomain Gathering - Active

This step uses dmut to brute-force subdomains with a standard wordlist. The AlterX tool then dynamically generates a custom wordlist through permutations and combinations of the subdomains already found, and DNSx resolves the candidates to discover additional subdomains.

# dmut --update-files

# wget https://raw.githubusercontent.com/danielmiessler/SecLists/master/Discovery/DNS/subdomains-top1million-5000.txt -P MISC

dmut -u "$domain" -w 100 -d MISC/subdomains-top1million-5000.txt --dns-retries 3 -s /root/.dmut/resolvers.txt --dns-errorLimit 25 --dns-timeout 300 -o dnsbruteout.txt

cat subdomains.txt| alterx | dnsx -silent -r /root/.dmut/resolvers.txt -wd $domain | anew alterx && cat alterx | anew -q dnsbruteout.txt

grep -Fxvf subdomains.txt dnsbruteout.txt > brutesubdomains.tmp

cat dnsbruteout.txt | anew -q subdomains.txt
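To make the permutation idea more concrete, here is a small Python sketch that combines known subdomain labels with extra words to produce candidates for DNS resolution. The three patterns and the function name `permute_subdomains` are assumptions chosen for illustration; AlterX uses its own, richer pattern engine.

```python
from typing import Iterable, List


def permute_subdomains(known: Iterable[str], words: Iterable[str], domain: str) -> List[str]:
    """Build candidate hostnames by combining known labels with extra words."""
    suffix = "." + domain
    word_list = [w.strip().lower() for w in words if w.strip()]
    known_set = {h.strip().lower() for h in known if h.strip()}
    candidates = set()
    for host in known_set:
        if not host.endswith(suffix):
            continue  # ignore anything outside the target domain
        prefix = host[: -len(suffix)]  # "api" for api.example.com
        for word in word_list:
            candidates.add(f"{word}.{prefix}{suffix}")  # dev.api.example.com
            candidates.add(f"{word}-{prefix}{suffix}")  # dev-api.example.com
            candidates.add(f"{prefix}-{word}{suffix}")  # api-dev.example.com
    # Only return names that are not already known.
    return sorted(candidates - known_set)
```

The candidates are only guesses; each one still has to be resolved before it is added to subdomains.txt.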

2. HTTP Probing

To identify active URLs through HTTP probing, leveraging the httpx tool from ProjectDiscovery is crucial. This tool not only confirms live hosts but also boasts additional features for comprehensive enumeration, including URL details, titles, status codes, and insights into the technology stack and web server.

cat subdomains.txt | httpx -fr -sc -content-type -location -timeout 30 -retries 2 -title -server -td -ip -cname -cdn -vhost -pa -random-agent -asn -favicon -r /root/.dmut/resolvers.txt -stats -si 120 -csv -csvo utf-8 -o httpxout -oa

cat httpxout.json | jq -r '.url' 2>/dev/null | anew -q urlprobed.txt

3. DNS Resolving

Leveraging the DNSx tool for DNS resolving allows for the extraction of both A and CNAME records, while also discerning the Content Delivery Network (CDN) in use. Furthermore, the tool facilitates the identification of subdomains directed to IP addresses linked to the target organization.

cat subdomains.txt | dnsx -silent -a -cname -re -cdn -r /root/.dmut/top20.txt | anew dnsreconout.txt

cat dnsreconout.txt | cut -d ' ' -f 2 | sort -u | awk -F'[][]' '{print $2}' | grep -vE 'autodiscover|microsoft' | anew -q dnsxresolved.txt
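The second command keeps the bracketed value from each line and drops entries that mention autodiscover or microsoft. The Python sketch below mirrors that logic under the assumption that every line looks like `host [value]`; the exact dnsx output layout can differ between versions, so treat the format and the function name `resolved_targets` as hypothetical.

```python
import re
from typing import Iterable, List

FIRST_BRACKET = re.compile(r"\[([^\]]+)\]")
NOISE = re.compile(r"autodiscover|microsoft")


def resolved_targets(lines: Iterable[str]) -> List[str]:
    """Collect unique bracketed values (IPs or CNAME targets), minus noisy entries."""
    targets = set()
    for line in lines:
        fields = line.split()
        if len(fields) < 2:
            continue  # no resolved value on this line
        match = FIRST_BRACKET.search(fields[1])
        if match and not NOISE.search(match.group(1)):
            targets.add(match.group(1))
    return sorted(targets)
```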

4. Quick Port Scanning

Quick port scanning uses naabu, a fast port scanner from ProjectDiscovery, to rapidly probe the target's hosts, pinpoint open ports, and assess potential entry points.

naabu -list subdomains.txt -top-ports 1000 -r /root/.dmut/resolvers.txt -exclude-cdn -cdn -stats -si 60 -j -o naabuout.json

cat naabuout.json | jq -r '"\(.ip)"' | anew aliveip.txt

cat naabuout.json | jq -r '"\(.ip):\(.port)"' | anew ipport.txt

cat naabuout.json | jq -r '"\(.host):\(.port)"' | anew hostport.txt

4.1 To Convert Naabu Port Scan Results from JSON to CSV

Note: Although naabu supports CSV output, it currently doesn't provide the desired format. Therefore, we are using this Python script to achieve the desired output.

Below is the Python code for converting the naabu JSON file to CSV:
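As a stand-in, here is a minimal sketch of such a converter; it is not the author's original script. It assumes naabu wrote one JSON object per line with the `host`, `ip`, and `port` fields used by the jq commands above, and it assumes the desired layout is one row per host/IP pair with the open ports joined by semicolons. The function names are hypothetical.

```python
import csv
import json
import sys
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def group_ports(lines: Iterable[str]) -> Dict[Tuple[str, str], List[int]]:
    """Group open ports by (host, ip) from naabu JSON-lines output."""
    grouped = defaultdict(set)
    for line in lines:
        try:
            record = json.loads(line)
        except ValueError:
            continue  # skip blank or malformed lines
        if not isinstance(record, dict) or "ip" not in record or "port" not in record:
            continue
        grouped[(record.get("host", ""), record["ip"])].add(int(record["port"]))
    return {key: sorted(ports) for key, ports in sorted(grouped.items())}


def write_csv(grouped: Dict[Tuple[str, str], List[int]], out_path: str) -> None:
    """Write one row per host/IP pair: host, ip, semicolon-separated ports."""
    with open(out_path, "w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(["host", "ip", "ports"])
        for (host, ip), ports in grouped.items():
            writer.writerow([host, ip, ";".join(str(p) for p in ports)])


# Expected invocation: python3 naabu_json2csv.py naabuout.json naabuout.csv
if __name__ == "__main__" and len(sys.argv) == 3:
    with open(sys.argv[1]) as source:
        write_csv(group_ports(source), sys.argv[2])
```

If a different column layout is needed, only `write_csv` has to change.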

Save the Python code above as naabu_json2csv.py, then run:

python3 naabu_json2csv.py naabuout.json naabuout.csv

5. HTTP Probing on uncommon open ports

cat hostport.txt | grep -vE ':(80|443)$' | anew -q hostport-services.txt

cat hostport-services.txt | httpx -fr -sc -content-type -location -timeout 30 -retries 2 -title -server -td -ip -cname -cdn -vhost -pa -random-agent -asn -favicon -r /root/.dmut/resolvers.txt -stats -si 120 -csv -csvo utf-8 -o httpxout-portprobe -oa

cat httpxout-portprobe.json | jq -r '.url' 2>/dev/null | anew -q urlprobed.txt
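The filtering step in this section can also be sketched in Python. The version below compares the port exactly, so entries such as 8080 or 8443 are kept while plain 80 and 443 are dropped. It assumes every entry has the `host:port` shape produced for hostport.txt; the function name `uncommon_services` is hypothetical.

```python
from typing import Iterable, List

DEFAULT_WEB_PORTS = {"80", "443"}


def uncommon_services(hostports: Iterable[str]) -> List[str]:
    """Keep unique host:port entries whose port is not a default web port."""
    kept = []
    seen = set()
    for raw in hostports:
        entry = raw.strip()
        host, sep, port = entry.rpartition(":")
        if not sep or not host:
            continue  # not a host:port pair
        if port in DEFAULT_WEB_PORTS or entry in seen:
            continue
        seen.add(entry)
        kept.append(entry)
    return kept
```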

6. To extract potential URLs

cat httpxout*.json | jq -r 'select(.status_code | tostring | test("^(20|30)")) | .final_url' | grep -v null | grep '^http' | anew -q potentialsdurls.tmp

cat httpxout*.json | jq -r 'select(.status_code | tostring | test("^(20|30)")) | select(.final_url == null) | .url' | anew -q potentialsdurls.tmp

cat potentialsdurls.tmp | sed 's/\b:80\b//g;s/\b:443\b//g' 2>/dev/null| sort -u| anew -q potentialsdurls.txt

rm potentialsdurls.tmp
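The same selection can be sketched in Python: keep responses whose status code starts with 20 or 30, prefer `final_url` over `url`, and remove default ports. This sketch assumes httpx wrote one JSON object per line with the `status_code`, `url`, and `final_url` fields used in the jq filters above. It drops a port only when it is the default for the URL's scheme, which is slightly stricter than a plain text substitution. The function names are hypothetical.

```python
import json
from typing import Iterable, List
from urllib.parse import urlsplit, urlunsplit

DEFAULT_PORTS = {"http": ":80", "https": ":443"}


def strip_default_port(url: str) -> str:
    """Remove :80 from http URLs and :443 from https URLs."""
    parts = urlsplit(url)
    suffix = DEFAULT_PORTS.get(parts.scheme)
    if suffix and parts.netloc.endswith(suffix):
        parts = parts._replace(netloc=parts.netloc[: -len(suffix)])
    return urlunsplit(parts)


def potential_urls(lines: Iterable[str]) -> List[str]:
    """Return sorted, unique URLs that answered with a 20x or 30x status."""
    urls = set()
    for line in lines:
        try:
            record = json.loads(line)
        except ValueError:
            continue  # skip blank or malformed lines
        if not isinstance(record, dict):
            continue
        if not str(record.get("status_code")).startswith(("20", "30")):
            continue
        # Prefer the post-redirect URL when httpx recorded one.
        url = record.get("final_url") or record.get("url")
        if url:
            urls.add(strip_default_port(url))
    return sorted(urls)
```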

7. Tech Stack Enumeration using WebAnalyze

Tech Stack Enumeration involves utilizing the WebAnalyze tool to analyze web applications and enumerate their technology stack.

Also, download the latest technologies.json file

# wget https://raw.githubusercontent.com/rverton/webanalyze/master/technologies.json -P MISC

webanalyze -hosts urlprobed.txt -apps MISC/technologies.json -silent -crawl 2 -redirect -output json 2>/dev/null | anew -q webtech.json

8. Horizontal Tech Enumeration

Horizontal enumeration streamlines the reconnaissance process by selectively scanning or checking URLs based on identified technologies. Instead of testing all tools on all URLs, this approach focuses on the specific technologies identified, optimizing efficiency, conserving resources, and providing a more targeted analysis of potential security issues.

Here's how horizontal enumeration works in the described scenario:

Technology Identification: Initial reconnaissance tools, such as httpx and webanalyze, are used to identify the technologies associated with the target URLs.

Selective Scanning: Rather than conducting comprehensive tests on all URLs, the reconnaissance process becomes more targeted.

Efficiency and Resource Optimization: Horizontal enumeration enhances efficiency by avoiding unnecessary tests on URLs that do not pertain to the identified technologies. Resource optimization is achieved as computational resources are directed towards targeted assessments, reducing the overall time and processing power required.

Focused Analysis: The reconnaissance efforts are concentrated on the technologies that have been identified, allowing for a more focused and in-depth analysis of potential vulnerabilities, misconfigurations, or security issues associated with those specific technologies.

Minimized Noise: Horizontal enumeration minimizes the noise generated during the reconnaissance process by excluding URLs that do not match the specified technology criteria. This results in a more concise and relevant set of findings.

In summary, horizontal enumeration optimizes reconnaissance by tailoring subsequent scans and checks based on the identified technologies. This approach enhances efficiency, conserves resources, and provides a more focused analysis of potential security aspects associated with the specific technologies in use.

The techdetect.sh script filters URLs by a specified technology, so that subsequent scans or checks target only the hosts where that technology was identified. It reads the JSON files generated by httpx and webanalyze and prints the URLs associated with the technology you name.

wget https://gist.githubusercontent.com/mr-rizwan-syed/1e1dfe3aad931fc9e8b49c3679cab965/raw/99e177b1e31c1fddba9b2f3caf44730f4570334c/techdetect.sh

chmod +x techdetect.sh

./techdetect.sh <technology_name>

This prints all URLs on which the specified technology was detected. For example:

./techdetect.sh wordpress

./techdetect.sh drupal

./techdetect.sh jenkins
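The filtering idea behind horizontal enumeration can be illustrated with a short Python sketch. It is not a port of techdetect.sh; it assumes the httpx JSON output stores detected technologies in a `tech` array (optionally as `Name:version`) next to a `url` field, and the function name `urls_for_technology` is hypothetical.

```python
import json
from typing import Iterable, List


def urls_for_technology(lines: Iterable[str], technology: str) -> List[str]:
    """Return sorted, unique URLs whose detected tech list contains `technology`."""
    wanted = technology.lower()
    urls = set()
    for line in lines:
        try:
            record = json.loads(line)
        except ValueError:
            continue  # skip blank or malformed lines
        if not isinstance(record, dict):
            continue
        # Compare names case-insensitively and ignore any ":version" suffix.
        names = [str(t).split(":", 1)[0].lower() for t in record.get("tech") or []]
        if wanted in names and record.get("url"):
            urls.add(record["url"])
    return sorted(urls)
```

The returned list can then be passed to a technology-specific scanner, so that only the relevant hosts are tested.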

Conclusion:

In summary, the guide equips cybersecurity professionals with the techdetect.sh script, a powerful tool for horizontal tech enumeration. By efficiently filtering results, it minimizes noise and focuses on relevant findings. The abundance of results, including live IP addresses, resolved domains, probed URLs, and technology insights in webtech.json, underscores the guide's effectiveness in providing a wealth of actionable information.

The diverse set of output files, such as aliveip.txt, dnsreconout.txt, httpxout.json, and more, demonstrates the thoroughness of the reconnaissance process. Professionals can leverage these results for in-depth analysis and decision-making in cybersecurity assessments. "Mastering External Reconnaissance" stands as a comprehensive resource for cybersecurity practitioners seeking precision and depth in their reconnaissance endeavours.
