Gemini is here!

Nwankwo Obasi

Nwankwo Obasi

Gemini is built from the ground up for multimodality — reasoning seamlessly across text, images, video, audio, and code.

The AI war of 2023 is heating up, and Google has just thrown down the gauntlet. After Microsoft's GPT-4 captured the early zeitgeist, Google responded with its highly anticipated Gemini model, which surpasses GPT-4 on nearly every benchmark.

Google has recently unveiled its highly anticipated Gemini AI model, which has been generating significant buzz in the tech world. In this blog post, we'll take a closer look at Gemini's capabilities, how it compares to competitors like GPT-4, and its potential impact on various industries.

Gemini's Capabilities:

Multimodal: Trained on text, sound, images, and video, allowing it to process information and respond in real-time across various modalities.

Impressive Demonstrations: Able to recognize objects and actions in video feeds, generate images, translate languages, and even compose music.

Logic and Spatial Reasoning: Can solve complex problems using logic and spatial reasoning, making it valuable for engineers and designers.

Comparison to GPT-4:

While both models are powerful language models, Gemini outperforms GPT-4 in many key areas:

Benchmarks: Gemini Ultra outperforms GPT-4 on nearly every benchmark, including the challenging Multitask Massive Language Understanding (MMLU) benchmark.

Capabilities: Gemini's multimodal capabilities give it an edge over GPT-4, which is limited to text-based inputs and outputs as advertised by Google despite the fact a later announced version of GPT-4 Vision is also a multimodal model.

Potential Impact:

Gemini has the potential to revolutionize various industries, including:

Software Development: AlphaCode 2, a companion project to Gemini, can outperform 90% of programmers as advertised by Google.

Engineering: Gemini's ability to reason and generate designs could be invaluable for civil engineers, architects, and other professionals.

Creative Industries: The ability to generate music and images opens up new possibilities for artists and designers.

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25137172/gemini_mm_02.png)

Challenges and Limitations:

Despite its impressive capabilities, Gemini still faces some challenges:

Accessibility: Currently, only the mid-range version of Gemini is available for public use on Bard and will be available on Google Cloud Platform and Vertex Ai starting December 13, 2023, offering developers direct API access for various applications.

Performance Gap: While Gemini Ultra outperforms GPT-4 on most benchmarks, it underperforms on the "Hell Swag" benchmark, which measures common sense and natural language understanding and that bugs me because why not?

Conclusion:

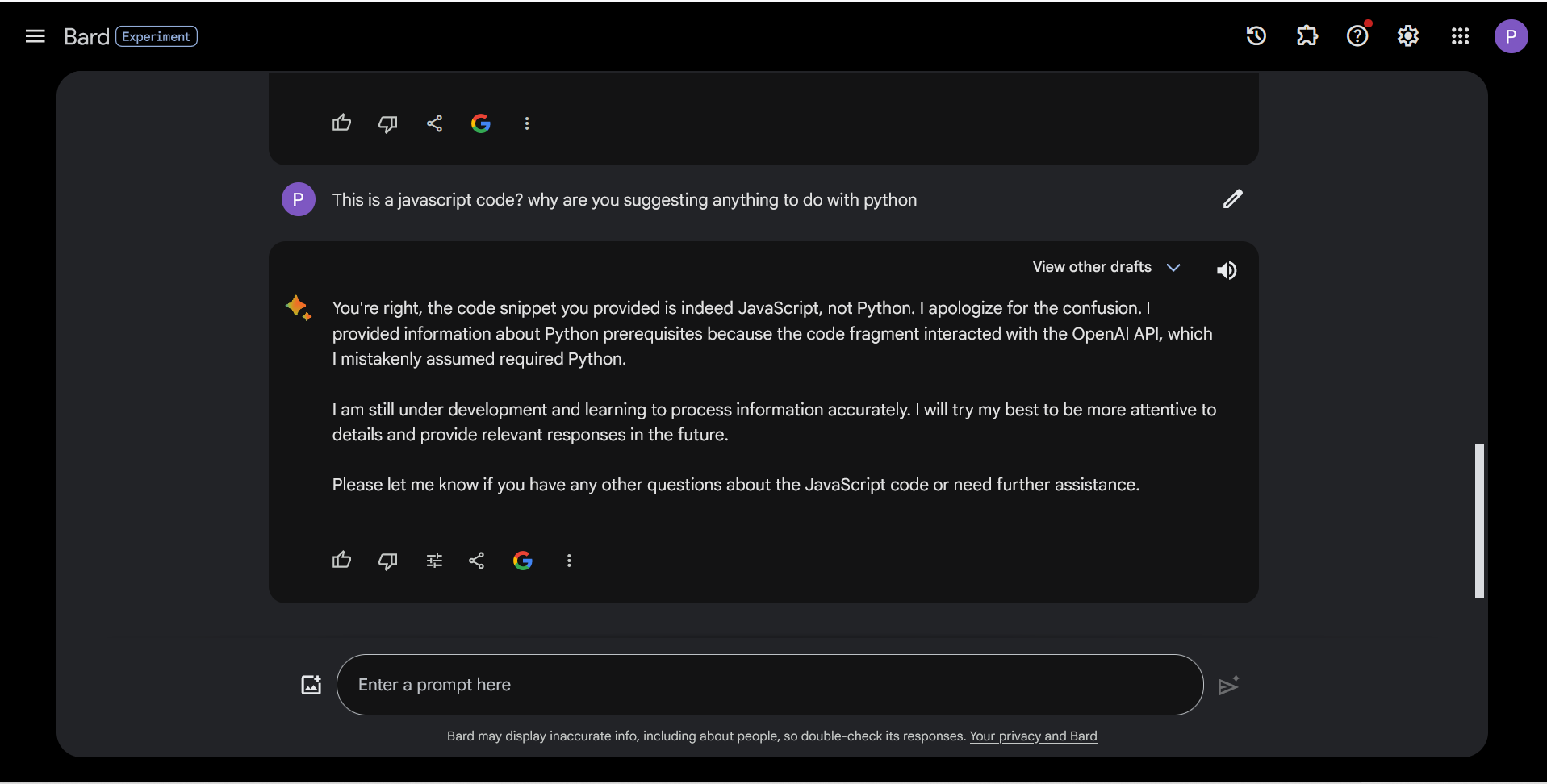

Google's Gemini AI model is a powerful tool with exciting possibilities. It excels at generating various outputs, including images from text prompts (like Stable Diffusion) and even music. Notably, it can generate not just text-to-audio but also image-to-audio, making it a versatile "anything-to-anything" model. With its potential to impact various industries, Gemini could significantly reshape the future of AI. It's been pr'd to have such excellent features but I recently prompted it with some javascript code and it advised me to install Python packages to run the code, lol. So until sufficient testing has been done by the community with its API I am less optimistic about it.

Subscribe to my newsletter

Read articles from Nwankwo Obasi directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Nwankwo Obasi

Nwankwo Obasi

Hello, I'm Nwankwo. I am a developer studying Remote Sensing and GIS. With +3 years programming experience. I intend to build more dev projects and apply AI.