Natural Language Processing

Kith Stack

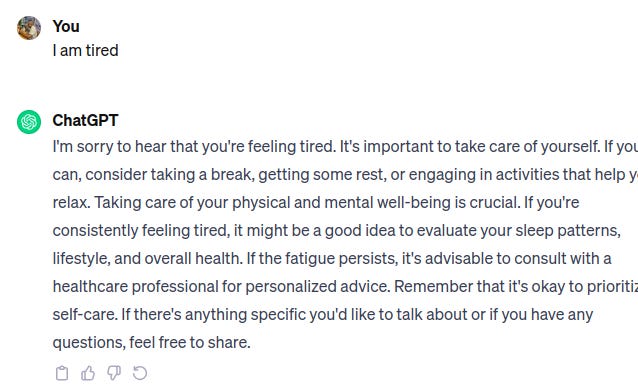

Weird, right? I mean, how in the world is ChatGPT able to come up with that? I do not know if you ever ask yourself questions like these, but I believe you would be interested in the answers nevertheless. What we have here is software that processes human language much like a human does.

Natural Language Processing, or NLP, is simply the processing of natural language, except that this time it is not a human doing the processing but software. A common mistake is to equate NLP with language translation. What sites such as Google Translate do is machine translation, which is only one application of NLP.

NLP as a whole is concerned with how a message is perceived in its context. That context could be the tone in which the message is said, the emphasis on certain words, or the knowledge the recipient already holds. This task usually comes easily to us humans, but there are times when we fail to process a message correctly: a person spoke in a monotone, their punctuation was off, or their joke just was not funny, so we did not laugh even though they expected us to.

The point I am trying to make is that interpreting natural language is not deterministic. A math equation will always produce the same value when the same values are fed into its variables, but the same words do not always carry the same meaning. By now you can probably picture how hard it is to turn human language-processing abilities into a computer algorithm.
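To make the contrast concrete, here is a small Python sketch. A function like `area` always returns the same result for the same inputs, but a word like "bank" needs its surrounding words before we can say which meaning is intended. The disambiguation below is a simplified version of the Lesk algorithm (pick the sense whose dictionary gloss shares the most words with the sentence). The two-entry dictionary and the stopword list are made up for illustration; real systems rely on far larger resources.

```python
from typing import Dict, Set

def area(width: float, height: float) -> float:
    # Deterministic: the same inputs always give the same output.
    return width * height

# Hypothetical mini-dictionary: two senses of "bank", each with a short gloss.
SENSES: Dict[str, str] = {
    "bank/finance": "an institution where people deposit and borrow money",
    "bank/river": "the sloping land along the edge of a river or stream",
}

# Small, hand-picked stopword list (an assumption, not a standard list).
STOPWORDS: Set[str] = {"a", "an", "the", "of", "or", "and", "at", "on", "he", "she", "where"}

def content_words(text: str) -> Set[str]:
    """Lowercase, strip surrounding punctuation, and drop stopwords."""
    return {w.strip(".,!?").lower() for w in text.split()} - STOPWORDS

def disambiguate(sentence: str) -> str:
    """Simplified Lesk: choose the sense whose gloss overlaps most with the sentence."""
    context = content_words(sentence)
    return max(SENSES, key=lambda sense: len(content_words(SENSES[sense]) & context))

print(area(3, 4))                                         # 12, every time
print(disambiguate("He deposited money at the bank."))   # bank/finance
print(disambiguate("He sat on the bank of the river."))  # bank/river
```

The same word "bank" gets a different answer depending on its neighbors, which is exactly what a fixed formula never has to deal with.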

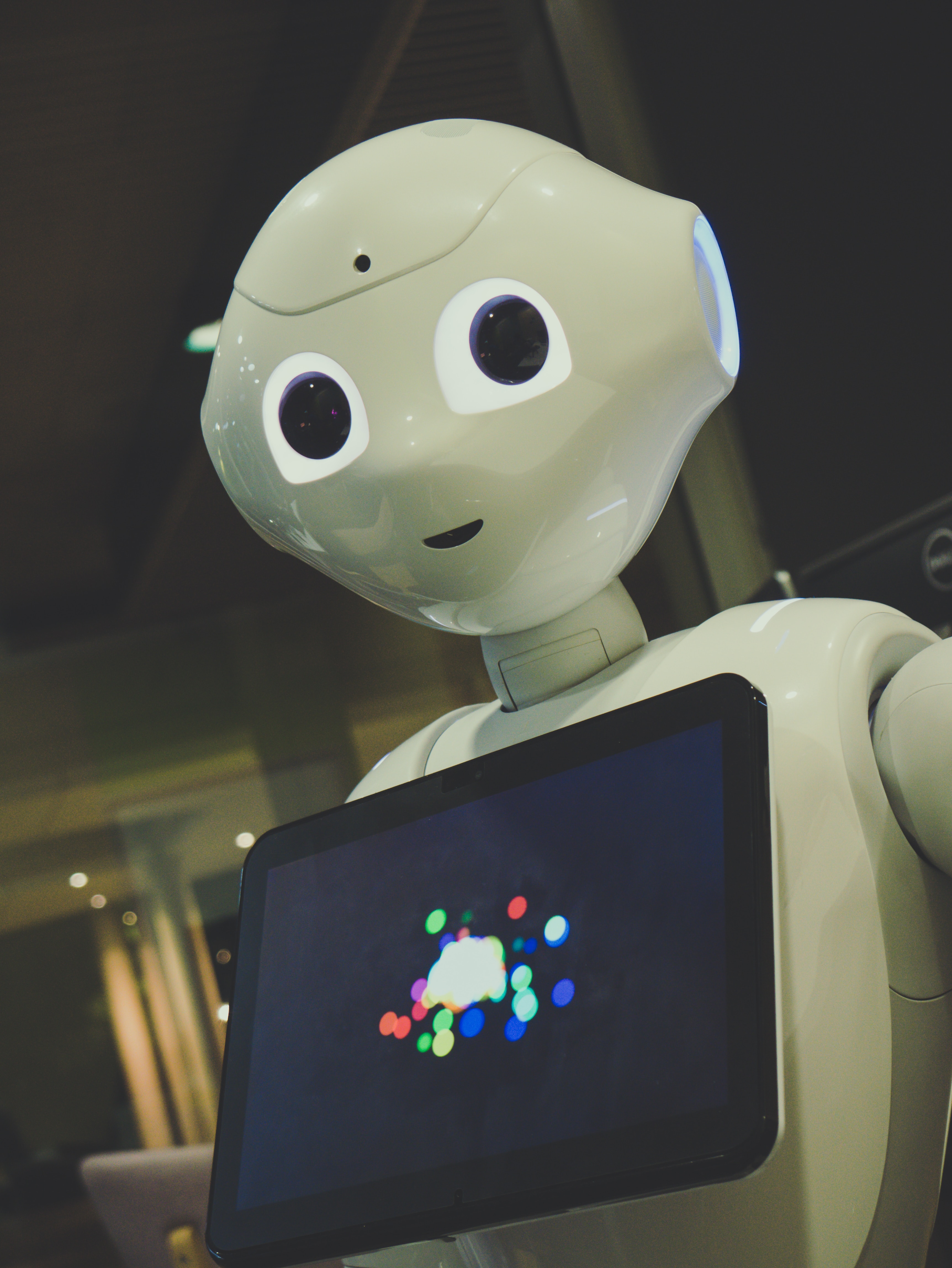

An algorithm able to do this would be intelligent, or should I say, artificially intelligent. It should come as no surprise, then, that NLP is a subfield of AI.

Human language processing is more nuanced than we might think. Consider a message as seemingly simple as "He burnt his hand, he put it in the fire". It is common sense that humans are not fireproof and that direct contact with fire results in a burn. Anyone reading this message will therefore understand, by that same common sense, that putting the hand in the fire is what caused the burn. How will a machine understand this statement without that basic knowledge? How will it know that even though I said "he burnt his hand" first, I do not mean the burn happened before the hand was put into the fire? Interesting, right? How do we expect a machine to know details that are not in the sentence at all: the common-sense details?
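Here is a tiny sketch of the problem. A program that assumes events happen in the order they are written gets this sentence backwards; only when we hand it the missing common-sense fact does it recover the right order. The clause splitter and the one-entry fact base are hypothetical simplifications (real systems would need a proper parser and an enormous store of such knowledge, which is exactly the difficulty). `str.removeprefix` needs Python 3.9 or later.

```python
from typing import Dict, List

# Hypothetical common-sense fact base: cause -> the effect it produces.
COMMON_SENSE: Dict[str, str] = {"put it in the fire": "burnt his hand"}

def split_clauses(sentence: str) -> List[str]:
    """Very rough clause splitter: split on commas and drop a leading 'he'."""
    return [c.strip().removeprefix("he ").strip() for c in sentence.lower().split(",")]

def naive_order(clauses: List[str]) -> List[str]:
    """Assume events happened in the order they were written."""
    return list(clauses)

def common_sense_order(clauses: List[str]) -> List[str]:
    """Move every known cause in front of the effect it produces."""
    ordered = list(clauses)
    for cause, effect in COMMON_SENSE.items():
        if cause in ordered and effect in ordered:
            ordered.remove(cause)
            ordered.insert(ordered.index(effect), cause)
    return ordered

clauses = split_clauses("He burnt his hand, he put it in the fire")
print(naive_order(clauses))         # ['burnt his hand', 'put it in the fire']  (wrong order)
print(common_sense_order(clauses))  # ['put it in the fire', 'burnt his hand']
```

Without the `COMMON_SENSE` entry, both functions would return the same (wrong) order: the knowledge is simply not in the sentence.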

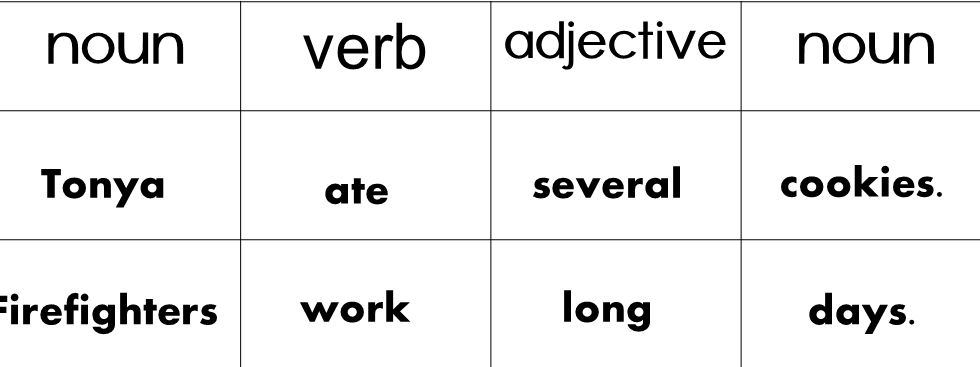

Incorporating these details is so tricky that the field of NLP has two whole categories of solutions for it. In the first, rules for processing a specific context are written by hand. Take grammar, for instance, where we could provide information on how sentences are organized.

These rules are then applied to the data, which is processed according to the rules for whatever the task might be: text summarization, say, or an AI chatbot replying to a message. Finally, the rules are refined and reapplied, again and again, to increase accuracy. This is what we call Rules-based NLP. There are many contexts for natural language processing, and each needs its own set of rules, which must be entered manually. This is daunting and time-intensive.
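A minimal sketch of Rules-based NLP, assuming the chatbot task mentioned above: hand-written regular-expression rules map a message to a reply. The rules themselves are made up for illustration. When a message slips through (the function returns `None`), the fix is to write another rule and reapply, which is the refine-and-reapply loop described in the paragraph, and also a hint at why covering every context by hand gets so time-intensive.

```python
import re
from typing import List, Optional, Tuple

# Hypothetical hand-written rules: (regex pattern, reply template).
Rule = Tuple[str, str]
RULES: List[Rule] = [
    (r"\b(hi|hello|hey)\b", "Hello! How can I help?"),
    (r"\bmy name is (\w+)", "Nice to meet you, {0}."),
    (r"\bweather\b", "Sorry, I cannot check the weather."),
]

def respond(message: str, rules: List[Rule]) -> Optional[str]:
    """Apply rules in order; the first pattern that matches decides the reply."""
    for pattern, template in rules:
        match = re.search(pattern, message, re.IGNORECASE)
        if match:
            # Captured groups (e.g. a name) are inserted into the template.
            return template.format(*match.groups())
    return None  # no rule covers this message

print(respond("My name is Ada", RULES))        # Nice to meet you, Ada.
print(respond("What's the forecast?", RULES))  # None: nobody wrote a rule for this

# Refinement: add a rule for the missed case and reapply.
RULES.append((r"\bforecast\b", "Sorry, I cannot check the weather."))
print(respond("What's the forecast?", RULES))  # Sorry, I cannot check the weather.
```

Rule order matters here: because the first match wins, a more specific rule has to be placed before a more general one that would otherwise catch the same message.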

This burden is not without a solution. It turns out a machine can deduce what a message means by studying a wide range of data and learning what similar text usually meant when it appeared in related settings. Put another way, a machine can learn from past data what the current data means. That sounds like machine learning, and yes, Statistical NLP, as this method is called, does use machine-learning algorithms to process language.
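The post does not name a particular algorithm, so as one classic example of Statistical NLP, here is a minimal naive Bayes text classifier written from scratch. Instead of hand-written rules, it counts how often each word appeared under each label in past (training) data and uses those counts to judge a new message. The six training sentences and the `pos`/`neg` labels are invented toy data; a real system would learn from thousands or millions of examples.

```python
import math
from collections import Counter, defaultdict
from typing import DefaultDict, List, Set, Tuple

Model = Tuple[Counter, DefaultDict[str, Counter], Set[str]]

def tokenize(text: str) -> List[str]:
    return text.lower().split()

def train(examples: List[Tuple[str, str]]) -> Model:
    """Count labels and per-label word occurrences in the training data."""
    label_counts: Counter = Counter()
    word_counts: DefaultDict[str, Counter] = defaultdict(Counter)
    vocab: Set[str] = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in tokenize(text):
            word_counts[label][word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab

def predict(model: Model, text: str) -> str:
    """Pick the label with the highest log-probability under naive Bayes."""
    label_counts, word_counts, vocab = model
    total_examples = sum(label_counts.values())
    best_label, best_score = "", -math.inf
    for label, count in label_counts.items():
        score = math.log(count / total_examples)  # prior P(label)
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            # Add-one (Laplace) smoothing keeps unseen words from zeroing the score.
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Invented toy training data: (text, label) pairs.
TRAIN = [
    ("i love this phone", "pos"),
    ("what a great movie", "pos"),
    ("this is a wonderful day", "pos"),
    ("i hate this phone", "neg"),
    ("what a terrible movie", "neg"),
    ("this is an awful day", "neg"),
]

model = train(TRAIN)
print(predict(model, "a great phone"))   # pos
print(predict(model, "an awful movie"))  # neg
```

Neither test sentence appears in the training data; the model generalizes from word statistics alone, which is the key difference from the rule-based sketch.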

So which method is better? Both have their upsides and downsides. We already mentioned the strain of writing a lot of rules in Rules-based NLP, a strain Statistical NLP does not have. That effort is not without benefit, though: because its rules emulate how humans perceive messages, Rules-based NLP tends to be more accurate in the cases its rules cover. The drawback is that, with so much to write, some rules never get written, so some scenarios are not catered for. Statistical NLP, since it trains on large datasets, accounts for more scenarios than Rules-based NLP does, at the cost of being less accurate.

Have you used such software before? Chances are you have, perhaps without realizing it. If you have used ChatGPT, then you have used NLP. Isn't that cool? NLP is widely used today, not only in ChatGPT but also in chatbots, text summarizers, and sentiment analysis tools (tools that can read emotion from text). Yes, Siri and Alexa as well.
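Text summarizers come in many forms. As a rough illustration only, here is a toy extractive summarizer in the statistical spirit of the earlier section: it scores each sentence by the average frequency of its words across the whole text and keeps the top-scoring sentences in their original order. The stopword list and the sample text are assumptions for this example; summarizers in real use are far more sophisticated.

```python
import re
from collections import Counter
from typing import List

# Small, hand-picked stopword list (an assumption for this example).
STOPWORDS = {"a", "an", "the", "is", "it", "and", "of", "to", "in", "that", "this"}

def words(text: str) -> List[str]:
    """Lowercase word tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]

def summarize(text: str, n_sentences: int = 1) -> List[str]:
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    freq = Counter(words(text))

    def score(sentence: str) -> float:
        tokens = words(sentence)
        # Average word frequency, so long sentences are not favored just for length.
        return sum(freq[w] for w in tokens) / max(len(tokens), 1)

    top = set(sorted(sentences, key=score, reverse=True)[:n_sentences])
    return [s for s in sentences if s in top]  # keep the original order

text = (
    "NLP lets software process human language. "
    "Chatbots use NLP to reply to messages. "
    "Summarizers use NLP to shorten long text. "
    "I had coffee today."
)
print(summarize(text))  # ['Chatbots use NLP to reply to messages.']
```

The off-topic sentence about coffee scores lowest because none of its words recur elsewhere in the text.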

It fascinates me how fast IT is growing. I actually spoke out loud with ChatGPT while consulting it on how to write this post. A “real” conversation. Yes, the free version now has voice conversations enabled. How crazy can this get? We are living our ancestors' dreams. I cannot wait for what comes next, and I cannot predict it either.

"Artificial intelligence, with all its revolutionary power, has the potential to reshape the world, challenging our understanding of humanity and the very fabric of society." —ChatGPT: XD.

Written by

Kith Stack

Engineering student working toward a bachelor's degree. 1st-century Christian. Interested in studying the Bible, Roman history, playing piano (haven't started yet), chess, AI, and psychology. I guess this platform will only have the AI stuff.