Retrieval-augmented version of an LLM

Manik Khandelwal

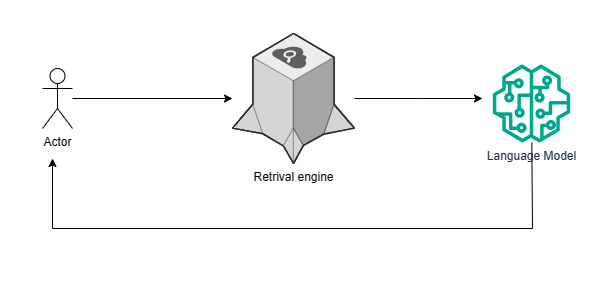

A retrieval-augmented version of a large language model (LLM) is an LLM that uses an information retrieval (IR) system to improve its responses; the approach is commonly called retrieval-augmented generation (RAG). Think of it like this: the LLM is a powerful engine, but it needs the right fuel and guidance to run smoothly and efficiently. The IR system acts as the fuel and the GPS, supplying the LLM with relevant information and context so it can generate more accurate and informative responses.

Here's how it works:

User Input: You ask the LLM a question or give it a task.

Information Retrieval: The IR system searches an external knowledge base (such as a document collection, a database, or the web) and retrieves the documents or passages most relevant to your input.

Augmentation: The retrieved passages are added to the LLM's input, typically by inserting them into the prompt alongside your original query. This extra context helps the LLM interpret the query or task and ground its response in the retrieved information.

Generation: The LLM processes this augmented input and uses its own language generation capabilities to produce an output, such as an answer to your question, a piece of creative writing, or a solution to a problem.
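The four steps above can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not a production design: the toy knowledge base, the bag-of-words cosine-similarity retriever, and the `generate` stub (which stands in for a real LLM call and simply returns the prompt it receives) are all hypothetical, as are the function names `retrieve`, `augment`, and `rag_answer`.

```python
import math
import re
from collections import Counter

# Hypothetical toy knowledge base, for illustration only.
KNOWLEDGE_BASE = [
    "The Eiffel Tower is located in Paris and was completed in 1889.",
    "Python is a programming language created by Guido van Rossum.",
    "The Great Wall of China is over 21,000 kilometres long.",
]

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Information retrieval: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        docs,
        key=lambda d: cosine_similarity(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]

def augment(query: str, passages: list[str]) -> str:
    """Augmentation: combine the retrieved passages and the query into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using the context below.\nContext:\n{context}\nQuestion: {query}\nAnswer:"

def generate(prompt: str) -> str:
    """Generation: hypothetical stub standing in for a real LLM call.

    A real system would send `prompt` to a language model here; this stub
    returns the prompt unchanged so the example runs without external services.
    """
    return prompt

def rag_answer(query: str, docs: list[str], k: int = 1) -> str:
    """User input -> retrieve -> augment -> generate."""
    passages = retrieve(query, docs, k)
    prompt = augment(query, passages)
    return generate(prompt)

if __name__ == "__main__":
    print(rag_answer("When was the Eiffel Tower completed?", KNOWLEDGE_BASE))
```

For the Eiffel Tower question, the retriever ranks the first document highest, so the prompt handed to `generate` contains the 1889 completion date. Real systems commonly swap the word-count scoring for learned embeddings and a vector index, but the retrieve, augment, generate structure stays the same.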

Benefits

Increased Accuracy

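Improved Factuality: Because the knowledge base can be updated independently of the model, the retrieved information can help the LLM stay current on recent events and factual knowledge that its training data does not cover.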

Enhanced Context: The additional context provided by the retrieved information can help the LLM understand the nuances of your query and generate a more relevant response.

Better Domain Specificity: Retrieval-augmented LLMs can be adapted to specific domains by using domain-specific knowledge bases, often without fine-tuning the model itself.
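To illustrate the domain-specificity point, the sketch below keeps the retriever fixed and swaps only the knowledge base. Both knowledge bases, their contents, and the word-overlap (Jaccard) scoring are hypothetical choices made for this example.

```python
import re

def tokens(text: str) -> set[str]:
    """Lowercase alphanumeric word tokens, as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, knowledge_base: list[str]) -> str:
    """Return the document with the highest Jaccard word overlap with the query."""
    q = tokens(query)

    def score(doc: str) -> float:
        d = tokens(doc)
        union = q | d
        return len(q & d) / len(union) if union else 0.0

    return max(knowledge_base, key=score)

# Hypothetical knowledge bases: only the data changes, not the retriever or the model.
GENERAL_KB = [
    "A contract is an agreement between two or more parties.",
    "Paris is the capital of France.",
]
LEGAL_KB = [
    "Clause 7.2: either party may terminate the contract with 30 days written notice.",
    "Clause 9.1: disputes are resolved by arbitration.",
]

query = "How much notice is needed to terminate the contract?"
print(retrieve(query, GENERAL_KB))
print(retrieve(query, LEGAL_KB))
```

For the termination question, the legal knowledge base yields the notice clause, while the general one has nothing relevant to offer; nothing about the model changes between the two runs.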

Challenges

Increased Complexity: Integrating an IR system with an LLM can add complexity to the overall system.

Computational Cost: Retrieving and processing information from external sources adds latency and compute at query time, and the longer prompts that result make generation more expensive.

Data Quality: The LLM's output is only as reliable as what is retrieved; inaccurate, outdated, or irrelevant passages can lead it to produce inaccurate answers.

Overall, retrieval-augmented LLMs are a promising approach to improving the performance of LLMs. They combine the strengths of LLMs and IR systems to generate more accurate, informative, and contextually relevant responses.
