Food Ordering Bot with Langchain and OpenAI function calling

Ritobroto Seth

Introduction

In this blog, we'll use OpenAI Function Calling to create a pizza ordering bot that interacts with users and takes their orders. The bot performs 3 operations:

Greet the user.

Take orders from the user.

Update the user on the order status.

Next, let's see the bot in action.

Pizza Order Chatbot In Action

This bot uses Langchain JS and OpenAI function calling to invoke the relevant backend functions when responding to the user. The application is built with the NextJS framework. Before diving into the code, here is a short introduction to OpenAI function calling.

What is OpenAI Function Calling

Function calling is a Chat Completions API feature in which we describe a set of functions to the model, and the model can choose to output a JSON object containing the arguments for calling one or more of those functions. The Chat Completions API does not call the function itself; instead, the model generates JSON that our code uses to call the function. The models gpt-3.5-turbo-1106 and gpt-4-1106-preview support function calling; more details can be found in OpenAI's function calling documentation.
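To make the request side concrete, here is a minimal sketch of the JSON body for a Chat Completions call that uses function calling. It uses the `functions` request field that this post relies on (OpenAI has since introduced the `tools` field as its successor). The type names and the `buildRequestBody` helper are hypothetical, and no network call is made: the point is that the functions are only described to the model, never executed by it.

```typescript
// Hypothetical types for a Chat Completions request body that uses
// the `functions` field.
interface FunctionSchema {
  name: string;
  description: string;
  parameters: Record<string, unknown>; // a JSON Schema object
}

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatCompletionRequestBody {
  model: string;
  messages: ChatMessage[];
  functions: FunctionSchema[];
}

// Assembles the body. The model never runs these functions; at most it
// answers with a function name and JSON-encoded arguments.
function buildRequestBody(
  model: string,
  messages: ChatMessage[],
  functions: FunctionSchema[]
): ChatCompletionRequestBody {
  return { model, messages, functions };
}

const body = buildRequestBody(
  "gpt-3.5-turbo-1106",
  [{ role: "user", content: "I want to order pizza" }],
  [
    {
      name: "orderPizza",
      description: "This function is called to accept the pizza orders.",
      parameters: {
        type: "object",
        properties: {
          pizzaSize: { type: "string", enum: ["NA", "small", "medium", "large"] },
        },
        required: ["pizzaSize"],
      },
    },
  ]
);

console.log(JSON.stringify(body, null, 2));
```

In this post, Langchain's `ChatOpenAI` builds a body of this kind for us from the schemas we bind to it.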

Code Walkthrough

Frontend Code

Since this blog focuses on OpenAI function calling, we will not dive deep into the UI. The UI has a text box for accepting user messages and a conversation container that displays the conversation between the user and the bot. We call pizza-order-endpoint whenever the user clicks the submit button or presses Enter to post a message.

The state variable userMessage, of type string, is bound to the text box. A second state variable, messages, is an array of IChatMessageItem objects that stores the message history between the user and the bot. When calling the backend endpoint pizza-order-endpoint, we pass the entire message history in the request payload.

```tsx
"use client";

import { FC, ReactElement, useState, ChangeEvent } from "react";
import ChatMessageBox from "../components/ChatMessageBox";
import { IChatMessageItem } from "../components/interfaces/IChatMessageItem";
import ChatMessageItemThread from "../components/ChatMessageItemThread";

export const OpenAiFunctionDemo: FC = (): ReactElement => {
  const [userMessage, setUserMessage] = useState<string>("");

  const initialBotMessage: IChatMessageItem = {
    type: "bot",
    text: "Welcome to Pizza Hub. How can I help you today?",
  };

  const [messages, setMessages] = useState<IChatMessageItem[]>([
    initialBotMessage,
  ]);

  const handleUserMessageChange = (event: ChangeEvent<HTMLInputElement>) => {
    setUserMessage(event.target.value);
  };

  const callOpenAiEndpoint = async () => {
    console.log("User Message: ", userMessage);

    // Add the message sent by the user to the messages state
    const userChatMessage: IChatMessageItem = {
      type: "user",
      text: userMessage,
    };
    setMessages((prevMessages) => [...prevMessages, userChatMessage]);

    // setMessages does not update `messages` immediately, so build the
    // history for the request from the current value explicitly
    const updatedMessages = [...messages, userChatMessage];
    console.log("Updated Messages: ", updatedMessages);
    setUserMessage("");

    // Send the entire conversation history to the backend
    const response = await fetch("/api/pizza-order-endpoint", {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ messages: updatedMessages }),
    });
    const responseData = await response.json();
    console.log("AI Bot Response: ", responseData);

    // Add the message sent by the bot to the messages state
    const botChatMessage: IChatMessageItem = {
      type: "bot",
      text: responseData.message,
    };
    setMessages((prevMessages) => [...prevMessages, botChatMessage]);
  };

  return (
    <div className="flex min-h-screen flex-col items-center justify-between pb-2 bg-white max-w-screen-lg mx-auto border-2">
      <div className="flex flex-col items-center justify-center bg-cyan-700 w-full h-64 bg-opacity-50">
        <h1 className="text-5xl font-bold mb-10">Pizza Store Bot</h1>
      </div>
      <ChatMessageItemThread messages={messages} />
      <ChatMessageBox
        userMessage={userMessage}
        handleUserMessageChange={handleUserMessageChange}
        handleMessageSubmitEvent={callOpenAiEndpoint}
      />
    </div>
  );
};

export default OpenAiFunctionDemo;
```

The IChatMessageItem interface is defined as follows:

```typescript
export interface IChatMessageItem {
  type: string;
  text: string;
}
```
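Because `type` is a plain `string`, a typo such as `"bott"` would compile without complaint. Here is a hedged sketch of a stricter variant that narrows it to the two values the UI actually uses, together with two small pure helpers (`appendMessage` and `buildPayload`, hypothetical names of my own) that mirror how `callOpenAiEndpoint` builds the new history and the request body.

```typescript
// Hypothetical stricter message type: only "user" and "bot" are allowed.
type ChatRole = "user" | "bot";

interface IChatMessageItem {
  type: ChatRole;
  text: string;
}

// Returns a new array instead of mutating the old one,
// which is how React state arrays are expected to be updated.
function appendMessage(
  history: IChatMessageItem[],
  type: ChatRole,
  text: string
): IChatMessageItem[] {
  return [...history, { type, text }];
}

// Serializes the history into the body posted to /api/pizza-order-endpoint.
function buildPayload(history: IChatMessageItem[]): string {
  return JSON.stringify({ messages: history });
}

const initial: IChatMessageItem[] = [
  { type: "bot", text: "Welcome to Pizza Hub. How can I help you today?" },
];
const next = appendMessage(initial, "user", "I want to order pizza");
console.log(buildPayload(next));
```

With this variant, writing `{ type: "bott", text: "..." }` becomes a compile-time error instead of a message that getAiResponse silently skips.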

Backend Code

Our API handler does two things. First, it calls the LLM with the function schemas and the conversation history, which ends with the latest user message. Next, it processes the model's response and executes the chosen function with the arguments provided by the model.

getAiResponse: This function is responsible for calling the OpenAI model.

processOpenAiResponse: This function is responsible for processing the OpenAI response.

Below is the code for our pizza-order-endpoint API handler:

```typescript
import { NextApiRequest, NextApiResponse } from "next";
import { ChatOpenAI } from "langchain/chat_models/openai";
import {
  HumanMessage,
  SystemMessage,
  BaseMessage,
  AIMessage,
} from "langchain/schema";
import { IChatMessageItem } from "@/app/components/interfaces/IChatMessageItem";

export default async function handler(
  req: NextApiRequest,
  res: NextApiResponse
) {
  console.log("Inside pizza-order API handler");

  // Full conversation history sent by the frontend
  const messages = req.body.messages;

  // 1. Ask the model which function to call
  const result = await getAiResponse(messages);

  // 2. Execute the chosen function and return its reply to the UI
  const aiMessage = processOpenAiResponse(result);

  res.status(200).json({ message: aiMessage });
}
```
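The handler passes `req.body.messages` straight to `getAiResponse` without checking it. A minimal sketch of a runtime type guard, assuming you want to reject malformed payloads with a 400 response, could look like this; `isChatMessageItem` and `isChatMessageList` are hypothetical helpers, not part of Next.js.

```typescript
interface IChatMessageItem {
  type: string;
  text: string;
}

// True if `value` is an object with string `type` and `text` fields.
function isChatMessageItem(value: unknown): value is IChatMessageItem {
  if (typeof value !== "object" || value === null) return false;
  const item = value as Record<string, unknown>;
  return typeof item.type === "string" && typeof item.text === "string";
}

// True if `value` is an array whose every element is a chat message.
function isChatMessageList(value: unknown): value is IChatMessageItem[] {
  return Array.isArray(value) && value.every(isChatMessageItem);
}

// Inside the handler, the guard could be used like this:
//   if (!isChatMessageList(req.body?.messages)) {
//     return res.status(400).json({ message: "Invalid messages payload" });
//   }
console.log(isChatMessageList([{ type: "user", text: "Hi" }])); // true
console.log(isChatMessageList([{ type: "user" }])); // false
```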

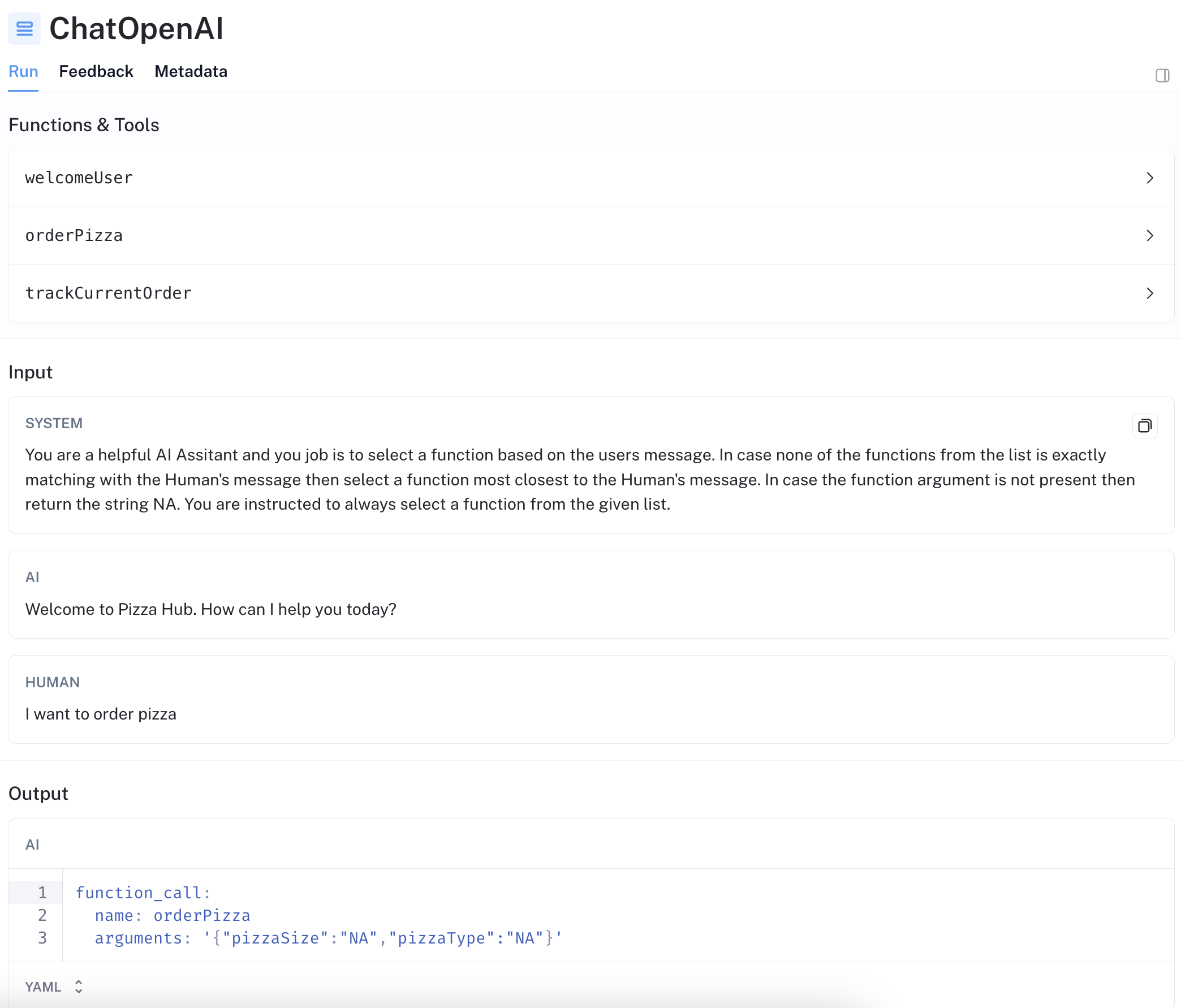

Understanding Input/Output using LangSmith

A screenshot from LangSmith gives a clearer picture of the input we send to OpenAI and the response we get back from the model.

First, the Functions & Tools section lists the function descriptions, or schemas. We send three function schemas to the LLM: greetUserFunctionSchema, pizzaOrderFunctionSchema, and trackPizzaOrderFunctionSchema. We will look at their definitions later in this blog.

greetUserFunctionSchema: Greets the user.

pizzaOrderFunctionSchema: Allows the user to place an order.

trackPizzaOrderFunctionSchema: Returns the latest status of the order to the user.

Next, the Input section shows the three kinds of messages sent to the LLM: SystemMessage, AIMessage, and HumanMessage.

SystemMessage: Represents a system message in a conversation. It contains the contextual details about the application as well as the instructions for the LLM.

AIMessage: Represents a message from the AI (the bot) in the conversation.

HumanMessage: Represents a human message in the conversation, that is, the message typed by the user.
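When Langchain sends these messages to OpenAI, SystemMessage becomes a message with the `system` role, AIMessage becomes `assistant`, and HumanMessage becomes `user`. The plain-TypeScript sketch below shows that mapping for this app's chat history without any Langchain dependency; `toOpenAiMessages` and `OpenAiChatMessage` are hypothetical names.

```typescript
// Same shape as the app's IChatMessageItem.
interface IChatMessageItem {
  type: string; // "user" or "bot" in this app
  text: string;
}

// A message as the Chat Completions API sees it.
interface OpenAiChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// SystemMessage -> "system", AIMessage -> "assistant", HumanMessage -> "user".
// The system prompt always comes first, followed by the history in order.
function toOpenAiMessages(
  systemPrompt: string,
  history: IChatMessageItem[]
): OpenAiChatMessage[] {
  const result: OpenAiChatMessage[] = [{ role: "system", content: systemPrompt }];
  for (const message of history) {
    if (message.type === "bot") {
      result.push({ role: "assistant", content: message.text });
    } else if (message.type === "user") {
      result.push({ role: "user", content: message.text });
    }
    // Items with any other type are skipped.
  }
  return result;
}

const wire = toOpenAiMessages("(system prompt)", [
  { type: "bot", text: "Welcome to Pizza Hub. How can I help you today?" },
  { type: "user", text: "I want to order pizza" },
]);
console.log(wire.map((m) => m.role).join(", ")); // system, assistant, user
```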

The SystemMessage is a very important prompt here because it dictates how the LLM should behave, so let's take a close look at it.

```text
You are a helpful AI Assistant and your job is to select a
function based on the user's message. In case none of the
functions from the list exactly matches the Human's message,
then select the function closest to the Human's message.
In case the function argument is not present, then return the
string NA. You are instructed to always select a function from
the given list.
```

The third and last part is the Output section, which shows the LLM's response. The function_call attribute contains the name of the chosen function and its arguments, encoded as a JSON string.

```json
{
  "function_call": {
    "name": "orderPizza",
    "arguments": "{\"pizzaSize\":\"NA\",\"pizzaType\":\"NA\"}"
  }
}
```

In this example, the user input was "I want to order pizza". The LLM understood that the user wanted to order a pizza, but the message did not mention the pizza size or the pizza type, so the LLM filled those values with NA, as instructed in our system message.
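Note that `arguments` is itself a JSON-encoded string inside the response, so it has to be parsed a second time. The sketch below (the `missingOrderFields` helper is hypothetical) parses it and lists the fields the model could not fill, treating both `NA` and an absent field as missing. That list is exactly what the bot needs to ask a follow-up question.

```typescript
// The fields orderPizza needs before an order can be placed.
const ORDER_FIELDS = ["pizzaSize", "pizzaType"] as const;

// Parses the JSON-encoded `arguments` string and returns the fields
// that are still missing ("NA" or not present at all).
function missingOrderFields(argumentsJson: string): string[] {
  const args = JSON.parse(argumentsJson) as Record<string, unknown>;
  return ORDER_FIELDS.filter(
    (field) => args[field] === undefined || args[field] === "NA"
  );
}

console.log(missingOrderFields('{"pizzaSize":"NA","pizzaType":"NA"}').join(", "));
// pizzaSize, pizzaType
console.log(missingOrderFields('{"pizzaSize":"large","pizzaType":"NA"}').join(", "));
// pizzaType
```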

Now let's look at the function schemas that are sent to the LLM.

Function Schemas

The function getFunctionSchemas returns an array of function schemas. A function schema consists of three parts: the function name, the function description, and the function parameters (described as a JSON Schema object). The LLM chooses a function based on its description, so the description should be accurate and contain all the relevant information about the function.

Here is how I described the functions:

| Function Name | Function Description |
| --- | --- |
| welcomeUser | This function is used for greeting users. Acknowledging the user with a welcome message. |
| orderPizza | This function is called to accept the pizza orders. If the pizza size or the pizza type is not mentioned then pass the value as NA in the parameters. |
| trackCurrentOrder | Checks for the Pizza order status. |

```typescript
const getFunctionSchemas = () => {
  const greetUserFunctionSchema = {
    name: "welcomeUser",
    description:
      "This function is used for greeting users. Acknowledging the user with a welcome message.",
    // A function without parameters is described by an empty object schema
    parameters: { type: "object", properties: {} },
  };

  const pizzaOrderFunctionSchema = {
    name: "orderPizza",
    description:
      "This function is called to accept the pizza orders. If the pizza size or the pizza type is not mentioned then pass the value as NA in the parameters.",
    parameters: {
      type: "object",
      properties: {
        pizzaSize: {
          type: "string",
          description: "The size of the pizza",
          enum: ["NA", "small", "medium", "large"],
        },
        pizzaType: {
          type: "string",
          description: "The type of pizza",
          enum: ["NA", "cheese", "pepperoni", "veggie", "chicken"],
        },
      },
      required: ["pizzaSize", "pizzaType"],
    },
  };

  const trackPizzaOrderFunctionSchema = {
    name: "trackCurrentOrder",
    description: "Checks for the Pizza order status.",
    parameters: {
      type: "object",
      properties: {
        orderNumber: {
          type: "string",
          description:
            "The order number of which the customer wants to check the status.",
        },
      },
      required: ["orderNumber"],
    },
  };

  return [
    greetUserFunctionSchema,
    pizzaOrderFunctionSchema,
    trackPizzaOrderFunctionSchema,
  ];
};
```

For the orderPizza function, we describe two parameters: pizzaSize and pizzaType. I wanted to restrict pizzaSize to small, medium, and large, so I defined an enum for this field in the properties. Similarly, pizzaType is restricted to a handful of options with an enum. Both enums also include NA, which lets the model signal a missing value as the function description instructs.
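An enum in the schema guides the model, but function calling in this mode does not guarantee that the generated arguments match the schema, so it is worth checking the values before they reach orderPizza. The following is a minimal sketch under that assumption; `findInvalidEnumValues` and the two interfaces are hypothetical and only cover the `enum` and `required` keywords used in this post's schemas, not full JSON Schema validation.

```typescript
interface PropertySchema {
  type: string;
  description?: string;
  enum?: string[];
}

interface ParametersSchema {
  type: "object";
  properties: Record<string, PropertySchema>;
  required?: string[];
}

// Returns a human-readable problem for every required property that is
// missing and every value that is not in its property's enum.
function findInvalidEnumValues(
  parameters: ParametersSchema,
  args: Record<string, unknown>
): string[] {
  const problems: string[] = [];
  for (const [name, property] of Object.entries(parameters.properties)) {
    const value = args[name];
    if (value === undefined) {
      if (parameters.required?.includes(name)) problems.push(`${name}: missing`);
      continue;
    }
    if (property.enum && !property.enum.includes(String(value))) {
      problems.push(`${name}: unexpected value ${JSON.stringify(value)}`);
    }
  }
  return problems;
}

// Same enums as pizzaOrderFunctionSchema.
const orderPizzaParameters: ParametersSchema = {
  type: "object",
  properties: {
    pizzaSize: { type: "string", enum: ["NA", "small", "medium", "large"] },
    pizzaType: { type: "string", enum: ["NA", "cheese", "pepperoni", "veggie", "chicken"] },
  },
  required: ["pizzaSize", "pizzaType"],
};

console.log(findInvalidEnumValues(orderPizzaParameters, { pizzaSize: "large", pizzaType: "cheese" }));
console.log(findInvalidEnumValues(orderPizzaParameters, { pizzaSize: "extra-large" }));
```

If the list is non-empty, the bot can fall back to asking the user again instead of placing an order with an unsupported size or type.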

Building OpenAI Request

In the getAiResponse function, we first build the input payload and then call the LLM chat completion endpoint with the invoke function. The messages argument holds the chat history of the conversation. After the system message, we wrap each message in an AIMessage or a HumanMessage based on its type. We send the entire message history in the API call so that OpenAI can consider the previous context before answering the user's message. To call the OpenAI endpoint, we initialize an instance of ChatOpenAI, a wrapper around the OpenAI LLM that uses the Chat endpoint, and bind the function schemas to it so they are sent with every request. The GPT model we use here is gpt-3.5-turbo-1106.

```typescript
const getAiResponse = async (messages: IChatMessageItem[]) => {
  const functionSchemaList = getFunctionSchemas();

  // Create a ChatOpenAI instance (temperature 0 keeps function selection as
  // consistent as possible) and bind the schemas so they go with every call
  const model = new ChatOpenAI({
    modelName: "gpt-3.5-turbo-1106",
    temperature: 0,
  }).bind({
    functions: functionSchemaList,
  });

  const newSystemMessage =
    "You are a helpful AI Assistant and your job is to select a function based on the user's message. " +
    "In case none of the functions from the list exactly matches the Human's message, then select the function closest to the Human's message. " +
    "In case the function argument is not present, then return the string NA. " +
    "You are instructed to always select a function from the given list.";

  // The system message goes first, followed by the conversation history
  const chatHistory: BaseMessage[] = [];
  chatHistory.push(new SystemMessage(newSystemMessage));
  messages.forEach((message: IChatMessageItem) => {
    if (message.type === "bot") {
      chatHistory.push(new AIMessage(message.text));
    } else if (message.type === "user") {
      chatHistory.push(new HumanMessage(message.text));
    }
  });

  const result = await model.invoke(chatHistory);
  console.log(result);
  return result;
};
```
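Sending the entire history works well for short chats, but every call re-sends every message, so token usage grows with the conversation and a long enough conversation would eventually exceed the model's context window. One simple mitigation, assuming a fixed message-count cap is acceptable (a token-based budget would be more precise), is to trim the history before calling getAiResponse. The `lastMessages` helper below is hypothetical.

```typescript
interface IChatMessageItem {
  type: string;
  text: string;
}

// Keeps only the last `maxMessages` items of the chat history. The system
// message is added separately inside getAiResponse, so trimming the
// history never removes it.
function lastMessages(
  messages: IChatMessageItem[],
  maxMessages: number
): IChatMessageItem[] {
  // slice(-0) would return the whole array, so handle 0 explicitly
  if (maxMessages <= 0) return [];
  return messages.slice(-maxMessages);
}

const history: IChatMessageItem[] = [
  { type: "bot", text: "Welcome to Pizza Hub. How can I help you today?" },
  { type: "user", text: "I want to order pizza" },
  { type: "bot", text: "Sure, please select the Pizza type and the Pizza Size." },
  { type: "user", text: "A large veggie one" },
];

// Only the two most recent messages would be sent to the model.
console.log(lastMessages(history, 2).map((m) => m.text));
```

In the handler, this would mean calling something like `getAiResponse(lastMessages(messages, 10))`, where 10 is an arbitrary example value. The trade-off is that details the user mentioned before the cut-off are no longer visible to the model.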

Processing OpenAI Response

Below is the raw OpenAI response as parsed and returned by Langchain. The only part we are interested in here is the function_call attribute under additional_kwargs. The content field is empty because the model chose to call a function instead of replying with text.

```text
AIMessage {
  lc_serializable: true,
  lc_kwargs: {
    content: '',
    additional_kwargs: { function_call: [Object], tool_calls: undefined }
  },
  lc_namespace: [ 'langchain', 'schema' ],
  content: '',
  name: undefined,
  additional_kwargs: {
    function_call: {
      name: 'orderPizza',
      arguments: '{"pizzaSize":"NA","pizzaType":"NA"}'
    },
    tool_calls: undefined
  }
}
```
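processOpenAiResponse calls `JSON.parse` on the arguments directly, and `JSON.parse` throws on malformed input, which would turn into a failed API request. The sketch below, assuming only the `content` and `additional_kwargs.function_call` fields shown in the log above, classifies the model output into a function call, a plain-text reply, or an error without throwing. `MessageLike`, `ParsedCall`, and `parseModelOutput` are hypothetical names, not Langchain types.

```typescript
interface FunctionCall {
  name: string;
  arguments: string; // JSON-encoded, as in the log above
}

// Only the fields of the Langchain message that we actually read.
interface MessageLike {
  content: unknown;
  additional_kwargs: { function_call?: FunctionCall };
}

type ParsedCall =
  | { kind: "function"; name: string; args: Record<string, unknown> }
  | { kind: "text"; text: string }
  | { kind: "error"; reason: string };

function parseModelOutput(message: MessageLike): ParsedCall {
  const call = message.additional_kwargs.function_call;
  if (call?.name) {
    try {
      // Functions without parameters may come back with "{}" or "".
      const parsed: unknown = JSON.parse(call.arguments || "{}");
      if (typeof parsed !== "object" || parsed === null || Array.isArray(parsed)) {
        return { kind: "error", reason: "arguments is not a JSON object" };
      }
      return { kind: "function", name: call.name, args: parsed as Record<string, unknown> };
    } catch {
      return { kind: "error", reason: `invalid JSON arguments for ${call.name}` };
    }
  }
  if (typeof message.content === "string" && message.content.length > 0) {
    return { kind: "text", text: message.content };
  }
  return { kind: "error", reason: "no function call and no text content" };
}

const sample: MessageLike = {
  content: "",
  additional_kwargs: {
    function_call: { name: "orderPizza", arguments: '{"pizzaSize":"NA","pizzaType":"NA"}' },
  },
};
console.log(parseModelOutput(sample));
```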

To process this response, I have written a function called processOpenAiResponse. It first reads the function name from the response and then calls the matching function. For functions that take parameters, it also parses the JSON-encoded arguments string.

```typescript
// `result` is the message returned by getAiResponse
const processOpenAiResponse = (result: BaseMessage) => {
  const functionCall = result.additional_kwargs.function_call;

  if (functionCall?.name) {
    const functionName = functionCall.name;
    console.log("Call method ", functionName);

    if (functionName === "welcomeUser") {
      return welcomeUser();
    } else if (functionName === "orderPizza") {
      // The arguments arrive as a JSON-encoded string
      const parsedArguments = JSON.parse(functionCall.arguments);
      const pizzaSize = parsedArguments.pizzaSize;
      const pizzaType = parsedArguments.pizzaType;
      return orderPizza(pizzaType, pizzaSize);
    } else if (functionName === "trackCurrentOrder") {
      const parsedArguments = JSON.parse(functionCall.arguments);
      const orderNumber = parsedArguments.orderNumber;
      return trackCurrentOrder(orderNumber);
    }
  } else if (result.content) {
    // The model answered in plain text instead of calling a function
    return result.content;
  }

  // Unknown function name or empty response: avoid sending `undefined` to the UI
  return "Sorry, I didn't understand that. Could you please rephrase?";
};
```
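The if/else chain works for three functions, but each new function means another branch. One alternative, sketched here with hypothetical names, is a lookup table from function name to handler, which also gives unknown names an explicit fallback. The handlers below are stand-ins that only echo their arguments; in the real code they would call welcomeUser, orderPizza(pizzaType, pizzaSize), and trackCurrentOrder(orderNumber). A `Map` is used instead of a plain object so that a name like `toString` cannot accidentally match an inherited property.

```typescript
type Handler = (args: Record<string, unknown>) => string;

// Reads a string argument, treating anything else as "NA".
const arg = (args: Record<string, unknown>, key: string): string =>
  typeof args[key] === "string" ? (args[key] as string) : "NA";

// Stand-in handlers keyed by the names used in the function schemas.
const handlers = new Map<string, Handler>([
  ["welcomeUser", () => "Welcome to Pizza Hub. How can I help you today?"],
  ["orderPizza", (args) => `orderPizza(${arg(args, "pizzaType")}, ${arg(args, "pizzaSize")})`],
  ["trackCurrentOrder", (args) => `trackCurrentOrder(${arg(args, "orderNumber")})`],
]);

const FALLBACK_REPLY = "Sorry, I didn't understand that. Could you please rephrase?";

// Runs the handler for the model-chosen function with already-parsed
// arguments; unknown names get the fallback reply.
function dispatch(functionName: string, args: Record<string, unknown>): string {
  const handler = handlers.get(functionName);
  return handler ? handler(args) : FALLBACK_REPLY;
}

console.log(dispatch("orderPizza", { pizzaSize: "large", pizzaType: "cheese" }));
// orderPizza(cheese, large)
console.log(dispatch("cancelOrder", {}));
// Sorry, I didn't understand that. Could you please rephrase?
```

With this layout, supporting a new function means adding one schema and one map entry.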

Below are the bodies of the three functions: welcomeUser, orderPizza, and trackCurrentOrder. Their implementation is not important here, because I defined them only to demonstrate the bot. In a real application these functions would likely look very different, for example querying and updating a database or calling other downstream services.

```typescript
const welcomeUser = () => {
  console.log("Welcome to Pizza Hub. How can I help you today? ");
  return "Welcome to Pizza Hub. How can I help you today?";
};

const orderPizza = (pizzaType: string, pizzaSize: string) => {
  if (pizzaType === "NA" && pizzaSize === "NA") {
    // Both details are missing: ask for the type and the size
    return "Sure, please select the Pizza type and the Pizza Size. We have the following options: Cheese, Pepperoni, Veggie, and Chicken pizza. We have 3 sizes available: Small, Medium, and Large.";
  }
  if (pizzaType === "NA") {
    // Ask the user to pick the pizza type from the available options
    return "Sure, please select the Pizza type. We have the following options: Cheese, Pepperoni, Veggie, and Chicken pizza.";
  }
  if (pizzaSize === "NA") {
    // Ask the user to pick the pizza size from the available options
    return "Sure, please select the Pizza size. We have 3 sizes available: Small, Medium, and Large.";
  }

  // console.log does not substitute "{}" placeholders, so use a template literal
  console.log(`Ordering pizza of type ${pizzaType} and size ${pizzaSize}`);
  const orderNumber = getRandomOrderNumber(1, 100);
  const orderSuccessMessage =
    `Order placed successfully for ${pizzaSize} ${pizzaType} pizza. ` +
    `Your order number is ${orderNumber}. Please mention your order number when tracking your order.`;
  return orderSuccessMessage;
};

// Returns a random integer between min and max (both inclusive)
function getRandomOrderNumber(min: number, max: number): number {
  return Math.floor(Math.random() * (max - min + 1)) + min;
}

const trackCurrentOrder = (orderNumber: string) => {
  if (orderNumber === "NA") {
    return "Please share the order number.";
  }
  // Demo logic: the status is derived from the order number alone
  const convertedNumber: number = Number(orderNumber);
  if (convertedNumber < 30) {
    return "Your pizza is out for delivery.";
  } else if (convertedNumber < 60) {
    return "Your Pizza is now being packed and will be out for delivery soon.";
  } else {
    return "Your pizza is now being prepared by our master chef.";
  }
};
```
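One weakness of trackCurrentOrder is that `Number("abc")` is `NaN`, and because `NaN < 30` and `NaN < 60` are both false, any non-numeric order number falls through to "being prepared by our master chef". A stricter version is sketched below; `parseOrderNumber` and `trackCurrentOrderStrict` are hypothetical names, and the 1 to 100 range is an assumption taken from `getRandomOrderNumber(1, 100)` in this demo.

```typescript
// Returns the order number as an integer, or null if it is not a valid
// demo order number. Accepts an optional leading "#", e.g. "#42".
function parseOrderNumber(raw: string): number | null {
  const trimmed = raw.trim().replace(/^#/, "");
  if (!/^\d+$/.test(trimmed)) return null;
  const value = Number(trimmed);
  // getRandomOrderNumber(1, 100) only produces values in this range.
  return value >= 1 && value <= 100 ? value : null;
}

// Same status rules as trackCurrentOrder, but invalid numbers are rejected.
function trackCurrentOrderStrict(orderNumber: string): string {
  if (orderNumber === "NA") return "Please share the order number.";
  const parsed = parseOrderNumber(orderNumber);
  if (parsed === null) {
    return "Sorry, I couldn't find that order number. Please check it and try again.";
  }
  if (parsed < 30) return "Your pizza is out for delivery.";
  if (parsed < 60) return "Your Pizza is now being packed and will be out for delivery soon.";
  return "Your pizza is now being prepared by our master chef.";
}

console.log(trackCurrentOrderStrict("#42"));
console.log(trackCurrentOrderStrict("abc"));
```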

Closing Remark

This has been a long blog with a lot of code to digest. In this post, I have explained OpenAI function calling and discussed its implementation. I have talked about function schemas and their components with examples. I have also discussed processing the structured OpenAI function calling response.

I trust this blog helped you understand the concept of function calling and its implementation using Langchain. If you have any questions regarding the topic, please don't hesitate to ask in the comment section. I will be more than happy to address them. I regularly create similar content on Langchain, LLM, and AI topics. If you'd like to receive more articles like this, consider subscribing to my blog.

If you're in the Langchain space or LLM domain, let's connect on LinkedIn! I'd love to stay connected and continue the conversation. Reach me at: linkedin.com/in/ritobrotoseth
