Unlocking AI Magic: Hugging Face Inference API in JavaScript

nidhinkumar


Introduction

In recent years, artificial intelligence (AI) and natural language processing (NLP) have taken giant strides, enabling developers to build intelligent applications that can understand and generate human-like text. One of the driving forces behind this progress is the Hugging Face Transformers library, which provides a collection of pre-trained models for various NLP tasks.

Now, imagine harnessing the power of Hugging Face's state-of-the-art models directly in your JavaScript applications. This is where the Hugging Face Inference API comes into play, allowing developers to seamlessly integrate powerful AI capabilities into their web projects.

This comprehensive guide will walk you through the process of using the Hugging Face Inference API in JavaScript, from setting up your development environment to making API calls and incorporating the results into your applications. Whether you're a seasoned JavaScript developer or just getting started with AI, this tutorial will equip you with the knowledge and skills to leverage the full potential of Hugging Face's cutting-edge models.

Hugging Face is a machine learning (ML) and data science platform and community that helps users build, train, and deploy machine learning models.

Create a developer account on Hugging Face

Open the Hugging Face website (huggingface.co), click the Sign Up button, and fill in the required details to create an account.

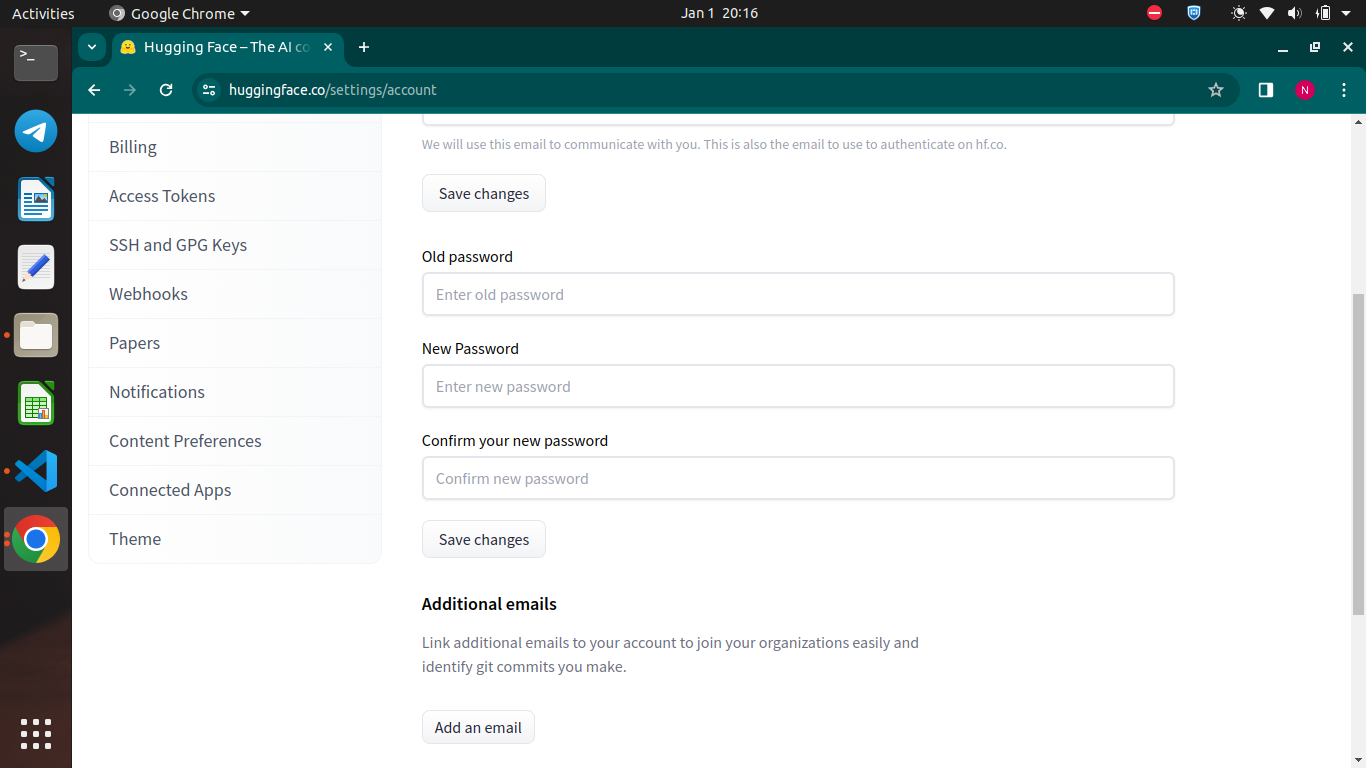

Once you have signed in to your Hugging Face account, click your profile picture and then click "Settings". The Settings page opens on your account details, as shown below:

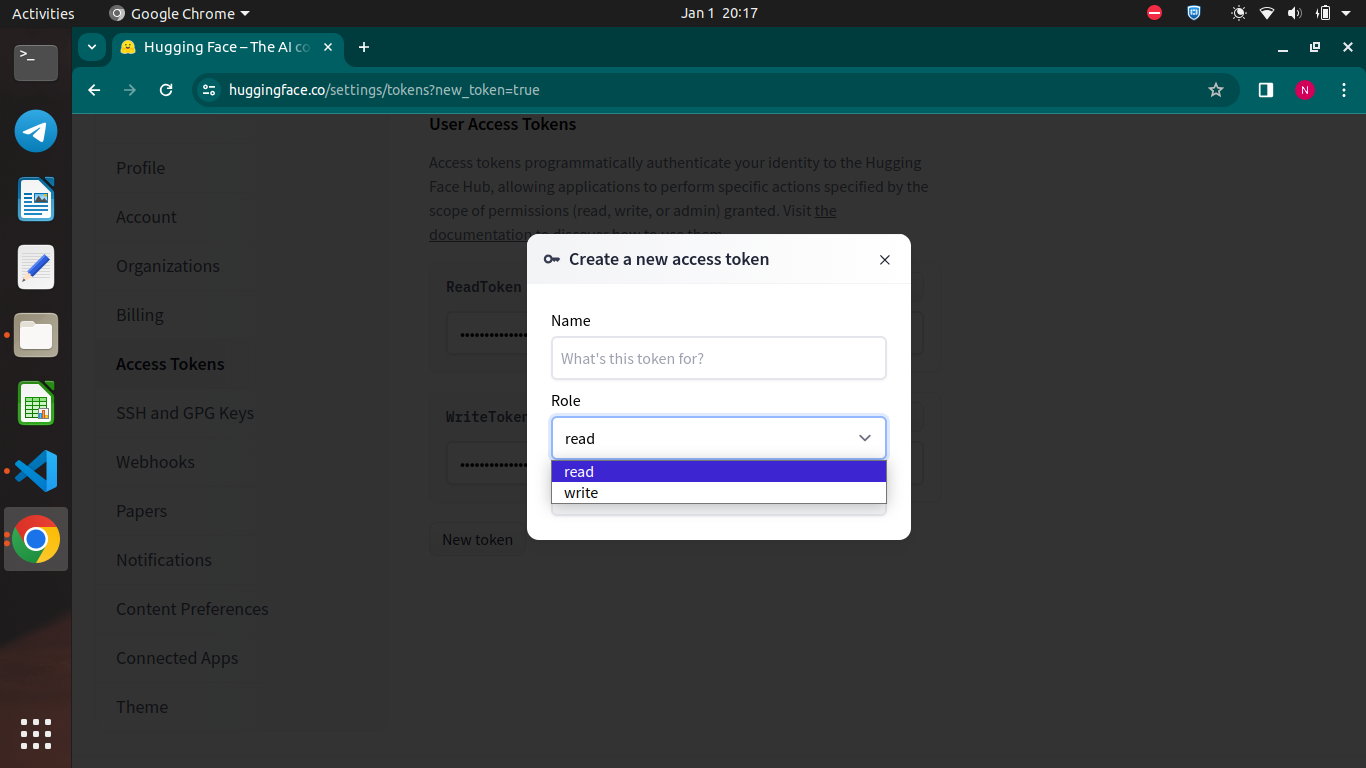

Open the "Access Tokens" section, where you will see a "New token" button on the right-hand side. Clicking it lets you choose between two token roles: "Read" and "Write".

Create two access tokens, one with the "Read" role and one with the "Write" role. The Read token is enough for the Inference API calls in this post. Treat both tokens like passwords. Once the tokens are created, we can move on to the "Models" section.
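Hard-coding a token in a source file makes it easy to leak by accident, for example by committing it to a public repository. A minimal sketch, assuming you export the Read token in an environment variable named `HF_TOKEN` (the variable name and the `getHfToken` helper are illustrative, not part of any Hugging Face API):

```javascript
// Read the Hugging Face access token from the environment rather than from
// source code. HF_TOKEN is an assumed variable name; use whatever you export.
function getHfToken(env = process.env) {
  const token = env.HF_TOKEN;
  if (!token) {
    throw new Error('HF_TOKEN is not set; export your Read token before running.');
  }
  return token;
}

// Example: build the Authorization header used by the Inference API call.
// const headers = { Authorization: `Bearer ${getHfToken()}` };
```

With this in place you would start the script as `HF_TOKEN=<your-read-token> node huggingFace.js` and use `getHfToken()` in place of the literal token placeholder.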

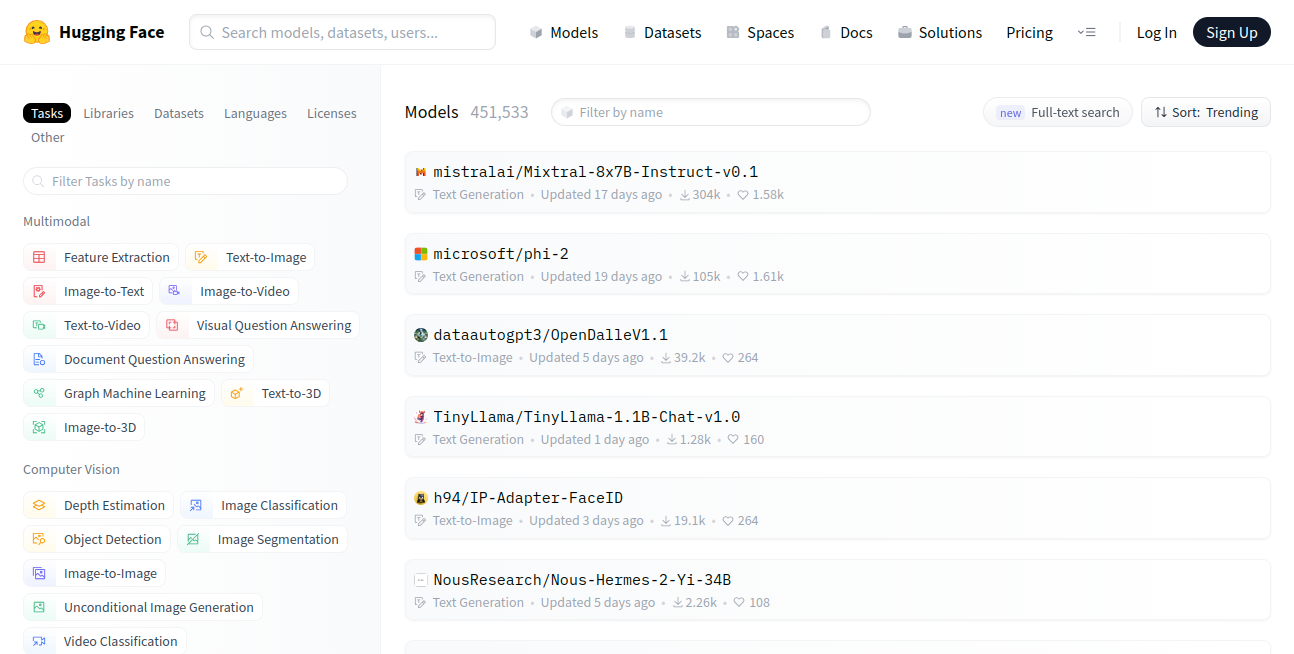

Hugging Face Models

Click "Models" in the header to browse the models on the Hub. They are grouped by task, such as "Image-to-Image", "Text-to-Image", and "Text-to-Video".

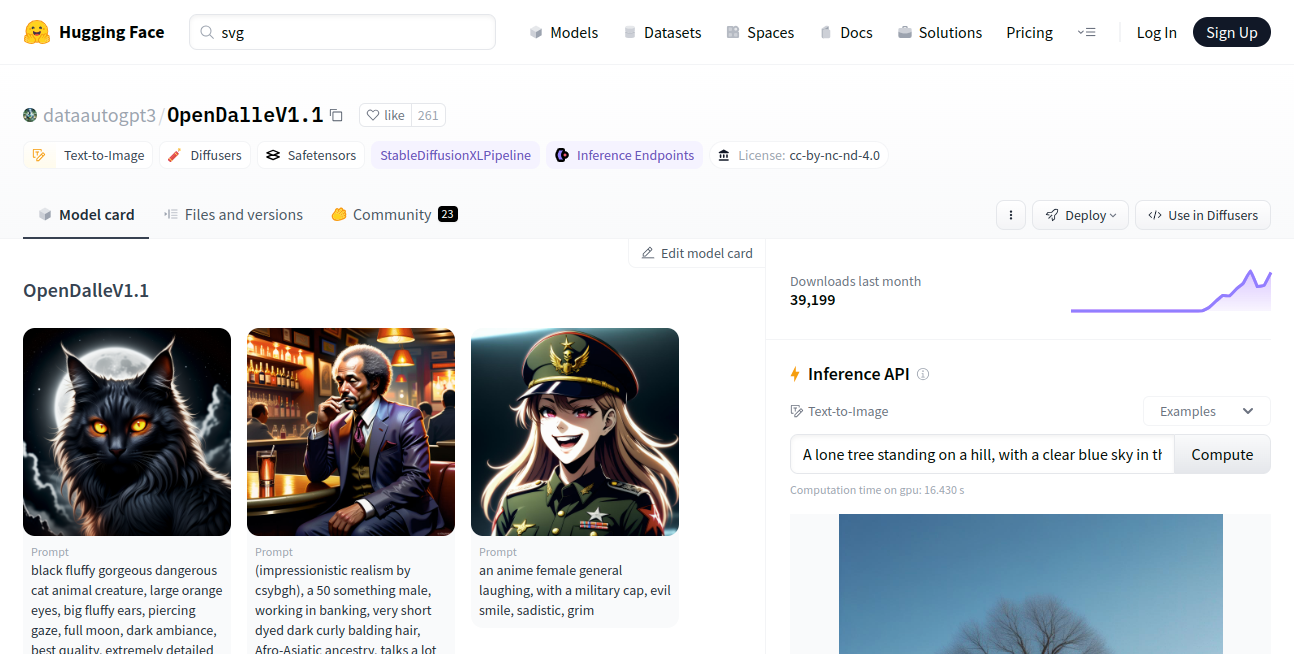

Explore the available models and pick one. For this tutorial we will use a Text-to-Image model (this post uses dataautogpt3/OpenDalleV1.1): select the Text-to-Image task and choose a model from the list, as shown below.

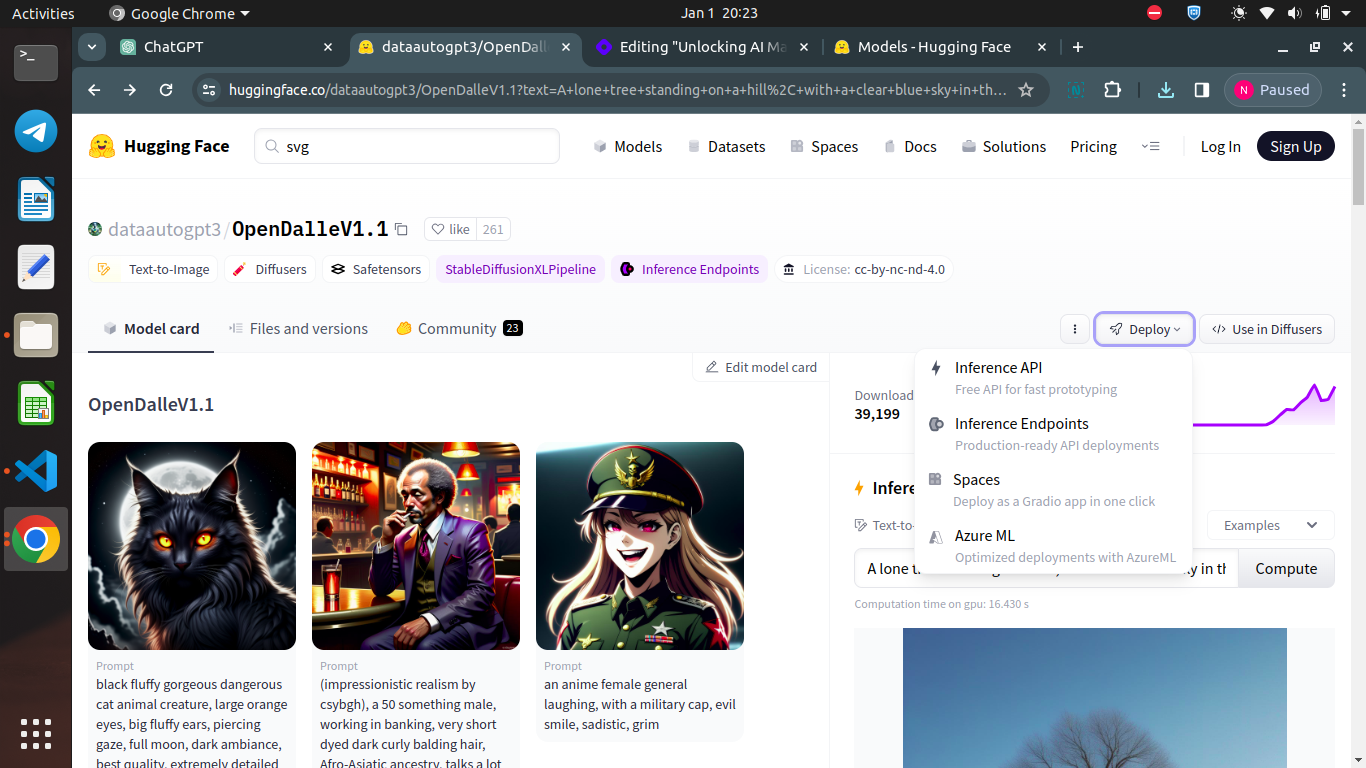

After selecting a model, click the "Deploy" button on the right-hand side, which shows the following options:

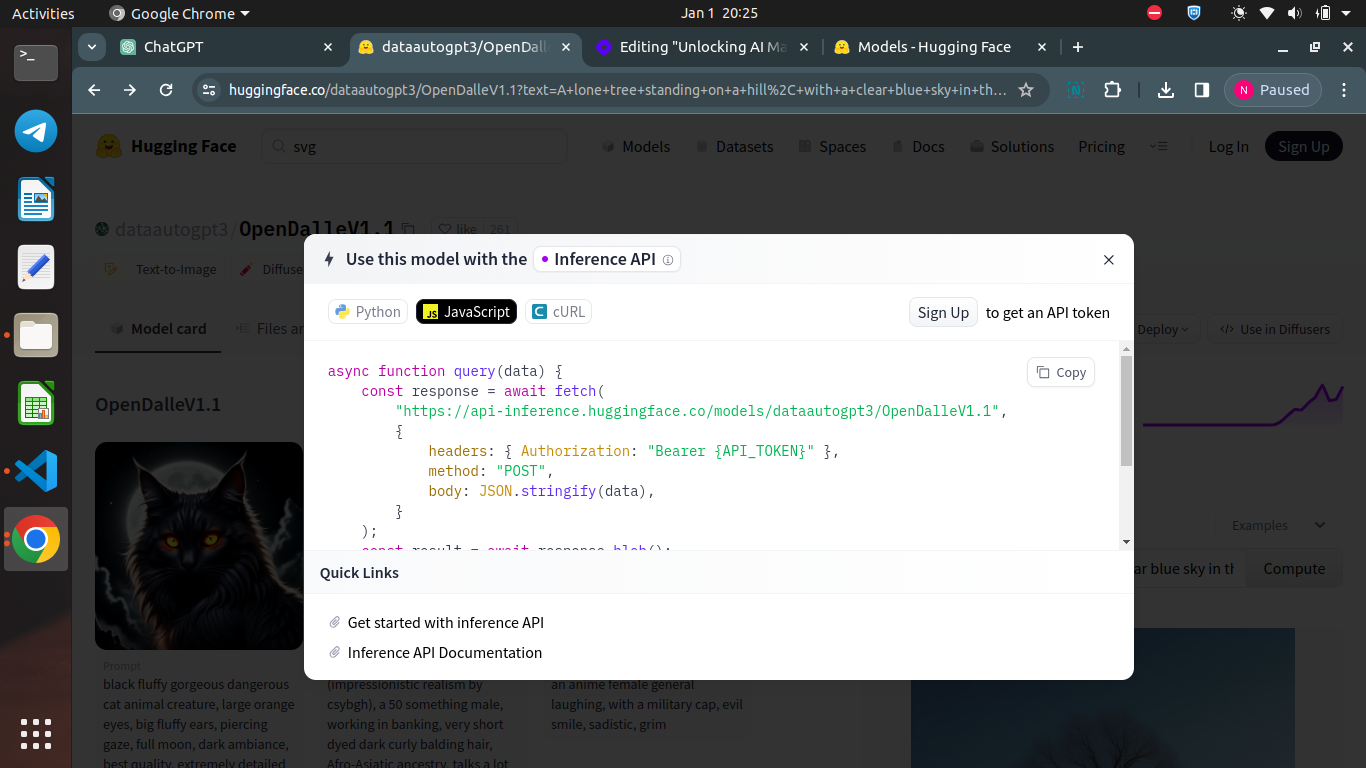

Click "Inference API" to see sample code for calling the model in Python, JavaScript, and cURL. Select JavaScript to see how the call is implemented.
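The generated JavaScript snippet always has the same shape: a POST request to the model's URL with a bearer token and a JSON body carrying `inputs`. A small sketch that factors this out; the `buildInferenceRequest` helper and the extra `Content-Type` header are assumptions of this example, not part of the generated snippet:

```javascript
// Base URL used by the JavaScript snippet in this post.
const INFERENCE_BASE_URL = 'https://api-inference.huggingface.co/models/';

// Hypothetical helper: build the URL and fetch() options for one Inference API
// call. modelId is the id shown at the top of the model page.
function buildInferenceRequest(modelId, token, inputs) {
  if (typeof modelId !== 'string' || modelId.length === 0) {
    throw new TypeError('modelId must be a non-empty string');
  }
  return {
    url: INFERENCE_BASE_URL + modelId,
    init: {
      method: 'POST',
      headers: {
        Authorization: `Bearer ${token}`,
        // Not in the generated snippet; declares that the body is JSON.
        'Content-Type': 'application/json',
      },
      body: JSON.stringify({ inputs }),
    },
  };
}
```

Usage: `const { url, init } = buildInferenceRequest('dataautogpt3/OpenDalleV1.1', token, prompt);` followed by `const response = await fetch(url, init);`. Targeting another model of the same task then only means changing the model id.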

Now we will call the Inference API from a Node.js application to retrieve an image from the text-to-image model.

Node.js Application

Create a new directory named "HuggingFace", open a terminal in it, and initialize a Node.js project with the following command:

```bash
npm init -y
```

Once the initial setup is complete, install the Hugging Face Inference client library:

```bash
npm i @huggingface/inference
```

Next, create a new file named "huggingFace.js" and add the following code. It calls the model's REST endpoint directly with the built-in `fetch()` (available globally in Node.js 18 and later), so the package installed above is not strictly required for this example.

async function query(data) {

const response = await fetch(

"https://api-inference.huggingface.co/models/dataautogpt3/OpenDalleV1.1",

{

headers: { Authorization: "Bearer YOUR_HF_TOKEN" },

method: "POST",

body: JSON.stringify(data),

}

);

const result = await response.blob();

return result;

}

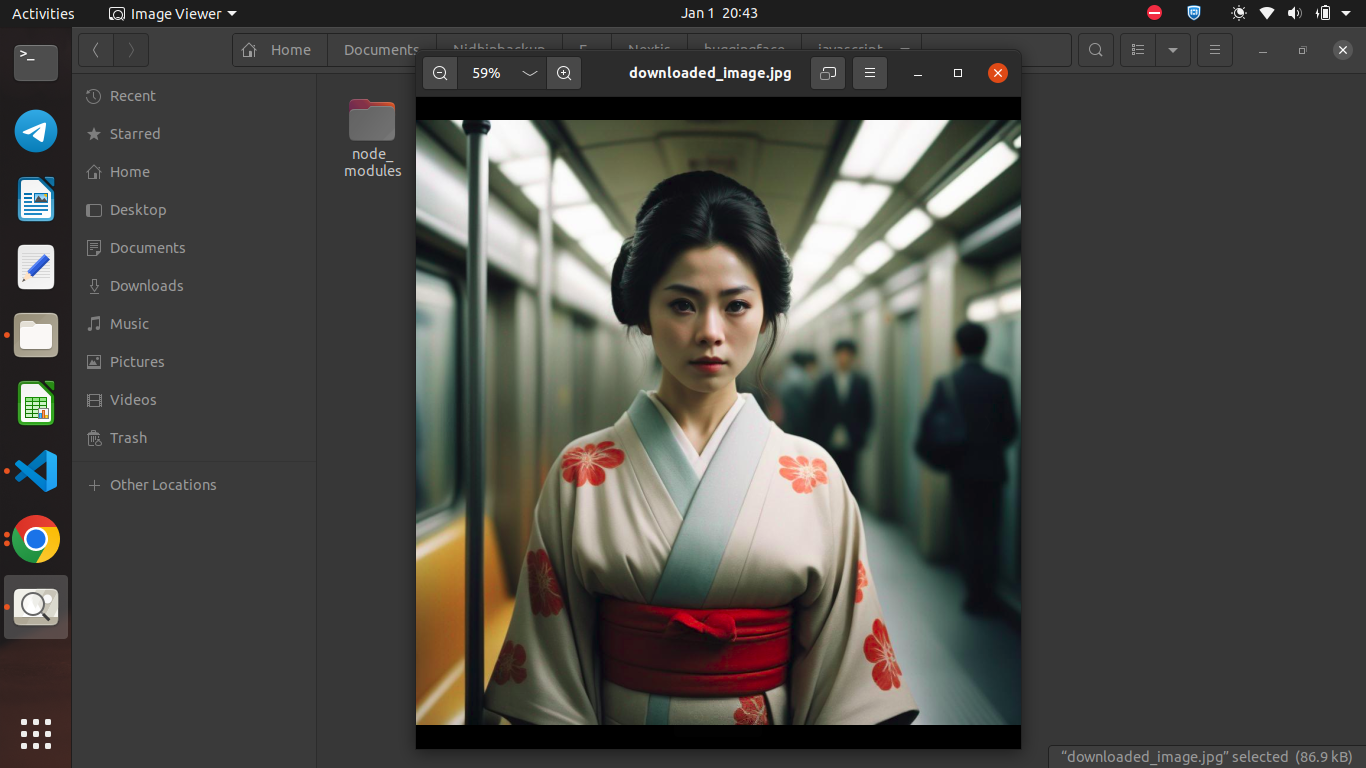

query({"inputs": "cinematic film still of Kodak Motion Picture Film: (Sharp Detailed Image) An Oscar winning movie for Best Cinematography a woman in a kimono standing on a subway train in Japan Kodak Motion Picture Film Style, shallow depth of field, vignette, highly detailed, high budget, bokeh, cinemascope, moody, epic, gorgeous, film grain, grainy"}).then(async (response) => {

// Use image

fs.writeFileSync('downloaded_image.jpg', Buffer.from(await response.arrayBuffer()));

console.log('Image downloaded successfully.');

});

In the code above, we call the OpenDalleV1.1 model by passing the input prompt to `query()`. The `query()` function sends the prompt to the model endpoint and authenticates with the access token created earlier, passed in the `Authorization` header.

Since this is a text-to-image model, the response body is the generated image, which `response.blob()` returns as a Blob. The script then converts it to a Buffer and saves it locally with the `fs` (filesystem) module.
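The script always writes `downloaded_image.jpg`, even if the model returns PNG data. A sketch that picks the file extension from the Blob's MIME type instead, assuming the Blob's `type` reflects the response's `Content-Type` header (which is how `response.blob()` behaves in Node.js 18+). The `saveImageBlob` helper and its extension table are illustrative:

```javascript
const fs = require('fs');
const path = require('path');

// Common image MIME types mapped to file extensions. This table is an
// assumption; extend it if a model returns another format.
const EXTENSIONS = { 'image/png': '.png', 'image/jpeg': '.jpg', 'image/webp': '.webp' };

// Write an image Blob to basePath + extension, choosing the extension from
// blob.type rather than assuming JPEG. Returns the path that was written.
async function saveImageBlob(blob, basePath) {
  // Drop parameters such as "; charset=utf-8" before the lookup.
  const type = (blob.type || '').split(';')[0].trim().toLowerCase();
  const ext = EXTENSIONS[type];
  if (!ext) {
    // A non-image body (e.g. a JSON error) should not be saved as a picture.
    throw new Error(`Expected an image, got "${blob.type || 'unknown'}"`);
  }
  const filePath = path.resolve(basePath + ext);
  fs.writeFileSync(filePath, Buffer.from(await blob.arrayBuffer()));
  return filePath;
}
```

Usage: inside the `.then()` callback, `const saved = await saveImageBlob(image, 'downloaded_image');` would write `downloaded_image.png` or `downloaded_image.jpg` depending on what the model sent.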

Now run the application by typing the following command in the terminal:

```bash
node huggingFace.js
```

Once the script finishes, you should find the generated image saved as downloaded_image.jpg in the project directory.
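The script above calls the REST endpoint with `fetch()` and never uses the `@huggingface/inference` package installed earlier. As an alternative, here is a sketch of the same request through that package, assuming its 2.x API, where `HfInference` exposes a `textToImage()` method that resolves to a Blob (newer major versions may differ, so check the package README). The client is passed in as a parameter so the function can be tried with a stub; `generateImage` is a hypothetical name:

```javascript
const fs = require('fs');

// Generate an image via an @huggingface/inference client and save it to disk.
// `hf` is expected to be an HfInference instance (v2.x API assumed).
async function generateImage(hf, prompt, outFile, model = 'dataautogpt3/OpenDalleV1.1') {
  // textToImage() sends the prompt to the model and resolves to a Blob.
  const blob = await hf.textToImage({ model, inputs: prompt });
  fs.writeFileSync(outFile, Buffer.from(await blob.arrayBuffer()));
  return outFile;
}

// Usage (assumes package v2.x and a token in HF_TOKEN):
// const { HfInference } = require('@huggingface/inference');
// const hf = new HfInference(process.env.HF_TOKEN);
// generateImage(hf, 'a lighthouse at dusk, film grain', 'lighthouse.jpg');
```

The client handles the URL and the `Authorization` header for you, so switching models only requires a different `model` argument.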

Rate Limits

The free Inference API may be rate limited under heavy use. If your account suddenly sends 10k requests, you are likely to receive 503 errors saying the model is loading. To prevent that, ramp your traffic up gradually, for example from 0 to 10k requests over the course of a few minutes, rather than sending everything at once.
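Two small helpers for the advice above, offered as a sketch rather than an official client feature: `fetchWithRetry` retries while the API answers 503, doubling the wait each time, and `rampSchedule` computes start offsets so the request rate grows linearly from zero instead of spiking. Both names, the retry count, and the delays are assumptions to tune for your workload:

```javascript
// Wait for the given number of milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Retry while the API answers 503 (e.g. model loading), with exponential
// backoff: baseDelayMs, 2x, 4x, ... fetchFn is injected (pass the global
// fetch in real code) so the logic can be tested without network access.
async function fetchWithRetry(fetchFn, url, init, { retries = 5, baseDelayMs = 1000 } = {}) {
  for (let attempt = 0; ; attempt++) {
    const response = await fetchFn(url, init);
    if (response.status !== 503 || attempt >= retries) {
      return response; // success, a non-retryable error, or out of retries
    }
    await sleep(baseDelayMs * 2 ** attempt);
  }
}

// Start offsets (ms) for `total` requests spread over `durationMs`, with the
// request rate rising linearly from 0. The k-th request (1-based) starts at
// durationMs * sqrt(k / total).
function rampSchedule(total, durationMs) {
  const offsets = [];
  for (let k = 1; k <= total; k++) {
    offsets.push(Math.round(durationMs * Math.sqrt(k / total)));
  }
  return offsets;
}
```

The square root comes from integrating a linear rate: if the rate climbs from 0 over a window of `T` ms and `n` requests are sent in total, the cumulative count at time `t` is `n·t²/T²`, so the k-th request falls at `T·sqrt(k/n)`. To use both, schedule each call with `setTimeout(() => fetchWithRetry(fetch, url, init), offset)` for every offset returned by `rampSchedule(n, 3 * 60 * 1000)`. Retries only smooth over transient 503s; the gradual ramp is what keeps you from triggering them in the first place.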

End Notes

Today you learned how to use the Hugging Face Inference API from JavaScript. I'll catch up with you in another post; until then, happy learning :)
