Coping with Challenges and Fine-Tuning: Enhancements in Synthetics Monitoring Implementation.

MohanRaj

MohanRaj

Are you tired of spending hours upon hours optimizing your synthetics? Do you find yourself constantly tweaking and adjusting, only to still end up with less-than-perfect results? If so, then this would help in improving your synthetics in terms of better performance of system.

What is monitoring / synthetic according to you?

System performance is a crucial aspect of any software or application. Performance could be measured from running programs, data processing, and responding to user input. This document highlights the tasks carried out to optimize synthetics which is an integral part of the measurement of the availability of various sub services. The aim of the exercise was to streamline the execution of Monitoring jobs / Jenkins jobs (synthetics) by removing various bottlenecks existing both at the application and infra level.

Which areas you can focus on service monitoring to improve it

Reduce the load on VMs by optimizing the number of pull requests.

Mitigate Disruption caused by frequent VM Reboots.

Minimizing the high disk space usage by build log retention strategy.

Monitoring agents’ unavailability notification by configuring New Relic/monitoring agents on VMs.

Converting portal-based subsystem access to API based.

Reduce the load on VM by optimizing the number of pull requests

Running high number of jobs & code pull from repo will lead to high network traffic to repository server, and it will impact performance of job execution on VM. When distribution of synthetics jobs is skewed or uneven, and specific VMs (virtual

machines) bear excessive workload, it can lead to challenge of job processing as well as network issues. This imbalance often results in jobs failures primarily caused by insufficient network bandwidth and processing bottlenecks on these overloaded VMs.

How to improve?

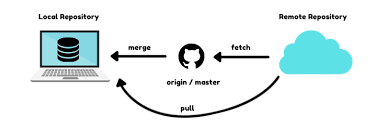

During execution, every job in monitoring pulls the latest test code from repository. However, as part of optimization we can implement a different approach to reduce pull count.Create a local repository corresponding to different branches on repo on each monitoring agent.

Dedicate monitoring jobs to pull the latest code from Git to the local repos on different VMs. (These jobs are required to run only whenever there is a change in code).

The synthetic jobs can pull the test code from common local repo to pull latest test code.

Identify the overloaded VMs and redistributed the jobs evenly across majority of the VMs.

Mitigate Disruption caused by frequent VM Reboots

One common challenge faced in the synthetic monitoring is the frequent rebooting of virtual machines (VMs), which can significantly impact the seamless operation of monitoring activities that run 24/7/365.This involves adding nodes / agents and establishing connections by running scripts recommended by the respective monitoring tools.

Challenges arising from OS reboots & Auto-upgrades.

Post auto upgrades, operating systems will reboot in-order to get the upgrade reflections, during this time the connections responsible for node’s / agent’s online availability will break & node will get stuck in offline status & it will prevent continuous job run.

Frequent Windows OS auto upgrades disrupt the synthetic monitoring if-

Monitoring agents are configured on windows VMs & windows OS has the auto-upgrade feature enabled, triggering upgrades at regular intervals.

Upon completion of the upgrade, an automatic system reboot is initiated.

How to Prevent?

To prevent this disruption, users can customize installations and schedule the upgrade process at a convenient time, ensuring the smooth continuation of synthetic monitoring operations.

Minimizing the high disk space usage by build log retention strategy.

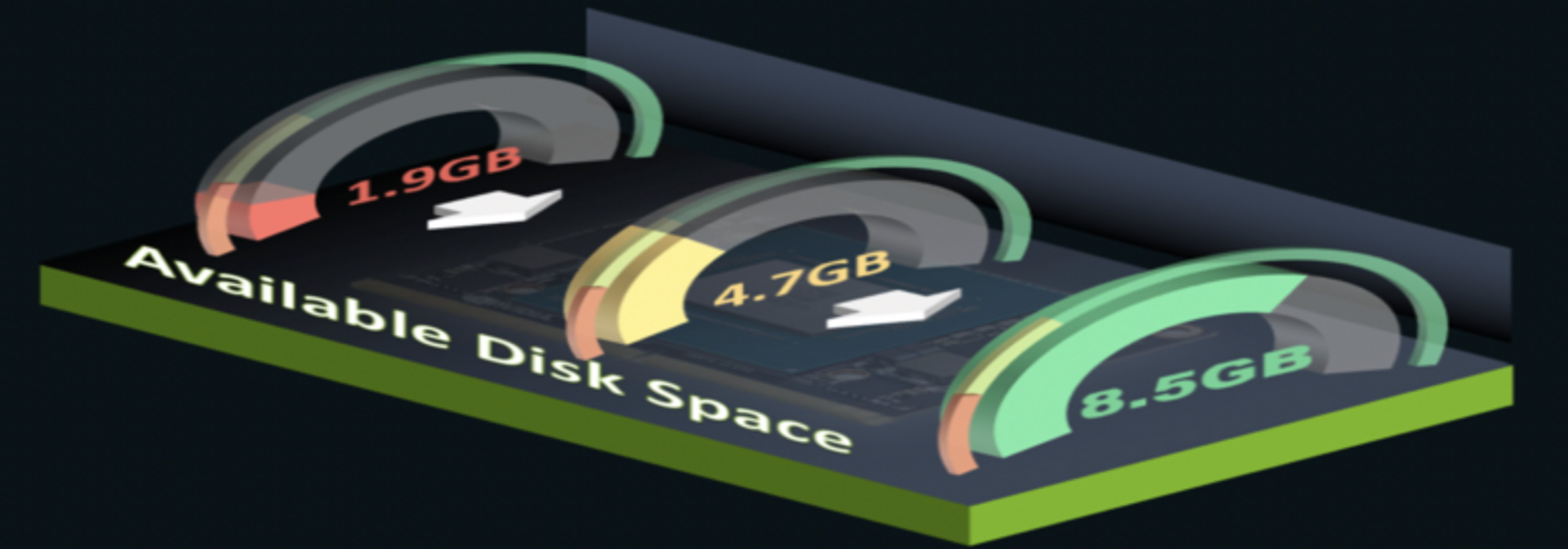

Monitoring tool host retains individual job’s build logs. We can’t leave a build log for a longer period which will consume the disk space. Leaving build logs for longer time will keep consuming the disk space at some point, we would expect “out of disk space” issue which internally affects the monitoring jobs by not allowing them to run.

How would we prevent high disk space usage? What is build retention?

All these executed build logs which are way older need not be stored until there is a high importance of that activity. One simple & effective way of reducing the disk space usage is configuring the build retention on each build. In a simple word, build retention is how long, we can keep a particular job’s builds in system’s storage (basically number of days we are interested to keep).

How to reduce bottleneck space consequences

The build log retention strategy can be configured at each job level in monitoring tools.

As part of optimization, we reconfigured the build log retention strategy at each job level (to a maximum period of 1 day and a total of 10 builds).

Monitoring agents’ unavailability notification by configuring New Relic/monitoring agents on VMs

Connection breaks of agents from monitoring is dynamic & at any moment we can expect that, but important section comes how? How we are making sure that these issues are getting notified to end user so that they can take immediate required action. What would happen if we failed to notify users about agent’s status? We would again fail & end up low service frequency run by not knowing the agent’s status (unavailable / storage issues / network issues / maintenance activities)

How to notify about these agent’s & make reachable till end user

There should be some medium to communicate these issues from monitoring tools to end users. We can make use of any available 3rd party tool (New Relic) infra-agents on the VMs so that their availability can be monitored, and actions can be taken as soon as they become unavailable.

Converting portal-based subsystem access to API based.

In the era of agile growth of software industry there are many factors which are reasons for agile growth but how these software systems are built & tested.? Development & testing are two main activities for functional & efficient software delivery. Testing & monitoring first preference was UI based testing back then but now situation is has changed. API based testing is being preferred for monitoring in most cases. There are many factors that would affect to UI testing / monitoring as mentioned below which may lead to in-effective way of monitoring.

Page loading (Timeouts) and rendering issues, longer execution time, static or dynamic wait timings & UI elements identification issues (Stale elements issues).

Browsers inter-dependency.

The advantage of API based sub service monitoring.

Api testing over UI always rocks & with minimal hazards. It always uses less time in comparison with UI for execution. Frequency of execution is more compared to UI. API execution will fetch you faster results.

Subscribe to my newsletter

Read articles from MohanRaj directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by